https://blog.csdn.net/qq_35591253/article/details/140580666

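Environment

Raspberry Pi 5B

Ubuntu 20.04 LTS (long-term support release)

Miniconda, default (base) environment

Python 3.11 (Python 3.7 does not work)

ncnn, the library used to convert and accelerate the model, is installed automatically later on.

(A system flashed on the 5B can be used directly on a 4B, but conda (Anaconda/Miniconda) must be reinstalled there, otherwise programs fail with a core error.)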
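The snippet below is a small sketch for confirming the interpreter and the CPU architecture before installing anything.

- The 3.11 target is an assumption, based only on the version that worked here (3.7 did not).
- It assumes 64-bit ARM Linux reports `aarch64` from `platform.machine()`.
- `describe_environment` is a hypothetical helper name.

```python
import platform
import sys


def describe_environment(target=(3, 11)):
    """Report the Python version and CPU architecture of this interpreter.

    `target` is an assumed minimum: 3.11 is simply the version that worked
    in this setup, while 3.7 did not.
    """
    version = sys.version_info[:3]
    machine = platform.machine()
    return {
        "python": ".".join(str(part) for part in version),
        "python_ok": version >= target,
        "machine": machine,
        # 64-bit ARM Linux normally reports "aarch64"
        "is_arm64": machine in ("aarch64", "arm64"),
    }


if __name__ == "__main__":
    for key, value in describe_environment().items():
        print(f"{key}: {value}")
```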

1 Download

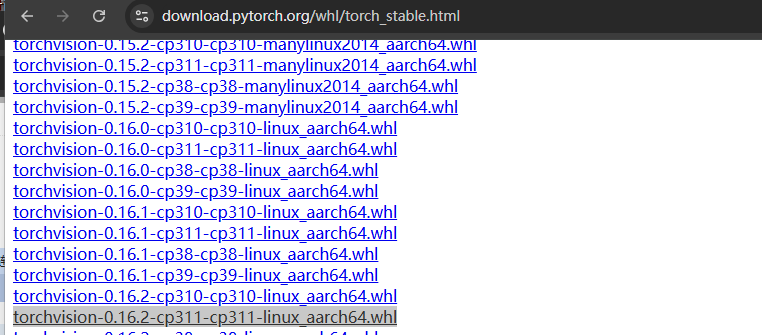

https://download.pytorch.org/whl/torch_stable.html

[TMVA] torch<2.5 is required, otherwise an error is reported on ARM: root-project/root#16718

2 Downgrade numpy to a 1.x release (do not go to 2.x)

    pip uninstall numpy
    pip install -U numpy==1.26.4
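This sketch confirms the two version limits mentioned so far (numpy below 2, torch below 2.5). It reads the installed versions from package metadata, so it never imports either package.

- It compares only the major and minor numbers. That is an assumption, but it is enough for these two bounds.
- The helper names are hypothetical.

```python
import re
from importlib.metadata import PackageNotFoundError, version

# Exclusive upper bounds taken from this guide: numpy < 2.0 and torch < 2.5
UPPER_BOUNDS = {"numpy": (2, 0), "torch": (2, 5)}


def major_minor(ver):
    """Extract (major, minor) from strings such as '1.26.4' or '2.4.1+cpu'."""
    match = re.match(r"(\d+)\.(\d+)", ver)
    if match is None:
        raise ValueError(f"unrecognised version string: {ver!r}")
    return int(match.group(1)), int(match.group(2))


def below_bound(ver, bound):
    """True if `ver` is strictly below the (major, minor) `bound`."""
    return major_minor(ver) < bound


def check_pins(bounds=UPPER_BOUNDS):
    """Map each package to (installed version or None, within bound or None)."""
    report = {}
    for name, bound in bounds.items():
        try:
            installed = version(name)
        except PackageNotFoundError:
            report[name] = (None, None)  # not installed (yet)
            continue
        report[name] = (installed, below_bound(installed, bound))
    return report


if __name__ == "__main__":
    for name, (installed, ok) in check_pins().items():
        if installed is None:
            status = "not installed"
        else:
            status = "OK" if ok else "too new"
        print(f"{name}: {installed} ({status})")
```

torch will show as "not installed" until step 3 is done, so it is worth running the script once more after that step.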

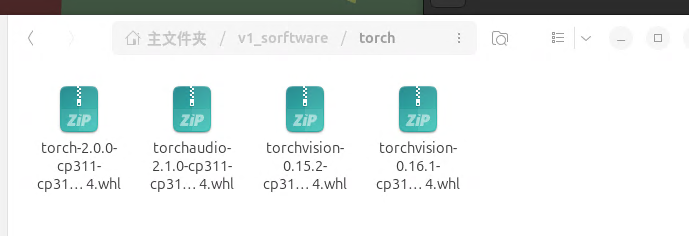

3 Install the torch libraries

Install the downloaded wheel files with pip:

    pip install <downloaded .whl file>

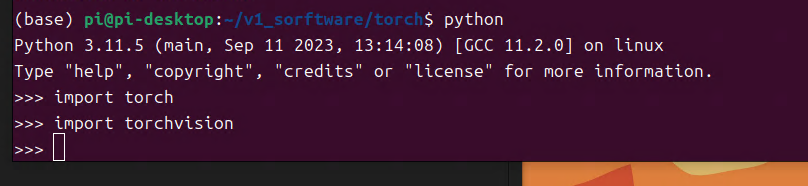

Check that the installation succeeded:

    python
    >>> import torch
    >>> import torchvision
    >>> exit()
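The same import check can be scripted. The script below reports each module's version, or the exception it raised, instead of stopping at the first failure.

- `try_imports` is a hypothetical helper name.
- A hard crash such as a core dump terminates the interpreter and cannot be caught this way.

```python
import importlib


def try_imports(names):
    """Import each module by name; map it to its __version__, or None if the import fails."""
    status = {}
    for name in names:
        try:
            module = importlib.import_module(name)
        except Exception as exc:  # ImportError, or an error raised during the library's own init
            print(f"{name}: import failed ({exc!r})")
            status[name] = None
            continue
        status[name] = getattr(module, "__version__", "unknown")
    return status


if __name__ == "__main__":
    print(try_imports(["numpy", "torch", "torchvision"]))
```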

4 Deploy YOLO

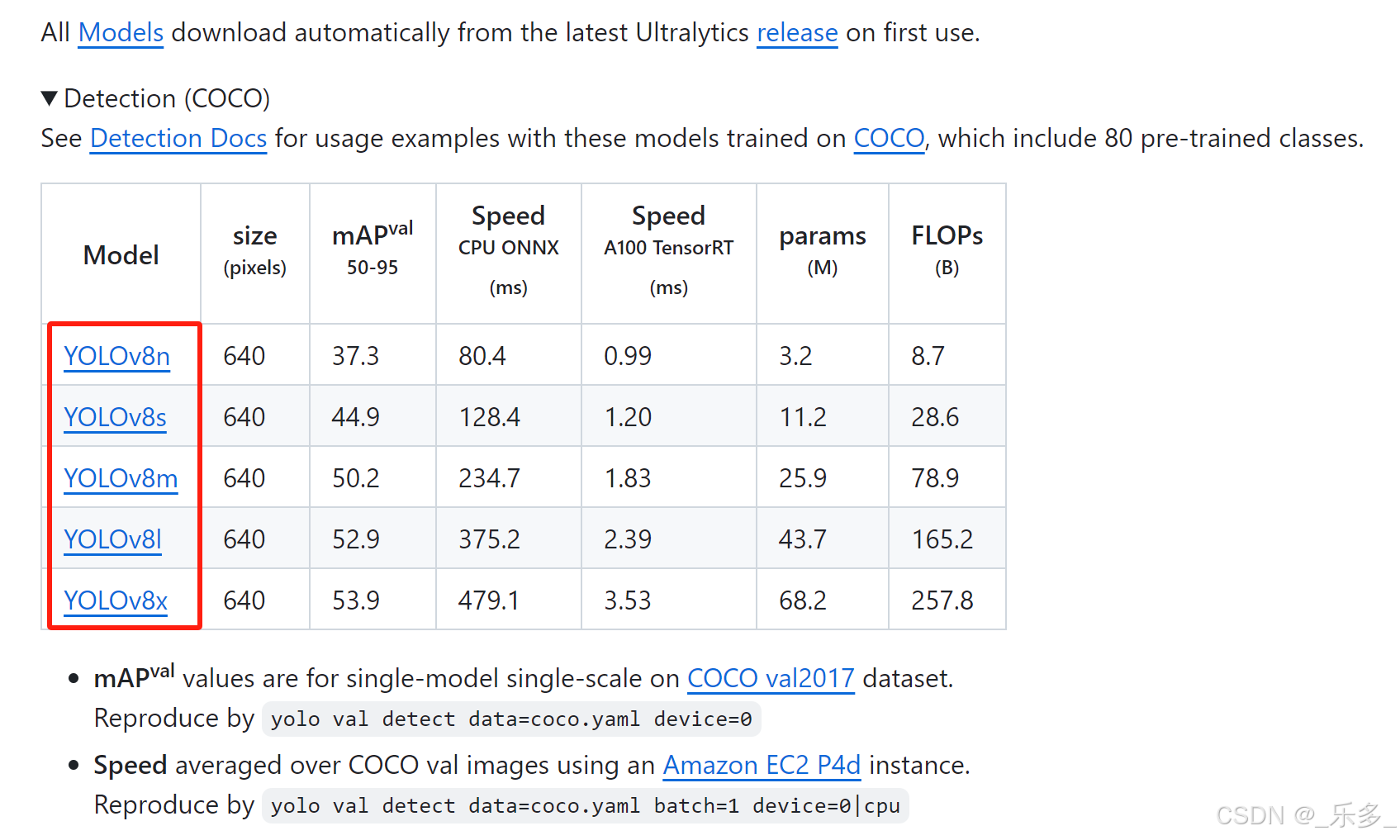

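I. Download the YOLO weight files

https://github.com/ultralytics/ultralytics?tab=readme-ov-file

Scroll to the bottom of the page, pick a suitable model, and download it locally.

II. Environment setup

Install the following two libraries:

    pip install ultralytics
    pip install opencv-python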

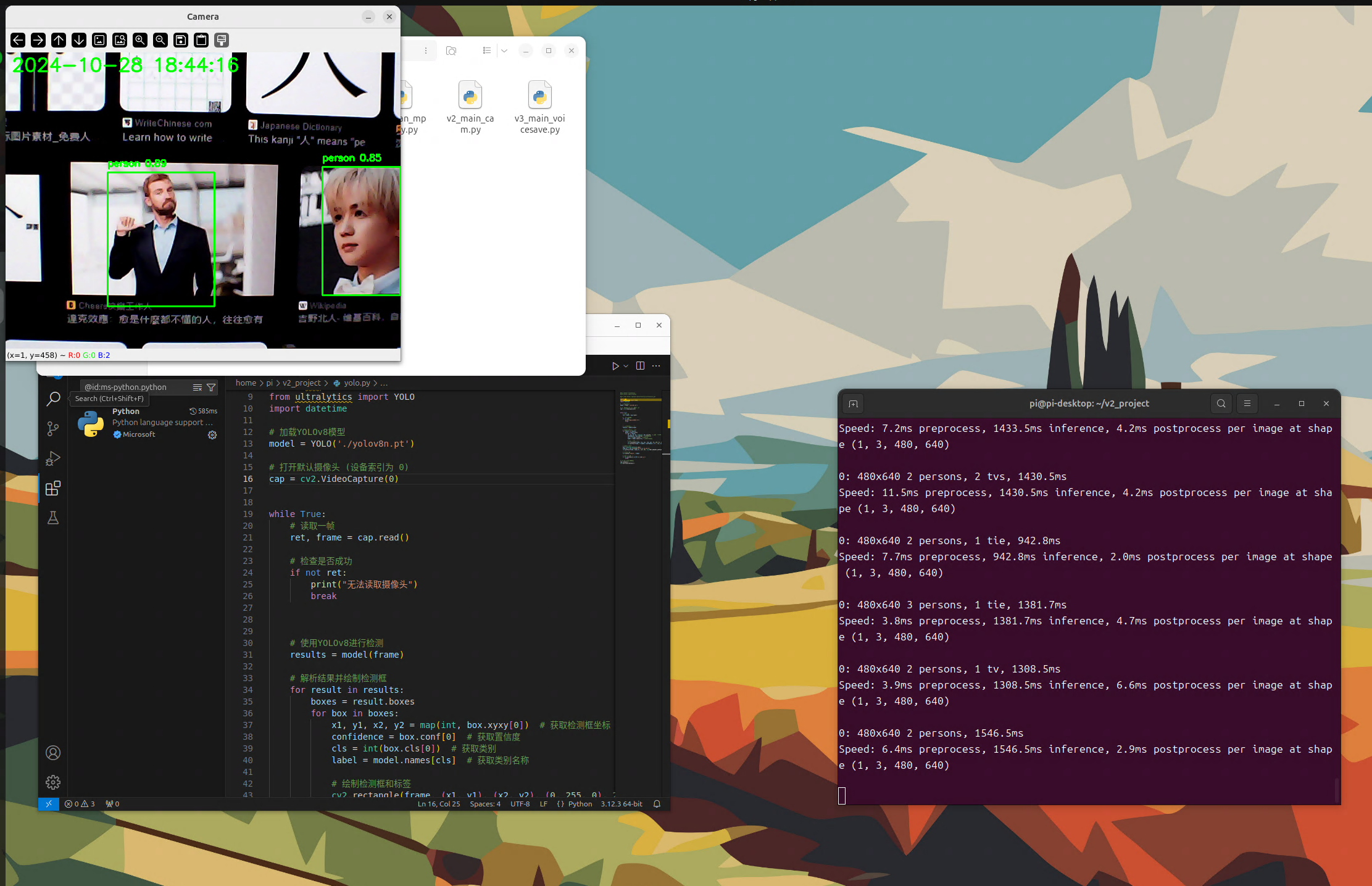

Code 1: pure PyTorch inference

    # conda activate base   # Python 3.11
    # pip install ultralytics
    # pip install opencv-python
    # git clone https://github.com/ultralytics/ultralytics.git
    # If the camera cannot be opened: sudo chmod 777 /dev/video0

    import datetime

    import cv2
    from ultralytics import YOLO

    # Load the YOLOv8 model
    model = YOLO('./yolov8n.pt')

    # Open the default camera (device index 0)
    cap = cv2.VideoCapture(0)
    if not cap.isOpened():
        raise SystemExit("Unable to open the camera")

    while True:
        # Read one frame
        ret, frame = cap.read()

        # Check whether the read succeeded
        if not ret:
            print("Unable to read from the camera")
            break

        # Run YOLOv8 detection
        results = model(frame)

        # Parse the results and draw the bounding boxes
        for result in results:
            for box in result.boxes:
                x1, y1, x2, y2 = map(int, box.xyxy[0])  # box corner coordinates
                confidence = float(box.conf[0])          # confidence score
                cls = int(box.cls[0])                    # class index
                label = model.names[cls]                 # class name

                # Draw the box and its label
                cv2.rectangle(frame, (x1, y1), (x2, y2), (0, 255, 0), 2)
                cv2.putText(frame, f"{label} {confidence:.2f}", (x1, y1 - 10),
                            cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 0), 2)

        # Overlay the current time
        time_str = datetime.datetime.now().strftime("%Y-%m-%d %H:%M:%S")
        cv2.putText(frame, time_str, (10, 30), cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 255, 0), 2, cv2.LINE_AA)

        # Show the current frame
        cv2.imshow('Camera', frame)

        # Press 'q' to quit
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    # Release the camera and close the window
    cap.release()
    cv2.destroyAllWindows()
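Code 1 draws each label at `(x1, y1 - 10)`. For an object touching the top of the frame, the text lands above the image and is cut off. Below is a minimal sketch of a clamp.

- `label_origin` is a hypothetical helper.
- `min_y=12` is an assumed approximate text height for `FONT_HERSHEY_SIMPLEX` at scale 0.5. `cv2.getTextSize` returns the exact size.
- Alternatively, Ultralytics' `Results.plot()` returns an already annotated copy of the frame.

```python
def label_origin(x1, y1, offset=10, min_y=12):
    """Return the (x, y) origin for cv2.putText so a label drawn above a box stays on screen.

    Normally the label sits `offset` pixels above the box; for boxes near the
    top edge, y is clamped to `min_y` (an assumed rough text height) and x to 0.
    """
    return max(x1, 0), max(y1 - offset, min_y)
```

To use it, replace `(x1, y1 - 10)` in the `cv2.putText` call with `label_origin(x1, y1)`.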

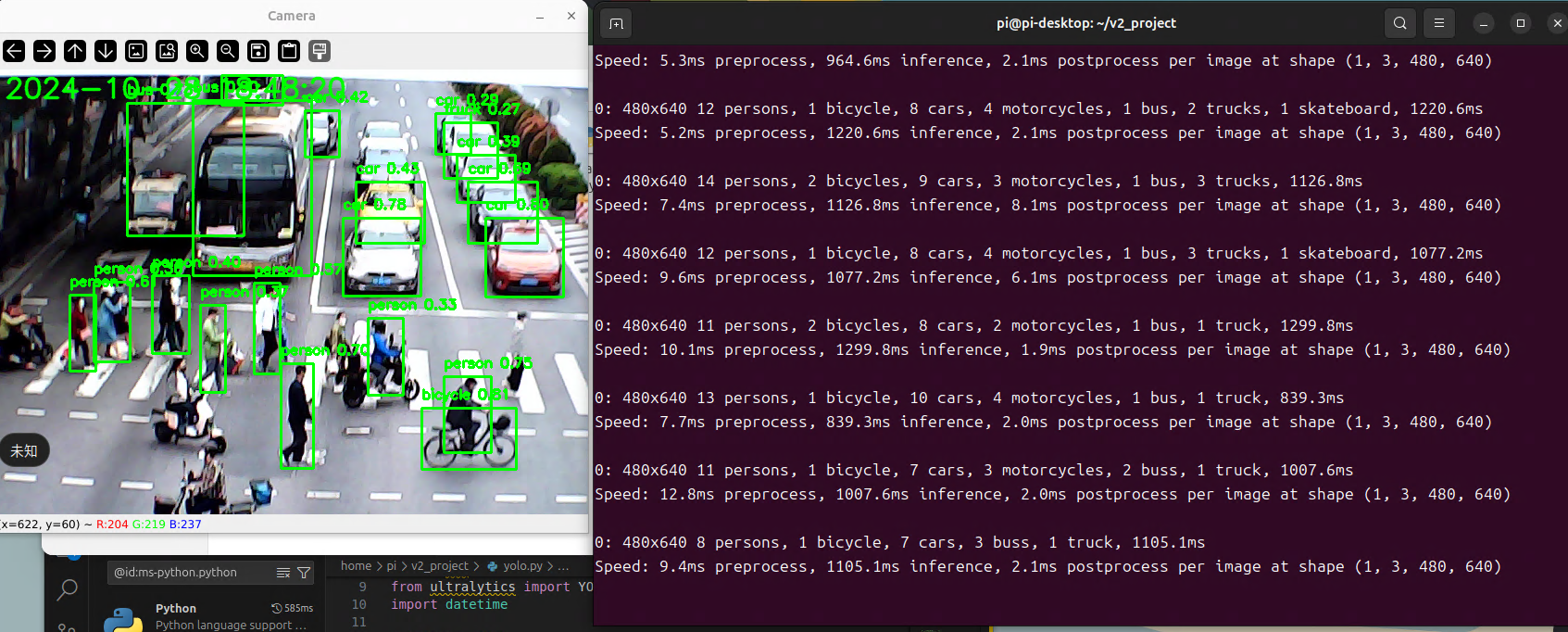

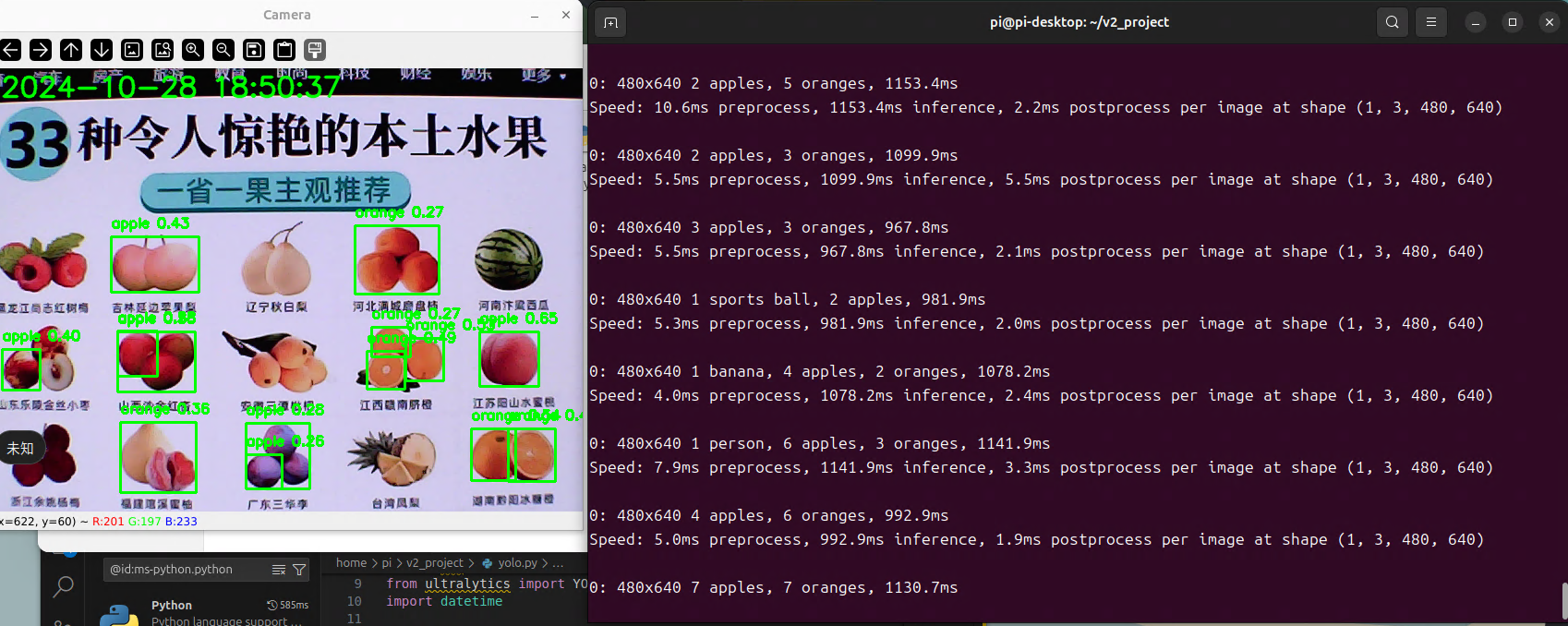

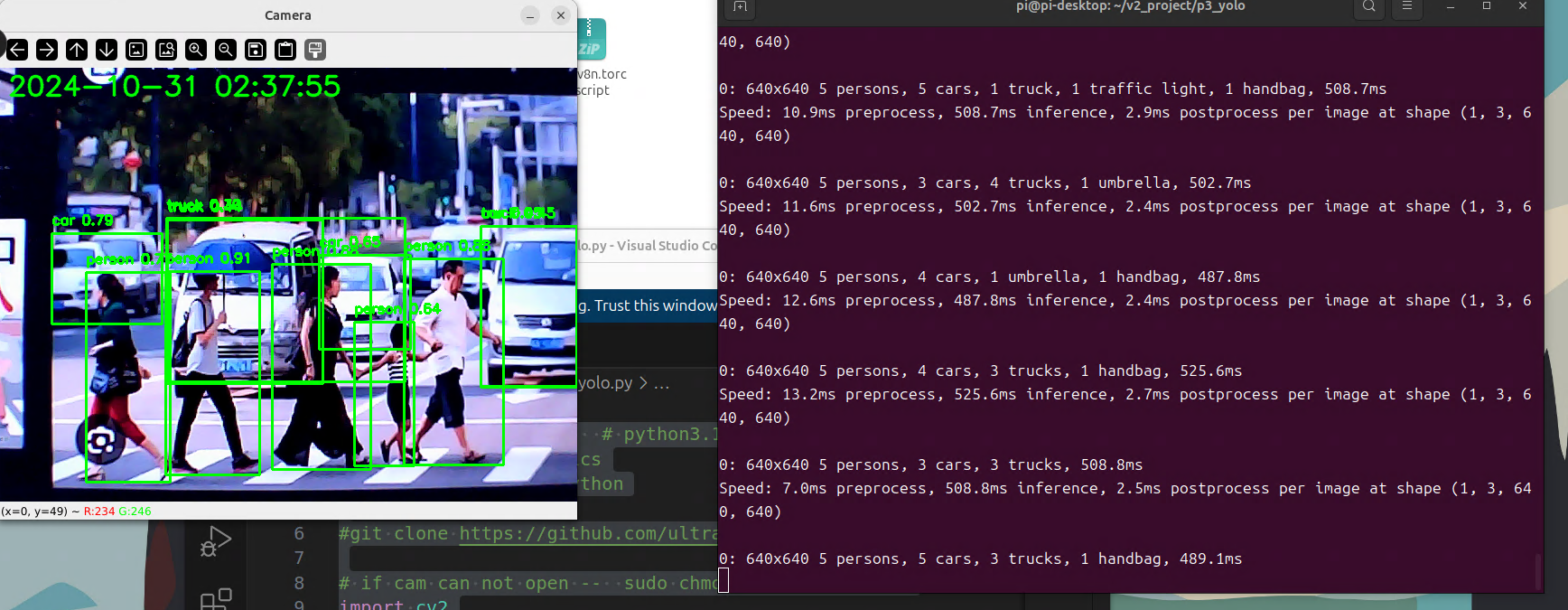

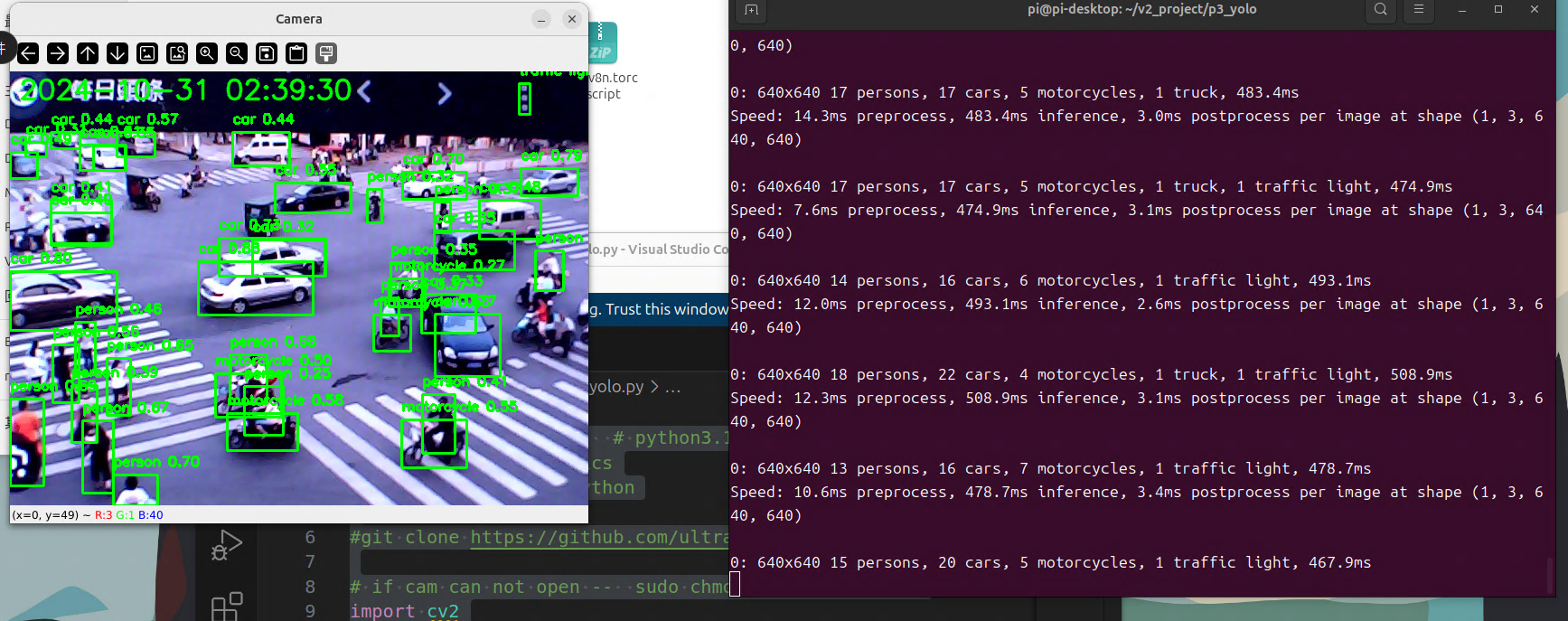

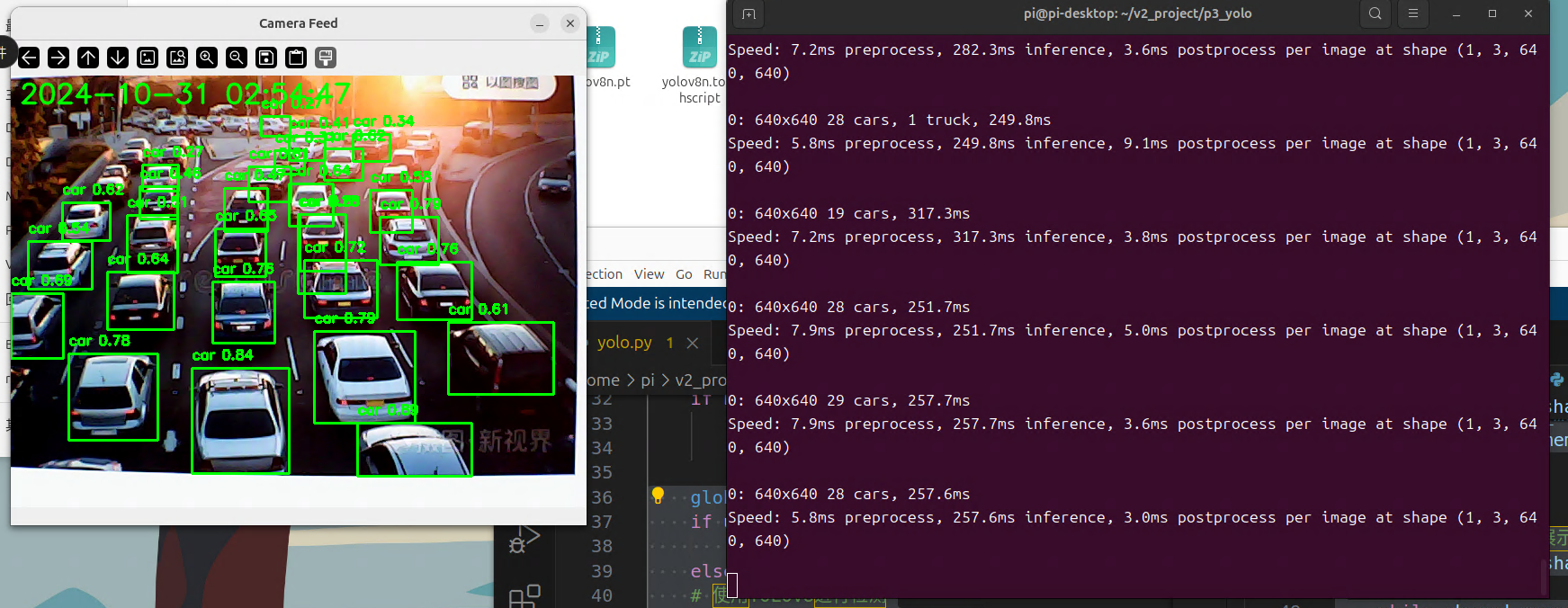

Raspberry Pi 5B speed
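A rolling frame-rate counter, drawn next to the timestamp, puts a number on the speed instead of reading it off screenshots. This is a minimal sketch.

- `FpsMeter` and its 30-frame window are hypothetical.
- The clock is injectable only so the class can be tested.

```python
import time
from collections import deque


class FpsMeter:
    """Rolling-average frame rate over the last `window` frame timestamps."""

    def __init__(self, window=30, clock=time.perf_counter):
        self.clock = clock
        self.stamps = deque(maxlen=window)

    def tick(self):
        """Record one processed frame; return the FPS estimate (0.0 until two frames are seen)."""
        self.stamps.append(self.clock())
        if len(self.stamps) < 2:
            return 0.0
        elapsed = self.stamps[-1] - self.stamps[0]
        return (len(self.stamps) - 1) / elapsed if elapsed > 0 else 0.0
```

To use it:

1. Create `meter = FpsMeter()` before the `while` loop.
2. Call `fps = meter.tick()` once per frame.
3. Draw the value with `cv2.putText(frame, f"{fps:.1f} FPS", (10, 60), cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 255, 0), 2)`.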

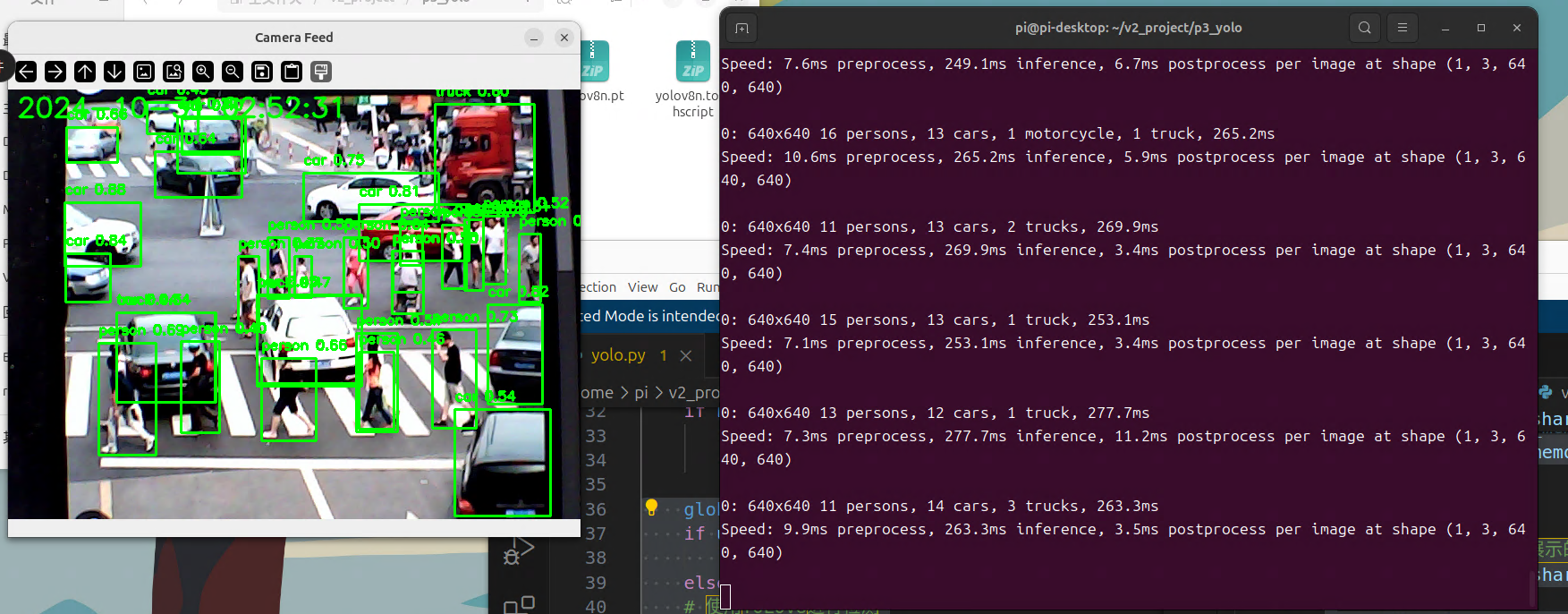

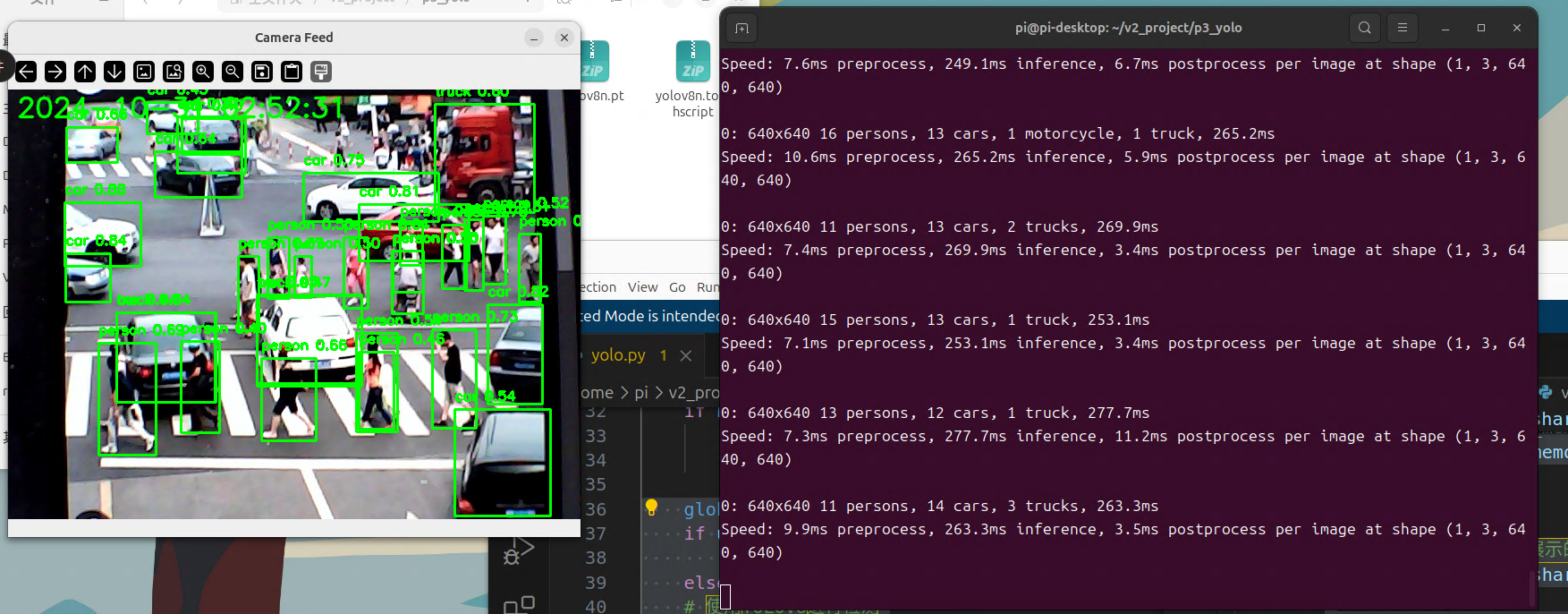

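Code 2: convert to an NCNN model for faster inference

This appears to roughly double the speed; later it is combined with multithreading.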
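The impression that NCNN roughly doubles the speed can be checked with a simple A/B timing. This is a minimal sketch.

- It assumes both models are callable on a single captured frame, the way `model(frame)` and `ncnn_model(frame)` are used in this post.
- The helper names and the warm-up count are hypothetical choices.
- Warm-up calls are discarded because the first calls of a freshly loaded model tend to be slower.

```python
import statistics
import time


def median_latency_ms(infer, frame, runs=20, warmup=3):
    """Median wall-clock latency of infer(frame) in milliseconds, after `warmup` discarded calls."""
    for _ in range(warmup):
        infer(frame)
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(frame)
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)


def speedup(baseline_ms, candidate_ms):
    """How many times faster the candidate is than the baseline."""
    return baseline_ms / candidate_ms
```

For example, `speedup(median_latency_ms(model, frame), median_latency_ms(ncnn_model, frame))` gives about 2.0 if the NCNN model really halves the latency. Using the median rather than the mean keeps one slow frame from skewing the comparison.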

    # conda activate base   # Python 3.11
    # pip install ultralytics
    # pip install opencv-python
    # git clone https://github.com/ultralytics/ultralytics.git
    # If the camera cannot be opened: sudo chmod 777 /dev/video0

    import datetime
    import os

    import cv2
    from ultralytics import YOLO

    use_ncnn = True

    # Load the YOLOv8 model
    model = YOLO('./yolov8n.pt')

    # Export the model to NCNN format; this creates the 'yolov8n_ncnn_model' directory.
    # The export only needs to run once, so skip it if that directory already exists.
    if not os.path.isdir("yolov8n_ncnn_model"):
        model.export(format="ncnn")

    # Load the exported NCNN model and pick the model used for detection
    ncnn_model = YOLO("yolov8n_ncnn_model")
    detector = ncnn_model if use_ncnn else model

    # Open the default camera (device index 0)
    cap = cv2.VideoCapture(0)
    if not cap.isOpened():
        raise SystemExit("Unable to open the camera")

    while True:
        # Read one frame
        ret, frame = cap.read()

        # Check whether the read succeeded
        if not ret:
            print("Unable to read from the camera")
            break

        # Run detection (NCNN or plain PyTorch YOLOv8)
        results = detector(frame)

        # Parse the results and draw the bounding boxes
        for result in results:
            for box in result.boxes:
                x1, y1, x2, y2 = map(int, box.xyxy[0])  # box corner coordinates
                confidence = float(box.conf[0])          # confidence score
                cls = int(box.cls[0])                    # class index
                label = model.names[cls]                 # class name

                # Draw the box and its label
                cv2.rectangle(frame, (x1, y1), (x2, y2), (0, 255, 0), 2)
                cv2.putText(frame, f"{label} {confidence:.2f}", (x1, y1 - 10),
                            cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 0), 2)

        # Overlay the current time
        time_str = datetime.datetime.now().strftime("%Y-%m-%d %H:%M:%S")
        cv2.putText(frame, time_str, (10, 30), cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 255, 0), 2, cv2.LINE_AA)

        # Show the current frame
        cv2.imshow('Camera', frame)

        # Press 'q' to quit
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    # Release the camera and close the window
    cap.release()
    cv2.destroyAllWindows()

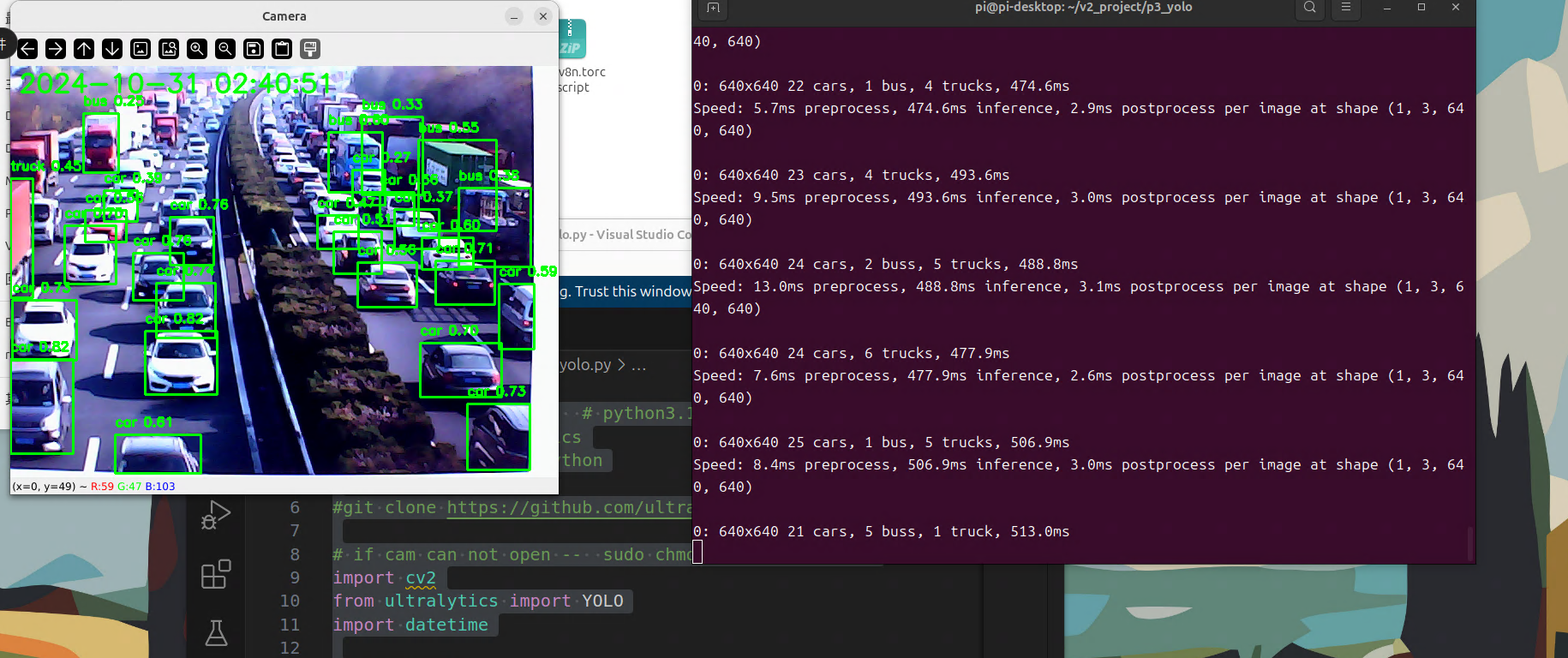

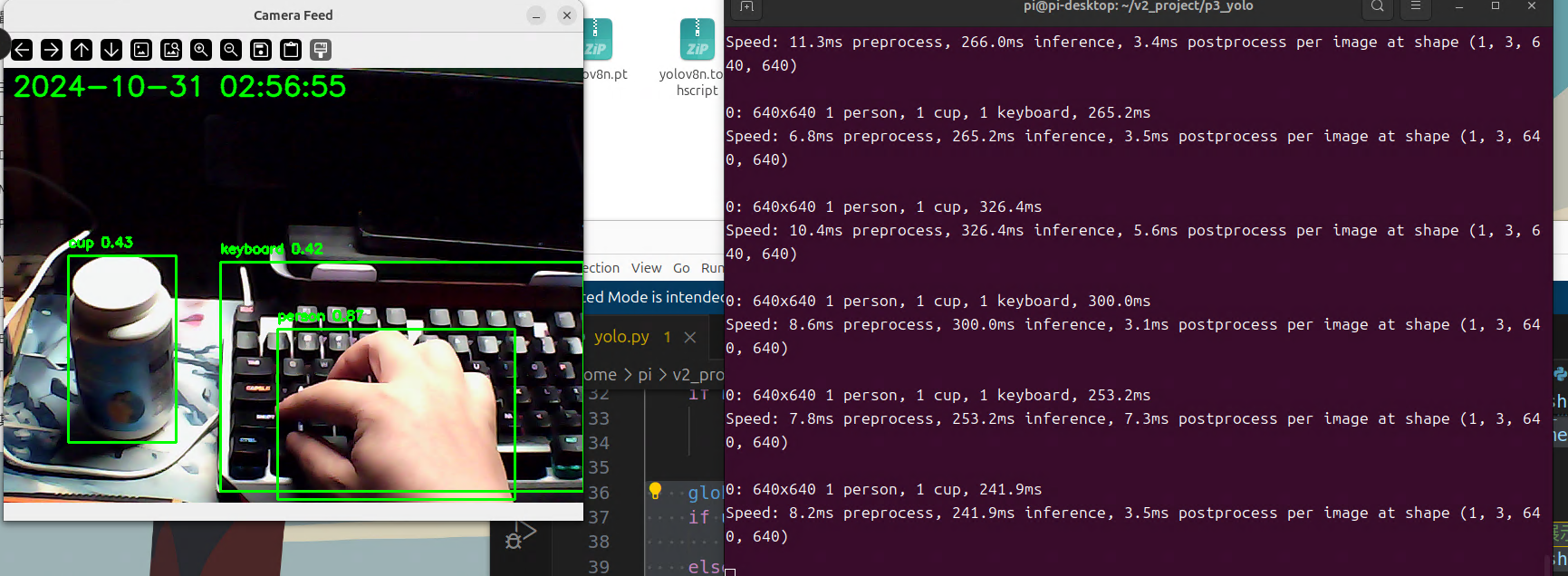

Code 3: multithreading

This appears to reach about 200 ms per frame.

    import datetime
    import os
    import threading
    import time

    import cv2
    from ultralytics import YOLO

    use_ncnn = True

    # Load the YOLOv8 model
    model = YOLO('./yolov8n.pt')

    # Export to NCNN once; this creates the 'yolov8n_ncnn_model' directory
    if not os.path.isdir("yolov8n_ncnn_model"):
        model.export(format="ncnn")

    # Load the exported NCNN model and pick the model used for detection
    ncnn_model = YOLO("yolov8n_ncnn_model")
    detector = ncnn_model if use_ncnn else model


    # Shared memory: the latest frame, a lock protecting it, and a run flag
    class SharedMemory:
        def __init__(self):
            self.frame = None
            self.lock = threading.Lock()
            self.running = True  # run-state flag


    # Thread that reads images from the USB camera
    def capture_thread(shared_memory):
        cap = cv2.VideoCapture(0)
        if not cap.isOpened():
            print("Unable to open the camera")
            shared_memory.running = False
            return

        while shared_memory.running:
            ret, frame = cap.read()
            if not ret:
                print("Unable to read an image")
                break
            # Store the latest frame in shared memory
            with shared_memory.lock:
                shared_memory.frame = frame.copy()

        shared_memory.running = False  # also stop the display thread
        cap.release()


    # Thread that takes frames from shared memory, runs detection and displays them
    def display_thread(shared_memory):
        while shared_memory.running:
            # Hold the lock only while copying, so capture is not blocked during inference
            with shared_memory.lock:
                frame = None if shared_memory.frame is None else shared_memory.frame.copy()
            if frame is None:
                time.sleep(0.01)  # no frame captured yet
                continue

            # Run detection (NCNN or plain PyTorch YOLOv8)
            results = detector(frame)

            # Parse the results and draw the bounding boxes
            for result in results:
                for box in result.boxes:
                    x1, y1, x2, y2 = map(int, box.xyxy[0])  # box corner coordinates
                    confidence = float(box.conf[0])          # confidence score
                    cls = int(box.cls[0])                    # class index
                    label = model.names[cls]                 # class name
                    cv2.rectangle(frame, (x1, y1), (x2, y2), (0, 255, 0), 2)
                    cv2.putText(frame, f"{label} {confidence:.2f}", (x1, y1 - 10),
                                cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 0), 2)

            # Overlay the current time
            time_str = datetime.datetime.now().strftime("%Y-%m-%d %H:%M:%S")
            cv2.putText(frame, time_str, (10, 30), cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 255, 0), 2, cv2.LINE_AA)

            cv2.imshow('Camera Feed', frame)

            # Quit when 'q' is pressed
            if cv2.waitKey(1) & 0xFF == ord('q'):
                shared_memory.running = False  # tell the capture thread to stop
                break

        cv2.destroyAllWindows()


    # Main entry point
    if __name__ == "__main__":
        shared_memory = SharedMemory()

        # Start the capture thread
        capture_worker = threading.Thread(target=capture_thread, args=(shared_memory,))
        capture_worker.start()

        # Start the display thread
        display_worker = threading.Thread(target=display_thread, args=(shared_memory,))
        display_worker.start()

        capture_worker.join()
        display_worker.join()
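In Code 3 the detection thread keeps checking `shared_memory.frame` in a loop, so it can run inference on the same frame again if the camera has not delivered a new one yet. A `threading.Condition` with a sequence counter avoids both problems: the consumer sleeps until a frame it has not seen arrives. This is a sketch of that swapped-in technique, not the method used above.

- `LatestFrameBuffer` and its methods are hypothetical names.

```python
import threading


class LatestFrameBuffer:
    """Single-slot buffer: the producer overwrites, the consumer waits for a frame it has not seen."""

    def __init__(self):
        self._cond = threading.Condition()
        self._frame = None
        self._seq = 0          # incremented on every put()
        self.closed = False

    def put(self, frame):
        """Replace the stored frame and wake any waiting consumer."""
        with self._cond:
            self._frame = frame
            self._seq += 1
            self._cond.notify_all()

    def close(self):
        """Wake waiting consumers so they can shut down."""
        with self._cond:
            self.closed = True
            self._cond.notify_all()

    def get(self, last_seq, timeout=1.0):
        """Wait for a frame newer than `last_seq`.

        Returns (frame, seq); frame is None on timeout, or after close() with nothing newer.
        """
        with self._cond:
            self._cond.wait_for(lambda: self._seq > last_seq or self.closed, timeout)
            if self._seq > last_seq:
                return self._frame, self._seq
            return None, last_seq
```

To use it:

- **Capture thread:** call `buf.put(frame.copy())` for each frame.
- **Detection thread:** keep `seq = 0`, call `frame, seq = buf.get(seq)` on each pass, and `continue` whenever `frame is None`.
- **Shutdown:** call `buf.close()` when quitting.

Because inference dominates each frame, this mainly avoids wasted work and busy-waiting. It should not be expected to lower the per-frame latency much by itself.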

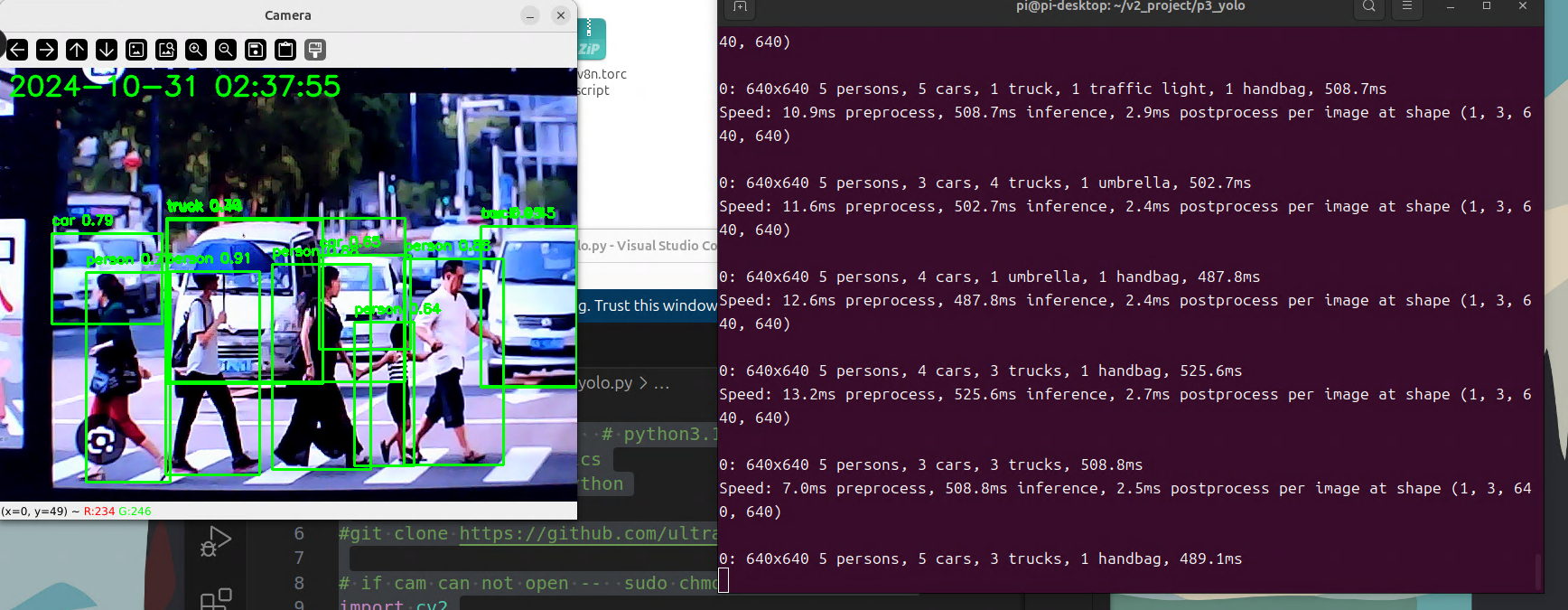

Raspberry Pi 4B speed

500-780 ms per 640x480 frame
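Per-frame latency converts to frames per second as follows, assuming one frame is processed at a time:

- The roughly 200 ms per frame reported for Code 3 is about 5 FPS.
- The 500-780 ms on the 4B is roughly 1.3 to 2 FPS.

$$
\text{FPS} = \frac{1000}{t_{\text{frame}}\ (\text{ms})},\qquad
\frac{1000}{200} = 5,\qquad
\frac{1000}{500} = 2,\qquad
\frac{1000}{780} \approx 1.28
$$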

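Future work

1 Convert the YOLO model to ONNX for faster inference

https://docs.ultralytics.com/guides/raspberry-pi/#raspberry-pi-5-yolo11-benchmarks

Download

https://github.com/pnnx/pnnx/releases/download/20240819/pnnx-20240819-linux-aarch64.zip

Install

Comparison chart

The Ultralytics guide only includes benchmarks for the YOLO11n and YOLO11s models, because the other models are too large to run on a Raspberry Pi and do not deliver good performance.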

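Troubleshooting

Problem 1: the USB camera cannot be opened (permission issue)

First check that all dependencies are installed; try installing:

    sudo apt-get install v4l-utils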
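`v4l2-ctl --list-devices` (from v4l-utils) lists the cameras. To see which device nodes the current user can actually open, a short Python sketch is enough.

- The helper name is hypothetical.
- It only checks file permissions, not whether the node is a working camera.

```python
import glob
import os


def video_devices(pattern="/dev/video*"):
    """List V4L2 device nodes with whether the current user may read and write them."""
    devices = []
    for path in sorted(glob.glob(pattern)):
        devices.append((path, os.access(path, os.R_OK | os.W_OK)))
    return devices


if __name__ == "__main__":
    found = video_devices()
    if not found:
        print("No /dev/video* nodes found; is the camera plugged in?")
    for path, ok in found:
        print(f"{path}: {'accessible' if ok else 'permission denied'}")
```

A node reported as "permission denied" points to the group fix below rather than a driver problem.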

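Quick (temporary) fix, granting access to the device:

    sudo chmod 777 /dev/video0

Permanent fix:

The most likely cause is a permission problem on /dev/video0.

Check whether you belong to the video group:

    id -a

If video does not appear in the group list, add your user to it:

    sudo usermod -a -G video <username>

For Ubuntu (20.04) users:

    sudo usermod -a -G video $LOGNAME

Log out, log back in, and try again.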
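`id -a` shows the groups of the login session. The same check can run inside the detection script itself, which helps when the script is started from a different session. This is a sketch with a hypothetical helper.

- `grp` is Unix-only.
- A group added with usermod only reaches processes started after a new login, which is why the log-out step matters.

```python
import grp
import os


def in_group(group_name="video"):
    """True if the current process already holds `group_name` (supplementary or primary group)."""
    try:
        gid = grp.getgrnam(group_name).gr_gid
    except KeyError:  # the group does not exist on this system
        return False
    return gid in os.getgroups() or gid == os.getgid()


if __name__ == "__main__":
    print("video group active:", in_group("video"))
```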

Problem 2: numpy must be a 1.x release, not 2.x, to work with torch and torchvision

Problem 3