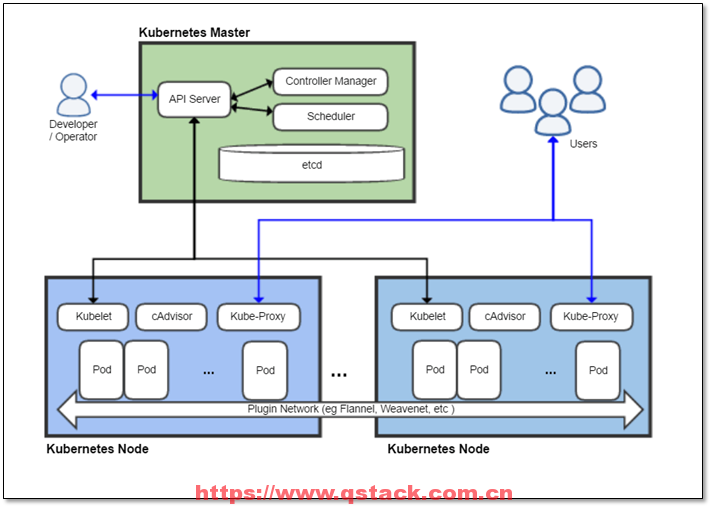

k8s architecture

Architecture used in this course

2. Installing k8s

Environment preparation (set the IP address, hostname, and hosts resolution)

| Host | IP | Memory | Software |
|---|---|---|---|
| k8s-master | 10.0.0.11 | 1 GB | etcd,api-server,controller-manager,scheduler |
| k8s-node1 | 10.0.0.12 | 2 GB | etcd,kubelet,kube-proxy,docker,flannel |
| k8s-node2 | 10.0.0.13 | 2 GB | etcd,kubelet,kube-proxy,docker,flannel |
| k8s-node3 | 10.0.0.14 | 1 GB | kubelet,kube-proxy,docker,flannel |
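
The hosts-resolution part of the preparation can be sketched as follows. This is a minimal sketch, assuming the hostnames and addresses from the table above; the function name `print_hosts_entries` is hypothetical, and the IP address and hostname still have to be set on each machine separately.

```shell
#!/usr/bin/env bash
# Print /etc/hosts entries for the four machines in the table above.
# print_hosts_entries is a hypothetical helper name, not part of any tool.
print_hosts_entries() {
  cat <<'EOF'
10.0.0.11 k8s-master
10.0.0.12 k8s-node1
10.0.0.13 k8s-node2
10.0.0.14 k8s-node3
EOF
}

# Preview the entries; nothing is written to disk here.
print_hosts_entries
```

To apply the entries, the output could be appended as root on every host, for example with `print_hosts_entries >> /etc/hosts`.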

2.1 Issuing certificates

Prepare the certificate issuing tools

On the node3 node

[root@k8s-node3 ~]# mkdir /opt/softs

[root@k8s-node3 ~]# cd /opt/softs

[root@k8s-node3 softs]# rz -E

rz waiting to receive.

[root@k8s-node3 softs]# ls

cfssl cfssl-certinfo cfssl-json

[root@k8s-node3 softs]# chmod +x /opt/softs/*

[root@k8s-node3 softs]# ln -s /opt/softs/* /usr/bin/

[root@k8s-node3 softs]# mkdir /opt/certs

[root@k8s-node3 softs]# cd /opt/certs

Edit the CA certificate configuration file

vi /opt/certs/ca-config.json

# press i to enter insert mode, then enter the following

{

"signing": {

"default": { "expiry": "175200h" },

"profiles": {

"server": { "expiry": "175200h", "usages": [ "signing", "key encipherment", "server auth" ] },

"client": { "expiry": "175200h", "usages": [ "signing", "key encipherment", "client auth" ] },

"peer": { "expiry": "175200h", "usages": [ "signing", "key encipherment", "server auth", "client auth" ] }

}

}

}

Edit the CA certificate signing request configuration file

vi /opt/certs/ca-csr.json

# press i to enter insert mode, then enter the following

{

"CN": "kubernetes-ca",

"hosts": [

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

],

"ca": {

"expiry": "175200h"

}

}
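
Both `ca-config.json` and `ca-csr.json` set `expiry` to `175200h`. The short sketch below converts such a value into years, assuming 365-day years; the helper name `expiry_hours_to_years` is hypothetical and is only used for this illustration.

```shell
#!/usr/bin/env bash
# Convert a cfssl-style expiry such as "175200h" into whole years,
# assuming 365-day years. expiry_hours_to_years is a hypothetical helper.
expiry_hours_to_years() {
  # ${1%h} strips the trailing "h" before the integer division.
  echo $(( ${1%h} / 24 / 365 ))
}

expiry_hours_to_years 175200h   # prints 20
```

So the CA and every certificate signed with these profiles are valid for about 20 years.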

Generate the CA certificate and private key

[root@k8s-node3 certs]# cfssl gencert -initca ca-csr.json | cfssl-json -bare ca -

2020/09/27 17:20:56 [INFO] generating a new CA key and certificate from CSR

2020/09/27 17:20:56 [INFO] generate received request

2020/09/27 17:20:56 [INFO] received CSR

2020/09/27 17:20:56 [INFO] generating key: rsa-2048

2020/09/27 17:20:56 [INFO] encoded CSR

2020/09/27 17:20:56 [INFO] signed certificate with serial number 409112456326145160001566370622647869686523100724

[root@k8s-node3 certs]# ls

ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem
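
Before signing further certificates it can help to look at what was just generated. The following is a minimal sketch, assuming `openssl` is installed on k8s-node3; `show_cert_summary` is a hypothetical helper that only wraps `openssl x509`.

```shell
#!/usr/bin/env bash
# Print subject, issuer and expiry date of a PEM certificate.
# show_cert_summary is a hypothetical helper; it only wraps openssl x509.
show_cert_summary() {
  cert="$1"
  if [ ! -f "$cert" ]; then
    echo "certificate not found: $cert" >&2
    return 1
  fi
  openssl x509 -in "$cert" -noout -subject -issuer -enddate
}

# Example (run on k8s-node3 after the CA has been generated):
#   show_cert_summary /opt/certs/ca.pem
```

For a self-signed CA, subject and issuer should be identical. The `cfssl-certinfo` binary installed earlier can print similar details with its `-cert` flag.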

2.2 Deploying the etcd cluster

| Hostname | IP | Role |
|---|---|---|
| k8s-master | 10.0.0.11 | etcd leader |
| k8s-node1 | 10.0.0.12 | etcd follower |
| k8s-node2 | 10.0.0.13 | etcd follower |

Issue the certificate used for communication between etcd nodes

vi /opt/certs/etcd-peer-csr.json

# press i to enter insert mode, then enter the following

{

"CN": "etcd-peer",

"hosts": [

"10.0.0.11",

"10.0.0.12",

"10.0.0.13"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}

[root@k8s-node3 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=peer etcd-peer-csr.json | cfssl-json -bare etcd-peer

2020/09/27 17:29:49 [INFO] generate received request

2020/09/27 17:29:49 [INFO] received CSR

2020/09/27 17:29:49 [INFO] generating key: rsa-2048

2020/09/27 17:29:49 [INFO] encoded CSR

2020/09/27 17:29:49 [INFO] signed certificate with serial number 15140302313813859454537131325115129339480067698

2020/09/27 17:29:49 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-node3 certs]# ls etcd-peer*

etcd-peer.csr etcd-peer-csr.json etcd-peer-key.pem etcd-peer.pem

Install the etcd service

On k8s-master, k8s-node1, and k8s-node2

yum install etcd -y

# On node3, send the certificates to the /etc/etcd directory

[root@k8s-node3 certs]# scp -rp *.pem root@10.0.0.11:/etc/etcd/

root@10.0.0.11's password:

ca-key.pem 100% 1675 1.1MB/s 00:00

ca.pem 100% 1354 1.0MB/s 00:00

etcd-peer-key.pem 100% 1679 961.2KB/s 00:00

etcd-peer.pem 100% 1428 203.5KB/s 00:00

[root@k8s-node3 certs]#

[root@k8s-node3 certs]# scp -rp *.pem root@10.0.0.12:/etc/etcd/

The authenticity of host '10.0.0.12 (10.0.0.12)' can't be established.

ECDSA key fingerprint is SHA256:cHKT5G6hYgv1k1zTfc36tZrLNQqJhc1JeBTeke545Fk.

ECDSA key fingerprint is MD5:24:4e:94:6d:46:82:0a:61:3a:1e:83:3f:75:82:e1:aa.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '10.0.0.12' (ECDSA) to the list of known hosts.

root@10.0.0.12's password:

ca-key.pem 100% 1675 1.4MB/s 00:00

ca.pem 100% 1354 1.1MB/s 00:00

etcd-peer-key.pem 100% 1679 833.9KB/s 00:00

etcd-peer.pem 100% 1428 708.5KB/s 00:00

[root@k8s-node3 certs]# scp -rp *.pem root@10.0.0.13:/etc/etcd/

The authenticity of host '10.0.0.13 (10.0.0.13)' can't be established.

ECDSA key fingerprint is SHA256:cHKT5G6hYgv1k1zTfc36tZrLNQqJhc1JeBTeke545Fk.

ECDSA key fingerprint is MD5:24:4e:94:6d:46:82:0a:61:3a:1e:83:3f:75:82:e1:aa.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '10.0.0.13' (ECDSA) to the list of known hosts.

root@10.0.0.13's password:

ca-key.pem 100% 1675 1.4MB/s 00:00

ca.pem 100% 1354 1.1MB/s 00:00

etcd-peer-key.pem 100% 1679 1.1MB/s 00:00

etcd-peer.pem 100% 1428 471.5KB/s 00:00

[root@k8s-node3 certs]#
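
The three `scp` commands above differ only in the target address, so they can be expressed as a loop. This is a sketch under stated assumptions: `push_certs`, `ETCD_HOSTS` and `DRY_RUN` are hypothetical names, and by default the function only prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Copy every .pem file in /opt/certs to /etc/etcd/ on each etcd host.
# push_certs, ETCD_HOSTS and DRY_RUN are hypothetical names for this sketch.
ETCD_HOSTS="10.0.0.11 10.0.0.12 10.0.0.13"

push_certs() {
  for ip in $ETCD_HOSTS; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      # Default: only print the command that would run.
      echo "scp -rp /opt/certs/*.pem root@${ip}:/etc/etcd/"
    else
      scp -rp /opt/certs/*.pem "root@${ip}:/etc/etcd/"
    fi
  done
}

push_certs   # dry run; use DRY_RUN=0 push_certs to actually copy
```

As in the transcript above, `*.pem` also matches `ca-key.pem`, so the CA private key is copied to every etcd host as well.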

# master node

[root@k8s-master ~]# chown -R etcd:etcd /etc/etcd/*.pem

[root@k8s-master ~]# grep -Ev '^$|#' /etc/etcd/etcd.conf

ETCD_DATA_DIR="/var/lib/etcd/"

ETCD_LISTEN_PEER_URLS="https://10.0.0.11:2380"

ETCD_LISTEN_CLIENT_URLS="https://10.0.0.11:2379,http://127.0.0.1:2379"

ETCD_NAME="node1"

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://10.0.0.11:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://10.0.0.11:2379,http://127.0.0.1:2379"

ETCD_INITIAL_CLUSTER="node1=https://10.0.0.11:2380,node2=https://10.0.0.12:2380,node3=https://10.0.0.13:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

ETCD_CERT_FILE="/etc/etcd/etcd-peer.pem"

ETCD_KEY_FILE="/etc/etcd/etcd-peer-key.pem"

ETCD_CLIENT_CERT_AUTH="true"

ETCD_TRUSTED_CA_FILE="/etc/etcd/ca.pem"

ETCD_AUTO_TLS="true"

ETCD_PEER_CERT_FILE="/etc/etcd/etcd-peer.pem"

ETCD_PEER_KEY_FILE="/etc/etcd/etcd-peer-key.pem"

ETCD_PEER_CLIENT_CERT_AUTH="true"

ETCD_PEER_TRUSTED_CA_FILE="/etc/etcd/ca.pem"

ETCD_PEER_AUTO_TLS="true"

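# On k8s-node1 and k8s-node2, change the following settings to the node's own IP address and etcd member name (per ETCD_INITIAL_CLUSTER: 10.0.0.12 is node2, 10.0.0.13 is node3)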

ETCD_LISTEN_PEER_URLS="https://10.0.0.11:2380"

ETCD_LISTEN_CLIENT_URLS="https://10.0.0.11:2379,http://127.0.0.1:2379"

ETCD_NAME="node1"

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://10.0.0.11:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://10.0.0.11:2379,http://127.0.0.1:2379"
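
The five per-node settings follow one pattern, so they can be generated from the member name and IP address. This is a minimal sketch; `etcd_node_settings` is a hypothetical helper, and the name-to-address mapping is taken from `ETCD_INITIAL_CLUSTER` above.

```shell
#!/usr/bin/env bash
# Print the etcd.conf lines that differ per node.
# etcd_node_settings is a hypothetical helper; its arguments are the etcd
# member name and the node's own IP address.
etcd_node_settings() {
  name="$1"
  ip="$2"
  cat <<EOF
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379,http://127.0.0.1:2379"
ETCD_NAME="${name}"
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379,http://127.0.0.1:2379"
EOF
}

etcd_node_settings node2 10.0.0.12   # values for k8s-node1
etcd_node_settings node3 10.0.0.13   # values for k8s-node2
```

The printed lines are meant to replace the corresponding entries in `/etc/etcd/etcd.conf` on each node; all other settings stay the same as on the master.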

# Start all 3 etcd nodes at the same time

systemctl start etcd

systemctl enable etcd

# Verify

[root@k8s-master ~]# etcdctl member list

55fcbe0adaa45350: name=node3 peerURLs=https://10.0.0.13:2380 clientURLs=http://127.0.0.1:2379,https://10.0.0.13:2379 isLeader=false

cebdf10928a06f3c: name=node1 peerURLs=https://10.0.0.11:2380 clientURLs=http://127.0.0.1:2379,https://10.0.0.11:2379 isLeader=false

f7a9c20602b8532e: name=node2