增量学习/训练

针对大型数据集,数据过大无法加载到内存,使用增量训练方式

sklearn

def generator(all_file_path):

for filename in all_file_path:

try:

bytedata = open(filename, "rb").read()

except:

bytedata = None

if bytedata is None:

continue

byte_ngram = byteNgram(bytedata)

label = label_to_index[pathlib.Path(filename).parent.parent.parent.parent.parent.name]

yield byte_ngram,label

def get_batch(data_iter, batch_size):

data = [(item) for item in itertools.islice(data_iter, batch_size)]

return data

def iter_batch(data_iter, batch_size):

data = get_batch(data_iter, batch_size)

while len(data):

try:

x,y = zip(*data)

yield x,y

except:

print("data error")

finally:

data = get_batch(data_iter, batch_size)

predicts = []

y_train = ()

for i, (x_batch_text, y_batch) in enumerate(minibatch_iterators):

x_batch = vectorizer.transform(x_batch_text)

# sgd

clf.partial_fit(x_batch, y_batch, classes=all_classes)

y_train += y_batch

predicts = np.hstack([predicts,clf.predict(x_batch)])

if i % 500 == 0:

print("iter %s ============== " % i)

metrics(y_train,predicts)

【1】

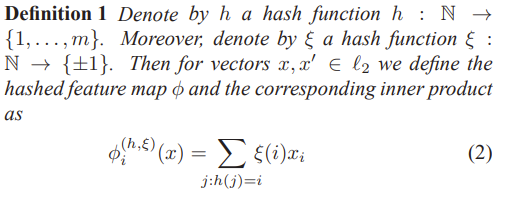

文中用到了HashingVectorizer , 在这里解释下

使用两个hash函数(避免原始特征的哈希后位置在一起导致词频累加特征值突然变大)

第一个hash函数:相当于分桶降维;第二个hash函数:hash到 {-1,1}

lightgbm

import lightgbm as lgb

def increment():

# 第一步,初始化模型为None,设置模型参数

gbm=None

params = {

'task': 'train',

'objective': 'multiclass',

'num_class':"3",

'boosting_type': 'gbdt',

'learning_rate': 0.1,

'num_leaves': 31,

'tree_learner': 'serial',

'min_data_in_leaf': 100,

'metric': ['multi_logloss','multi_error'],

'max_bin': 255,

'num_trees': 300

}

# 第二步,流式读取数据(每次10万)

CHUNK_SIZE = 1000000

all_data = pd.read_csv(path, chunksize=CHUNK_SIZE)

i = 0

for data_chunk in all_data:

print ('Size of uploaded chunk: %i instances, %i features' % (data_chunk.shape))

# preprocess

data_chunk = shuffle(data_chunk)

x_train, y_train = pipeline(data_chunk)

# 创建lgb的数据集

lgb_train = lgb.Dataset(x_train, y_train)

lgb_eval = lgb.Dataset(x_test, y_test)

# 第三步:增量训练模型

# 重点来了,通过 init_model 和 keep_training_booster 两个参数实现增量训练

gbm = lgb.train(params,

lgb_train,

num_boost_round=1000,

valid_sets=lgb_eval,

early_stopping_rounds=10,

verbose_eval=False,

init_model=gbm, # 如果gbm不为None,那么就是在上次的基础上接着训练

keep_training_booster=True) # 增量训练

# 输出模型评估分数

score_train = dict([(s[1], s[2]) for s in gbm.eval_train()])

score_valid = dict([(s[1], s[2]) for s in gbm.eval_valid()])

print('当前模型在训练集的得分是:loss=%.4f, erro=%.4f'%(score_train['multi_logloss'], score_train['multi_error']))

print('当前模型在测试集的得分是:loss=%.4f, erro=%.4f' % (score_valid['multi_logloss'], score_valid['multi_error']))

i += 1

return gbm

gbm = increment()

【2】

tensorflow

加载上次保存的网络,接着训练就好了

# 定义dataset

def load_and_preprocess_from_path_label(path, label):

return load_and_preprocess_image(path), label

def make_dataset(image_paths, image_labels, image_count, BATCH_SIZE=32, AUTOTUNE=tf.data.experimental.AUTOTUNE):

ds = tf.data.Dataset.from_tensor_slices((image_paths, image_labels))

image_label_ds = ds.map(load_and_preprocess_from_path_label, num_parallel_calls=AUTOTUNE)

# 设置一个和数据集大小一致的 shuffle buffer size(随机缓冲区大小)以保证数据

# 被充分打乱。

ds = image_label_ds.shuffle(buffer_size=image_count)

# ds = ds.repeat()

ds = ds.batch(BATCH_SIZE)

# 当模型在训练的时候,`prefetch` 使数据集在后台取得 batch。

ds = ds.prefetch(buffer_size=AUTOTUNE)

return ds

【3】

references:

【2】增量训练. https://zhuanlan.zhihu.com/p/41422048

【3】sklearn hash. https://www.cnblogs.com/pinard/p/6688348.html