MySQL 5.6的一个bug引发的故障

突然收到告警,提示mysql宕机了,该服务器是从库。于是尝试登录服务器看看能否登录,发现可以登录,查看mysql进程也存在,尝试登录提示

ERROR 1040 (HY000): Too many connections

最大连接数设置的3000,怎么会连接数不够了呢。于是使用gdb修改一下最大连接数:

gdb -p $(cat pid_mysql.pid) -ex "set max_connections=5000" -batch

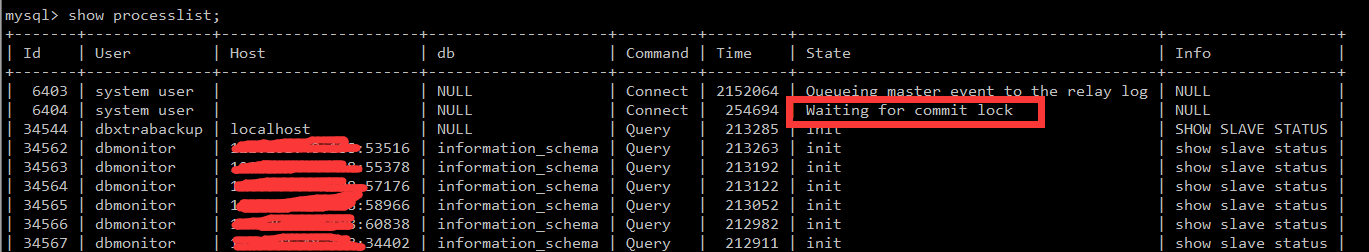

修改以后可以登录了,于是show processlist看看是啥情况:

发现监控程序执行show slave status都被卡住了,最后把最大连接数用完,导致Too many connections。复制卡在了Waiting for commit lock。查阅资料以后发现是触发了bug。https://bugs.mysql.com/bug.php?id=70307,改bug在5.6.23已经修复。我的版本是 5.6.17

mysql> SELECT a.trx_id, trx_state, trx_started, b.id AS thread_id, b.info, b.user, b.host, b.db, b.command, b.state FROM information_schema.`INNODB_TRX` a, information_schema.`PROCESSLI ST` b WHERE a.trx_mysql_thread_id = b.id ORDER BY a.trx_started; +----------+-----------+---------------------+-----------+------+-------------+------+------+---------+-------------------------+ | trx_id | trx_state | trx_started | thread_id | info | user | host | db | command | state | +----------+-----------+---------------------+-----------+------+-------------+------+------+---------+-------------------------+ | 51455154 | RUNNING | 2017-08-02 02:20:07 | 6404 | NULL | system user | | NULL | Connect | Waiting for commit lock | +----------+-----------+---------------------+-----------+------+-------------+------+------+---------+-------------------------+ 1 row in set (0.03 sec)

可以看到在凌晨2点左右的时候卡住的,突然发现凌晨2点这个时候正是xtrabackup备份数据的时间。xtrabackup备份的时候执行flushs tables with read lock和show slave status会有可能和SQL Thread形成死锁,导致SQL Thread一直被卡主。原因是SQL Thread的DML操作完成之后,持有rli->data_lock锁,commit的时候等待MDL_COMMIT,而flush tables with read lock之后执行的show slave status会等待rli->data_lock;修复方法是rli->data_lock锁周期只在DML操作期间持有。

stop slave没有用,正常停止没有用,最后只能kill -9,问题还是比较严重的,解决的方法就是升级新版本。

浙公网安备 33010602011771号

浙公网安备 33010602011771号