[2023-03-24 06:36:35 +0000] [217] [ERROR] Exception in ASGI application

Traceback (most recent call last):

File "/usr/local/lib/python3.8/dist-packages/uvicorn/protocols/http/h11_impl.py", line 407, in run_asgi

result = await app( # type: ignore[func-returns-value]

File "/usr/local/lib/python3.8/dist-packages/uvicorn/middleware/proxy_headers.py", line 78, in __call__

return await self.app(scope, receive, send)

File "/usr/local/lib/python3.8/dist-packages/fastapi/applications.py", line 199, in __call__

await super().__call__(scope, receive, send)

File "/usr/local/lib/python3.8/dist-packages/starlette/applications.py", line 112, in __call__

await self.middleware_stack(scope, receive, send)

File "/usr/local/lib/python3.8/dist-packages/starlette/middleware/errors.py", line 181, in __call__

raise exc from None

File "/usr/local/lib/python3.8/dist-packages/starlette/middleware/errors.py", line 159, in __call__

await self.app(scope, receive, _send)

File "/usr/local/lib/python3.8/dist-packages/starlette/middleware/base.py", line 26, in __call__

await response(scope, receive, send)

File "/usr/local/lib/python3.8/dist-packages/starlette/responses.py", line 224, in __call__

await run_until_first_complete(

File "/usr/local/lib/python3.8/dist-packages/starlette/concurrency.py", line 24, in run_until_first_complete

[task.result() for task in done]

File "/usr/local/lib/python3.8/dist-packages/starlette/concurrency.py", line 24, in <listcomp>

[task.result() for task in done]

File "/usr/local/lib/python3.8/dist-packages/starlette/responses.py", line 216, in stream_response

async for chunk in self.body_iterator:

File "/usr/local/lib/python3.8/dist-packages/starlette/middleware/base.py", line 56, in body_stream

task.result()

File "/usr/local/lib/python3.8/dist-packages/starlette/middleware/base.py", line 38, in coro

await self.app(scope, receive, send)

File "/usr/local/lib/python3.8/dist-packages/starlette_exporter/middleware.py", line 289, in __call__

await self.app(scope, receive, wrapped_send)

File "/usr/local/lib/python3.8/dist-packages/starlette/exceptions.py", line 82, in __call__

raise exc from None

File "/usr/local/lib/python3.8/dist-packages/starlette/exceptions.py", line 71, in __call__

await self.app(scope, receive, sender)

File "/usr/local/lib/python3.8/dist-packages/starlette/routing.py", line 580, in __call__

await route.handle(scope, receive, send)

File "/usr/local/lib/python3.8/dist-packages/starlette/routing.py", line 241, in handle

await self.app(scope, receive, send)

File "/usr/local/lib/python3.8/dist-packages/starlette/routing.py", line 55, in app

await response(scope, receive, send)

File "/usr/local/lib/python3.8/dist-packages/starlette/responses.py", line 146, in __call__

await self.background()

File "/usr/local/lib/python3.8/dist-packages/starlette/background.py", line 35, in __call__

await task()

File "/usr/local/lib/python3.8/dist-packages/starlette/background.py", line 20, in __call__

await run_in_threadpool(self.func, *self.args, **self.kwargs)

File "/usr/local/lib/python3.8/dist-packages/starlette/concurrency.py", line 40, in run_in_threadpool

return await loop.run_in_executor(None, func, *args)

File "/usr/lib/python3.8/concurrent/futures/thread.py", line 57, in run

result = self.fn(*self.args, **self.kwargs)

File "/app/ymir_app/app/libs/datasets.py", line 330, in ats_import_dataset_in_backgroud

task = crud.task.create_placeholder(

File "/app/ymir_app/app/crud/crud_task.py", line 81, in create_placeholder

db.commit()

File "/usr/local/lib/python3.8/dist-packages/sqlalchemy/orm/session.py", line 1428, in commit

self._transaction.commit(_to_root=self.future)

File "/usr/local/lib/python3.8/dist-packages/sqlalchemy/orm/session.py", line 829, in commit

self._prepare_impl()

File "/usr/local/lib/python3.8/dist-packages/sqlalchemy/orm/session.py", line 808, in _prepare_impl

self.session.flush()

File "/usr/local/lib/python3.8/dist-packages/sqlalchemy/orm/session.py", line 3298, in flush

self._flush(objects)

File "/usr/local/lib/python3.8/dist-packages/sqlalchemy/orm/session.py", line 3438, in _flush

transaction.rollback(_capture_exception=True)

File "/usr/local/lib/python3.8/dist-packages/sqlalchemy/util/langhelpers.py", line 70, in __exit__

compat.raise_(

File "/usr/local/lib/python3.8/dist-packages/sqlalchemy/util/compat.py", line 207, in raise_

raise exception

File "/usr/local/lib/python3.8/dist-packages/sqlalchemy/orm/session.py", line 3398, in _flush

flush_context.execute()

File "/usr/local/lib/python3.8/dist-packages/sqlalchemy/orm/unitofwork.py", line 456, in execute

rec.execute(self)

File "/usr/local/lib/python3.8/dist-packages/sqlalchemy/orm/unitofwork.py", line 630, in execute

util.preloaded.orm_persistence.save_obj(

File "/usr/local/lib/python3.8/dist-packages/sqlalchemy/orm/persistence.py", line 242, in save_obj

_emit_insert_statements(

File "/usr/local/lib/python3.8/dist-packages/sqlalchemy/orm/persistence.py", line 1219, in _emit_insert_statements

result = connection._execute_20(

File "/usr/local/lib/python3.8/dist-packages/sqlalchemy/engine/base.py", line 1582, in _execute_20

return meth(self, args_10style, kwargs_10style, execution_options)

File "/usr/local/lib/python3.8/dist-packages/sqlalchemy/sql/elements.py", line 323, in _execute_on_connection

return connection._execute_clauseelement(

File "/usr/local/lib/python3.8/dist-packages/sqlalchemy/engine/base.py", line 1451, in _execute_clauseelement

ret = self._execute_context(

File "/usr/local/lib/python3.8/dist-packages/sqlalchemy/engine/base.py", line 1813, in _execute_context

self._handle_dbapi_exception(

File "/usr/local/lib/python3.8/dist-packages/sqlalchemy/engine/base.py", line 1994, in _handle_dbapi_exception

util.raise_(

File "/usr/local/lib/python3.8/dist-packages/sqlalchemy/util/compat.py", line 207, in raise_

raise exception

File "/usr/local/lib/python3.8/dist-packages/sqlalchemy/engine/base.py", line 1770, in _execute_context

self.dialect.do_execute(

File "/usr/local/lib/python3.8/dist-packages/sqlalchemy/engine/default.py", line 717, in do_execute

cursor.execute(statement, parameters)

File "/usr/local/lib/python3.8/dist-packages/pymysql/cursors.py", line 148, in execute

result = self._query(query)

File "/usr/local/lib/python3.8/dist-packages/pymysql/cursors.py", line 310, in _query

conn.query(q)

File "/usr/local/lib/python3.8/dist-packages/pymysql/connections.py", line 548, in query

self._affected_rows = self._read_query_result(unbuffered=unbuffered)

File "/usr/local/lib/python3.8/dist-packages/pymysql/connections.py", line 775, in _read_query_result

result.read()

File "/usr/local/lib/python3.8/dist-packages/pymysql/connections.py", line 1156, in read

first_packet = self.connection._read_packet()

File "/usr/local/lib/python3.8/dist-packages/pymysql/connections.py", line 692, in _read_packet

packet_header = self._read_bytes(4)

File "/usr/local/lib/python3.8/dist-packages/pymysql/connections.py", line 748, in _read_bytes

raise err.OperationalError(

sqlalchemy.exc.OperationalError: (pymysql.err.OperationalError) (2013, 'Lost connection to MySQL server during query')

[SQL: INSERT INTO task (name, hash, type, state, parameters, config, percent, duration, error_code, user_id, project_id, dataset_id, model_stage_id, is_terminated, is_deleted, last_message_datetime, create_datetime, update_datetime) VALUES (%(name)s, %(hash)s, %(type)s, %(state)s, %(parameters)s, %(config)s, %(percent)s, %(duration)s, %(error_code)s, %(user_id)s, %(project_id)s, %(dataset_id)s, %(model_stage_id)s, %(is_terminated)s, %(is_deleted)s, %(last_message_datetime)s, %(create_datetime)s, %(update_datetime)s)]

[parameters: {'name': 't0000001000012b2ae341679639795', 'hash': 't0000001000012b2ae341679639795', 'type': 5, 'state': 1, 'parameters': '{"group_name": "from_ats_6579a9116a", "description": null, "project_id": 12, "input_url": null, "input_dataset_id": null, "input_dataset_name": null, "input_path": "/data/ymir-workplace/ymir-sharing/3c87e23bb8904b638a9479d6e68aea23", "strategy": 4, "source": 5, "import_type": 5}', 'config': None, 'percent': 0, 'duration': None, 'error_code': None, 'user_id': 1, 'project_id': 12, 'dataset_id': None, 'model_stage_id': None, 'is_terminated': 0, 'is_deleted': 0, 'last_message_datetime': datetime.datetime(2023, 3, 24, 6, 36, 35, 351864), 'create_datetime': datetime.datetime(2023, 3, 24, 6, 36, 35, 351870), 'update_datetime': datetime.datetime(2023, 3, 24, 6, 36, 35, 351873)}]

(Background on this error at: http://sqlalche.me/e/14/e3q8)

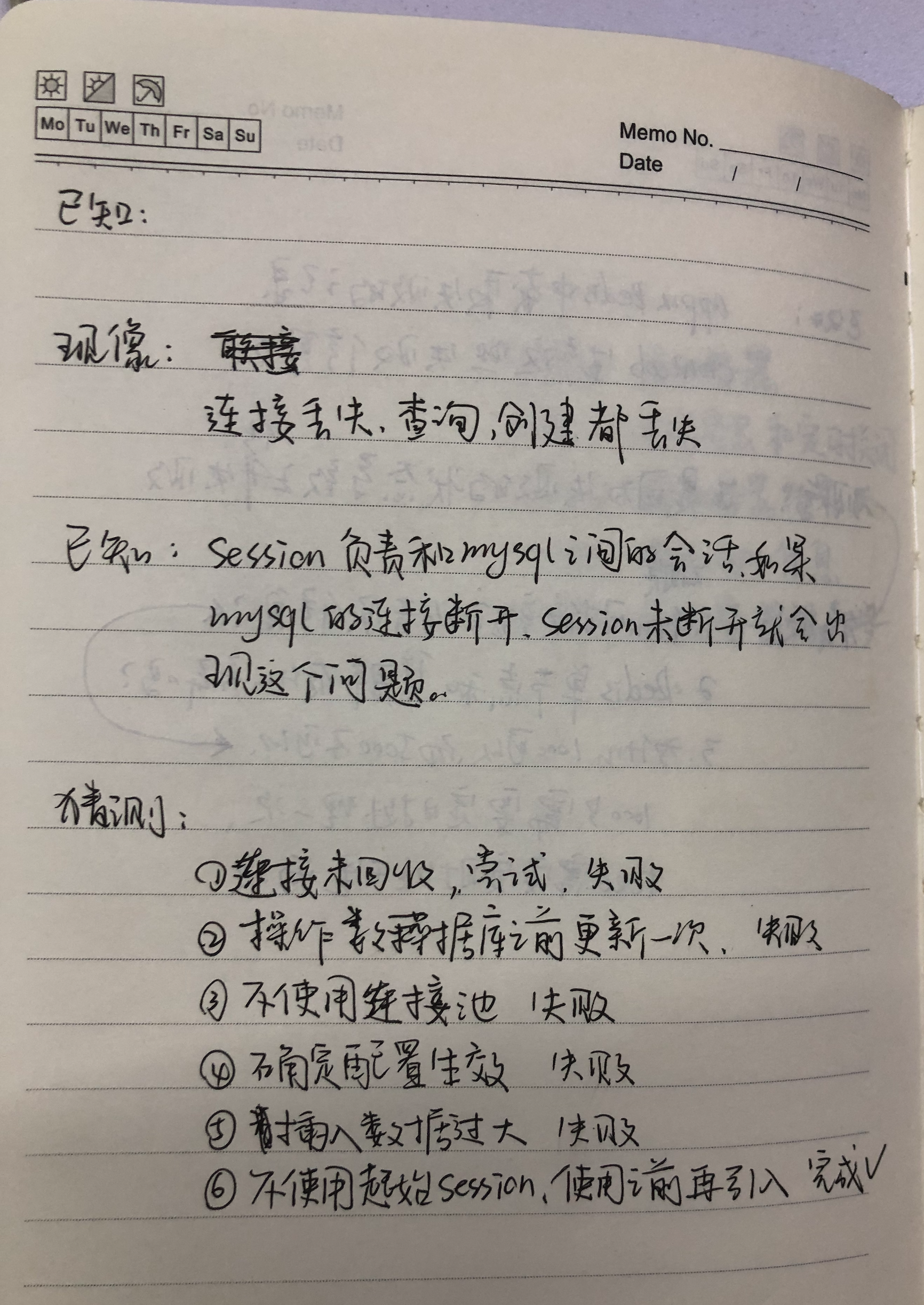

从字面意思来看就是数据库在查询时丢失了连接,这里的连接也就是session。这个接口是一个操作很多的任务,要下载大量数据集,通常在20G以上,所以设计成异步接口。请求接口之后获取一个数据库session,然后处理简单任务直接返回一个成功的状态,最后将耗时任务放在后台任务完成。这里的后台任务是Fastapi自身的功能,专门用于处理一些小型的耗时任务,如发送邮件等。lost connect 就是发生在后台任务中。

抽象任务流程:

所以通过分析这个任务的流程可以发现是持有session过长导致的。从接口请求的开始就获取了该session,然后将session传递到后台任务中,经过30分钟之后才再次使用该session,就发生了lost connection的问题。

知道问题症状所在就知道如何对症下药的了,就是在后台下载任务30分钟之后更新数据库时重新获取一个session,不复用之前的session,这样就就解决了这个问题。

这个问题之所以没有发现是因为按照官网的介绍pool_recycle字段就是负责回收session,配合pool_pre_ping每次使用session之前检查一次就能解决这个session断联的问题。但是似乎在配置的pool_recycle醒没有生效。

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· winform 绘制太阳,地球,月球 运作规律

· 震惊!C++程序真的从main开始吗?99%的程序员都答错了

· AI与.NET技术实操系列(五):向量存储与相似性搜索在 .NET 中的实现

· 超详细:普通电脑也行Windows部署deepseek R1训练数据并当服务器共享给他人

· 【硬核科普】Trae如何「偷看」你的代码?零基础破解AI编程运行原理

2022-04-10 《天才源自刻意练习》读书笔记