The Functional API


Setup

import numpy as np
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers

Introduction

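The Keras functional API is a way to create models that are more flexible than those built with the tf.keras.Sequential API. The functional API can handle models with non-linear topology, shared layers, and even multiple inputs or outputs.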

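The main idea is that a deep learning model is usually a directed acyclic graph (DAG) of layers. The functional API is therefore a way to build graphs of layers. A Sequential model, which is a plain linear stack of layers, cannot express such graphs.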
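To make the "graph of layers" idea concrete, here is a minimal pure-Python sketch. It is not Keras code. The node names and the plain functions that stand in for layers are hypothetical. The sketch evaluates a small DAG with one branch and one merge in topological order, which is the kind of topology a linear stack cannot represent.

```python
# A toy directed acyclic graph (DAG) of "layers", evaluated in topological order.
# Node names and functions are hypothetical stand-ins for Keras layers.
from graphlib import TopologicalSorter

# Each node maps to (function, list of parent node names); "inputs" has no function.
graph = {
    "inputs": (None, []),
    "double": (lambda x: 2 * x, ["inputs"]),
    "plus_one": (lambda x: x + 1, ["inputs"]),
    # A merge node with two parents: branching and merging like this is
    # exactly what a purely linear stack of layers cannot express.
    "add": (lambda a, b: a + b, ["double", "plus_one"]),
}


def run_graph(graph, value):
    """Evaluate every node only after all of its parents have been evaluated."""
    sorter = TopologicalSorter({name: parents for name, (_, parents) in graph.items()})
    results = {}
    for name in sorter.static_order():
        fn, parents = graph[name]
        results[name] = value if fn is None else fn(*(results[p] for p in parents))
    return results


print(run_graph(graph, 3)["add"])  # 2*3 + (3+1) = 10
```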

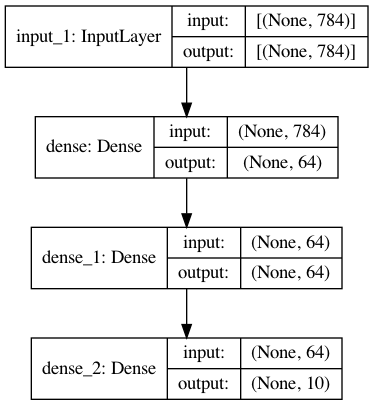

For example, consider the following model:

(input: 784-dimensional vectors)
↧
[Dense (64 units, relu activation)]
↧
[Dense (64 units, relu activation)]
↧
[Dense (10 units, softmax activation)]
↧
(output: logits of a probability distribution over 10 classes)

This is a basic graph with three layers. To build this model with the functional API, start by creating an input node:

inputs = keras.Input(shape=(784,))

The shape of the data is set as a 784-dimensional vector. The batch size is omitted, because only the shape of each sample is specified.

If, for example, you have an image input with a shape of (32, 32, 3), you would use:

# Just for demonstration purposes.
img_inputs = keras.Input(shape=(32, 32, 3))

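The returned inputs object contains information about the shape and dtype of the input data that you feed to your model. Here is the shape: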

inputs.shape

TensorShape([None, 784])

inputs.dtype

tf.float32

You create a new node in the graph of layers by calling a layer on this inputs object:

dense = layers.Dense(64, activation="relu")
x = dense(inputs)

# Or, equivalently, in a single line:
x = layers.Dense(64, activation="relu")(inputs)
# This is also why it is called "functional": the call has the form f(x).
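The "construct, then call" pattern can be mimicked with any callable object. The sketch below uses a hypothetical `TinyDense` class, which is not a Keras class, and hard-coded weights in place of learned ones. It shows that creating the layer configures it, calling it on an input returns an output as in y = f(x), and layers chain like nested function calls. The batch size can be anything, which matches the point above that only the per-sample shape is declared.

```python
# A toy stand-in for a Dense layer. TinyDense is hypothetical, not a Keras API;
# its weights are fixed here instead of being learned.
class TinyDense:
    def __init__(self, weights, bias, relu=True):
        self.weights = weights  # shape (input_dim, units), as nested lists
        self.bias = bias        # shape (units,)
        self.relu = relu

    def __call__(self, batch):
        # batch has shape (batch_size, input_dim); any batch_size works,
        # because the weights only depend on the per-sample input_dim.
        out = []
        for row in batch:
            units = []
            for j in range(len(self.bias)):
                z = sum(row[i] * self.weights[i][j] for i in range(len(row))) + self.bias[j]
                units.append(max(0.0, z) if self.relu else z)
            out.append(units)
        return out


dense = TinyDense(weights=[[1.0, -1.0], [0.5, 2.0]], bias=[0.0, 1.0])
x = dense([[1.0, 2.0], [3.0, -4.0]])  # call the layer on a batch of 2 samples
print(x)  # [[2.0, 4.0], [1.0, 0.0]]

# Chaining layers is function composition: y = g(f(inputs)).
y = TinyDense(weights=[[1.0], [1.0]], bias=[0.0], relu=False)(x)
print(y)  # [[6.0], [1.0]]
```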

The "layer call" action is like drawing an arrow from "inputs" to the layer you created. You are "passing" the inputs to the dense layer, and you get x as the output.

Let's add a few more layers to the graph of layers:

x = layers.Dense(64, activation="relu")(x)
outputs = layers.Dense(10)(x)
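The last layer here, layers.Dense(10), has no activation, so the model outputs raw logits rather than applying the softmax drawn in the diagram. When the model is compiled with SparseCategoricalCrossentropy(from_logits=True), the softmax is folded into the loss. The pure-Python sketch below illustrates that equivalence conceptually. It is not the Keras implementation. The example logits and the helper names `softmax` and `sparse_ce_from_logits` are made up for illustration.

```python
import math


def softmax(logits):
    # Subtract the max logit first for numerical stability; the result is unchanged.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sparse_ce_from_logits(logits, label):
    # Cross-entropy for an integer class label computed directly from logits
    # via log-softmax: loss = logsumexp(z) - z[label].
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


logits = [2.0, 1.0, 0.1]  # made-up logits for a 3-class example
probs = softmax(logits)
print(round(sum(probs), 6))  # 1.0
# Same value (up to floating-point rounding) as applying softmax, then -log(p):
print(sparse_ce_from_logits(logits, 0), -math.log(probs[0]))
```

Computing the loss from logits avoids taking the log of a probability that may have underflowed to zero, which is one common reason to leave the final layer linear.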

At this point, you can create a Model by specifying its inputs and outputs in the graph of layers:

model = keras.Model(inputs=inputs, outputs=outputs, name="mnist_model")

Let's check out what the model summary looks like:

model.summary()

Model: "mnist_model"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
input_1 (InputLayer)         [(None, 784)]             0
_________________________________________________________________
dense (Dense)                (None, 64)                50240
_________________________________________________________________
dense_1 (Dense)              (None, 64)                4160
_________________________________________________________________
dense_2 (Dense)              (None, 10)                650
=================================================================
Total params: 55,050
Trainable params: 55,050
Non-trainable params: 0
_________________________________________________________________
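The Param # column follows directly from the layer sizes. A Dense layer with a bias has one weight per (input, unit) pair plus one bias per unit. Bias is on by default in Keras Dense, and this sketch assumes it. Recomputing the three Dense layers reproduces the summary's total.

```python
def dense_params(input_dim, units):
    # One weight per (input, unit) pair plus one bias per unit.
    return input_dim * units + units


layer_sizes = [784, 64, 64, 10]  # input width, then the units of each Dense layer
counts = [dense_params(i, o) for i, o in zip(layer_sizes, layer_sizes[1:])]
print(counts, sum(counts))  # [50240, 4160, 650] 55050
```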

You can also plot the model as a graph, and optionally display the input and output shapes of each layer in the plotted graph:

keras.utils.plot_model(model, "my_first_model_with_shape_info.png", show_shapes=True)

This figure and the code are almost identical. In the code version, the connection arrows are replaced by the call operation.

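A "graph of layers" is an intuitive mental image for a deep learning model, and the functional API is a way to create models that closely mirror this image.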

Training, evaluation, and inference

Training, evaluation, and inference work exactly the same way for models built using the functional API as for Sequential models.

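The Model class offers a built-in training loop (the fit() method) and a built-in evaluation loop (the evaluate() method). Note that you can easily customize these loops to implement training routines beyond supervised learning (e.g. GANs).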

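Here, load the MNIST image data, reshape it into vectors, fit the model on the data (while monitoring performance on a validation split), and then evaluate the model on the test data: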

(x_train, y_train), (x_test, y_test) = keras.datasets.mnist.load_data()
x_train = x_train.reshape(60000, 784).astype("float32") / 255
x_test = x_test.reshape(10000, 784).astype("float32") / 255
model.compile(
    loss=keras.losses.SparseCategoricalCrossentropy(from_logits=True),
    optimizer=keras.optimizers.RMSprop(),
    metrics=["accuracy"],
)
history = model.fit(x_train, y_train, batch_size=64, epochs=2, validation_split=0.2)
test_scores = model.evaluate(x_test, y_test, verbose=2)
print("Test loss:", test_scores[0])
print("Test accuracy:", test_scores[1])

Epoch 1/2
750/750 [==============================] - 2s 2ms/step - loss: 0.5648 - accuracy: 0.8473 - val_loss: 0.1793 - val_accuracy: 0.9474
Epoch 2/2
750/750 [==============================] - 1s 1ms/step - loss: 0.1686 - accuracy: 0.9506 - val_loss: 0.1398 - val_accuracy: 0.9576
313/313 - 0s - loss: 0.1401 - accuracy: 0.9580
Test loss: 0.14005452394485474
Test accuracy: 0.9580000042915344
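The step counts in this log (750 per epoch, 313 for evaluation) can be checked by hand. With validation_split=0.2, 20% of the 60,000 training samples (taken from the end of the arrays) are held out. The remaining 48,000 samples form batches of 64, and a final partial batch would still count as one step. evaluate() uses 32 samples per batch when batch_size is not passed. The helper `steps_per_epoch` below is a hypothetical sketch of that arithmetic, not a Keras function.

```python
import math


def steps_per_epoch(num_samples, batch_size, validation_split=0.0):
    # Hold out the validation fraction, then count batches; a final partial
    # batch still counts as one step, hence the ceiling.
    train_samples = num_samples - int(num_samples * validation_split)
    return math.ceil(train_samples / batch_size)


print(steps_per_epoch(60000, 64, validation_split=0.2))  # 750
print(steps_per_epoch(10000, 32))  # 313 (evaluate() with its default batch size of 32)
```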

For further reading, see the training and evaluation guide.

Save and serialize

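Saving and serializing a model work the same way for models built using the functional API as for Sequential models. The standard way to save a functional model is to call model.save() to save the entire model to a single path. You can later recreate the same model from it, even if the code that built the model is no longer available.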

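The saved model includes:
- the model architecture
- the model weight values (learned during training)
- the model training config, if any (as passed to compile())
- the optimizer and its state, if any (to restart training where you left off)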

model.save("path_to_my_model")
del model
# Recreate the exact same model purely from the file:
model = keras.models.load_model("path_to_my_model")

INFO:tensorflow:Assets written to: path_to_my_model/assets

For details, read the model serialization & saving guide.

Use the same graph of layers to define multiple models

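In the functional API, models are created by specifying their inputs and outputs in a graph of layers. This means that a single graph of layers can be used to generate multiple models.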
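Because models only point into the graph, two models built from the same layers share those layer objects, including their weights. The pure-Python sketch below illustrates this with a hypothetical `Scale` "layer" and two hypothetical model functions, neither of which is a Keras API. Changing the shared layer's state, as training the larger model would, changes the output of both models.

```python
# A toy illustration: one graph of layers, two "models" that reuse the same layer
# objects. Scale, encoder and autoencoder are hypothetical stand-ins, not Keras APIs.
class Scale:
    def __init__(self, factor):
        self.factor = factor  # stands in for a layer's trainable weights

    def __call__(self, x):
        return x * self.factor


enc1, enc2, dec = Scale(2.0), Scale(3.0), Scale(0.5)


def encoder(x):       # inputs -> enc1 -> enc2
    return enc2(enc1(x))


def autoencoder(x):   # inputs -> enc1 -> enc2 -> dec, reusing the same layer objects
    return dec(encoder(x))


print(encoder(1.0), autoencoder(1.0))  # 6.0 3.0
enc1.factor = 10.0    # e.g. weights updated while training the autoencoder
print(encoder(1.0), autoencoder(1.0))  # 30.0 15.0
```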

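In the example below, the same stack of layers is used to instantiate two models: an encoder model that turns image inputs into 16-dimensional vectors, and an end-to-end autoencoder model for training.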
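The shapes in this encoder/decoder pair can be worked out by hand. The sketch assumes the Keras defaults used in the code: "valid" padding and stride 1 for the convolutions, pool stride equal to the pool size for MaxPooling2D(3), and a bias per filter. The helper functions are hypothetical names for the standard output-size formulas. The result shows why the encoder ends in a 16-dimensional vector, which Reshape((4, 4, 1)) turns into a 4x4x1 map, and why the decoder returns to 28x28.

```python
def conv_out(size, kernel):
    # Conv2D with padding="valid", stride 1
    return size - kernel + 1


def pool_out(size, pool):
    # MaxPooling2D with strides defaulting to pool_size, padding="valid"
    return (size - pool) // pool + 1


def conv_transpose_out(size, kernel):
    # Conv2DTranspose with padding="valid", stride 1
    return size + kernel - 1


def conv_params(kernel, in_ch, out_ch):
    # kernel*kernel weights per (input channel, filter) pair, plus one bias per filter
    return kernel * kernel * in_ch * out_ch + out_ch


# Encoder: 28 -> Conv 26 -> Conv 24 -> MaxPool 8 -> Conv 6 -> Conv 4 (16 channels).
# GlobalMaxPooling2D keeps one value per channel, giving a 16-d vector.
s = pool_out(conv_out(conv_out(28, 3), 3), 3)
s = conv_out(conv_out(s, 3), 3)

# Decoder from the 4x4x1 reshape: 4 -> 6 -> 8 -> UpSampling2D(3) 24 -> 26 -> 28
d = conv_transpose_out(conv_transpose_out(4, 3), 3) * 3
d = conv_transpose_out(conv_transpose_out(d, 3), 3)

encoder_params = [conv_params(3, 1, 16), conv_params(3, 16, 32),
                  conv_params(3, 32, 32), conv_params(3, 32, 16)]
print(s, d, encoder_params, sum(encoder_params))
# 4 28 [160, 4640, 9248, 4624] 18672
```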

# Encoder: 28x28x1 image -> 16-dimensional vector
encoder_input = keras.Input(shape=(28, 28, 1), name="img")
x = layers.Conv2D(16, 3, activation="relu")(encoder_input)
x = layers.Conv2D(32, 3, activation="relu")(x)
x = layers.MaxPooling2D(3)(x)
x = layers.Conv2D(32, 3, activation="relu")(x)
x = layers.Conv2D(16, 3, activation="relu")(x)
encoder_output = layers.GlobalMaxPooling2D()(x)
encoder = keras.Model(encoder_input, encoder_output, name="encoder")
encoder.summary()
# Decoder: reuses encoder_output, so the autoencoder shares the encoder's layers
x = layers.Reshape((4, 4, 1))(encoder_output)
x = layers.Conv2DTranspose(16, 3, activation="relu")(x)
x = layers.Conv2DTranspose(32, 3, activation="relu")(x)
x = layers.UpSampling2D(3)(x)
x = layers.Conv2DTranspose(16, 3, activation="relu")(x)
decoder_output = layers.Conv2DTranspose(1, 3, activation="relu")(x)
autoencoder = keras.Model(encoder_input, decoder_output, name="autoencoder")
autoencoder.summary()

Model: "encoder"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
img (InputLayer)             [(None, 28, 28, 1)]       0
_________________________________________________________________
conv2d (Conv2D)              (None, 26, 26, 16)        160
_________________________________________________________________
conv2d_1 (Conv2D)            (None, 24, 24, 32)        4640
_________________________________________________________________
max_pooling2d (MaxPooling2D) (None, 8, 8, 32)          0
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 6, 6, 32)          9248
_________________________________________________________________
conv2d_3 (Conv2D)            (None, 4, 4, 16)          4624
_________________________________________________________________
global_max_pooling2d (Global (None, 16)                0
=================================================================
Total params: 18,672
Trainable params: 18,672
Non-trainable params: 0
_________________________________________________________________
Model: "autoencoder"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
img (InputLayer)             [(None, 28, 28, 1)]       0
_________________________________________________________________
conv2d (Conv2D)              (None, 26, 26, 16)        160
_________________________________________________________________
conv2d_1 (Conv2D)            (None, 24, 24, 32)        4640
_________________________________________________________________
max_pooling2d (MaxPooling2D) (None, 8, 8, 32)          0
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 6, 6, 32)          9248
_________________________________________________________________
conv2d_3 (Conv2D)            (None, 4, 4, 16)          4624
_________________________________________________________________
global_max_pooling2d (Global (None, 16)                0
_________________________________________________________________ reshape (