Week 7 Assignment: Chapter 12, Sentiment Analysis

Week 7 Hands-on Assignment

```python
import pandas as pd
import re
import jieba.posseg as psg
import numpy as np


# Deduplicate: drop reviews that are exact duplicates
reviews = pd.read_csv("reviews.csv")
reviews = reviews[['content', 'content_type']].drop_duplicates()
content = reviews['content']
```
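As a minimal illustration of what `drop_duplicates()` does here, the plain-Python sketch below treats a review as a duplicate only when both `content` and `content_type` match an earlier row, and keeps the first occurrence. The helper name `drop_exact_duplicates` and the sample rows are hypothetical.

```python
def drop_exact_duplicates(rows):
    """Keep the first occurrence of each (content, content_type) pair, in order.

    Hypothetical helper mirroring DataFrame.drop_duplicates() with keep='first'.
    """
    # dict keys are unique and preserve insertion order (Python 3.7+)
    return list(dict.fromkeys(rows))


reviews = [
    ("很好用", "pos"),
    ("加热太慢", "neg"),
    ("很好用", "pos"),  # exact duplicate of the first row: dropped
    ("很好用", "neg"),  # same text, different label: kept
]
print(drop_exact_duplicates(reviews))
# [('很好用', 'pos'), ('加热太慢', 'neg'), ('很好用', 'neg')]
```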

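```python
# Remove English letters, digits, etc.
# The reviews are mainly about Midea (美的) electric water heaters (电热水器) sold on
# JD.com (京东), so these words are removed as well
# (no trailing '|': an empty alternative only matches the empty string)
strinfo = re.compile('[0-9a-zA-Z]|京东|美的|电热水器|热水器')
content = content.apply(lambda x: strinfo.sub('', x))
```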
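A quick check of the cleaning pattern on two made-up sentences. It also exposes a side effect worth knowing: because `美的` is removed wherever it occurs, it is also cut out of the common adjective `完美的` ("perfect"), leaving a fragment behind. The `clean` wrapper is a hypothetical name.

```python
import re

# The cleaning pattern from the step above
strinfo = re.compile('[0-9a-zA-Z]|京东|美的|电热水器|热水器')


def clean(text):
    # Delete every match of the pattern
    return strinfo.sub('', text)


print(clean('美的电热水器60L很好用，京东送货快'))  # 很好用，送货快
# Side effect: '美的' is also removed from inside '完美的' ("perfect")
print(clean('很完美的服务'))  # 很完服务
```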

```python
import numpy as np

# Word segmentation with part-of-speech tags
worker = lambda s: [(x.word, x.flag) for x in psg.cut(s)]  # simple custom segmentation function
seg_word = content.apply(worker)

# Convert the words into a data frame: one column for the word, one for the ID of the
# review it belongs to, and (added at the end) one for its position in that review
n_word = seg_word.apply(lambda x: len(x))  # number of words in each review

n_content = [[x + 1] * y for x, y in zip(list(seg_word.index), list(n_word))]
index_content = sum(n_content, [])  # flatten the nested lists: review ID of each word

seg_word = sum(seg_word, [])
word = [x[0] for x in seg_word]  # words
nature = [x[1] for x in seg_word]  # part-of-speech tags

content_type = [[x] * y for x, y in zip(list(reviews['content_type']), list(n_word))]
content_type = sum(content_type, [])  # review type (label)

result = pd.DataFrame({"index_content": index_content,
                       "word": word,
                       "nature": nature,
                       "content_type": content_type})

# Remove punctuation
result = result[result['nature'] != 'x']  # jieba tags punctuation as 'x'

# Remove stop words (the with-block closes the file afterwards)
with open("stoplist.txt", 'r', encoding='UTF-8') as stop_file:
    stop = [x.replace('\n', '') for x in stop_file.readlines()]
result = result[~result['word'].isin(stop)].copy()

# Position of each word within its review, counting from 0
result['index_word'] = result.groupby('index_content').cumcount()
```
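The reshaping above (one row per word, tagged with its review ID and position, with punctuation and stop words removed) can be sketched in plain Python as follows. This is an illustrative sketch, not the assignment's code: `to_long_rows` is a hypothetical helper, the input is assumed to be already segmented into `(word, flag)` pairs as `psg.cut` would yield them, and review IDs are simply numbered from 1 instead of being taken from the DataFrame index. Building the rows review by review also avoids `sum(list_of_lists, [])`, which copies the accumulated list at every step.

```python
def to_long_rows(seg_reviews, stop_words):
    """Hypothetical helper: per-review (word, flag) lists ->
    (review_id, word, flag, position) rows.

    Punctuation (flag 'x') and stop words are dropped first, so positions
    count only the surviving words, starting from 0.
    """
    rows = []
    for review_id, tokens in enumerate(seg_reviews, start=1):
        kept = [(w, f) for w, f in tokens if f != 'x' and w not in stop_words]
        rows.extend((review_id, w, f, pos) for pos, (w, f) in enumerate(kept))
    return rows


seg = [
    [('热水器', 'n'), ('很', 'd'), ('好', 'a'), ('！', 'x')],
    [('的', 'uj'), ('安装', 'v'), ('师傅', 'n')],
]
stop_words = {'很', '的'}
for row in to_long_rows(seg, stop_words):
    print(row)
```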

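```python
# Keep only reviews that contain at least one noun-type word
# (any POS tag containing the letter 'n' counts as noun-type here)
ind = result[['n' in x for x in result['nature']]]['index_content'].unique()
result = result[result['index_content'].isin(ind)]
```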
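The test `'n' in x` matches any tag that merely contains the letter `n`, so jieba tags such as `vn` (verbal noun) or `an` (adjectival noun) also count as "noun-type". The sketch below contrasts that behaviour with a stricter prefix test; the stricter variant and both function names are assumptions offered for comparison, not the method used above.

```python
def has_noun_loose(flags):
    # Same test as above: any tag that contains the letter 'n'
    return any('n' in f for f in flags)


def has_noun_strict(flags):
    # Stricter alternative (assumption): only tags that start with 'n', e.g. n, nr, ns, nz
    return any(f.startswith('n') for f in flags)


flags = ['vn', 'a', 'd']  # verbal noun, adjective, adverb
print(has_noun_loose(flags), has_noun_strict(flags))  # True False
```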

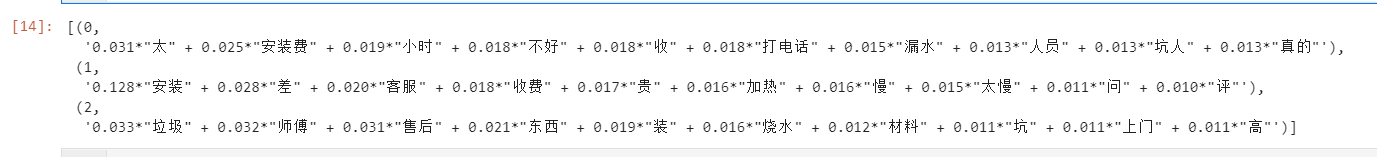

```python
import matplotlib.pyplot as plt
from wordcloud import WordCloud

# Fix Chinese character display in matplotlib
plt.rcParams['font.sans-serif'] = ['SimHei']
plt.rcParams['axes.unicode_minus'] = False

# Word frequencies, most frequent first
frequencies = result.groupby(by=['word'])['word'].count()
frequencies = frequencies.sort_values(ascending=False)
background_image = plt.imread('pl.jpg')  # mask image that gives the cloud its shape
wordcloud = WordCloud(font_path=r"C:\Windows\Fonts\STZHONGS.ttf",  # raw string: Windows path, backslashes kept literal
                      max_words=100,
                      background_color='white',
                      mask=background_image)
my_wordcloud = wordcloud.fit_words(frequencies.to_dict())
plt.imshow(my_wordcloud)
plt.title('学号:3150')  # "Student ID: 3150"
plt.axis('off')
plt.show()

# Write the results out
result.to_csv("word.csv", index=False, encoding='utf-8')
```
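For reference, the sorted frequency table fed to the word cloud can also be built with `collections.Counter`; the result is a word-to-frequency dict of the kind `WordCloud.fit_words()` accepts. The sample words are made up.

```python
from collections import Counter

words = ['安装', '师傅', '安装', '加热', '安装', '师傅']
# most_common() sorts by count, highest first (ties keep first-seen order)
frequencies = dict(Counter(words).most_common())
print(frequencies)  # {'安装': 3, '师傅': 2, '加热': 1}
```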

```python
import pandas as pd
import numpy as np

word = pd.read_csv("word.csv")


def read_words(path):
    # Read a one-word-per-line lexicon file into a set, skipping blank lines.
    # (Recent pandas versions reject sep="\n", so the file is read directly.)
    with open(path, encoding='utf-8') as f:
        return {line.strip() for line in f if line.strip()}


# Read the positive/negative evaluation words and sentiment words
pos_comment = read_words("正面评价词语(中文).txt")  # positive evaluation words
neg_comment = read_words("负面评价词语(中文).txt")  # negative evaluation words
pos_emotion = read_words("正面情感词语(中文).txt")  # positive sentiment words
neg_emotion = read_words("负面情感词语(中文).txt")  # negative sentiment words

# Merge the sentiment words with the evaluation words
positive = pos_comment | pos_emotion
negative = neg_comment | neg_emotion
intersection = positive & negative  # words that appear in both the positive and negative lists
positive = list(positive - intersection)
negative = list(negative - intersection)
positive = pd.DataFrame({"word": positive, "weight": [1] * len(positive)})
negative = pd.DataFrame({"word": negative, "weight": [-1] * len(negative)})

# DataFrame.append was removed in pandas 2.0; pd.concat replaces it
posneg = pd.concat([positive, negative], ignore_index=True)

# Merge the segmentation results with the sentiment lexicon to locate sentiment words
data_posneg = posneg.merge(word, on='word', how='right')
data_posneg = data_posneg.sort_values(by=['index_content', 'index_word'])
```
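The lexicon construction above boils down to set operations: words that appear in both the positive and negative lists are treated as ambiguous and dropped, and every remaining word gets a weight of +1 or -1. A minimal sketch with made-up word lists (`build_lexicon` is a hypothetical name); a lookup that returns `None` plays the role of the `NaN` weight that the right merge produces for non-sentiment words.

```python
def build_lexicon(pos_words, neg_words):
    """Hypothetical helper: word -> +1 / -1, dropping words found in both lists."""
    pos_words, neg_words = set(pos_words), set(neg_words)
    ambiguous = pos_words & neg_words
    lexicon = {w: 1 for w in pos_words - ambiguous}
    lexicon.update({w: -1 for w in neg_words - ambiguous})
    return lexicon


lexicon = build_lexicon({'好', '满意', '一般'}, {'差', '慢', '一般'})
tokens = ['安装', '好', '一般', '慢']
print([lexicon.get(t) for t in tokens])  # [None, 1, None, -1]
```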

```python
# Code 12-7: Correct the sentiment orientation

# Correct the sentiment value depending on whether a negation word or a double
# negation precedes the sentiment word
# Load the negation-word list
notdict = pd.read_csv("not.csv")
not_words = set(notdict['term'])  # test membership against the words themselves, not the Series index

# Handle negation modifiers
data_posneg = data_posneg.reset_index(drop=True)  # row labels now equal row positions
data_posneg['amend_weight'] = data_posneg['weight']  # new column: sentiment value after negation correction
only_inclination = data_posneg.dropna(subset=['weight'])  # keep only words that carry a sentiment value

for row in only_inclination.itertuples():
    # Words of the review containing this sentiment word, in order of position
    review = data_posneg.loc[data_posneg['index_content'] == row.index_content, 'word'].tolist()
    affective = row.index_word  # position of the sentiment word within the review
    # Count negation words among the (up to) two preceding words
    ne = sum(w in not_words for w in review[max(affective - 2, 0):affective])
    if ne == 1:  # a single negation flips the polarity; a double negation keeps it
        data_posneg.loc[row.Index, 'amend_weight'] = -row.weight

# Re-extract the sentiment words so that the corrected values are used
only_inclination = data_posneg.dropna(subset=['weight'])

# Sentiment value of each review
emotional_value = only_inclination.groupby(['index_content'],
                                           as_index=False)['amend_weight'].sum()

# Drop reviews whose sentiment value is 0
emotional_value = emotional_value[emotional_value['amend_weight'] != 0]
```
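The negation rule of Code 12-7 can be sketched on a single review as below, assuming the review's words are already in order: a sentiment word's weight is flipped when exactly one of the (at most) two words before it is a negation word, and left unchanged when both are (double negation). `amend_weights`, the lexicon and the negation list are hypothetical stand-ins for the data files used above.

```python
def amend_weights(words, lexicon, negations):
    """Hypothetical helper: corrected weights of the sentiment words in one review."""
    amended = []
    for pos, w in enumerate(words):
        weight = lexicon.get(w)
        if weight is None:
            continue  # not a sentiment word
        window = words[max(pos - 2, 0):pos]  # up to two preceding words
        n_neg = sum(x in negations for x in window)
        # One negation flips the polarity; zero or two (double negation) keep it
        amended.append(-weight if n_neg == 1 else weight)
    return amended


lexicon = {'好': 1, '满意': 1}
negations = {'不', '没有'}
print(amend_weights(['不', '好'], lexicon, negations))            # [-1]
print(amend_weights(['没有', '不', '满意'], lexicon, negations))  # [1]
# Review score = sum of corrected weights; a score of 0 would be dropped
print(sum(amend_weights(['安装', '好', '不', '满意'], lexicon, negations)))  # 0
```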

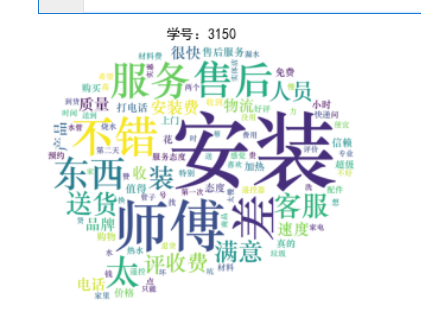

```python
# Code 12-8: Check the sentiment analysis results

# Label reviews with a sentiment value > 0 as 'pos' and < 0 as 'neg' (predicted review type)
emotional_value = emotional_value.copy()
emotional_value['a_type'] = ''
emotional_value.loc[emotional_value['amend_weight'] > 0, 'a_type'] = 'pos'
emotional_value.loc[emotional_value['amend_weight'] < 0, 'a_type'] = 'neg'

# Compare the predicted labels with the original review types
result = emotional_value.merge(word, on='index_content', how='left')

result = result[['index_content', 'content_type', 'a_type']].drop_duplicates()
confusion_matrix = pd.crosstab(result['content_type'], result['a_type'],
                               margins=True)  # cross table
# Accuracy = correct predictions / total; rows and columns are ordered neg, pos, All,
# so these positions assume both classes occur among actual and predicted labels
accuracy = (confusion_matrix.iat[0, 0] + confusion_matrix.iat[1, 1]) / confusion_matrix.iat[2, 2]
print(confusion_matrix)
print('accuracy:', accuracy)

# Extract the words of the positive and negative reviews
ind_pos = emotional_value.loc[emotional_value['a_type'] == 'pos', 'index_content']
ind_neg = emotional_value.loc[emotional_value['a_type'] == 'neg', 'index_content']
posdata = word[word['index_content'].isin(ind_pos)]
negdata = word[word['index_content'].isin(ind_neg)]

# Draw the word clouds
import matplotlib.pyplot as plt
from wordcloud import WordCloud

# Fix Chinese character display
plt.rcParams['font.sans-serif'] = ['SimHei']
plt.rcParams['axes.unicode_minus'] = False

# Word cloud of the positive reviews
freq_pos = posdata.groupby(by=['word'])['word'].count()
freq_pos = freq_pos.sort_values(ascending=False)
background_image = plt.imread('pl.jpg')
wordcloud = WordCloud(font_path=r"C:\Windows\Fonts\STZHONGS.ttf",
                      max_words=100,
                      background_color='white',
                      mask=background_image)
pos_wordcloud = wordcloud.fit_words(freq_pos.to_dict())
plt.imshow(pos_wordcloud)
plt.axis('off')
plt.show()

# Word cloud of the negative reviews
freq_neg = negdata.groupby(by=['word'])['word'].count()
freq_neg = freq_neg.sort_values(ascending=False)
neg_wordcloud = wordcloud.fit_words(freq_neg.to_dict())
plt.imshow(neg_wordcloud)
plt.title('学号:3150')  # "Student ID: 3150"
plt.axis('off')
plt.show()

# Write the results out, one word per row
posdata.to_csv("posdata.csv", index=False, encoding='utf-8')
negdata.to_csv("negdata.csv", index=False, encoding='utf-8')
```
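The accuracy expression in Code 12-8 is the number of correctly labelled reviews (the diagonal of the cross table) divided by the total. The sketch below computes the same quantity from label pairs without depending on the row and column order of `pd.crosstab`; `confusion_and_accuracy` and the labels are illustrative only.

```python
from collections import Counter


def confusion_and_accuracy(actual, predicted):
    """Count (actual, predicted) label pairs and return them with the accuracy."""
    counts = Counter(zip(actual, predicted))  # like pd.crosstab without margins
    correct = sum(n for (a, p), n in counts.items() if a == p)  # the diagonal
    return counts, correct / len(actual)


actual = ['pos', 'pos', 'neg', 'neg', 'pos']
predicted = ['pos', 'neg', 'neg', 'neg', 'pos']
counts, acc = confusion_and_accuracy(actual, predicted)
print(counts[('pos', 'neg')], acc)  # 1 0.8
```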

```python
# Code 12-9: Build the dictionaries and corpora

import pandas as pd
import numpy as np
import re
import itertools
import matplotlib.pyplot as plt
from gensim import corpora, models

# Load the data produced by the sentiment analysis
posdata = pd.read_csv("posdata.csv", encoding='utf-8')
negdata = pd.read_csv("negdata.csv", encoding='utf-8')

# Build the dictionaries (each word is treated as a one-word document)
pos_dict = corpora.Dictionary([[i] for i in posdata['word']])  # positive
neg_dict = corpora.Dictionary([[i] for i in negdata['word']])  # negative

# Build the bag-of-words corpora
pos_corpus = [pos_dict.doc2bow([i]) for i in posdata['word']]  # positive
neg_corpus = [neg_dict.doc2bow([i]) for i in negdata['word']]  # negative
```

None for now.
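In Code 12-9 every word is wrapped as its own one-word document, so each bag-of-words vector holds exactly one `(id, 1)` pair. The pure-Python stand-in below shows what a dictionary and `doc2bow` produce; `build_dictionary` and `doc2bow` here are simplified hypothetical helpers, and the ids gensim actually assigns may be numbered differently.

```python
def build_dictionary(docs):
    """Hypothetical stand-in for corpora.Dictionary: token -> integer id, first-seen order."""
    token2id = {}
    for doc in docs:
        for tok in doc:
            token2id.setdefault(tok, len(token2id))
    return token2id


def doc2bow(doc, token2id):
    """Bag of words: sorted (token_id, count) pairs; unknown tokens are ignored."""
    counts = {}
    for tok in doc:
        if tok in token2id:
            tid = token2id[tok]
            counts[tid] = counts.get(tid, 0) + 1
    return sorted(counts.items())


words = ['安装', '师傅', '安装']
docs = [[w] for w in words]  # one-word documents, as in Code 12-9
token2id = build_dictionary(docs)
corpus = [doc2bow(d, token2id) for d in docs]
print(token2id)  # {'安装': 0, '师傅': 1}
print(corpus)    # [[(0, 1)], [(1, 1)], [(0, 1)]]
```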

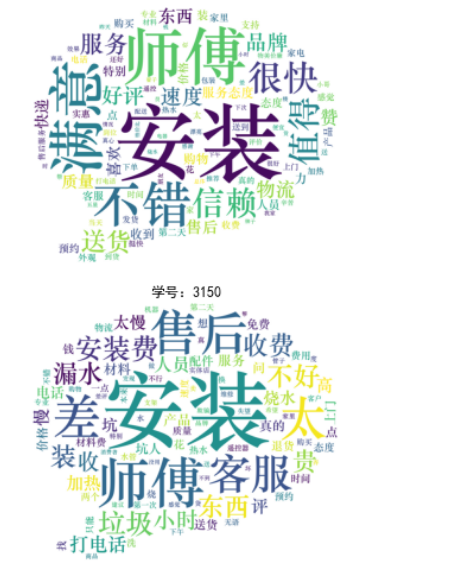

```python
# Code 12-10: Search for the optimal number of topics

# Helper functions for the topic-number search
def cos(vector1, vector2):  # cosine similarity
    dot_product = 0.0
    normA = 0.0
    normB = 0.0
    for a, b in zip(vector1, vector2):
        dot_product += a * b
        normA += a ** 2
        normB += b ** 2
    if normA == 0.0 or normB == 0.0:
        return None
    return dot_product / ((normA * normB) ** 0.5)


# Topic-number search
def lda_k(x_corpus, x_dict):
    # Mean cosine similarity per topic count; a single topic counts as 1
    mean_similarity = [1]

    # Train models with 2 to 10 topics and measure how similar their topics are
    for i in range(2, 11):
        lda = models.LdaModel(x_corpus, num_topics=i, id2word=x_dict)  # train the LDA model

        # Top 50 words of each topic, as (word, probability) pairs
        top_word = [[w for w, _ in lda.show_topic(k, topn=50)] for k in range(i)]

        # Word-count vectors over all topic words (rows: topics, columns: words)
        unique_word = set(sum(top_word, []))  # all distinct topic words
        mat = [tuple(top_w.count(k) for k in unique_word) for top_w in top_word]

        # Cosine similarity for every ordered pair of distinct topics
        p = list(itertools.permutations(range(i), 2))
        top_similarity = [cos(mat[a], mat[b]) for a, b in p]

        # Mean cosine similarity
        mean_similarity.append(sum(top_similarity) / len(p))
    return mean_similarity


# Mean topic similarity for the positive and negative reviews
pos_k = lda_k(pos_corpus, pos_dict)
neg_k = lda_k(neg_corpus, neg_dict)

# Plot mean topic similarity against the number of topics
from matplotlib.font_manager import FontProperties
font = FontProperties(size=14)
# Fix Chinese character display
plt.rcParams['font.sans-serif'] = ['SimHei']
plt.rcParams['axes.unicode_minus'] = False
fig = plt.figure(figsize=(10, 8))
ax1 = fig.add_subplot(211)
ax1.plot(range(1, 11), pos_k)  # x axis: number of topics (1 to 10)
ax1.set_xlabel('正面评论LDA主题数寻优', fontproperties=font)  # "LDA topic-number search, positive reviews"

ax2 = fig.add_subplot(212)
ax2.plot(range(1, 11), neg_k)
ax2.set_xlabel('负面评论LDA主题数寻优', fontproperties=font)  # "LDA topic-number search, negative reviews"
plt.show()
```
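The assumption behind Code 12-10 is that a smaller mean similarity between topics indicates better-separated topics. The core computation can be sketched without gensim, starting from each topic's top-word list: build word-count vectors over the union of topic words and average the cosine similarity over all topic pairs. Unordered pairs (`combinations`) give the same mean as the ordered pairs (`permutations`) used above, because cosine similarity is symmetric. The function names and topic word lists here are made up for illustration.

```python
from itertools import combinations
from math import sqrt


def cosine(u, v):
    """Cosine similarity of two equal-length count vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = sqrt(sum(a * a for a in u) * sum(b * b for b in v))
    return dot / norm if norm else 0.0


def mean_topic_similarity(topics):
    """Mean pairwise cosine similarity of topics given as lists of top words."""
    vocab = sorted({w for topic in topics for w in topic})  # union of all topic words
    vectors = [[topic.count(w) for w in vocab] for topic in topics]
    pairs = list(combinations(range(len(topics)), 2))  # each unordered pair once
    return sum(cosine(vectors[i], vectors[j]) for i, j in pairs) / len(pairs)


topics = [['安装', '师傅'], ['安装', '加热'], ['价格', '外观']]
print(mean_topic_similarity(topics))  # about 0.167: only the first two topics overlap
```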

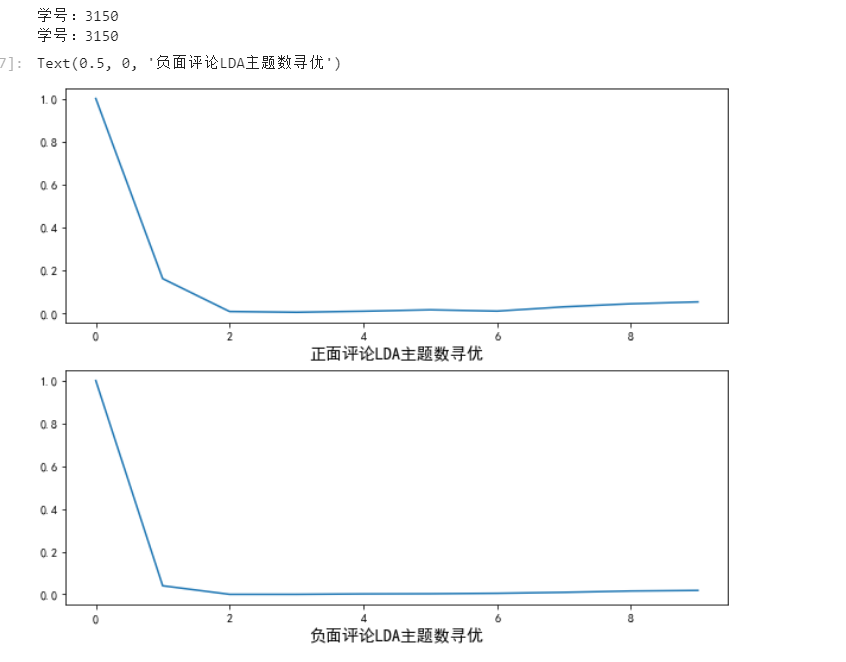

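```python
# Code 12-11: LDA topic analysis

# Train the final models with 3 topics each
pos_lda = models.LdaModel(pos_corpus, num_topics=3, id2word=pos_dict)
neg_lda = models.LdaModel(neg_corpus, num_topics=3, id2word=neg_dict)

# print() both results; a notebook cell would otherwise display only the last expression
print(pos_lda.print_topics(num_words=10))
print(neg_lda.print_topics(num_words=10))
```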
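`print_topics` returns each topic as a `(topic_id, string)` pair whose string lists weighted words in the form `weight*"word" + ...`. If the weights are needed as numbers, a small regex parser such as the hypothetical `parse_topic` below can split that string; the sample string is made up. Alternatively, `LdaModel.show_topic(topicid, topn)` returns `(word, probability)` pairs directly.

```python
import re


def parse_topic(topic_str):
    """Split a topic string like '0.050*"安装" + 0.030*"师傅"' into (word, weight) pairs."""
    return [(w, float(p)) for p, w in re.findall(r'([\d.]+)\*"([^"]*)"', topic_str)]


print(parse_topic('0.050*"安装" + 0.030*"师傅"'))  # [('安装', 0.05), ('师傅', 0.03)]
```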