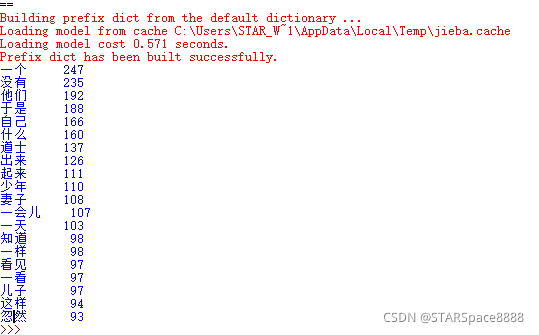

jieba 分词 聊斋

import jieba

# -*- coding: utf-8 -*-

txt = open("D:\\python\\jieba\\聊斋志异.txt", "r", encoding='gb18030').read()

words = jieba.lcut(txt)

counts = {}

for word in words:

if len(word) == 1: # 单个词语不计算在内

continue

else:

counts[word] = counts.get(word, 0) + 1 # 遍历所有词语,每出现一次其对应的值加 1

items = list(counts.items())#将键值对转换成列表

items.sort(key=lambda x: x[1], reverse=True) # 根据词语出现的次数进行从大到小排序

for i in range(20):

word, count = items[i]

print("{0:<5}{1:>5}".format(word, count))