Deep Learning - TensorFlow 2.2 - Multi-class Classification {8} - Multi-output Model Example - 20

```python
import tensorflow as tf
from tensorflow import keras
import matplotlib.pyplot as plt
%matplotlib inline
import numpy as np
import pathlib
import os
import random

os.environ['TF_FORCE_GPU_ALLOW_GROWTH'] = 'true'  # let TensorFlow allocate GPU memory on demand
import IPython.display as display

# tf.test.is_gpu_available() is deprecated in TF 2.x; list the visible GPUs instead
gpu_ok = len(tf.config.list_physical_devices("GPU")) > 0
print("tf version:", tf.__version__)
print("use GPU", gpu_ok)  # whether training can run on a GPU

data_dir = "F:/py/ziliao/数据集/multi-output-classification/dataset"  # dataset root
data_root = pathlib.Path(data_dir)
data_root

for item in data_root.iterdir():
    print(item)  # list every class directory

all_image_paths = list(data_root.glob("*/*"))  # glob collects every image in every class directory
image_count = len(all_image_paths)
image_count  # number of images
```

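```python
all_image_paths = [str(path) for path in all_image_paths]
random.shuffle(all_image_paths)  # shuffle the image paths once, up front

# Extract the class directory names
label_names = sorted(item.name for item in data_root.glob("*/") if item.is_dir())
label_names

# We predict both color and item type, so split each directory name on "_" and
# keep the unique values. sorted() makes the order (and therefore the indices)
# reproducible: iterating over a bare set of strings can give a different order each run.
color_label_names = sorted(set(name.split("_")[0] for name in label_names))
item_label_names = sorted(set(name.split("_")[1] for name in label_names))
color_label_names, item_label_names  # colors, item types

# Build a name -> index mapping for each task
color_label_to_index = dict((name, index) for index, name in enumerate(color_label_names))
item_label_to_index = dict((name, index) for index, name in enumerate(item_label_names))
color_label_to_index, item_label_to_index
```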

```python
# The label of each image is the name of its parent directory
all_image_labels = [pathlib.Path(path).parent.name for path in all_image_paths]
all_image_labels[:5], len(all_image_labels)

# Convert the color and item names to their indices
color_labels = [color_label_to_index[label.split("_")[0]] for label in all_image_labels]
item_labels = [item_label_to_index[label.split("_")[1]] for label in all_image_labels]
color_labels[:5], item_labels[:5]
```
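The label handling above can be reproduced in plain Python without TensorFlow. The sketch assumes directory names of the form `color_item` (for example `black_jeans`). The helpers `build_vocab` and `encode_path`, along with the sample names and paths, are hypothetical. Sorting the unique values before enumerating them keeps every name on the same index from one run to the next.

```python
from pathlib import PurePosixPath

def build_vocab(names, part):
    """Map each unique color (part=0) or item (part=1) to an index.

    sorted() fixes the order, so a given name always receives the same index.
    """
    unique = sorted(set(name.split("_")[part] for name in names))
    return {name: index for index, name in enumerate(unique)}

def encode_path(path, color_to_index, item_to_index):
    """Turn an image path into (color_index, item_index) via its parent directory name."""
    color, item = PurePosixPath(path).parent.name.split("_")
    return color_to_index[color], item_to_index[item]

# Hypothetical directory names, mirroring the color_item naming scheme
sample_label_names = ["black_jeans", "blue_dress", "blue_jeans", "red_dress"]
color_to_index = build_vocab(sample_label_names, 0)
item_to_index = build_vocab(sample_label_names, 1)
print(color_to_index)
print(item_to_index)
print(encode_path("dataset/blue_jeans/0001.jpg", color_to_index, item_to_index))
```

`PurePosixPath` is used only because the sample paths contain forward slashes. In the notebook, `pathlib.Path` already handles the dataset's own paths.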

```python
# Show a few random images together with their labels
for n in range(3):
    image_index = random.choice(range(len(all_image_paths)))
    display.display(display.Image(all_image_paths[image_index], width=100, height=100))
    print(all_image_labels[image_index])
    print()
```

Loading and formatting images

```python
img_path = all_image_paths[0]
img_path

img_raw = tf.io.read_file(img_path)  # raw bytes of the file
print(repr(img_raw)[:100] + "...")

img_tensor = tf.image.decode_image(img_raw)  # decode into a uint8 tensor
print(img_tensor.shape)
print(img_tensor.dtype)

img_tensor = tf.cast(img_tensor, tf.float32)
img_tensor = tf.image.resize(img_tensor, [224, 224])
img_final = img_tensor / 255.0  # scale to [0, 1]
print(img_final.shape)
print(img_final.numpy().min())
print(img_final.numpy().max())
```

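```python
def load_and_preporocess_image(path):
    image = tf.io.read_file(path)  # read the file
    image = tf.image.decode_jpeg(image, channels=3)  # decode the JPEG as 3-channel RGB
    image = tf.image.resize(image, [224, 224])  # resize to 224x224 (the result is float32)
    image = tf.cast(image, tf.float32)  # make sure the dtype is float32
    image = image / 255.0  # scale to [0, 1]
    image = 2 * image - 1  # shift to [-1, 1], the input range MobileNetV2 expects
    return image
```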
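Together, the two normalization steps in `load_and_preporocess_image` form the affine map x -> 2 * (x / 255) - 1. It sends pixel value 0 to -1 and 255 to 1. The plotting code below undoes it with (y + 1) / 2. A plain-Python check of that arithmetic (the helper names are hypothetical):

```python
def to_model_range(pixel):
    """Scale a pixel value from [0, 255] to [-1, 1], as load_and_preporocess_image does."""
    return 2 * (pixel / 255.0) - 1

def to_display_range(value):
    """Map a value from [-1, 1] back to [0, 1] so plt.imshow can show it."""
    return (value + 1) / 2

for pixel in (0, 127.5, 255):
    scaled = to_model_range(pixel)
    print(pixel, scaled, to_display_range(scaled))
```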

```python
image_path = all_image_paths[0]  # first image path
label = all_image_labels[0]  # its label

# Map the preprocessed image from [-1, 1] back to [0, 1] for display
plt.imshow((load_and_preporocess_image(image_path) + 1) / 2)
plt.grid(False)
plt.xlabel(label)
print()
```

```python
path_ds = tf.data.Dataset.from_tensor_slices(all_image_paths)  # dataset of image paths
AUTOTUNE = tf.data.experimental.AUTOTUNE
image_ds = path_ds.map(load_and_preporocess_image, num_parallel_calls=AUTOTUNE)  # load images in parallel

label_ds = tf.data.Dataset.from_tensor_slices((color_labels, item_labels))  # dataset of (color, item) targets
for ele in label_ds.take(3):
    print(ele[0].numpy(), ele[1].numpy())

image_label_ds = tf.data.Dataset.zip((image_ds, label_ds))  # pair each image with its two labels
image_label_ds
```

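```python
# Split into training and test sets
test_count = int(image_count * 0.2)  # hold out 20% for testing
train_count = image_count - test_count
train_data = image_label_ds.skip(test_count)  # training set: skip past the test examples
test_data = image_label_ds.take(test_count)  # test set: the first test_count examples
```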
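`take(test_count)` and `skip(test_count)` cut the same fixed-order dataset at a single index, so the two parts cannot overlap. The split is random only because the paths were shuffled once in Python before the dataset was built. The list-based sketch below mirrors that split. `split_like_take_skip` is a hypothetical helper, and the 100-element list stands in for the real dataset.

```python
def split_like_take_skip(items, test_fraction=0.2):
    """Mimic test = ds.take(n) and train = ds.skip(n) on a plain list."""
    test_count = int(len(items) * test_fraction)
    test = items[:test_count]   # like take(test_count)
    train = items[test_count:]  # like skip(test_count)
    return train, test

examples = list(range(100))  # stand-in for 100 (image, labels) pairs
train, test = split_like_take_skip(examples)
print(len(train), len(test))
print(set(train) & set(test))  # empty: no example lands in both sets
```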

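```python
BATCH_SIZE = 32  # batch size

# Shuffle the training set; repeat(-1) repeats it indefinitely.
# Note: shuffle() runs after map(), so its buffer holds decoded 224x224x3 float32
# images (about 0.6 MB each); a buffer of train_count images needs that much RAM.
train_data = train_data.shuffle(buffer_size=train_count).repeat(-1)
train_data = train_data.batch(BATCH_SIZE)
train_data = train_data.prefetch(buffer_size=AUTOTUNE)  # prepare the next batch while training
train_data

test_data = test_data.batch(BATCH_SIZE)
```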
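`shuffle(buffer_size=...)` does not permute the whole dataset in one step. It keeps a buffer of `buffer_size` elements and emits a randomly chosen one from it. The sketch below is a simplified plain-Python model of that idea (an illustration, not TensorFlow's actual code; `buffered_shuffle` is hypothetical). It shows why a buffer as large as `train_count` gives a full shuffle, while a buffer of 1 leaves the order unchanged.

```python
import random

def buffered_shuffle(items, buffer_size, seed=None):
    """Simplified model of tf.data's shuffle(buffer_size): sample from a sliding buffer."""
    rng = random.Random(seed)
    buffer, output = [], []
    for item in items:
        buffer.append(item)
        if len(buffer) == buffer_size:
            # Emit a random element of the full buffer; the next input refills the slot
            output.append(buffer.pop(rng.randrange(len(buffer))))
    while buffer:  # input exhausted: drain what is left in random order
        output.append(buffer.pop(rng.randrange(len(buffer))))
    return output

data = list(range(10))
print(buffered_shuffle(data, buffer_size=1, seed=0))   # buffer of 1: original order
print(buffered_shuffle(data, buffer_size=10, seed=0))  # buffer covers everything: full shuffle
```

With a small buffer, an element can move forward in the stream by at most `buffer_size - 1` positions. That is why the notebook sizes the buffer to the whole training set.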

Building the model

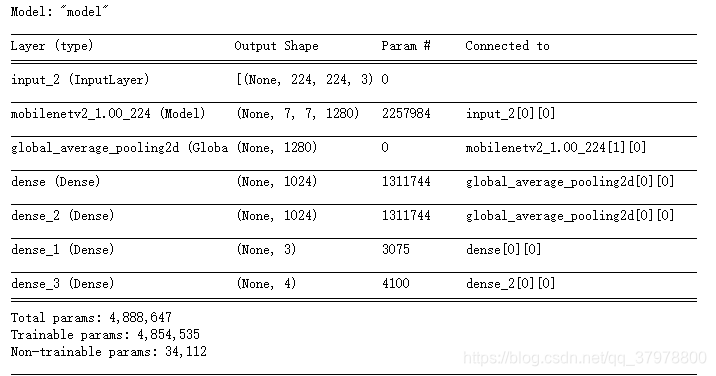

```python
# MobileNetV2 backbone with ImageNet weights (the default), without its classification head
mobile_net = tf.keras.applications.MobileNetV2(input_shape=(224, 224, 3),
                                               include_top=False)

inputs = tf.keras.Input(shape=(224, 224, 3))
x = mobile_net(inputs)
x = tf.keras.layers.GlobalAveragePooling2D()(x)  # one feature vector per image

# Color head
x1 = tf.keras.layers.Dense(1024, activation="relu")(x)
out_color = tf.keras.layers.Dense(len(color_label_names),
                                  activation="softmax")(x1)

# Item-type head
x2 = tf.keras.layers.Dense(1024, activation="relu")(x)
out_item = tf.keras.layers.Dense(len(item_label_names),
                                 activation="softmax")(x2)

model = tf.keras.Model(inputs=inputs,
                       outputs=[out_color, out_item])
model.summary()
```
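`GlobalAveragePooling2D` collapses the backbone's feature map into a single vector by averaging each channel over every spatial position. For a 224x224 input, MobileNetV2 without its top produces a 7x7x1280 map, so the result is a 1280-element vector. The sketch below is a plain-Python version on a tiny nested-list feature map, assuming a channels-last layout `[height][width][channels]`. The function name is hypothetical.

```python
def global_average_pool_2d(feature_map):
    """Average each channel over all (row, column) positions of a [H][W][C] nested list."""
    height = len(feature_map)
    width = len(feature_map[0])
    channels = len(feature_map[0][0])
    sums = [0.0] * channels
    for row in feature_map:
        for pixel in row:
            for c, value in enumerate(pixel):
                sums[c] += value
    return [s / (height * width) for s in sums]

# A 2x2 feature map with 3 channels
fmap = [
    [[1.0, 0.0, 4.0], [3.0, 2.0, 4.0]],
    [[5.0, 4.0, 4.0], [7.0, 6.0, 4.0]],
]
print(global_average_pool_2d(fmap))
```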

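```python
# Compile: the single loss and metric given here are applied to each of the two outputs
model.compile(optimizer="adam",
              loss="sparse_categorical_crossentropy",
              metrics=["acc"])

train_steps = train_count // BATCH_SIZE
test_steps = test_count // BATCH_SIZE
```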
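When a multi-output model is given one loss string, Keras applies that loss to each head. It then minimizes the weighted sum of the per-output losses, and the `loss_weights` default to 1. The sketch below computes that quantity by hand for one image. The function names are hypothetical, and the probability vectors are made-up numbers, not model output.

```python
import math

def sparse_categorical_crossentropy(probs, label):
    """Negative log of the probability assigned to the true class index."""
    return -math.log(probs[label])

def multi_output_loss(color_probs, color_label, item_probs, item_label,
                      color_weight=1.0, item_weight=1.0):
    """Weighted sum of the two heads' losses (weights of 1.0 mirror the Keras default)."""
    return (color_weight * sparse_categorical_crossentropy(color_probs, color_label)
            + item_weight * sparse_categorical_crossentropy(item_probs, item_label))

# Made-up softmax outputs for one image: 3 colors, 2 item types
color_probs = [0.7, 0.2, 0.1]
item_probs = [0.4, 0.6]
loss = multi_output_loss(color_probs, 0, item_probs, 1)
print(round(loss, 4))
```

During training Keras averages this over the batch. It also reports each head's loss separately, alongside the total.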

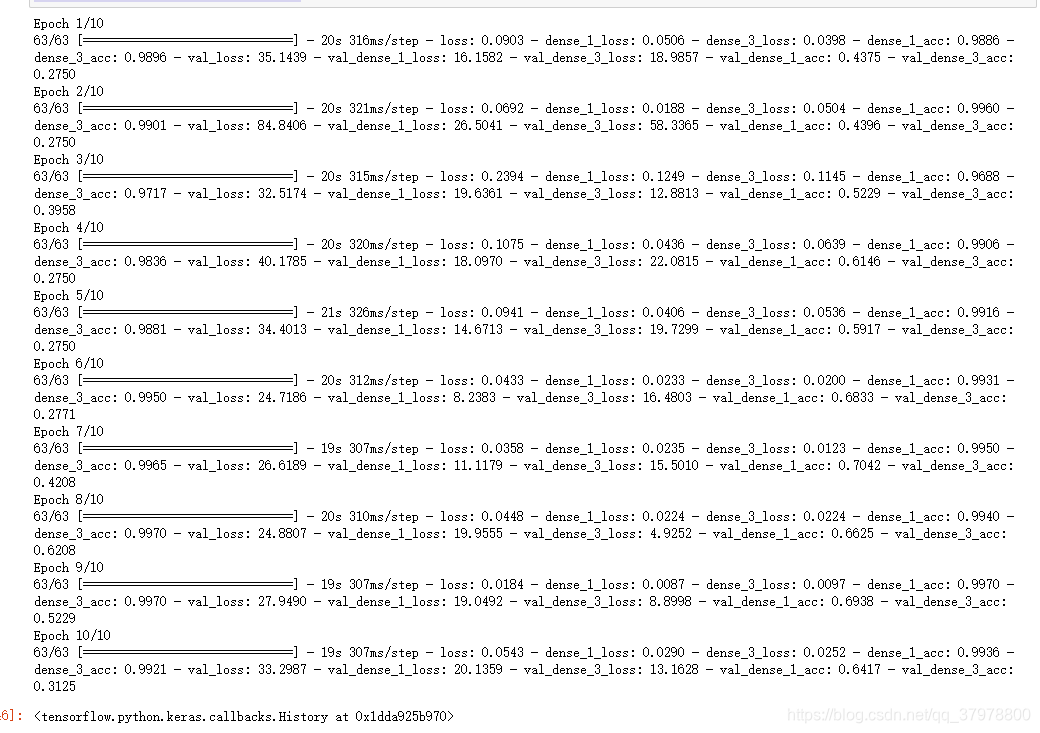

```python
model.fit(train_data,
          epochs=10,
          steps_per_epoch=train_steps,  # needed because train_data repeats forever
          validation_data=test_data,
          validation_steps=test_steps)
```
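After training, `model.predict` on this two-output model returns one array per output, in the order `[out_color, out_item]`. To turn one row of each back into names, take the argmax and look it up in the inverse of the index dictionaries built earlier. The sketch below uses plain lists and a hypothetical `decode_prediction` helper. The probability values and the two sample dictionaries are illustrative, not real model output.

```python
def decode_prediction(color_probs, item_probs, color_vocab, item_vocab):
    """Map one image's two softmax vectors to (color_name, item_name)."""
    # Invert name -> index into index -> name
    index_to_color = {index: name for name, index in color_vocab.items()}
    index_to_item = {index: name for name, index in item_vocab.items()}
    # argmax: position of the highest probability in each head
    color_index = max(range(len(color_probs)), key=color_probs.__getitem__)
    item_index = max(range(len(item_probs)), key=item_probs.__getitem__)
    return index_to_color[color_index], index_to_item[item_index]

# Illustrative stand-ins for color_label_to_index, item_label_to_index and one predict() row
sample_color_vocab = {"black": 0, "blue": 1, "red": 2}
sample_item_vocab = {"dress": 0, "jeans": 1}
print(decode_prediction([0.1, 0.8, 0.1], [0.3, 0.7], sample_color_vocab, sample_item_vocab))
```

With the NumPy arrays that `model.predict` returns, `np.argmax(probs, axis=1)` computes the same argmax for a whole batch at once.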