TCP Data Flow and Window Management (3)

Example

We create a TCP connection and cause the receiving process to pause before consuming data from the network.

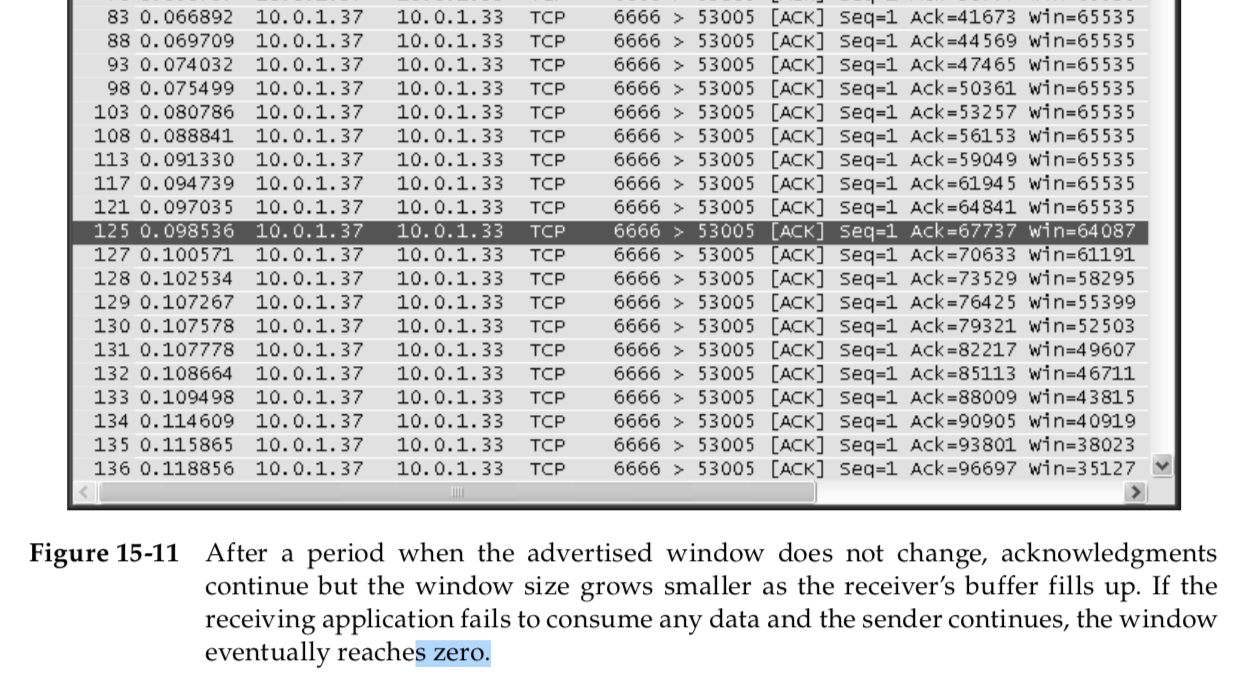

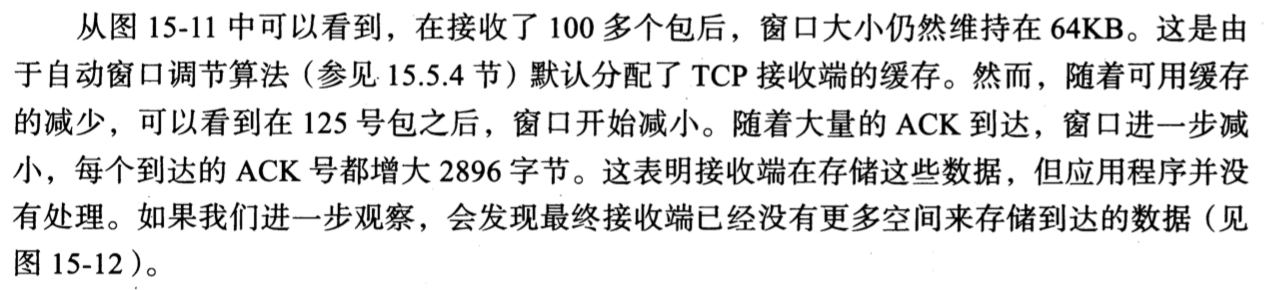

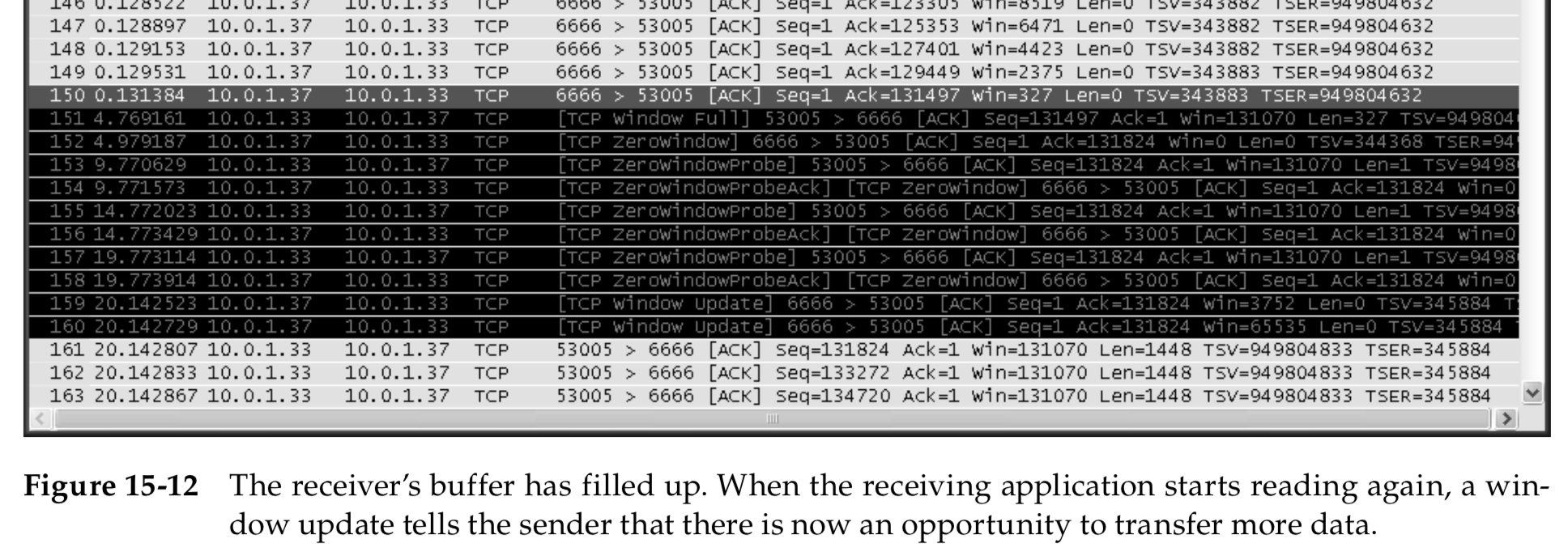

This arranges for the receiver to pause 20s prior to consuming data from the network. The result is that eventually the receiver’s advertised window begins to close, as shown with packet 125 (0.0985s) in Figure 15-11.
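Why the window closes can be sketched in a few lines. This is a minimal Python model, not derived from the trace: the application reads nothing, so every byte that arrives stays in the receive buffer, and the advertised window is just the free space left. The 8000-byte buffer and 1460-byte segments are hypothetical values, not the settings of the experiment in Figure 15-11.

```python
def advertised_windows(buffer_size: int, segment_sizes: list[int]) -> list[int]:
    """Window advertised after each arriving segment, assuming the receiving
    application reads nothing (as during the 20s pause)."""
    unread = 0          # bytes queued in the receive buffer, not yet consumed
    windows = []
    for size in segment_sizes:
        # A well-behaved sender never exceeds the offered window, so model
        # the last segment as carrying only what still fits.
        accepted = min(size, buffer_size - unread)
        unread += accepted
        windows.append(buffer_size - unread)   # free space is the new window
    return windows


# Hypothetical 8000-byte buffer receiving 1460-byte segments.
print(advertised_windows(8000, [1460] * 6))
```

Each ACK advertises a smaller window until it reaches 0, at which point the sender must stop and probe.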

There are numerous points that we can summarize using Figures 15-11 and 15-12:

- The sender does not have to transmit a full window’s worth of data.
- A single segment from the receiver acknowledges data and slides the window to the right at the same time. This is because the window advertisement is relative to the ACK number in the same segment.
- The size of the window can decrease, as shown by the series of ACKs in Figure 15-11, but the right edge of the window does not move left, so as to avoid window shrinkage.
- The receiver does not have to wait for the window to fill before sending an ACK.
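Because the window advertisement is relative to the ACK number, the right edge of the offered window is ACK + window. The minimal Python sketch below checks a series of (ACK, window) pairs for shrinkage. The pairs are hypothetical, and the sketch ignores 32-bit sequence-number wraparound.

```python
def right_edges(acks: list[tuple[int, int]]) -> list[int]:
    """Right edge of the offered window: ACK number + advertised window."""
    return [ack + win for ack, win in acks]


def window_shrinks(acks: list[tuple[int, int]]) -> bool:
    """True if any right edge lies left of the one before it (shrinkage).
    Ignores sequence-number wraparound for simplicity."""
    edges = right_edges(acks)
    return any(later < earlier for earlier, later in zip(edges, edges[1:]))


# Hypothetical ACKs: the window closes as data is acknowledged,
# but ACK + window stays fixed, so the right edge never moves left.
closing = [(1001, 4000), (2001, 3000), (3001, 2000), (4001, 1000), (5001, 0)]
print(right_edges(closing))     # [5001, 5001, 5001, 5001, 5001]
print(window_shrinks(closing))  # False
```

A pair such as (2001, 2000) following (1001, 4000) would pull the right edge back from 5001 to 4001, which is exactly the shrinkage TCP avoids.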

Silly Window Syndrome (SWS)

When silly window syndrome occurs, small data segments are exchanged across the connection instead of full-size segments [RFC0813].

This leads to undesirable inefficiency because each segment has relatively high overhead: a small number of data bytes relative to the number of bytes in the headers.

SWS can be caused by either end of a TCP connection: the receiver can advertise small windows (instead of waiting until a larger window can be advertised), and the sender can transmit small data segments (instead of waiting for additional data to send a larger segment).
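The overhead argument is plain arithmetic. This minimal sketch assumes a 20-byte IPv4 header and a 20-byte TCP header with no options, and ignores link-layer framing.

```python
IP_HEADER = 20   # IPv4 header, no options (assumption)
TCP_HEADER = 20  # TCP header, no options (assumption)


def payload_efficiency(payload: int) -> float:
    """Fraction of the IP datagram's bytes that are application data."""
    return payload / (payload + IP_HEADER + TCP_HEADER)


for size in (1, 10, 1460):
    print(f"{size:5d} data bytes -> {payload_efficiency(size):.1%} efficiency")
```

Under these assumptions a 1-byte segment is about 2.4% payload, whereas a full 1460-byte segment is about 97.3% payload.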

Correct avoidance of silly window syndrome requires a TCP to implement rules specifically for this purpose, whether operating as a sender or a receiver.

TCP never knows ahead of time how a peer TCP will behave. The following rules are applied:

- When operating as a receiver, small windows are not advertised. The receive algorithm specified by [RFC1122] is to not send a segment advertising a larger window than is currently being advertised (which can be 0) until the window can be increased by either one full-size segment (i.e., the receive MSS) or by one-half of the receiver’s buffer space, whichever is smaller. Note that there are two cases where this rule can come into play: when buffer space has become available because of an application consuming data from the network, and when TCP must respond to a window probe.
- When sending, small segments are not sent and the Nagle algorithm governs when to send. Senders avoid SWS by not transmitting a segment unless at least one of the following conditions is true:
  - A full-size (send MSS bytes) segment can be sent.
  - TCP can send at least one-half of the maximum-size window that the other end has ever advertised on this connection.
  - TCP can send everything it has to send and either (i) an ACK is not currently expected (i.e., we have no outstanding unacknowledged data) or (ii) the Nagle algorithm is disabled for this connection.
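Both sets of rules reduce to simple predicates. The Python sketch below is a simplification of the rules as stated above, not a real stack's code. The function and parameter names are hypothetical: `usable` is the part of the offered window not already filled by unacknowledged data, `queued` is the data waiting to be sent, and `max_adv_window` is the largest window the peer has ever advertised.

```python
def receiver_may_update_window(current_adv: int, free_space: int,
                               rcv_mss: int, buffer_size: int) -> bool:
    """Receiver SWS avoidance: advertise a larger window only once it can
    grow by min(one full-size segment, half the receive buffer)."""
    threshold = min(rcv_mss, buffer_size // 2)
    return free_space - current_adv >= threshold


def sender_may_send(usable: int, queued: int, snd_mss: int,
                    max_adv_window: int, unacked: int, nagle: bool) -> bool:
    """Sender SWS avoidance: send only if one of the three conditions holds."""
    n = min(usable, queued)               # bytes that could go out right now
    if n == 0:
        return False                      # nothing sendable at all
    if n >= snd_mss:                      # (1) a full-size segment
        return True
    if n >= max_adv_window / 2:           # (2) half the largest window ever offered
        return True
    if n == queued and (unacked == 0 or not nagle):  # (3) everything we have
        return True
    return False


# A closed window with 1000 bytes freed stays closed (threshold is 1460).
print(receiver_may_update_window(0, 1000, 1460, 65535))   # False
# 100 queued bytes, data in flight, Nagle on: wait for the ACK.
print(sender_may_send(10000, 100, 1460, 65535, 500, nagle=True))   # False
print(sender_may_send(10000, 100, 1460, 65535, 500, nagle=False))  # True
```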

Example

Large Buffers and Auto-Tuning

In this chapter, we have seen that an application using a small receive buffer size may be doomed to significant throughput degradation compared to other applications using TCP in similar conditions.

Even if the receiver specifies a large enough buffer, the sender might specify too small a buffer, ultimately leading to bad performance.

This problem became so important that many TCP stacks now decouple the allocation of the receive buffer from the size specified by the application.

In most cases, the size specified by the application is effectively ignored, and the operating system instead uses either a large fixed value or a dynamically calculated value.

With Linux 2.4 and later, sender-side auto-tuning is supported.

With version 2.6.7 and later, both receiver- and sender-side auto-tuning are supported.

However, auto-tuning is subject to limits placed on the buffer sizes.

The following Linux sysctl variables control the sender and receiver maximum buffer sizes.

The values after the equal sign are the default values (which may vary depending on the particular Linux distribution); they should be increased if the system is to be used in high bandwidth-delay-product environments:

In addition, the auto-tuning parameters are given by the following variables:

`net.ipv4.tcp_rmem = 4096 87380 174760`

`net.ipv4.tcp_wmem = 4096 16384 131072`

Each of these variables contains three values: the minimum, default, and maximum buffer size used by auto-tuning.
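To see why the defaults may need raising, the sketch below splits a `tcp_rmem` line into its three values and compares the maximum with a bandwidth-delay product (BDP). The 100 Mb/s, 100 ms path is a hypothetical example, and the comparison ignores any part of the buffer the kernel reserves for bookkeeping overhead.

```python
def parse_tcp_mem(line: str) -> dict[str, int]:
    """Split a 'name = min default max' sysctl line into its three values."""
    values = line.partition("=")[2]
    lo, default, hi = (int(v) for v in values.split())
    return {"min": lo, "default": default, "max": hi}


def bdp_bytes(bandwidth_bps: int, rtt_ms: int) -> int:
    """Bandwidth-delay product in bytes: data that must be in flight
    (and buffered) to keep a path of this bandwidth and RTT full."""
    return bandwidth_bps * rtt_ms // (8 * 1000)


rmem = parse_tcp_mem("net.ipv4.tcp_rmem = 4096 87380 174760")
need = bdp_bytes(100_000_000, 100)   # hypothetical 100 Mb/s path, 100 ms RTT
print(rmem["max"], need, rmem["max"] >= need)   # 174760 1250000 False
```

With these defaults, auto-tuning cannot grow the receive buffer beyond roughly 14% of what such a path needs to stay full.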

(For the difference between the TCP receive buffer and the TCP receive window, see https://serverfault.com/questions/445487/difference-between-tcp-recv-buffer-and-tcp-receive-window-size)

Example

At the receiver, we do not specify any setting for the receive buffer, but we do arrange for an initial delay of 20s before the application performs any reads.

We can see that after the initial packets, the window increases, which corresponds to the sender’s increase in the data sending rate.

We explore the sender’s data rate control when we investigate TCP congestion control in Chapter 16.

For now, we need only know that when the sender starts up, it typically starts by sending one packet and then increases the amount of outstanding data by one MSS packet for each ACK it receives that indicates progress. Thus, it typically sends two MSS-size segments for each ACK it receives.
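The "two segments per ACK" consequence can be simulated per round trip. This is a minimal sketch assuming one ACK per segment (no delayed ACKs), no loss, and no receiver-window limit; Chapter 16 covers the real congestion control details.

```python
def slow_start_rounds(rounds: int, initial_segments: int = 1) -> list[int]:
    """Segments sent in each round trip when every ACK grows the amount of
    outstanding data by one MSS (assumes one ACK per segment, no loss)."""
    sent_per_round = []
    window = initial_segments          # outstanding data allowed, in segments
    for _ in range(rounds):
        sent_per_round.append(window)
        acks = window                  # one ACK returns per segment sent
        # Each ACK frees the segment it covers AND adds one MSS of allowance,
        # so the sender puts two new segments on the wire per ACK.
        window += acks
    return sent_per_round


print(slow_start_rounds(5))   # [1, 2, 4, 8, 16]
```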

Looking at the pattern of the window advertisements (10712, 13536, 16360, 19184, . . .), we can see that the advertised window is increased by twice the MSS on each ACK, which mimics the way the sender’s congestion control scheme operates, as we shall see in Chapter 16.
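A quick check of that pattern: the successive differences are constant, and if each step is two MSS, the implied MSS follows. The MSS value printed here is inferred from the quoted numbers, not stated in the trace.

```python
windows = [10712, 13536, 16360, 19184]   # advertisements quoted above
steps = [b - a for a, b in zip(windows, windows[1:])]
print(steps)          # [2824, 2824, 2824]

mss = steps[0] // 2   # assumes each step is exactly 2 * MSS
print(mss)            # 1412
```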

Provided enough memory is available at the receiver, the advertised window is always larger than what the sender is permitted to send according to its congestion control limitations.

This is the best case: the receiver uses and advertises the minimal amount of buffer space that still keeps the sender sending as fast as possible.
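The interaction between the two limits can be written as one line: the sender may have in flight at most the smaller of its congestion window and the receiver's advertised window. The names `cwnd` and `awnd` are conventional abbreviations used here for illustration; the sketch ignores everything else a real sender checks.

```python
def usable_window(cwnd: int, awnd: int, outstanding: int) -> int:
    """Bytes the sender may still put in flight: bounded by the smaller of
    its congestion window (cwnd) and the advertised window (awnd)."""
    return max(0, min(cwnd, awnd) - outstanding)


# Advertised window larger than cwnd: congestion control is the limit.
print(usable_window(cwnd=2824, awnd=10712, outstanding=0))      # 2824
# Advertised window smaller than cwnd: the receiver is the limit.
print(usable_window(cwnd=20000, awnd=10712, outstanding=4000))  # 6712
```

When `awnd` stays above `cwnd`, as in the best case described above, the receiver's buffer never becomes the bottleneck.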

The problem of TCP applications using too-small buffers became a significant one as faster wide area Internet connections became available.