Concurrent Programming (5)

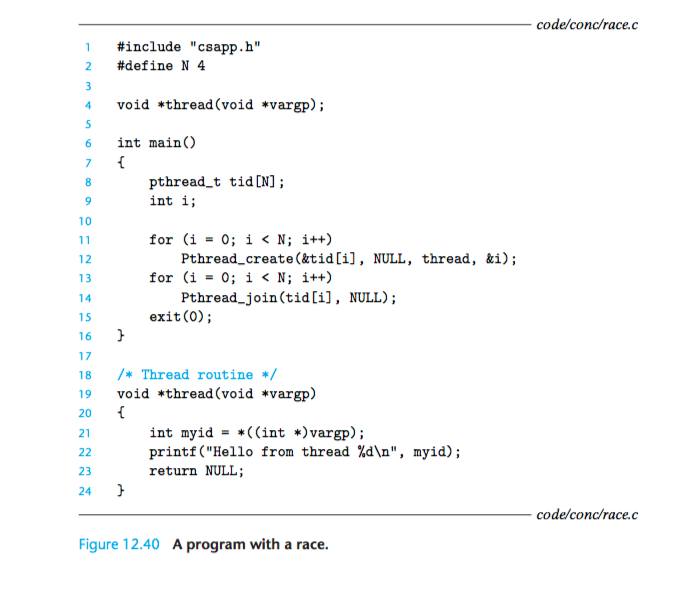

1 a thread-unsafe code version:

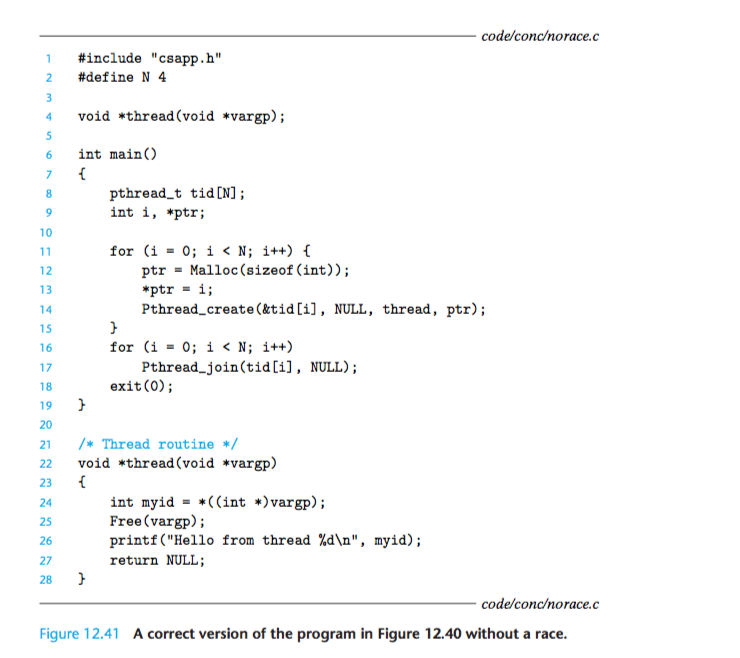

1.1 correct version 1:

1.2 correct version 2 and its pros and cons:


Another approach is to pass the integer i directly, rather than passing a pointer to i:

for (i = 0; i < N; i++) Pthread_create(&tid[i], NULL, thread, (void *)i);

In the thread routine, we cast the argument back to an int and assign it to myid:

int myid = (int) vargp;

Advantages and disadvantages:

The advantage is that it reduces overhead by eliminating the calls to malloc and free.

A significant disadvantage is that it assumes that pointers are at least as large as ints. While this assumption is true for all modern systems, it might not be true for legacy or future systems.
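A minimal sketch of the pass-by-value approach, assuming a POSIX system that provides `intptr_t` in `<stdint.h>`. Routing the cast through `intptr_t` is an addition not found in the original code; it avoids size-mismatch warnings on 64-bit platforms, and the integer-to-pointer round trip is implementation-defined, though it behaves as expected on mainstream systems. The names `seen` and `run_by_value` are hypothetical, and plain `pthread_create` stands in for the book's error-checking wrapper `Pthread_create`.

```c
#include <pthread.h>
#include <stdint.h>

#define N 4

/* seen[k] is written only by the thread whose ID is k (hypothetical helper array) */
static int seen[N];

static void *thread(void *vargp)
{
    /* Cast the pointer-sized argument back to an int; nothing is dereferenced */
    int myid = (int)(intptr_t)vargp;
    seen[myid] = myid + 1;
    return NULL;
}

/* Creates N threads, passing each its ID by value. Returns 0 on success. */
int run_by_value(void)
{
    pthread_t tid[N];
    int created = 0;

    for (int i = 0; i < N; i++) {
        /* The value of i travels inside the pointer itself, so there is no shared storage */
        if (pthread_create(&tid[i], NULL, thread, (void *)(intptr_t)i) != 0)
            break;
        created++;
    }
    for (int i = 0; i < created; i++)
        pthread_join(tid[i], NULL);
    return created == N ? 0 : -1;
}
```

Because each thread receives its own copy of the ID, there is no race on `i` and nothing to free.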

2 In Figure 12.41, we might be tempted to free the allocated memory block immediately after line 15 in the main thread, instead of freeing it in the peer thread. But this would be a bad idea. Why?

If we free the block immediately after the call to pthread_create in line 15, then we will introduce a new race, this time between the call to free in the main thread, and the assignment statement in line 25 of the thread routine.
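The safe ownership pattern can be sketched as follows. This is not the code from Figure 12.41; it is a minimal illustration under the assumption of a POSIX threads environment, with hypothetical names `peer`, `got`, and `run_with_malloc`. The main thread allocates one block per thread, and the peer thread frees it only after it has read the ID.

```c
#include <pthread.h>
#include <stdlib.h>

#define NTHREADS 4

/* got[k] is set by the thread that received ID k (hypothetical helper array) */
static int got[NTHREADS];

static void *peer(void *vargp)
{
    int myid = *(int *)vargp;  /* read the ID first ... */
    free(vargp);               /* ... and only then release the block */
    got[myid] = 1;
    return NULL;
}

/* Returns 0 if all threads were created and joined. */
int run_with_malloc(void)
{
    pthread_t tid[NTHREADS];
    int created = 0;

    for (int i = 0; i < NTHREADS; i++) {
        int *ptr = malloc(sizeof *ptr);
        if (ptr == NULL)
            break;
        *ptr = i;
        if (pthread_create(&tid[i], NULL, peer, ptr) != 0) {
            free(ptr);  /* no thread took ownership of the block */
            break;
        }
        /* Do NOT free(ptr) here: the peer may not have dereferenced it yet */
        created++;
    }
    for (int i = 0; i < created; i++)
        pthread_join(tid[i], NULL);
    return created == NTHREADS ? 0 : -1;
}
```

Handing ownership of the block to the peer thread removes the need for any synchronization between the `free` and the dereference.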

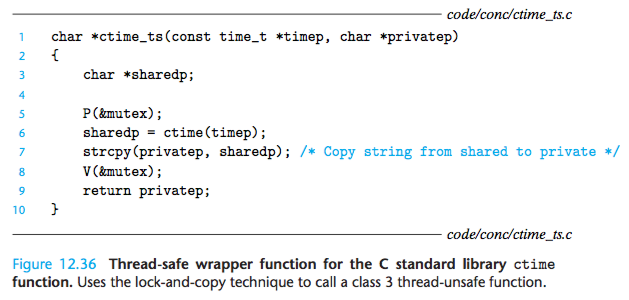

3 The ctime_ts function in Figure 12.36 is thread-safe, but not reentrant. Explain.

The ctime_ts function is not reentrant because each invocation shares the same static variable returned by the ctime function.

However, it is thread-safe because the accesses to the shared variable are protected by P and V operations, and thus are mutually exclusive.
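The lock-and-copy idea can be sketched as below. This is not the code from Figure 12.36: it assumes a POSIX system and swaps the P and V semaphore operations for a `pthread_mutex_t`, which plays the same mutual-exclusion role. The name `ctime_ts_sketch` is hypothetical, and the caller is assumed to supply a buffer of at least 26 bytes.

```c
#include <pthread.h>
#include <string.h>
#include <time.h>

/* Protects ctime's static result buffer (stands in for the P/V semaphore) */
static pthread_mutex_t ctime_mutex = PTHREAD_MUTEX_INITIALIZER;

/* privatep must point to at least 26 bytes. Returns privatep, or NULL if ctime fails. */
char *ctime_ts_sketch(const time_t *timep, char *privatep)
{
    char *sharedp;
    char *result = NULL;

    pthread_mutex_lock(&ctime_mutex);
    sharedp = ctime(timep);          /* points into a static buffer shared by all callers */
    if (sharedp != NULL) {
        strcpy(privatep, sharedp);   /* copy while still holding the lock */
        result = privatep;
    }
    pthread_mutex_unlock(&ctime_mutex);
    return result;
}
```

The function still touches the shared static buffer on every call, which is why it is not reentrant; the lock only guarantees that the touches never overlap.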

4

The solution to the first readers-writers problem in Figure 12.26 gives priority to readers, but this priority is weak in the sense that a writer leaving its critical section might restart a waiting writer instead of a waiting reader. Describe a scenario where this weak priority would allow a collection of writers to starve a reader.

Suppose that a particular semaphore implementation uses a LIFO stack of threads for each semaphore. When a thread blocks on a semaphore in a P operation, its ID is pushed onto the stack.

Similarly, the V operation pops the top thread ID from the stack and restarts that thread.

Given this stack implementation, an adversarial writer in its critical section could simply wait until another writer blocks on the semaphore before releasing the semaphore. In this scenario, a waiting reader might wait forever while two writers pass control back and forth.

Notice that although it might seem more intuitive to use a FIFO queue rather than a LIFO stack, using such a stack is not incorrect and does not violate the semantics of the P and V operations.

5

Let p denote the number of producers, c the number of consumers, and n the buffer size in units of items.

For each of the following scenarios, indicate whether the mutex semaphore in sbuf_insert and sbuf_remove is necessary or not.

A. p=1,c=1,n>1

B. p=1,c=1,n=1

C. p>1,c>1,n=1

A. p = 1, c = 1, n > 1: Yes, the mutex semaphore is necessary because the producer and consumer can concurrently access the buffer.

B. p = 1, c = 1, n = 1: No, the mutex semaphore is not necessary in this case, because a nonempty buffer is equivalent to a full buffer. When the buffer contains an item, the producer is blocked. When the buffer is empty, the consumer is blocked. So at any point in time, only a single thread can access the buffer, and thus mutual exclusion is guaranteed without using the mutex.

C. p > 1, c > 1, n = 1: No, the mutex semaphore is not necessary in this case either, by the same argument as the previous case.
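Case B can be illustrated with a single-slot buffer that uses only the two counting semaphores and no mutex. This is a sketch, not the `sbuf` package itself: it assumes a platform with working unnamed POSIX semaphores (for example Linux), and the names `slot`, `producer`, `consumer`, and `run_single_slot` are hypothetical.

```c
#include <pthread.h>
#include <semaphore.h>

#define ITEMS 1000

static int slot;            /* the one-item buffer (n = 1) */
static sem_t slots, items;  /* free slots and available items; no mutex */

static void *producer(void *arg)
{
    (void)arg;
    for (int v = 1; v <= ITEMS; v++) {
        sem_wait(&slots);   /* blocks while the buffer is full */
        slot = v;
        sem_post(&items);
    }
    return NULL;
}

static void *consumer(void *arg)
{
    long *sum = arg;
    for (int k = 0; k < ITEMS; k++) {
        sem_wait(&items);   /* blocks while the buffer is empty */
        *sum += slot;
        sem_post(&slots);
    }
    return NULL;
}

/* Runs one producer and one consumer; returns the sum consumed, or -1 on error. */
long run_single_slot(void)
{
    pthread_t p, c;
    long sum = 0;

    if (sem_init(&slots, 0, 1) != 0 || sem_init(&items, 0, 0) != 0)
        return -1;
    if (pthread_create(&p, NULL, producer, NULL) != 0)
        return -1;
    if (pthread_create(&c, NULL, consumer, &sum) != 0) {
        pthread_cancel(p);  /* sem_wait is a cancellation point */
        pthread_join(p, NULL);
        return -1;
    }
    pthread_join(p, NULL);
    pthread_join(c, NULL);
    sem_destroy(&slots);
    sem_destroy(&items);
    return sum;
}
```

With one slot, `slots` and `items` are never both positive, so at most one thread is between its `sem_wait` and `sem_post` at any time. The same reasoning covers case C, since extra producers or consumers simply queue on the same two semaphores.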

6

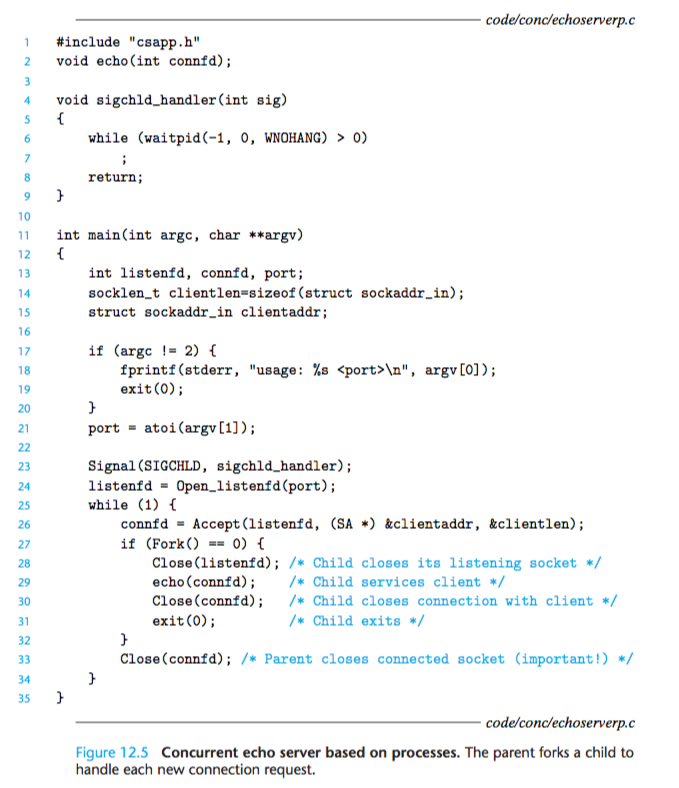

In the process-based server in Figure 12.5, we were careful to close the connected descriptor in two places: the parent and child processes.

However, in the threads-based server in Figure 12.14, we only closed the connected descriptor in one place: the peer thread. Why?

Since threads run in the same process, they all share the same descriptor table. No matter how many threads use the connected descriptor, the reference count for the connected descriptor’s file table is equal to 1. Thus, a single close operation is sufficient to free the memory resources associated with the connected descriptor when we are through with it.

7

After the parent closes the connected descriptor in line 33 of the concurrent server in Figure 12.5, the child is still able to communicate with the client using its copy of the descriptor. Why?

When the parent forks the child, the child gets a copy of the connected descriptor and the reference count for the associated file table is incremented from 1 to 2.

When the parent closes its copy of the descriptor, the reference count is decremented from 2 to 1.

Since the kernel will not close a file until the reference counter in its file table goes to 0, the child’s end of the connection stays open.
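The reference-counting behavior can be observed with a small experiment. The sketch below is not the server from Figure 12.5; it assumes a POSIX system and uses a pipe as a stand-in for the connected socket, since both are descriptors with the same sharing rules across `fork`. The name `child_keeps_descriptor` and the second pipe `go`, used only to order the events, are hypothetical.

```c
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Returns 0 if the child could still write after the parent closed its copy. */
int child_keeps_descriptor(void)
{
    int data[2], go[2];
    int ok = 1;

    if (pipe(data) < 0)
        return -1;
    if (pipe(go) < 0) {
        close(data[0]); close(data[1]);
        return -1;
    }

    pid_t pid = fork();
    if (pid < 0) {
        close(data[0]); close(data[1]); close(go[0]); close(go[1]);
        return -1;
    }
    if (pid == 0) {                       /* child */
        char c;
        close(data[0]);
        close(go[1]);
        if (read(go[0], &c, 1) != 1)      /* wait until the parent has closed its copy */
            _exit(1);
        if (write(data[1], "ok", 2) != 2) /* the child's copy is still usable */
            _exit(1);
        _exit(0);
    }

    close(data[1]);                       /* parent's copy: reference count 2 -> 1 */
    close(go[0]);
    if (write(go[1], "g", 1) != 1)        /* tell the child to proceed */
        ok = 0;
    close(go[1]);

    char buf[2] = {0, 0};
    ssize_t n = read(data[0], buf, 2);
    close(data[0]);
    waitpid(pid, NULL, 0);
    return (ok && n == 2 && buf[0] == 'o' && buf[1] == 'k') ? 0 : -1;
}
```

The child's write succeeds even though the parent closed `data[1]` first, because the kernel keeps the underlying file open until the last copy of the descriptor is closed.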

8

If we were to delete line 30 of Figure 12.5, which closes the connected descriptor, the code would still be correct, in the sense that there would be no memory leak. Why?

When a process terminates for any reason, the kernel closes all open descriptors.

Thus, the child’s copy of the connected file descriptor will be closed automatically when the child exits.
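A quick way to check this claim, sketched under the assumption of a POSIX system: the child below exits without calling `close`, and the parent still sees EOF on the pipe, which can only happen once every write end has been closed. A pipe stands in for the connected socket, and the name `exit_closes_descriptors` is hypothetical.

```c
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Returns 0 if the parent observes EOF after the child exits without closing. */
int exit_closes_descriptors(void)
{
    int fds[2];

    if (pipe(fds) < 0)
        return -1;

    pid_t pid = fork();
    if (pid < 0) {
        close(fds[0]); close(fds[1]);
        return -1;
    }
    if (pid == 0)
        _exit(0);       /* child never calls close on its copies */

    close(fds[1]);      /* now only the child's copy could keep the write end open */

    char c;
    ssize_t n = read(fds[0], &c, 1);  /* blocks until all write ends are gone */
    close(fds[0]);
    waitpid(pid, NULL, 0);
    return n == 0 ? 0 : -1;           /* 0 bytes read means EOF */
}
```

Relying on process exit is safe for a short-lived child, but an explicit `close` remains good practice in long-running processes.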

9

In most Unix systems, typing ctrl-d indicates EOF on standard input.

What happens if you type ctrl-d to the program in Figure 12.6 while it is blocked in the call to select?

Recall that a descriptor is ready for reading if a request to read 1 byte from that descriptor would not block.

If EOF becomes true on a descriptor, then the descriptor is ready for reading because the read operation will return immediately with a zero return code indicating EOF.

Thus, typing ctrl-d causes the select function to return with descriptor 0 in the ready set.
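The same behavior can be reproduced without a terminal. The sketch below is not the program from Figure 12.6; it assumes a POSIX system and uses the read end of a pipe with no writers as a stand-in for standard input after ctrl-d. The name `eof_is_readable` and the one-second timeout are hypothetical additions so the demo cannot hang.

```c
#include <sys/select.h>
#include <sys/time.h>
#include <sys/types.h>
#include <unistd.h>

/* Returns 0 if select reports an EOF descriptor as ready and read returns 0. */
int eof_is_readable(void)
{
    int fds[2];

    if (pipe(fds) < 0)
        return -1;
    close(fds[1]);                   /* no writers left: the read end is at EOF */

    fd_set ready;
    FD_ZERO(&ready);
    FD_SET(fds[0], &ready);
    struct timeval tv = {1, 0};      /* safety timeout, not needed in the normal case */

    int n = select(fds[0] + 1, &ready, NULL, NULL, &tv);

    ssize_t r = -1;
    if (n == 1 && FD_ISSET(fds[0], &ready)) {
        char c;
        r = read(fds[0], &c, 1);     /* does not block; returns 0 to signal EOF */
    }
    close(fds[0]);
    return (n == 1 && r == 0) ? 0 : -1;
}
```

A server loop built on `select` therefore has to treat a zero return from `read` as end of input rather than as data.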