Understanding Nginx Load Balancing

Preparation

Servers

Set up three virtual machines for testing:

| Name | IP | Services |
|---|---|---|
| node01 | 192.168.198.131 | Nginx, mock service (port 8080) |
| node02 | 192.168.198.130 | Mock service (port 8080) |
| node03 | 192.168.198.132 | Mock service (port 8080) |

Set the hostname and the hosts entry:

```shell
$ vim /etc/hosts
192.168.198.131 node01

$ vim /etc/hostname
node01

$ reboot
## Do the same on the other two machines, then reboot them so the changes take effect
```

Install Nginx on node01:

```shell
$ echo -e "deb http://nginx.org/packages/ubuntu/ $(lsb_release -cs) nginx\ndeb-src http://nginx.org/packages/ubuntu/ $(lsb_release -cs) nginx" | sudo tee /etc/apt/sources.list.d/nginx.list
$ wget -O- http://nginx.org/keys/nginx_signing.key | sudo apt-key add -
$ sudo apt-get update
$ sudo apt-get install nginx
```

Mock service

Use https://start.spring.io to quickly generate a Spring Boot project with the Web module, then add the following controller:

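```java
import java.net.InetAddress;
import java.net.UnknownHostException;

import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
@RequestMapping("/test")
public class DemoController {

    // Returns "hostname/IP" of the machine that handled the request,
    // so we can tell which backend Nginx forwarded it to
    @GetMapping
    public String test() throws UnknownHostException {
        return "this is : " + InetAddress.getLocalHost();
    }
}
```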

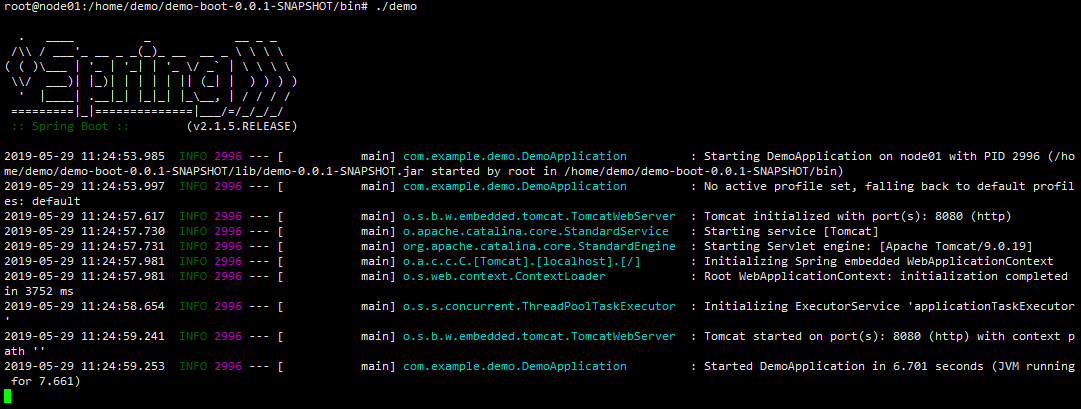

Package the project and deploy it to all three servers (I used Gradle's The Application Plugin), then start it.

Test each instance directly:

```shell
## node01
$ curl 192.168.198.131:8080/test
...
this is : node01/192.168.198.131

## node02
$ curl 192.168.198.130:8080/test
...
this is : node02/192.168.198.130

## node03
$ curl 192.168.198.132:8080/test
...
this is : node03/192.168.198.132
```

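Nginx Load Balancing

Round Robin

Requests are distributed evenly across the servers in turn, and server weights can be set. This is Nginx's default method, so the upstream block needs no extra directive. Note that demo.conf only takes effect if it is included from the http block of /etc/nginx/nginx.conf; the default nginx.conf from the nginx.org packages includes /etc/nginx/conf.d/*.conf.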

```shell
$ vim /etc/nginx/conf/demo.conf
```

```nginx
upstream backend {
    server 192.168.198.131:8080;
    server 192.168.198.132:8080;
    server 192.168.198.130:8080;
}

server {
    listen 80;
    server_name 192.168.198.131;

    location / {
        proxy_pass http://backend;
    }
}
```

```shell
$ service nginx restart
```

Test it:

```shell
$ curl 192.168.198.131/test
...
this is : node01/192.168.198.131 # node01

$ curl 192.168.198.131/test
...
this is : node03/192.168.198.132 # node03

$ curl 192.168.198.131/test
...
this is : node02/192.168.198.130 # node02

$ curl 192.168.198.131/test
...
this is : node01/192.168.198.131 # node01
```

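As you can see, requests go to the servers in the order they are listed in the upstream block (node01, node03, node02) and then start over, so each server receives the same share of requests.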
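To make the rotation concrete, here is a minimal Java sketch of plain round robin, assuming equal weights and a single thread choosing servers. The class name `RoundRobinSketch` is hypothetical and this is not Nginx's code; it simply cycles through the list in the same order as the upstream block.

```java
import java.util.List;

// Hypothetical minimal round-robin picker (single-threaded, equal weights)
class RoundRobinSketch {
    private final List<String> servers;
    private int next = 0; // index of the server that receives the next request

    RoundRobinSketch(List<String> servers) {
        this.servers = servers;
    }

    String pick() {
        String chosen = servers.get(next);
        next = (next + 1) % servers.size(); // wrap around after the last server
        return chosen;
    }

    public static void main(String[] args) {
        // Same order as the upstream block: .131 (node01), .132 (node03), .130 (node02)
        RoundRobinSketch rr = new RoundRobinSketch(List.of("node01", "node03", "node02"));
        for (int i = 0; i < 4; i++) {
            System.out.println(rr.pick());
        }
    }
}
```

Four picks give node01, node03, node02, node01, the same sequence as the curl output above.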

Least Connections

Each request goes to the server with the fewest active connections, and server weights can be set.

```shell
$ vim /etc/nginx/conf/demo.conf
```

```nginx
upstream backend {
    least_conn;
    server 192.168.198.131:8080;
    server 192.168.198.132:8080;
    server 192.168.198.130:8080;
}
```

```shell
$ service nginx restart
```

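I'm not sure how to simulate a scenario in which one server clearly has the fewest connections, so this method is not tested here.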
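Even without a live test, the selection rule itself can be sketched. The Java below is a minimal, single-threaded illustration that assumes the balancer tracks in-flight requests per server. Ties go to the first server in list order, which is a simplification: according to the Nginx docs, least_conn also takes weights into account and breaks ties with weighted round robin. The class and method names are hypothetical. One untested idea for observing the real behavior is to make one backend respond slowly, so that its connections stay open longer than the others'.

```java
// Hypothetical least-connections picker; single-threaded, weights ignored
class LeastConnSketch {
    private final String[] servers;
    private final int[] active; // in-flight requests per server

    LeastConnSketch(String... servers) {
        this.servers = servers;
        this.active = new int[servers.length];
    }

    // Chooses the server with the fewest active connections and counts the new one.
    // Ties go to the first such server in list order (a simplification).
    int acquire() {
        int best = 0;
        for (int i = 1; i < servers.length; i++) {
            if (active[i] < active[best]) {
                best = i;
            }
        }
        active[best]++;
        return best;
    }

    // Called when a request on server i finishes
    void release(int i) {
        active[i]--;
    }

    String name(int i) {
        return servers[i];
    }

    public static void main(String[] args) {
        LeastConnSketch lc = new LeastConnSketch("node01", "node03", "node02");
        int a = lc.acquire(); // node01: all idle, the first server wins the tie
        int b = lc.acquire(); // node03
        int c = lc.acquire(); // node02
        lc.release(b);        // the request on node03 completes
        System.out.println(lc.name(lc.acquire())); // node03 now has the fewest connections
    }
}
```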

IP Hash

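The client's IP address determines which server a request is sent to. The hash is computed from the first three octets of an IPv4 address, or from the whole IPv6 address. This guarantees that requests from the same address go to the same server unless that server is unavailable; because only three octets are used, all IPv4 clients in the same /24 network also share a server.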

```shell
$ vim /etc/nginx/conf/demo.conf
```

```nginx
upstream backend {
    ip_hash;
    server 192.168.198.131:8080;
    server 192.168.198.132:8080;
    server 192.168.198.130:8080;
}
```

```shell
$ service nginx restart
```

Test it:

```shell
$ curl 192.168.198.131/test
...
this is : node01/192.168.198.131

$ curl 192.168.198.131/test
...
this is : node01/192.168.198.131

$ curl 192.168.198.131/test
...
this is : node01/192.168.198.131
```

All requests go to node01.

Next, stop the mock service on node01 (Nginx itself keeps running there) and see what happens:

```shell
$ ps -ef | grep demo
root 3343 1764 0 11:52 pts/0 00:00:23 java -jar /home/demo/demo-boot-0.0.1-SNAPSHOT/lib/demo-0.0.1-SNAPSHOT.jar
root 4529 1764 0 13:11 pts/0 00:00:00 grep --color=auto demo

$ kill -9 3343

$ ps -ef | grep demo
root 4529 1764 0 13:11 pts/0 00:00:00 grep --color=auto demo

$ curl 192.168.198.131/test
...
this is : node03/192.168.198.132

$ curl 192.168.198.131/test
...
this is : node03/192.168.198.132

$ curl 192.168.198.131/test
...
this is : node03/192.168.198.132
```

Because the service on node01 is no longer available, the requests now all go to node03.
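A minimal Java sketch of the idea, assuming an IPv4 client address: only the first three octets go into the hash, and if the chosen server is marked unavailable, the next server in the list is tried. Both the hash function (`String.hashCode`) and the "try the next one" fallback are assumptions for illustration. Nginx computes its own hash and handles unavailable servers differently, so this sketch will not necessarily pick the same nodes as the test above.

```java
import java.util.List;
import java.util.Set;

// Hypothetical IP-hash picker; hash function and fallback are illustrative assumptions
class IpHashSketch {
    static String pick(String clientIp, List<String> servers, Set<String> down) {
        String[] octets = clientIp.split("\\.");
        // Only the /24 prefix is hashed, so 192.168.198.1 and 192.168.198.200 share a server
        String key = octets[0] + "." + octets[1] + "." + octets[2];
        int start = Math.floorMod(key.hashCode(), servers.size());
        // If the chosen server is down, walk forward through the list
        for (int i = 0; i < servers.size(); i++) {
            String candidate = servers.get((start + i) % servers.size());
            if (!down.contains(candidate)) {
                return candidate;
            }
        }
        throw new IllegalStateException("no backend available");
    }

    public static void main(String[] args) {
        List<String> servers = List.of("192.168.198.131:8080", "192.168.198.132:8080", "192.168.198.130:8080");
        String first = pick("192.168.198.1", servers, Set.of());
        System.out.println(first);
        // Same /24 prefix, same server
        System.out.println(pick("192.168.198.200", servers, Set.of()));
        // Mark that server as down: the client is moved to another one
        System.out.println(pick("192.168.198.1", servers, Set.of(first)));
    }
}
```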

Generic Hash

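Similar to IP Hash above, generic hash computes the hash from a user-defined key, which can be a text string, a variable, or a combination of them. For example, the key can be the remote address (`$remote_addr`):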

```shell
$ vim /etc/nginx/conf/demo.conf
```

```nginx
upstream backend {
    hash $remote_addr consistent;
    server 192.168.198.131:8080;
    server 192.168.198.132:8080;
    server 192.168.198.130:8080;
}
```

```shell
$ service nginx restart
```

Test it:

```shell
$ curl 192.168.198.131/test
...
this is : node02/192.168.198.130

$ curl 192.168.198.131/test
...
this is : node02/192.168.198.130

$ curl 192.168.198.131/test
...
this is : node02/192.168.198.130
```

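All requests go to node02.

👉 The `consistent` parameter above is optional. If it is set, Nginx uses the ketama consistent hashing method, so that only a few keys are remapped to a different server when a server is added to or removed from the group.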

For more on consistent hashing, see my other post: Understanding the Consistent Hashing Algorithm.
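A minimal Java sketch of a ketama-style ring, for illustration only: each server is placed on the ring at several points, and a key goes to the first point at or after its own hash, wrapping around at the end. The CRC32 hash, the `#i` suffix used to name virtual points and the 160 points per server are assumptions of this sketch, not details taken from Nginx. The property it demonstrates is the one that matters: when a server is removed, only the keys that were on that server move.

```java
import java.nio.charset.StandardCharsets;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.zip.CRC32;

// Hypothetical ketama-style consistent hash ring (illustration only)
class ConsistentHashSketch {
    static final int POINTS_PER_SERVER = 160; // assumed number of virtual points

    // ring position -> server
    private final TreeMap<Long, String> ring = new TreeMap<>();

    ConsistentHashSketch(List<String> servers) {
        for (String server : servers) {
            for (int i = 0; i < POINTS_PER_SERVER; i++) {
                ring.put(hash(server + "#" + i), server);
            }
        }
    }

    static long hash(String s) {
        CRC32 crc = new CRC32();
        crc.update(s.getBytes(StandardCharsets.UTF_8));
        return crc.getValue(); // unsigned 32-bit value stored in a long
    }

    // First point at or after the key's hash; wrap to the start of the ring if there is none
    String pick(String key) {
        Map.Entry<Long, String> e = ring.ceilingEntry(hash(key));
        return (e != null ? e : ring.firstEntry()).getValue();
    }

    public static void main(String[] args) {
        List<String> all = List.of("192.168.198.131:8080", "192.168.198.132:8080", "192.168.198.130:8080");
        ConsistentHashSketch before = new ConsistentHashSketch(all);
        ConsistentHashSketch after = new ConsistentHashSketch(all.subList(1, all.size())); // .131 removed

        int moved = 0;
        for (int i = 0; i < 1000; i++) {
            String key = "client-" + i; // hypothetical hash keys
            String was = before.pick(key);
            String now = after.pick(key);
            if (!was.equals(now)) {
                moved++;
                // Only keys that lived on the removed server should move
                if (!was.equals("192.168.198.131:8080")) {
                    throw new AssertionError("unexpected move for " + key);
                }
            }
        }
        System.out.println(moved + " of 1000 keys moved");
    }
}
```

With plain `hash key % n` instead, removing one of three servers would typically remap most keys, which is why the `consistent` parameter matters when servers come and go.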

Random

Each request is sent to a randomly selected server, and server weights can be set.

```shell
$ vim /etc/nginx/conf/demo.conf
```

```nginx
upstream backend {
    random;
    server 192.168.198.131:8080;
    server 192.168.198.132:8080;
    server 192.168.198.130:8080;
}
```

```shell
$ service nginx restart
```

Test it:

```shell
$ curl 192.168.198.131/test
...
this is : node03/192.168.198.132

$ curl 192.168.198.131/test
...
this is : node01/192.168.198.131

$ curl 192.168.198.131/test
...
this is : node02/192.168.198.130

$ curl 192.168.198.131/test
...
this is : node01/192.168.198.131
```

The requests are distributed randomly across the three servers.
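Weighted random selection can be sketched in a few lines of Java, assuming integer weights like those set with Nginx's `weight=` parameter (all 1 in the configuration above). Each server is chosen with probability weight / total weight. The class name is hypothetical, and this illustrates the technique rather than Nginx's implementation.

```java
import java.util.Random;

// Hypothetical weighted random picker
class WeightedRandomSketch {
    // Returns index i with probability weights[i] / sum(weights).
    // Weights must be non-negative with a positive sum.
    static int pick(int[] weights, Random rnd) {
        int total = 0;
        for (int w : weights) {
            total += w;
        }
        int r = rnd.nextInt(total); // uniform in [0, total)
        for (int i = 0; i < weights.length; i++) {
            if (r < weights[i]) {
                return i;
            }
            r -= weights[i];
        }
        throw new IllegalStateException("unreachable when weights are valid");
    }

    public static void main(String[] args) {
        String[] servers = {"node01", "node03", "node02"};
        int[] weights = {1, 1, 1}; // default weight=1, as in the config above
        Random rnd = new Random();
        for (int i = 0; i < 4; i++) {
            System.out.println(servers[pick(weights, rnd)]);
        }
    }
}
```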

Weights

Besides choosing a load-balancing method, we can also assign weights to servers. The default weight is 1.

```shell
$ vim /etc/nginx/conf/demo.conf
```

```nginx
upstream backend {
    server 192.168.198.131:8080 weight=5;
    server 192.168.198.132:8080 weight=10;
    server 192.168.198.130:8080;
}
```

```shell
$ service nginx restart
```

Test it:

```shell
$ curl 192.168.198.131/test
...
this is : node03/192.168.198.132

$ curl 192.168.198.131/test
...
this is : node01/192.168.198.131

$ curl 192.168.198.131/test
...
this is : node03/192.168.198.132

$ curl 192.168.198.131/test
...
this is : node03/192.168.198.132

$ curl 192.168.198.131/test
...
this is : node01/192.168.198.131
```

Of these 5 requests, node03 (weight=10) handled 3, node01 (weight=5) handled 2, and node02 (weight=1) handled none.

In theory, with this configuration, out of every 16 requests node03 should receive 10, node01 5, and node02 1.
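The 10/5/1 split, and even the order of the five responses above, can be reproduced with the "smooth weighted round-robin" scheme, which to my knowledge is what Nginx uses for weighted round robin. Below is a minimal Java sketch under that assumption; the class name is hypothetical and it leaves out the failure handling a real balancer needs. On each request, every server's current weight grows by its configured weight, the server with the highest current weight wins, and the winner's current weight is reduced by the total weight.

```java
// Hypothetical sketch of smooth weighted round robin (no failure handling)
class SmoothWrrSketch {
    private final String[] names;
    private final int[] weights;
    private final int[] current; // running "current weight" per server
    private final int total;     // sum of all configured weights

    SmoothWrrSketch(String[] names, int[] weights) {
        this.names = names;
        this.weights = weights;
        this.current = new int[names.length];
        int sum = 0;
        for (int w : weights) {
            sum += w;
        }
        this.total = sum;
    }

    String pick() {
        int best = -1;
        for (int i = 0; i < names.length; i++) {
            current[i] += weights[i]; // every server gains its configured weight
            if (best == -1 || current[i] > current[best]) {
                best = i; // strictly greater, so ties go to the earlier server
            }
        }
        current[best] -= total; // the winner gives back the total weight
        return names[best];
    }

    public static void main(String[] args) {
        // Same order and weights as the upstream block: .131 (node01), .132 (node03), .130 (node02)
        SmoothWrrSketch wrr = new SmoothWrrSketch(
                new String[] {"node01", "node03", "node02"}, new int[] {5, 10, 1});
        StringBuilder out = new StringBuilder();
        for (int i = 0; i < 16; i++) {
            out.append(wrr.pick()).append(' ');
        }
        System.out.println(out.toString().trim());
    }
}
```

Tracing this sketch by hand, the first five picks are node03, node01, node03, node03, node01, matching the output above, and 16 picks give node03 10 times, node01 5 times and node02 once. The "smooth" variant interleaves the servers instead of sending ten consecutive requests to node03.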