scrapy爬虫小案例2(腾讯招聘案例-下载多页问题)

爬虫四步:

- 新建项目 (scrapy startproject xxx):新建一个新的爬虫项目

- 明确目标 (编写items.py):明确你想要抓取的目标

- 制作爬虫 (spiders/xxspider.py):制作爬虫开始爬取网页

- 存储内容 (pipelines.py):设计管道存储爬取内容

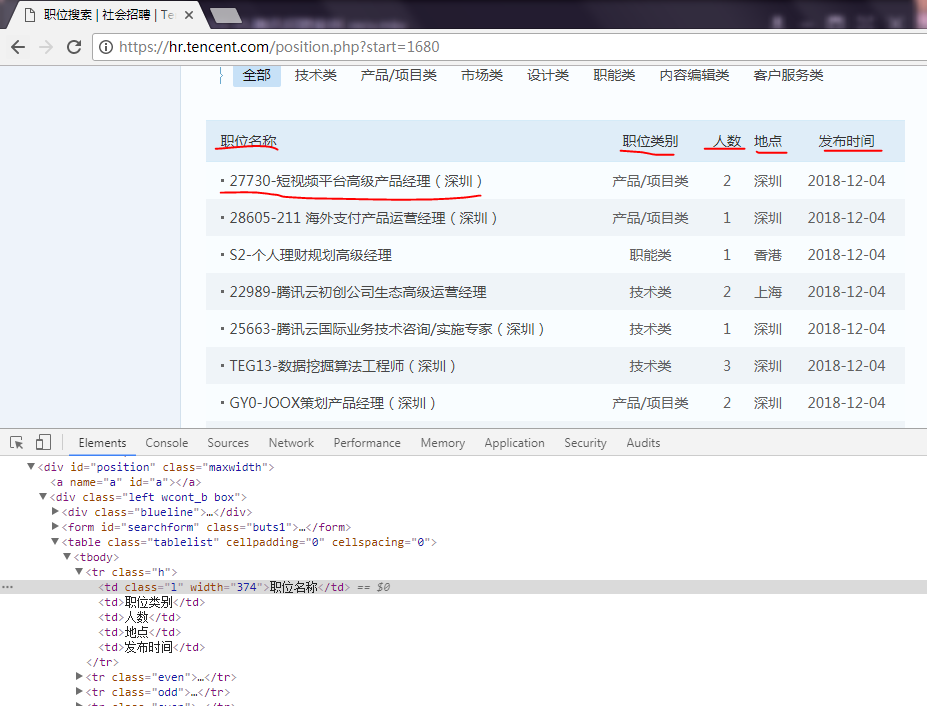

爬取内容

1.创建名为tencent的项目,写items.py文件

# -*- coding: utf-8 -*- # Define here the models for your scraped items # # See documentation in: # https://doc.scrapy.org/en/latest/topics/items.html import scrapy class TencentItem(scrapy.Item): # define the fields for your item here like: # 职位名称 position_name = scrapy.Field() # 连接 position_link = scrapy.Field() # 职位类别 position_type = scrapy.Field() # 招聘人数 people_num = scrapy.Field() # 工作地点 work_location = scrapy.Field() # 发布时间 publish_time = scrapy.Field()

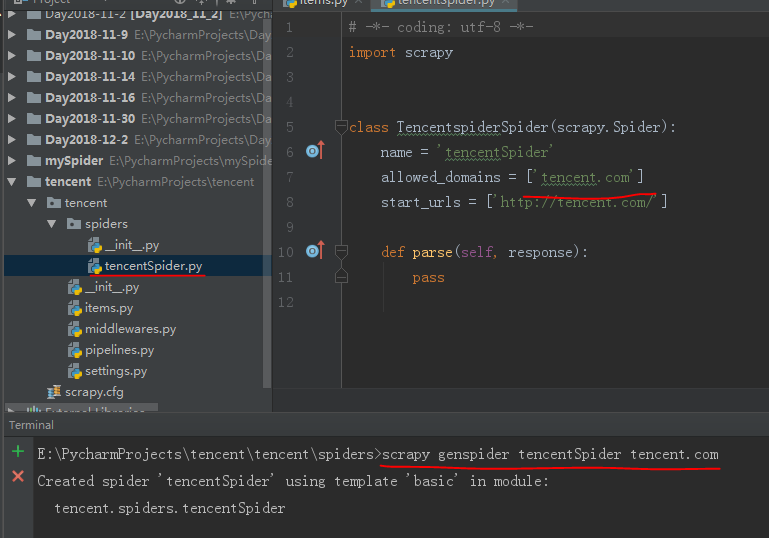

2.创建爬虫文件

3.写tencentSpider.py

# -*- coding: utf-8 -*- import scrapy from tencent.items import TencentItem class TencentspiderSpider(scrapy.Spider): name = 'tencentSpider' allowed_domains = ['tencent.com'] url = 'https://hr.tencent.com/position.php?&start=' offset = 0 start_urls = [url + str(offset)] def parse(self, response): for each in response.xpath("//tr[@class='even'] | //tr[@class='odd']"): # 初始化模型对象 item = TencentItem() # 职位名称 item['position_name'] = each.xpath("./td[1]/a/text()").extract()[0] # 连接 item['position_link'] = each.xpath("./td[1]/a/@href").extract()[0] # 职位类别 item['position_type'] = each.xpath("./td[2]/text()").extract()[0] # 招聘人数 item['people_num'] = each.xpath("./td[3]/text()").extract()[0] # 工作地点 item['work_location'] = each.xpath("./td[4]/text()").extract()[0] # 发布时间 item['publish_time'] = each.xpath("./td[5]/text()").extract()[0] yield item if self.offset < 1680: self.offset += 10 # 将请求重新发送给调度器入队列,出队列,交给下载器下载 yield scrapy.Request(self.url + str(self.offset), callback=self.parse)

4.修改settings.py

# xxxxxxxx ITEM_PIPELINES = { 'tencent.pipelines.TencentPipeline': 300, } # xxxxxxxxxx

5.修改pipelines.py

# -*- coding: utf-8 -*- # Define your item pipelines here # # Don't forget to add your pipeline to the ITEM_PIPELINES setting # See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html import json class TencentPipeline(object): def __init__(self): self.filename = open('tencent.json', 'wb+') def process_item(self, item, spider): text = json.dumps(dict(item), ensure_ascii=False, indent=2) self.filename.write(text.encode('utf-8')) return item def close_spider(self, spider): self.filename.close()

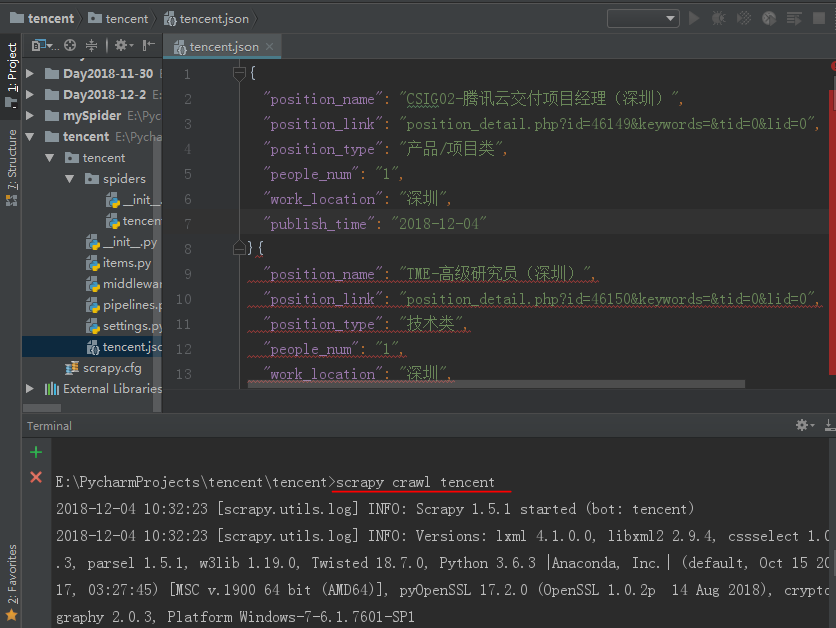

5.运行

浙公网安备 33010602011771号

浙公网安备 33010602011771号