[CVPR 2020] Non-local neural networks with grouped bilinear attention transforms

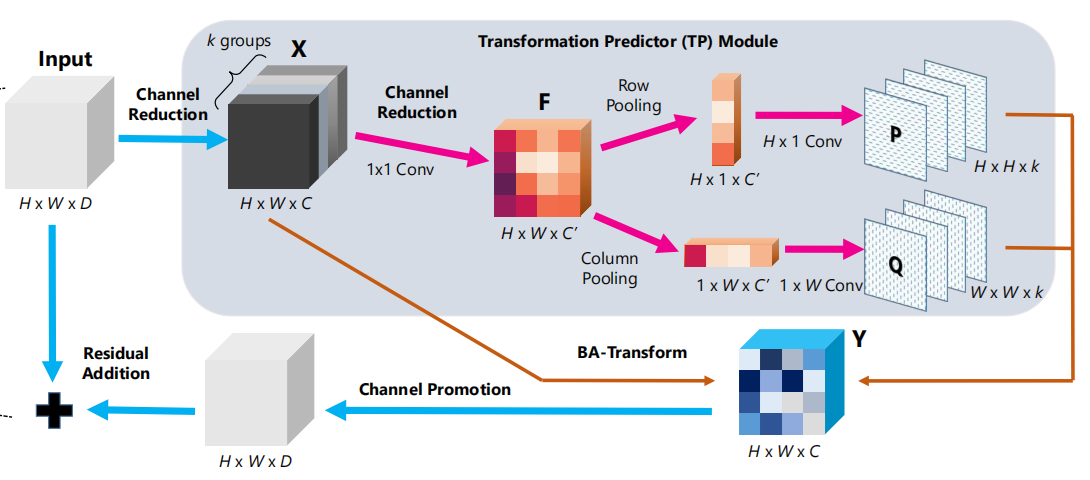

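The authors propose a non-local modeling method called bilinear attention. The goal is to map the input feature to an output feature by multiplying the input with two matrices (this is the "bilinear" form, as shown in the figure). The key difficulty is how to construct these two transform matrices.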
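To make the matrix form concrete, here is a sketch in assumed notation: $X$ is one channel of the input feature map, $Y$ is the output, and $P$ and $Q$ are the two transform matrices. These symbols and shapes are chosen here for illustration only.

$$
Y = P\,X\,Q, \qquad X \in \mathbb{R}^{H \times W},\; P \in \mathbb{R}^{H' \times H},\; Q \in \mathbb{R}^{W \times W'},\; Y \in \mathbb{R}^{H' \times W'}
$$

$$
Y_{ij} = \sum_{k=1}^{H} \sum_{l=1}^{W} P_{ik}\, X_{kl}\, Q_{lj}
$$

With $Q$ fixed, $Y$ is linear in $P$, and with $P$ fixed it is linear in $Q$, hence "bilinear". Each output entry $Y_{ij}$ can draw on every input entry $X_{kl}$, which is what makes the operation non-local.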

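As the figure shows, the two matrices are generated with one-dimensional convolutions. In practice, the authors also reduce the dimensionality of both matrices, which further lowers the computational cost.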

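The overall architecture of the module is shown in the figure: the BA transform produces the two matrices, which are then multiplied with the input to obtain the output matrix.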
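The data flow described above (predict two matrices from the input, then multiply them with the input, group by group) can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation. The function name `ba_transform`, the weights `w_p` and `w_q`, and the use of a pooled descriptor followed by a linear map are all hypothetical stand-ins for the paper's one-dimensional convolutions and dimensionality reduction, which are not reproduced here.

```python
import numpy as np

def ba_transform(x, w_p, w_q, groups):
    """Grouped bilinear transform Y_g = P_g X_g Q_g (illustrative sketch).

    x:   input features, shape (C, H, W)
    w_p: hypothetical weights that predict P, shape (groups, H*H, C // groups)
    w_q: hypothetical weights that predict Q, shape (groups, W*W, C // groups)
    Returns the output (C, H, W) plus the per-group matrices P and Q.
    """
    c, h, w = x.shape
    cg = c // groups                      # channels per group
    xg = x.reshape(groups, cg, h, w)

    # Assumption: a globally pooled descriptor per group drives P and Q.
    # The paper generates them with 1D convolutions instead.
    desc = xg.mean(axis=(2, 3))           # (groups, cg)
    p = np.einsum("gkc,gc->gk", w_p, desc).reshape(groups, h, h)
    q = np.einsum("gkc,gc->gk", w_q, desc).reshape(groups, w, w)

    # All channels in a group share the same P and Q: Y = P @ X @ Q.
    y = np.einsum("gij,gcjk,gkl->gcil", p, xg, q)
    return y.reshape(c, h, w), p, q


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 3, 5))        # C=4, H=3, W=5
w_p = rng.standard_normal((2, 3 * 3, 2))  # 2 groups, 2 channels each
w_q = rng.standard_normal((2, 5 * 5, 2))
y, p, q = ba_transform(x, w_p, w_q, groups=2)
```

Sharing one pair of matrices across all channels in a group keeps the number of predicted parameters small, which is one plausible reading of "grouped" in the title.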

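The interesting part of the paper is how it presents its motivation. The authors say the work is inspired by the human visual system, which has both a bottom-up mechanism (Marr's theory of vision, in which edges are abstracted into holistic concepts) and a top-down mechanism (Gestalt theory, in which the whole quickly directs attention to regions of interest). They describe how the method corresponds to the two mechanisms as follows: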

- Our proposed BA-Transform supports a large variety of operations on the attended image or video parts albeit its simplicity, including numerous affine transformation (selective scaling, shift, rotation, cropping etc.), suppressing / strengthening local structure or even global reasoning. This corresponds to the human ability to capture attention patterns (the bottom-up mechanism).

- Bilinear matrix multiplication is amenable to efficient differential calculation. Top-down supervision can be gradually back-propagated to shallow layers and enforce the consistency between learned attentions and top-down supervision. This corresponds to the top-down mechanism.
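A few of the operations named in the first quote (shift, cropping, selective scaling) can be reproduced with hand-built matrices. The sketch below uses a toy 4x4 single-channel map and fixed matrices chosen for illustration. In the paper the matrices are learned, so this only shows that a left and right matrix multiplication is expressive enough for these cases.

```python
import numpy as np

x = np.arange(16.0).reshape(4, 4)      # toy 4x4 feature map
identity = np.eye(4)

# Shift down by one row: ones on the first sub-diagonal of P.
# Row i of the result is row i-1 of x; the top row becomes zero.
p_shift = np.eye(4, k=-1)
shifted = p_shift @ x @ identity

# Crop the central 2x2 patch: P selects rows 1..2, Q selects columns 1..2.
p_crop = np.eye(4)[1:3]                # shape (2, 4)
q_crop = np.eye(4)[:, 1:3]             # shape (4, 2)
cropped = p_crop @ x @ q_crop          # equals x[1:3, 1:3]

# Selective scaling: a diagonal P doubles row 1 and leaves the rest alone.
p_scale = np.diag([1.0, 2.0, 1.0, 1.0])
scaled = p_scale @ x @ identity
```

The left matrix acts on rows and the right matrix on columns, so combining the two covers operations along both spatial axes.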
