2020 System Comprehensive Practice: Practice Assignment 4

docker-compose.yml configuration

    version: "3"
    services:
      nginx:
        image: nginx:latest
        container_name: nginx_tomcat
        restart: always
        ports:
          - 80:80
        links:
          - tomcat1:tomcat1
          - tomcat2:tomcat2
        volumes:
          - ./webserver:/webserver
          - ./nginx/nginx.conf:/etc/nginx/nginx.conf
        depends_on:
          - tomcat1
          - tomcat2
      tomcat1:
        image: tomcat:latest
        container_name: tomcat1
        restart: always
        volumes:
          - ./webserver/tomcatA:/usr/local/apache-tomcat-8.5.55/webapps/ROOT
      tomcat2:
        image: tomcat:latest
        container_name: tomcat2
        restart: always
        volumes:
          - ./webserver/tomcatB:/usr/local/apache-tomcat-8.5.55/webapps/ROOT

nginx reverse proxy configuration (nginx.conf)

    user nginx;
    worker_processes 1;
    error_log /var/log/nginx/error.log warn;
    pid /var/run/nginx.pid;

    events {
        worker_connections 1024;
    }

    http {
        include /etc/nginx/mime.types;
        default_type application/octet-stream;
        log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                        '$status $body_bytes_sent "$http_referer" '
                        '"$http_user_agent" "$http_x_forwarded_for"';
        access_log /var/log/nginx/access.log main;
        client_max_body_size 10m;
        sendfile on;
        #tcp_nopush on;
        keepalive_timeout 65;
        #gzip on;

        upstream tomcat_client {
            server tomcat1:8080;
            server tomcat2:8080;
        }

        server {
            server_name localhost;
            listen 80 default_server;
            listen [::]:80 default_server ipv6only=on;

            location / {
                proxy_pass http://tomcat_client;
                proxy_redirect default;
                proxy_set_header Host $host;
                proxy_set_header X-Real-IP $remote_addr;
            }
        }
    }

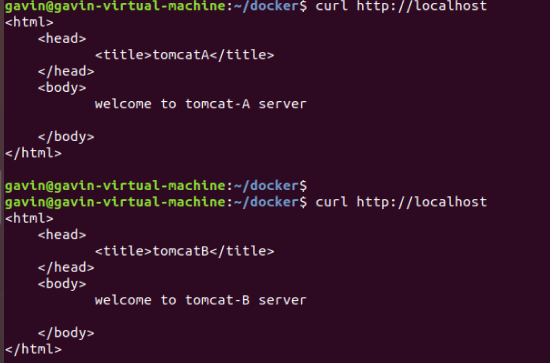

Result with the default round-robin policy
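To check the distribution from the command line instead of refreshing the browser, something like the sketch below may help. It assumes that curl is installed and that each Tomcat serves a one-line page identifying itself; the helper names `probe` and `tally` and the bodies `tomcatA` / `tomcatB` are made up for illustration.

```shell
# probe URL COUNT: request URL COUNT times and print each response body.
probe() {
  url=$1
  count=$2
  i=0
  while [ "$i" -lt "$count" ]; do
    curl -s "$url"
    echo                      # make sure every body ends with a newline
    i=$((i + 1))
  done
}

# tally: count identical non-empty lines read from standard input.
tally() {
  awk 'NF { seen[$0]++ } END { for (line in seen) print line, seen[line] }' | sort
}
```

With the cluster running, `probe http://localhost/ 6 | tally` should then print one line per backend together with the number of requests it answered.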

Weighted round-robin policy: modify the upstream block in nginx.conf

    upstream tomcat_client {
        server tomcat1:8080 weight=1;
        server tomcat2:8080 weight=2;
    }

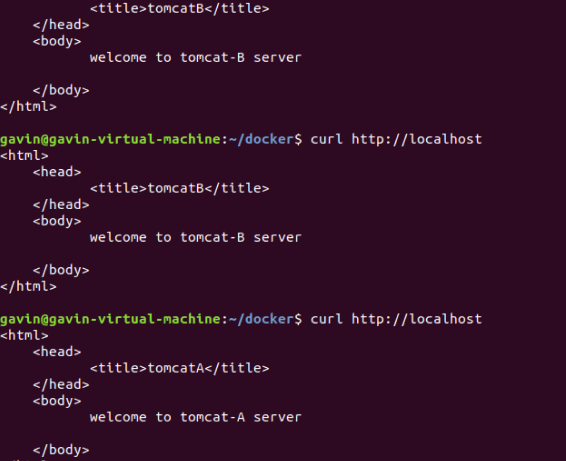

Result with the weighted round-robin policy
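To get a feel for what a 1:2 weighting means, here is a small simulation of the "smooth" weighted round-robin idea, which is how nginx's upstream balancing is generally described. It is only an illustration under that assumption, not nginx's actual code (which also handles failed servers); the function name `simulate` is hypothetical.

```shell
# Weights taken from the upstream block: tomcat1 weight=1, tomcat2 weight=2.
w1=1
w2=2

# simulate ROUNDS: print which backend is picked in each round.
simulate() {
  rounds=$1
  c1=0                        # running "current weight" of tomcat1
  c2=0                        # running "current weight" of tomcat2
  total=$((w1 + w2))
  while [ "$rounds" -gt 0 ]; do
    # every backend gains its own weight each round
    c1=$((c1 + w1))
    c2=$((c2 + w2))
    # the backend with the larger running weight wins and pays back the total
    if [ "$c2" -gt "$c1" ]; then
      echo tomcat2
      c2=$((c2 - total))
    else
      echo tomcat1
      c1=$((c1 - total))
    fi
    rounds=$((rounds - 1))
  done
}

simulate 6
```

Over six rounds this picks tomcat2 four times and tomcat1 twice, with the tomcat1 picks spread out rather than bunched together.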

(2) Deploying a Java web runtime environment with Docker Compose

docker-compose.yml

    version: '2'
    services:
      tomcat:
        image: tomcat
        hostname: ga
        container_name: tomcat_web
        ports:
          - "5050:8080"
        volumes:
          - "$PWD/webapps:/usr/local/tomcat/webapps"
      mymysql:
        build: .
        image: mymysql:test
        container_name: mysql_web
        ports:
          - "3309:3306"
        command: [
          '--character-set-server=utf8mb4',
          '--collation-server=utf8mb4_unicode_ci'
        ]
        environment:
          MYSQL_ROOT_PASSWORD: "123456"

Dockerfile for the MySQL image

    FROM registry.saas.hand-china.com/tools/mysql:5.7.17
    # Working location for MySQL
    ENV WORK_PATH /usr/local/
    # Directory whose scripts the container runs automatically
    ENV AUTO_RUN_DIR /docker-entrypoint-initdb.d
    # Copy grogshop.sql to /usr/local
    COPY grogshop.sql /usr/local/
    # Put the shell script in /docker-entrypoint-initdb.d/ so that the container runs it automatically
    COPY docker-entrypoint.sh $AUTO_RUN_DIR/
    # Make the script executable
    RUN chmod a+x $AUTO_RUN_DIR/docker-entrypoint.sh
    # Command to run when the container starts
    #CMD ["sh", "/docker-entrypoint-initdb.d/import.sh"]

docker-entrypoint.sh configuration

    #!/bin/bash
    # The "<< EOF" here-document is required; it ends at the closing EOF line
    mysql -uroot -p123456 << EOF
    source /usr/local/grogshop.sql;
    EOF

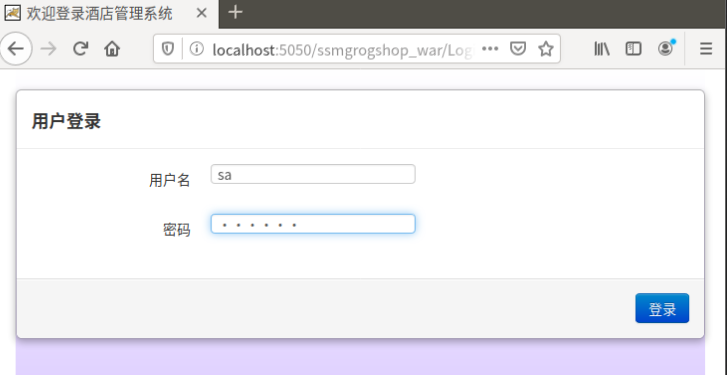

Tomcat connected to MySQL successfully

Inserting new data and querying it succeeded

(3) Building a big-data cluster environment with Docker

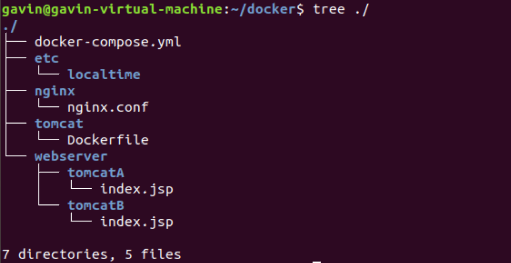

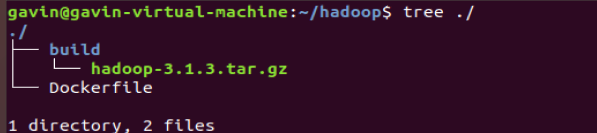

Directory structure

Dockerfile for building the Ubuntu image

FROM ubuntu:18.04

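Start the container, mounting the host directory that holds the Hadoop archive at /root/build (/path/to/build is a placeholder for your own host path)

docker run -it -v /path/to/build:/root/build --name ubuntu ubuntu:18.04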

After switching the apt mirror inside the container, run:

apt-get update

apt-get install vim

apt-get install ssh

Configure SSH

To start the sshd service automatically, append the following to the end of ~/.bashrc:

/etc/init.d/ssh start

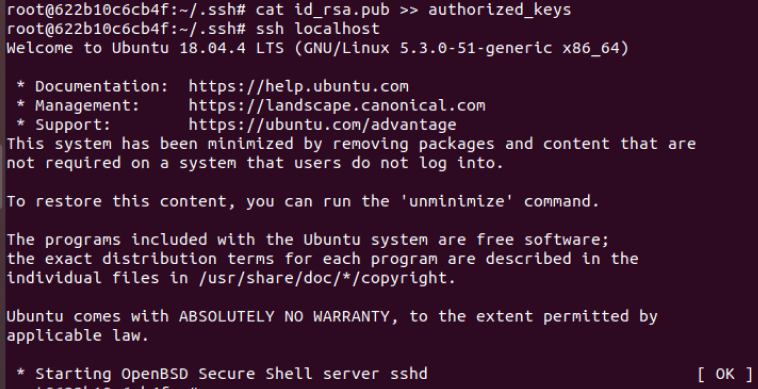

Configure passwordless SSH login to the local sshd service

ssh-keygen -t rsa # just keep pressing Enter

cd ~/.ssh

cat id_rsa.pub >> authorized_keys

Passwordless connection to localhost succeeded

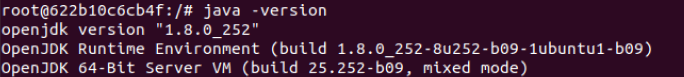
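One thing to watch with the `cat ... >> authorized_keys` step is that repeating it appends the same key again. A possible way around that is a small helper that only appends a key that is not already present and tightens the file permissions afterwards. This is just a sketch; `authorize_key` is a hypothetical name, not part of OpenSSH.

```shell
# authorize_key PUBKEY_FILE AUTH_FILE
# Append the public key to AUTH_FILE only if that exact line is not there yet.
authorize_key() {
  pubkey_file=$1
  auth_file=$2
  mkdir -p "$(dirname "$auth_file")"
  touch "$auth_file"
  # -x: match whole lines, -F: treat the key as a fixed string, not a regex
  if ! grep -qxF -e "$(cat "$pubkey_file")" "$auth_file"; then
    cat "$pubkey_file" >> "$auth_file"
  fi
  # keep the file private to its owner
  chmod 600 "$auth_file"
}
```

Inside the container it could be called as `authorize_key ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys`, and calling it twice leaves a single entry.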

Install the JDK (Hadoop 3.1.3 is used here, so install JDK 8)

apt-get install openjdk-8-jdk

Open ~/.bashrc with vim ~/.bashrc and insert:

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64/

export PATH=$PATH:$JAVA_HOME/bin

export CLASSPATH=.:$JAVA_HOME/lib:$JRE_HOME/lib

The settings take effect after source ~/.bashrc, and the output shows that the configuration succeeded

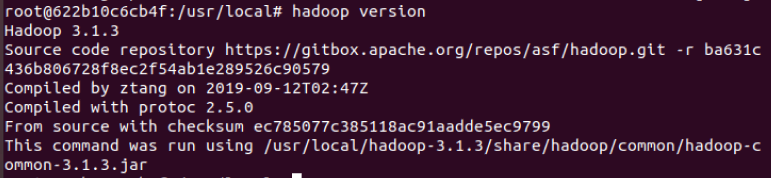

Install Hadoop (the 3.1.3 archive is already in /root/build; change into /root/build and extract it directly)

tar -zxf hadoop-3.1.3.tar.gz -C /usr/local

Open the user environment file with vim ~/.bashrc and insert the following lines

export HADOOP_HOME=/usr/local/hadoop-3.1.3

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin:$JAVA_HOME/bin

Run source ~/.bashrc to make the environment variables take effect

Save the container configured so far as an image

docker commit 622b10c6cb4f ubuntu/hadoop

Configure the Hadoop cluster

    # First terminal
    docker run -it -h master --name master ubuntu/hadoop

    # Second terminal
    docker run -it -h slave01 --name slave01 ubuntu/hadoop

    # Third terminal
    docker run -it -h slave02 --name slave02 ubuntu/hadoop

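Configure the address information for master, slave01 and slave02 so that they can find each other. Opening /etc/hosts in each container shows that container's own IP and hostname; collecting them gives the following three entries, which go into /etc/hosts on every node:

    172.17.0.2 master
    172.17.0.3 slave01
    172.17.0.4 slave02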
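Since a missing entry in /etc/hosts on any node tends to show up later as a confusing Hadoop startup error, a quick check per container can save time. The helper below is a sketch; `missing_hosts` is a hypothetical name, and it only looks for the hostname as a whole field on a non-comment line.

```shell
# missing_hosts HOSTS_FILE NAME...
# Print every NAME that has no entry in HOSTS_FILE.
missing_hosts() {
  hosts_file=$1
  shift
  for name in "$@"; do
    # fields after the first (the IP) are hostnames or aliases
    awk -v n="$name" '
      $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }
    ' "$hosts_file" || echo "$name"
  done
}
```

Running `missing_hosts /etc/hosts master slave01 slave02` in each container should print nothing once all three entries are in place.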

Open the workers file on master (from the Hadoop installation directory, /usr/local/hadoop-3.1.3) and replace localhost with the hostnames of the two slaves:

vim etc/hadoop/workers

    slave01
    slave02

With the configuration above complete, the NameNode must be formatted before Hadoop is started for the first time

hdfs namenode -format

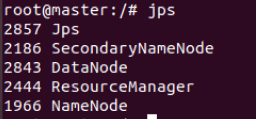

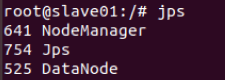

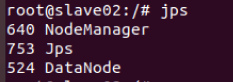

Run start-all.sh and check the processes with jps (if the NameNode has not started, format it once more; if a DataNode has not started, it can be started with hadoop-daemon.sh start datanode)

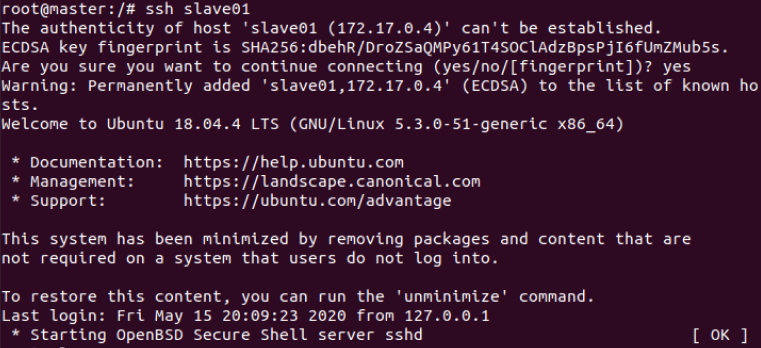
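Reading the jps listing by eye gets tedious across three containers, so a filter that names the daemons that are absent may be handy. This is a sketch: `missing_daemons` is a hypothetical helper, and the daemon lists in the usage note are what I would expect for this layout (workers file containing only the two slaves), not something taken from the screenshots.

```shell
# missing_daemons NAME...
# Read jps output ("PID ClassName" per line) from standard input and
# print every NAME that is not running.
missing_daemons() {
  jps_output=$(cat)
  for daemon in "$@"; do
    # compare the second field exactly, so NameNode does not match SecondaryNameNode
    printf '%s\n' "$jps_output" |
      awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }' ||
      echo "$daemon"
  done
}
```

On master this could be used as `jps | missing_daemons NameNode SecondaryNameNode ResourceManager`, and on each slave as `jps | missing_daemons DataNode NodeManager`; empty output means everything listed is up.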

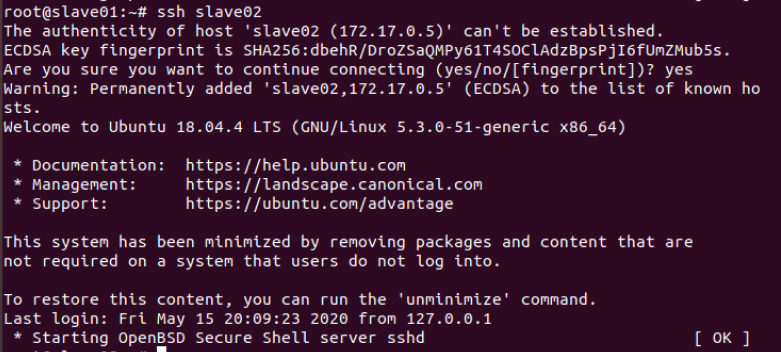

Testing the slaves' SSH service from master

Run a Hadoop example

    # Create the input directory on the distributed file system
    hdfs dfs -mkdir -p input
    hdfs dfs -put /usr/local/hadoop-3.1.3/etc/hadoop/*.xml input
    hadoop jar /usr/local/hadoop-3.1.3/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar grep input output 'dfs[a-z.]+'

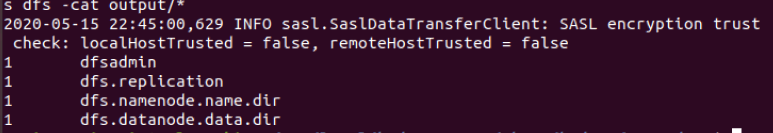

Viewing the output
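To sanity-check what the grep example job should report, the same regular expression can be run locally with ordinary tools. The sketch below counts every match of 'dfs[a-z.]+' on standard input and prints "count, tab, match" lines with the highest count first, which is roughly the shape of the job's output as I understand it; `count_matches` is a hypothetical name.

```shell
# count_matches: count each distinct match of the example's regex on stdin.
count_matches() {
  grep -oE 'dfs[a-z.]+' |     # one match per line
    sort | uniq -c |          # count identical matches
    sort -rn |                # highest count first
    awk '{ print $1 "\t" $2 }'
}
```

For the files uploaded above, `cat /usr/local/hadoop-3.1.3/etc/hadoop/*.xml | count_matches` gives a local result to compare against the contents of the output directory in HDFS.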

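(4) Lab summary

With the nginx + Tomcat cluster, visiting localhost after bringing Compose up returned a 404, and I was dumbfounded. I spent ages checking the paths and nothing was misconfigured; then I went into the container and found that the webapps directory was empty. Everything was in webapps.dist. After a Baidu search I learned that renaming webapps.dist to webapps fixes it, yet after the rename localhost showed Tomcat's built-in home page.... After fiddling for a good while longer I found that you can simply run docker cp index.jsp tomcat1:/usr/local/tomcat/webapps/ROOT/index.jsp, which overwrites the original home page so that my own page is displayed. What a trap.....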

| Task | Time spent |
| --- | --- |
| nginx + Tomcat | 8h |
| Java web configuration | 3h |
| Hadoop cluster | 4h |
| Blog post | 2h |
