2023 Data Collection and Fusion Technology Practice: Assignment 4

Assignment ①

Requirements:

Become proficient with Selenium for locating HTML elements, scraping Ajax-loaded page data, and waiting for HTML elements.

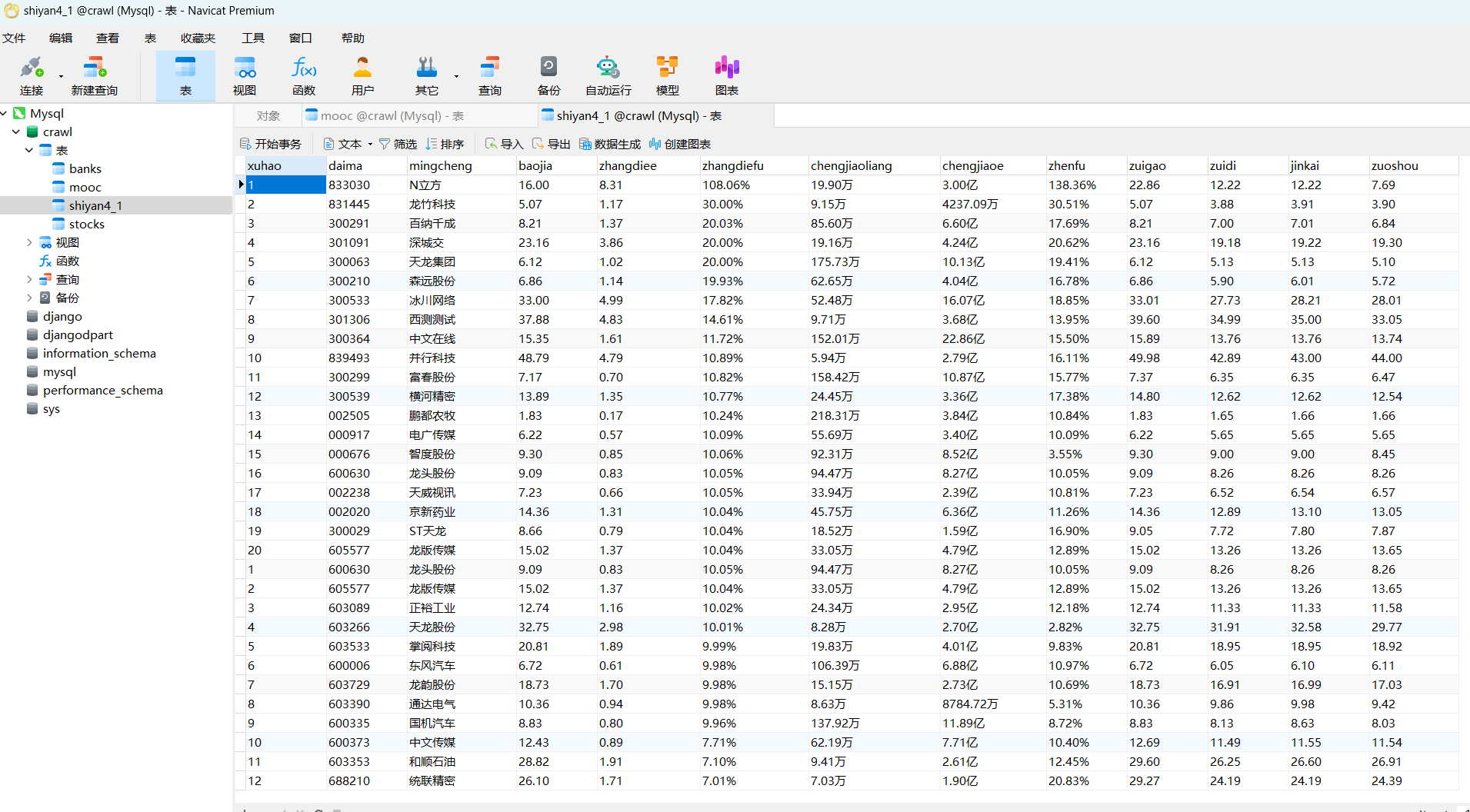

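Use the Selenium framework plus MySQL storage to scrape stock data for three boards: "沪深A股" (Shanghai-Shenzhen A-shares), "上证A股" (Shanghai A-shares), and "深证A股" (Shenzhen A-shares).

Candidate website: Eastmoney (东方财富网): http://quote.eastmoney.com/center/gridlist.html#hs_a_board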
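Since three boards have to be scraped from the same page, one way to organize the crawl is a small mapping from board name to URL. The sketch below is only an illustration: the `hs_a_board` fragment comes from the assignment text, while `sh_a_board` and `sz_a_board` are assumed to follow the same naming pattern and should be checked against the site. The names `BOARD_FRAGMENTS` and `board_url` are hypothetical.

```python
# Base URL taken from the assignment text.
BASE_URL = "http://quote.eastmoney.com/center/gridlist.html"

# Only "hs_a_board" appears in the assignment; the other two fragments
# are assumptions that follow the same naming pattern.
BOARD_FRAGMENTS = {
    "Shanghai-Shenzhen A-shares": "hs_a_board",
    "Shanghai A-shares": "sh_a_board",   # assumed
    "Shenzhen A-shares": "sz_a_board",   # assumed
}


def board_url(board_name: str) -> str:
    """Return the page URL for one board."""
    return f"{BASE_URL}#{BOARD_FRAGMENTS[board_name]}"


if __name__ == "__main__":
    for name in BOARD_FRAGMENTS:
        print(name, board_url(name))
```

Each URL could then be passed to `driver.get(...)` in turn, so the same scraping loop is reused for all three boards.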

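Output: store the data in a MySQL database and print it in the format below. Column headers should use English names, for example id for the serial number and bStockNo for the stock code; students design the remaining headers themselves: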
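As a rough illustration of the English-named headers, the sketch below builds the `CREATE TABLE` and `INSERT` statements from one column list. Only `id` and `bStockNo` come from the assignment; every other column name, the column types, and the helper names `create_table_sql` and `insert_sql` are assumptions made for this example.

```python
# Hypothetical column design; the assignment only fixes "id" and "bStockNo".
COLUMNS = [
    ("id", "INT PRIMARY KEY"),
    ("bStockNo", "VARCHAR(16)"),
    ("bStockName", "VARCHAR(64)"),      # assumed
    ("bLatestPrice", "VARCHAR(32)"),    # assumed
    ("bChangePercent", "VARCHAR(32)"),  # assumed
    ("bChangeAmount", "VARCHAR(32)"),   # assumed
    ("bVolume", "VARCHAR(32)"),         # assumed
    ("bTurnover", "VARCHAR(32)"),       # assumed
]


def create_table_sql(table: str) -> str:
    """Build a CREATE TABLE statement from COLUMNS."""
    cols = ", ".join(f"{name} {sqltype}" for name, sqltype in COLUMNS)
    return f"CREATE TABLE IF NOT EXISTS {table} ({cols})"


def insert_sql(table: str) -> str:
    """Build a parameterized INSERT using %s placeholders, as PyMySQL expects."""
    names = ", ".join(name for name, _ in COLUMNS)
    placeholders = ", ".join(["%s"] * len(COLUMNS))
    return f"INSERT INTO {table} ({names}) VALUES ({placeholders})"


if __name__ == "__main__":
    print(create_table_sql("stocks"))
    print(insert_sql("stocks"))
```

With a PyMySQL cursor, a scraped row would be stored with `cursor.execute(insert_sql("stocks"), row)`, where `row` is a tuple with one value per column. Keeping the column list in one place means the table definition and the insert statement cannot drift apart.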

Code

from selenium import webdriver
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.common.by import By
import time
from scrapy.selector import Selector
import pymysql

driver = webdriver.Chrome()
driver.get("https://www.icourse163.org/")
driver.maximize_window()

# Find the login button and click it
button = driver.find_element(By.XPATH, '//div[@class="_1Y4Ni"]/div')
button.click()

# Switch into the login iframe
frame = driver.find_element(By.XPATH,
    '/html/body/div[13]/div[2]/div/div/div/div/div/div[1]/div/div[1]/div[2]/div[2]/div[1]/div/iframe')
driver.switch_to.frame(frame)

# Enter the account and password, then click the login button
# (placeholders: replace with your own credentials)
driver.find_element(By.XPATH, '/html/body/div[2]/div[2]/div[2]/form/div/div[2]/div[2]/input').send_keys('YOUR_ACCOUNT')
driver.find_element(By.XPATH, '/html/body/div[2]/div[2]/div[2]/form/div/div[4]/div[2]/input[2]').send_keys('YOUR_PASSWORD')
login_button = driver.find_element(By.XPATH, '/html/body/div[2]/div[2]/div[2]/form/div/div[6]/a')
login_button.click()

# Switch back to the main document and wait for the page to load
driver.switch_to.default_content()
time.sleep(10)

# Find the search box, type the keyword, and search
select_course = driver.find_element(By.XPATH, '/html/body/div[4]/div[1]/div/div/div/div/div[7]/div[1]/div/div/div[1]/div/div/div/div/div/div/input')
select_course.send_keys("python")
search_button = driver.find_element(By.XPATH, '/html/body/div[4]/div[1]/div/div/div/div/div[7]/div[1]/div/div/div[2]/span')
search_button.click()
time.sleep(3)

# On the results page, wait for the content to appear, then parse it
wait = WebDriverWait(driver, 10)
element = wait.until(EC.presence_of_element_located((By.CSS_SELECTOR, ".th-bk-main-gh")))
html = driver.page_source
selector = Selector(text=html)
datas = selector.xpath("//div[@class='m-course-list']/div/div")

# Open one database connection and reuse it for every row
connection = pymysql.connect(host='localhost',
                             user='root',
                             password='123456',
                             database='crawl')
cursor = connection.cursor()

i = 1
for data in datas:
    # The course name is split across several text nodes, so join them
    name = data.xpath(".//span[@class=' u-course-name f-thide']//text()").extract()
    nameall = "".join(name)
    schoolname = data.xpath(".//a[@class='t21 f-fc9']/text()").extract_first()
    # The first matching link is the lead teacher; all matches form the team
    teacher = data.xpath(".//a[@class='f-fc9']//text()").extract_first()
    team = data.xpath(".//a[@class='f-fc9']//text()").extract()
    teamall = ",".join(team)
    number = data.xpath(".//span[@class='hot']/text()").extract_first()
    process = data.xpath(".//span[@class='txt']/text()").extract_first()
    production = data.xpath(".//span[@class='p5 brief f-ib f-f0 f-cb']//text()").extract()
    productionall = ",".join(production)
    cursor.execute(
        "insert into mooc(Id,cCourse,cCollege,cTeacher,cTeam,cCount,cProcess,cBrief) values(%s,%s,%s,%s,%s,%s,%s,%s)",
        (i, nameall, schoolname, teacher, teamall, number, process, productionall))
    connection.commit()  # commit the insert
    i += 1

connection.close()
time.sleep(10)

Results

Reflections

Through this experiment I got more practice scraping data with Selenium and became more comfortable using XPath.

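Assignment ②

Requirements:

Become proficient with Selenium for locating HTML elements, simulating user login, scraping Ajax-loaded page data, and waiting for HTML elements.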

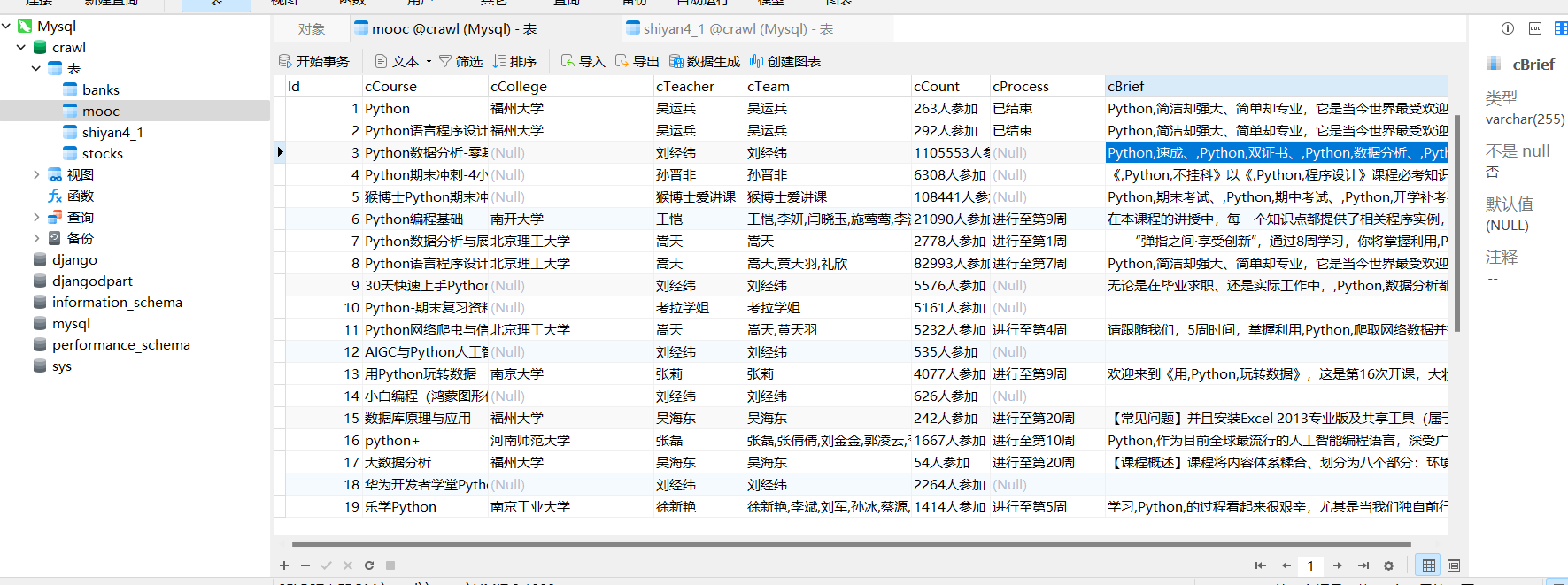

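Use the Selenium framework plus MySQL to scrape course information from China University MOOC (course ID, course name, school name, lead teacher, team members, number of participants, course progress, course description).

Candidate website: China University MOOC (中国mooc网): https://www.icourse163.org

Output: MySQL database storage and output format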

Code

from selenium import webdriver
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.common.by import By
import time
from scrapy.selector import Selector
import pymysql

driver = webdriver.Chrome()
driver.get("https://www.icourse163.org/")
driver.maximize_window()

# Find the login button and click it
button = driver.find_element(By.XPATH, '//div[@class="_1Y4Ni"]/div')
button.click()

# Switch into the login iframe
frame = driver.find_element(By.XPATH,
    '/html/body/div[13]/div[2]/div/div/div/div/div/div[1]/div/div[1]/div[2]/div[2]/div[1]/div/iframe')
driver.switch_to.frame(frame)

# Enter the account and password, then click the login button
# (placeholders: replace with your own credentials)
driver.find_element(By.XPATH, '/html/body/div[2]/div[2]/div[2]/form/div/div[2]/div[2]/input').send_keys('YOUR_ACCOUNT')
driver.find_element(By.XPATH, '/html/body/div[2]/div[2]/div[2]/form/div/div[4]/div[2]/input[2]').send_keys('YOUR_PASSWORD')
login_button = driver.find_element(By.XPATH, '/html/body/div[2]/div[2]/div[2]/form/div/div[6]/a')
login_button.click()

# Switch back to the main document and wait for the page to load
driver.switch_to.default_content()
time.sleep(10)

# Find the search box, type the keyword, and search
select_course = driver.find_element(By.XPATH, '/html/body/div[4]/div[1]/div/div/div/div/div[7]/div[1]/div/div/div[1]/div/div/div/div/div/div/input')
select_course.send_keys("python")
search_button = driver.find_element(By.XPATH, '/html/body/div[4]/div[1]/div/div/div/div/div[7]/div[1]/div/div/div[2]/span')
search_button.click()
time.sleep(3)

# On the results page, wait for the content to appear, then parse it
wait = WebDriverWait(driver, 10)
element = wait.until(EC.presence_of_element_located((By.CSS_SELECTOR, ".th-bk-main-gh")))
html = driver.page_source
selector = Selector(text=html)
datas = selector.xpath("//div[@class='m-course-list']/div/div")

# Open one database connection and reuse it for every row
connection = pymysql.connect(host='localhost',
                             user='root',
                             password='123456',
                             database='crawl')
cursor = connection.cursor()

i = 1
for data in datas:
    # The course name is split across several text nodes, so join them
    name = data.xpath(".//span[@class=' u-course-name f-thide']//text()").extract()
    nameall = "".join(name)
    schoolname = data.xpath(".//a[@class='t21 f-fc9']/text()").extract_first()
    # The first matching link is the lead teacher; all matches form the team
    teacher = data.xpath(".//a[@class='f-fc9']//text()").extract_first()
    team = data.xpath(".//a[@class='f-fc9']//text()").extract()
    teamall = ",".join(team)
    number = data.xpath(".//span[@class='hot']/text()").extract_first()
    process = data.xpath(".//span[@class='txt']/text()").extract_first()
    production = data.xpath(".//span[@class='p5 brief f-ib f-f0 f-cb']//text()").extract()
    productionall = ",".join(production)
    cursor.execute(
        "insert into mooc(Id,cCourse,cCollege,cTeacher,cTeam,cCount,cProcess,cBrief) values(%s,%s,%s,%s,%s,%s,%s,%s)",
        (i, nameall, schoolname, teacher, teamall, number, process, productionall))
    connection.commit()  # commit the insert
    i += 1

connection.close()
time.sleep(10)
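The login step in the script needs an account and a password. One common way to keep them out of the source file is to read them from environment variables. The sketch below assumes two hypothetical variable names, `MOOC_ACCOUNT` and `MOOC_PASSWORD`, and a hypothetical helper `load_credentials`; none of these appear in the original script.

```python
import os
from typing import Mapping, Tuple


def load_credentials(env: Mapping[str, str] = os.environ) -> Tuple[str, str]:
    """Read the MOOC account and password from environment variables.

    MOOC_ACCOUNT and MOOC_PASSWORD are hypothetical names chosen for
    this example; any names work as long as they are set before the run.
    """
    try:
        return env["MOOC_ACCOUNT"], env["MOOC_PASSWORD"]
    except KeyError as missing:
        # Fail early with a clear message instead of typing empty strings.
        raise RuntimeError(f"environment variable {missing} is not set") from None
```

The two returned values can be passed to the `send_keys(...)` calls of the login form, for example `account, password = load_credentials()`.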

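Results

Reflections

This experiment was fairly hard. I spent a long time on the simulated login and only got it working after a classmate pointed out that the login form sits inside an iframe. Scraping the data itself went normally, but the MOOC page stores its text in an odd way: after a search, the keyword is split out into its own element, so the fragments have to be joined back together by hand.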
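The fragment-joining issue can be reproduced without a browser. The sketch below uses the standard-library `html.parser` rather than the Scrapy `Selector` from the script, and the markup in `snippet` is a made-up approximation of a highlighted search result, not a copy of the real page. It collects every text node under an element and joins them, which is the same idea as `"".join(...extract())` in the script.

```python
from html.parser import HTMLParser


class TextCollector(HTMLParser):
    """Collect every text node, in document order."""

    def __init__(self):
        super().__init__()
        self.fragments = []

    def handle_data(self, data):
        self.fragments.append(data)


def joined_text(html: str) -> str:
    """Return all text nodes of the snippet joined into one string."""
    collector = TextCollector()
    collector.feed(html)
    collector.close()
    return "".join(collector.fragments).strip()


# Hypothetical markup: the search keyword sits in its own nested element.
snippet = '<span class="u-course-name"><span class="hl">Python</span> Programming</span>'

if __name__ == "__main__":
    print(joined_text(snippet))
```

Reading only the first text node here would return just the keyword, which is why the script extracts all text nodes and joins them.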

Assignment ③

Experiment requirements

Requirements:

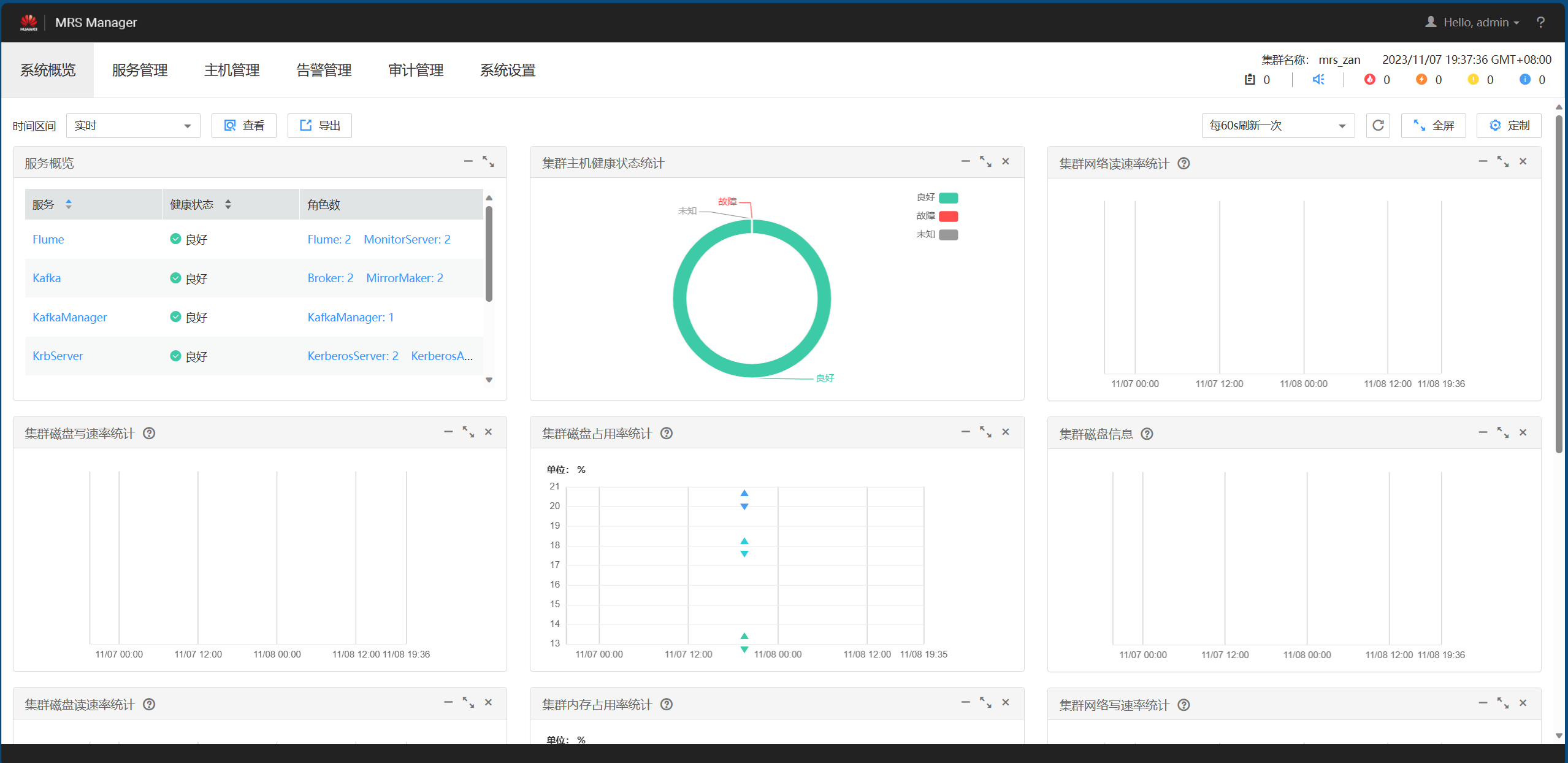

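Get familiar with big data services and with using Xshell.

Complete the tasks in the document 华为云_大数据实时分析处理实验手册-Flume日志采集实验(部分)v2.docx (Huawei Cloud real-time big data analytics lab manual, Flume log collection experiment, partial, v2), namely the 5 tasks below; see the document for the detailed steps.

Environment setup:

Task 1: Enable the MapReduce Service

Real-time analytics development practice: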

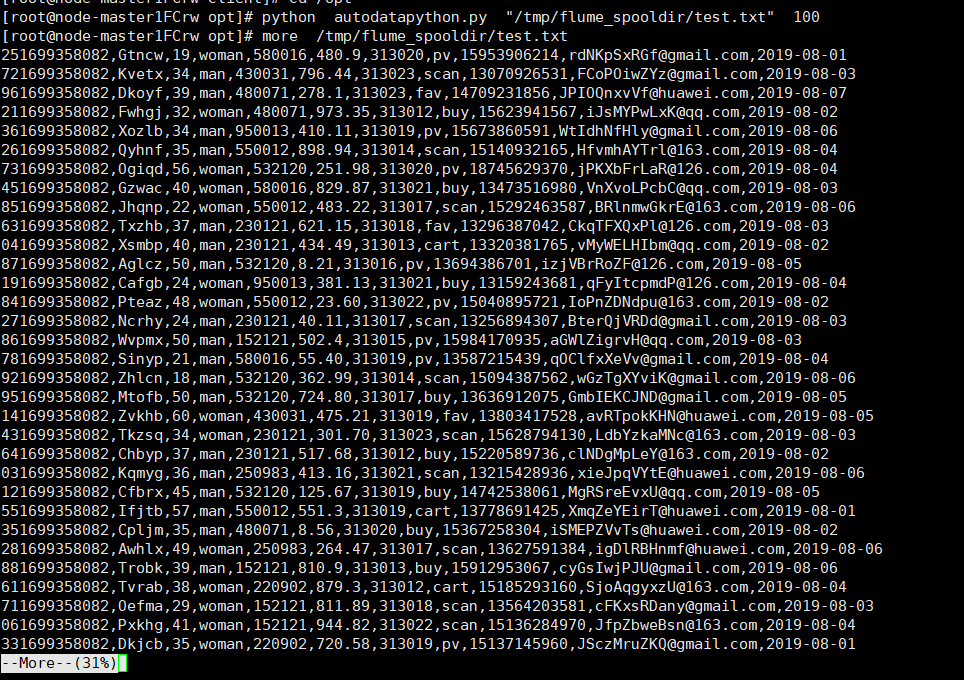

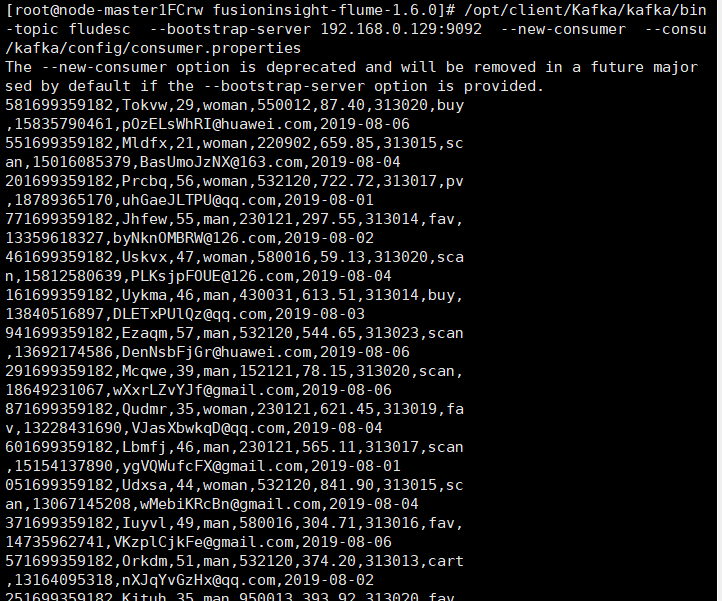

Task 1: Generate test data with a Python script

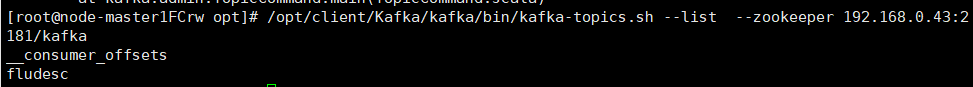

Task 2: Configure Kafka

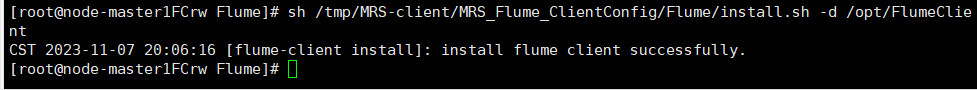

Task 3: Install the Flume client

Task 4: Configure Flume to collect data
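For the "generate test data with a Python script" task, the lab manual provides its own script, which is not reproduced here. Purely as an illustration of the idea, the sketch below appends comma-separated records to a log file that a Flume source could watch. The record layout (timestamp, user ID, amount), the function names, and the file path are all assumptions, not the manual's format.

```python
import random
from datetime import datetime, timedelta


def make_records(count, seed=0, start=datetime(2023, 1, 1)):
    """Build `count` fake log lines: timestamp,user_id,amount (assumed layout)."""
    rng = random.Random(seed)  # seeded so repeated runs give the same data
    records = []
    for i in range(count):
        ts = start + timedelta(seconds=i)
        user_id = rng.randint(1, 100)
        amount = rng.randint(1, 500)
        records.append(f"{ts:%Y-%m-%d %H:%M:%S},{user_id},{amount}")
    return records


def write_records(path, records):
    """Append the records to a log file, one per line."""
    with open(path, "a", encoding="utf-8") as f:
        for line in records:
            f.write(line + "\n")


if __name__ == "__main__":
    # Hypothetical path; point it at the directory the Flume source monitors.
    write_records("test_data.log", make_records(10))
```

Appending rather than overwriting matters here, because a log collector that tails a file expects new lines to arrive at the end.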

Environment setup

Task 1

Task 2

Task 3

Task 4
