Linear Regression with PyTorch

In [1]:

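```python
import torch
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
from torch import nn
# display() is built into Jupyter; imported explicitly so the cells also run elsewhere
from IPython.display import display
```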

In [2]:

```python
data = pd.read_csv('./Income1.csv')
data
```

| | Unnamed: 0 | Education | Income |
|---|---|---|---|
| 0 | 1 | 10.000000 | 26.658839 |
| 1 | 2 | 10.401338 | 27.306435 |
| 2 | 3 | 10.842809 | 22.132410 |
| 3 | 4 | 11.244147 | 21.169841 |
| 4 | 5 | 11.645485 | 15.192634 |
| 5 | 6 | 12.086957 | 26.398951 |
| 6 | 7 | 12.488294 | 17.435307 |
| 7 | 8 | 12.889632 | 25.507885 |
| 8 | 9 | 13.290970 | 36.884595 |
| 9 | 10 | 13.732441 | 39.666109 |
| 10 | 11 | 14.133779 | 34.396281 |
| 11 | 12 | 14.535117 | 41.497994 |
| 12 | 13 | 14.976589 | 44.981575 |
| 13 | 14 | 15.377926 | 47.039595 |
| 14 | 15 | 15.779264 | 48.252578 |
| 15 | 16 | 16.220736 | 57.034251 |
| 16 | 17 | 16.622074 | 51.490919 |
| 17 | 18 | 17.023411 | 61.336621 |
| 18 | 19 | 17.464883 | 57.581988 |
| 19 | 20 | 17.866221 | 68.553714 |
| 20 | 21 | 18.267559 | 64.310925 |
| 21 | 22 | 18.709030 | 68.959009 |
| 22 | 23 | 19.110368 | 74.614639 |
| 23 | 24 | 19.511706 | 71.867195 |
| 24 | 25 | 19.913043 | 76.098135 |
| 25 | 26 | 20.354515 | 75.775218 |
| 26 | 27 | 20.755853 | 72.486055 |
| 27 | 28 | 21.157191 | 77.355021 |
| 28 | 29 | 21.598662 | 72.118790 |
| 29 | 30 | 22.000000 | 80.260571 |
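The `Unnamed: 0` column is most likely the old row index that was written into the CSV when it was saved (an assumption; the raw file is not shown). If so, passing `index_col=0` to `pd.read_csv` turns it back into the index instead of keeping it as a data column. A minimal sketch with the first three rows of the table inlined as text (the CSV layout is assumed):

```python
import io

import pandas as pd

# Assumed layout of Income1.csv: an unnamed first column holding the old index.
csv_text = """,Education,Income
1,10.000000,26.658839
2,10.401338,27.306435
3,10.842809,22.132410
"""

# Default read: the unnamed first column becomes a data column called "Unnamed: 0".
raw = pd.read_csv(io.StringIO(csv_text))
print(list(raw.columns))

# index_col=0 uses that first column as the DataFrame index instead.
data = pd.read_csv(io.StringIO(csv_text), index_col=0)
print(list(data.columns))
```

The rest of the notebook only touches `data.Education` and `data.Income`, so either way of reading the file works for the regression itself.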

In [3]:

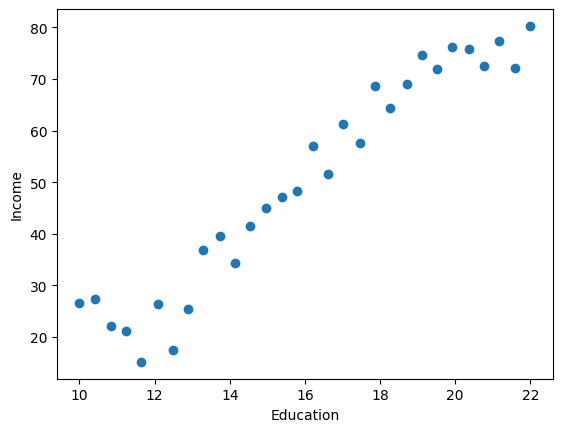

plt.scatter(data.Education, data.Income)

plt.xlabel('Education')

plt.ylabel('Income')

Out[3]:

Text(0, 0.5, 'Income')

In [4]:

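```python
# Goal: fit y = w*x + b, written out by hand with raw tensors
w = torch.randn(1, requires_grad=True)   # random initial weight
b = torch.zeros(1, requires_grad=True)   # bias starts at 0
learning_rate = 0.001
display(w, b)
```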

tensor([-0.5873], requires_grad=True)

tensor([0.], requires_grad=True)

In [5]:

```python
X = torch.from_numpy(data.Education.values.reshape(-1,1)).type(torch.float32)
Y = torch.from_numpy(data.Income.values.reshape(-1,1)).type(torch.float32)
```
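`reshape(-1, 1)` turns each column into a tensor of shape (30, 1), so `zip(X, Y)` yields one row at a time, each a 1-element tensor of shape (1,). With `w` also of shape (1,), `torch.matmul(x, w)` is a dot product of two 1-D tensors and returns a 0-d scalar, which `+ b` broadcasts back to shape (1,). On the full `X`, `torch.matmul(X, w)` is a matrix-vector product of shape (30,), which is what the plotting cell relies on. A small shape check, using three made-up values as a stand-in for the real column:

```python
import numpy as np
import torch

# Hypothetical stand-in for data.Education.values (three values instead of 30).
education = np.array([10.0, 10.4, 10.8])
X = torch.from_numpy(education.reshape(-1, 1)).type(torch.float32)
print(X.shape)                    # torch.Size([3, 1])

w = torch.randn(1, requires_grad=True)
b = torch.zeros(1, requires_grad=True)

x = X[0]                          # one sample, as produced by zip(X, Y)
print(x.shape)                    # torch.Size([1])
dot = torch.matmul(x, w)          # 1-D @ 1-D -> 0-d scalar
print(dot.shape)                  # torch.Size([])
y_pred = dot + b                  # broadcasting restores shape (1,)
print(y_pred.shape)               # torch.Size([1])

# Whole column at once: (3, 1) @ (1,) -> (3,)
print(torch.matmul(X, w).shape)   # torch.Size([3])
```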

In [6]:

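```python
# Training loop
for epoch in range(5000):
    for x, y in zip(X, Y):            # one sample at a time (per-sample SGD)
        y_pred = torch.matmul(x, w) + b
        # Loss: squared error for this sample
        loss = (y - y_pred).pow(2).sum()
        # Reset the gradients left over from the previous step
        if w.grad is not None:
            w.grad.zero_()
        if b.grad is not None:
            b.grad.zero_()
        # Backpropagate to compute the gradients of loss w.r.t. w and b
        loss.backward()
        # Update w and b without recording the update in the autograd graph
        with torch.no_grad():
            w -= w.grad * learning_rate
            b -= b.grad * learning_rate
```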
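For one sample the loss is L = (y - (w*x + b))^2, so by the chain rule dL/dw = -2 * x * (y - y_pred) and dL/db = -2 * (y - y_pred); each pass of the loop then applies w = w - learning_rate * dL/dw (and likewise for b). A quick sketch comparing these hand-derived gradients with what `loss.backward()` stores in `.grad` (the sample and parameter values are arbitrary, not from the dataset):

```python
import torch

# Arbitrary single sample and parameters (not taken from Income1.csv).
x = torch.tensor([2.0])
y = torch.tensor([7.0])
w = torch.tensor([0.5], requires_grad=True)
b = torch.tensor([1.0], requires_grad=True)

y_pred = torch.matmul(x, w) + b       # 2 * 0.5 + 1 = 2
loss = (y - y_pred).pow(2).sum()
loss.backward()

# Hand-derived gradients of (y - (w*x + b))^2
residual = (y - y_pred).detach()      # 7 - 2 = 5
grad_w_manual = -2 * x * residual     # -2 * 2 * 5 = -20
grad_b_manual = -2 * residual         # -2 * 5 = -10

print(w.grad, grad_w_manual)          # both should be -20
print(b.grad, grad_b_manual)          # both should be -10
```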

In [7]:

```python
display(w, b)
```

tensor([5.1266], requires_grad=True)

tensor([-32.6956], requires_grad=True)
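Because this is ordinary least squares, the optimum can also be computed directly, which is a handy way to check whether 5000 epochs of per-sample SGD actually reached it. A sketch using `torch.linalg.lstsq` on a design matrix with an extra column of ones for the bias; the helper name `closed_form_fit` is hypothetical, and the demo data is synthetic (y = 3x + 2) rather than Income1.csv:

```python
import torch


def closed_form_fit(X: torch.Tensor, Y: torch.Tensor) -> tuple[float, float]:
    """Hypothetical helper: least-squares w, b for Y ≈ w * X + b, X and Y of shape (n, 1)."""
    # Append a column of ones so the bias is estimated as a second coefficient;
    # solve in float64 for extra accuracy.
    A = torch.cat([X, torch.ones_like(X)], dim=1).double()   # shape (n, 2)
    solution = torch.linalg.lstsq(A, Y.double()).solution     # shape (2, 1)
    return solution[0, 0].item(), solution[1, 0].item()


# Synthetic, noise-free data: y = 3x + 2
X_demo = torch.linspace(10, 22, 30).reshape(-1, 1)
Y_demo = 3 * X_demo + 2
w_hat, b_hat = closed_form_fit(X_demo, Y_demo)
print(w_hat, b_hat)   # close to 3 and 2
```

In the notebook, `closed_form_fit(X, Y)` can be compared with the `w`, `b` printed above; a noticeable gap would suggest the SGD run stopped short of the optimum, for example because the inputs are unscaled and the samples are always visited in the same order.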

In [8]:

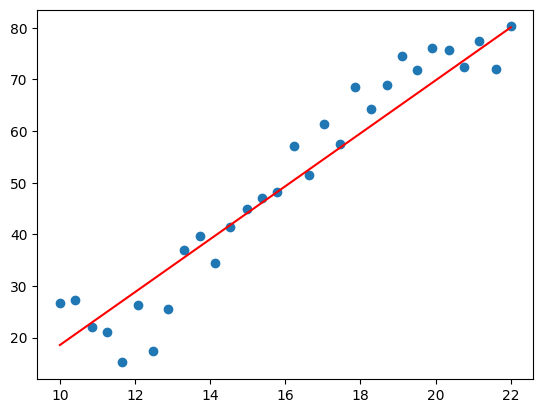

plt.scatter(data.Education, data.Income)

plt.plot(X.numpy(), (torch.matmul(X, w)+b).data.numpy(), color='red')

Out[8]:

[<matplotlib.lines.Line2D at 0x23382d9b5b0>]

### Linear regression in PyTorch: the packaged version with built-in modules

In [12]:

```python
model = nn.Linear(1, 1)
model
```

Out[12]:

Linear(in_features=1, out_features=1, bias=True)
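`nn.Linear(1, 1)` holds a weight of shape (out_features, in_features) = (1, 1) and a bias of shape (1,), and computes `x @ weight.T + bias`, i.e. the same w*x + b as the hand-written version. A quick check with a few arbitrary inputs:

```python
import torch
from torch import nn

torch.manual_seed(0)
model = nn.Linear(1, 1)
print(model.weight.shape, model.bias.shape)  # torch.Size([1, 1]) torch.Size([1])

x = torch.tensor([[10.0], [15.0], [22.0]])   # three inputs, shape (3, 1)
manual = x @ model.weight.T + model.bias      # explicit w*x + b
print(torch.allclose(model(x), manual))       # True
```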

In [15]:

```python
# Define the loss function
loss_fn = nn.MSELoss()
# Define the optimizer; the first argument is the model parameters it should update
opt = torch.optim.SGD(model.parameters(), lr=0.001)
```
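With its default settings (no momentum, no weight decay), `torch.optim.SGD` performs the same update as the manual loop: `p -= lr * p.grad` for every parameter it was given. A sketch comparing one optimizer step with the hand-written update on an identical copy of the model (the sample values are arbitrary):

```python
import copy

import torch
from torch import nn

torch.manual_seed(0)
model = nn.Linear(1, 1)
model_manual = copy.deepcopy(model)   # identical starting parameters
lr = 0.001
loss_fn = nn.MSELoss()

x = torch.tensor([12.0])
y = torch.tensor([30.0])

# Optimizer-based update
opt = torch.optim.SGD(model.parameters(), lr=lr)
opt.zero_grad()
loss_fn(model(x), y).backward()
opt.step()

# Hand-written update on the copy
loss_fn(model_manual(x), y).backward()
with torch.no_grad():
    for p in model_manual.parameters():
        p -= lr * p.grad

for p_opt, p_man in zip(model.parameters(), model_manual.parameters()):
    print(torch.allclose(p_opt, p_man))   # True for both weight and bias
```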

In [16]:

```python
# Training
for epoch in range(5000):
    for x, y in zip(X, Y):
        y_pred = model(x)
        loss = loss_fn(y_pred, y)   # MSELoss expects (input, target)
        # Zero the gradients
        opt.zero_grad()
        loss.backward()
        # Update the parameters
        opt.step()
```

In [18]:

```python
display(model.weight, model.bias)
```

Parameter containing:
tensor([[5.1265]], requires_grad=True)

Parameter containing:
tensor([-32.6946], requires_grad=True)
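The two versions end at almost the same parameters (5.1266 / -32.6956 by hand, 5.1265 / -32.6946 with `nn.Linear`). The main reason is that for a single sample `nn.MSELoss()` (default `reduction='mean'`) averages over just one element, so it equals the hand-written `(y - y_pred).pow(2).sum()`, and both loops take the same per-sample SGD steps with lr = 0.001; the small remaining gap presumably comes from the different initial values and floating-point rounding. A check of the loss equivalence, plus the batched case where the two differ:

```python
import torch
from torch import nn

loss_fn = nn.MSELoss()                 # default reduction='mean'
y = torch.tensor([26.658839])          # one target, shape (1,)
y_pred = torch.tensor([20.0])          # arbitrary prediction

mse = loss_fn(y_pred, y)
manual = (y - y_pred).pow(2).sum()
print(torch.allclose(mse, manual))     # True

# With a batch, 'mean' divides by the number of elements while .sum() does not.
Y = torch.tensor([1.0, 2.0, 3.0])
P = torch.tensor([0.0, 0.0, 0.0])
print(loss_fn(P, Y).item(), (Y - P).pow(2).sum().item())  # about 4.667 vs 14.0
```

So if the loop were rewritten to feed whole batches, switching between the two losses would effectively rescale the learning rate.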

浙公网安备 33010602011771号
