Hand-written AlexNet in TensorFlow for classifying 10-monkey-species

In [1]:

from tensorflow import keras
import tensorflow as tf
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt

In [9]:

def AlexNet(height=224, width=224, classes=10):
    input_image = keras.layers.Input(shape=(height, width, 3), dtype=tf.float32)
    # Pad 224x224 to 227x227 so the 11x11, stride-4 convolution yields 55x55
    x = keras.layers.ZeroPadding2D(((1,2), (1,2)))(input_image)
    x = keras.layers.Conv2D(48, kernel_size=11, strides=4, activation='selu')(x)
    x = keras.layers.MaxPool2D(pool_size=3, strides=2)(x)
    x = keras.layers.Conv2D(128, kernel_size=5, padding='same', activation='selu')(x)
    x = keras.layers.MaxPool2D(pool_size=3, strides=2)(x)
    x = keras.layers.Conv2D(192, kernel_size=3, padding='same', activation='selu')(x)
    x = keras.layers.MaxPool2D(pool_size=3, strides=2)(x)
    x = keras.layers.Conv2D(192, kernel_size=3, padding='same', activation='selu')(x)
    x = keras.layers.Conv2D(192, kernel_size=3, padding='same', activation='selu')(x)
    x = keras.layers.Conv2D(128, kernel_size=3, padding='same', activation='selu')(x)
    x = keras.layers.MaxPool2D(pool_size=3, strides=2)(x)
    # Fully connected layers
    x = keras.layers.Flatten()(x)
    x = keras.layers.AlphaDropout(0.2)(x)
    x = keras.layers.Dense(2048, activation='selu')(x)
    x = keras.layers.AlphaDropout(0.2)(x)
    x = keras.layers.Dense(2048, activation='selu')(x)
    x = keras.layers.Dense(classes)(x)
    predict = keras.layers.Softmax()(x)
    return keras.models.Model(inputs=input_image, outputs=predict)
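The spatial sizes this network produces can be checked by hand with the usual formula floor((n - k) / s) + 1 for an unpadded window. The sketch below is plain Python and does not need TensorFlow; the helper names `conv_out` and `trace_shapes` are made up for illustration and are not part of Keras.

```python
def conv_out(size, kernel, stride=1):
    """Output length along one axis for an unpadded ('valid') conv or pool window."""
    return (size - kernel) // stride + 1


def trace_shapes(size=224):
    """Follow one spatial axis through the layers defined in AlexNet()."""
    sizes = []
    size = size + 1 + 2                  # ZeroPadding2D((1, 2)) adds 3 pixels per axis
    sizes.append(size)
    size = conv_out(size, 11, stride=4)  # first Conv2D: 11x11 kernel, stride 4
    sizes.append(size)
    # The remaining convolutions use padding='same' and stride 1, so they keep
    # the spatial size; only the four 3x3, stride-2 max-pools shrink it.
    for _ in range(4):
        size = conv_out(size, 3, stride=2)
        sizes.append(size)
    return sizes


sizes = trace_shapes(224)
flat_features = sizes[-1] ** 2 * 128     # 128 channels leave the last conv layer
print(sizes, flat_features)
```

The last value is the number of features that reach `Flatten`, so it should agree with the input width of the first `Dense` layer reported by `model.summary()`.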

In [3]:

# Dataset download: https://www.kaggle.com/datasets/slothkong/10-monkey-species
train_dir = './10-monkey-species/training/training'
valid_dir = './10-monkey-species/validation/validation'
label_file = './10-monkey-species/monkey_labels.txt'
df = pd.read_csv(label_file, header=0)
df

| | Label | Latin Name | Common Name | Train Images | Validation Images |
|---|---|---|---|---|---|
| 0 | n0 | alouatta_palliata\t | mantled_howler | 131 | 26 |
| 1 | n1 | erythrocebus_patas\t | patas_monkey | 139 | 28 |
| 2 | n2 | cacajao_calvus\t | bald_uakari | 137 | 27 |
| 3 | n3 | macaca_fuscata\t | japanese_macaque | 152 | 30 |
| 4 | n4 | cebuella_pygmea\t | pygmy_marmoset | 131 | 26 |
| 5 | n5 | cebus_capucinus\t | white_headed_capuchin | 141 | 28 |
| 6 | n6 | mico_argentatus\t | silvery_marmoset | 132 | 26 |
| 7 | n7 | saimiri_sciureus\t | common_squirrel_monkey | 142 | 28 |
| 8 | n8 | aotus_nigriceps\t | black_headed_night_monkey | 133 | 27 |
| 9 | n9 | trachypithecus_johnii | nilgiri_langur | 132 | 26 |

In [4]:

# Image data generators
height = 224
width = 224
channels = 3
batch_size = 32
classes = 10

train_datagen = keras.preprocessing.image.ImageDataGenerator(
    rescale = 1. / 255,       # scale pixel values to floats in [0, 1]
    rotation_range = 40,      # random rotation within ±40 degrees
    width_shift_range = 0.2,  # random horizontal shift, as a fraction of the width
    height_shift_range = 0.2, # random vertical shift, as a fraction of the height
    shear_range = 0.2,        # random shear intensity
    zoom_range = 0.2,         # random zoom factor in [0.8, 1.2]
    horizontal_flip = True,   # random horizontal flip
    vertical_flip = True,     # random vertical flip
    fill_mode = 'nearest',    # how pixels exposed by a transform are filled
)
train_generator = train_datagen.flow_from_directory(train_dir, target_size=(height, width),
                                                    batch_size=batch_size, shuffle=True, class_mode='categorical')

# Validation images are only rescaled, never augmented
valid_datagen = keras.preprocessing.image.ImageDataGenerator(
    rescale = 1. / 255,       # scale pixel values to floats in [0, 1]
)
valid_generator = valid_datagen.flow_from_directory(valid_dir, target_size=(height, width),
                                                    batch_size=batch_size, shuffle=False, class_mode='categorical')

Found 1098 images belonging to 10 classes.

Found 272 images belonging to 10 classes.
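`flow_from_directory` infers the classes from the subdirectory names in alphanumeric order, and `class_mode='categorical'` yields one-hot label rows. The NumPy-only sketch below imitates that convention for the folder names `n0` to `n9` from the label table. It illustrates the mapping and is not the Keras implementation; the `one_hot` helper is hypothetical.

```python
import numpy as np

# Subdirectory names as listed in the "Label" column of monkey_labels.txt
folder_names = [f"n{i}" for i in range(10)]

# Sorted folder name -> integer class index
class_indices = {name: idx for idx, name in enumerate(sorted(folder_names))}


def one_hot(label_idx, num_classes=10):
    """Build a float32 one-hot row like the labels a 'categorical' generator yields."""
    row = np.zeros(num_classes, dtype=np.float32)
    row[label_idx] = 1.0
    return row


# rescale=1/255 maps uint8 pixel values into the range [0, 1]
pixels = np.array([0, 128, 255], dtype=np.uint8)
scaled = pixels * (1.0 / 255)

print(class_indices["n3"], one_hot(class_indices["n3"]))
print(scaled)
```

On the real generator, the actual mapping can be inspected through `train_generator.class_indices`.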

In [5]:

# Build and compile the model
model = AlexNet(height=height, width=width, classes=classes)
model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['acc'])
model.summary()

Model: "model"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| input_1 (InputLayer) | [(None, 224, 224, 3)] | 0 |
| zero_padding2d (ZeroPadding2D) | (None, 227, 227, 3) | 0 |
| conv2d (Conv2D) | (None, 55, 55, 48) | 17472 |
| max_pooling2d (MaxPooling2D) | (None, 27, 27, 48) | 0 |
| conv2d_1 (Conv2D) | (None, 27, 27, 128) | 153728 |
| max_pooling2d_1 (MaxPooling2D) | (None, 13, 13, 128) | 0 |
| conv2d_2 (Conv2D) | (None, 13, 13, 192) | 221376 |
| max_pooling2d_2 (MaxPooling2D) | (None, 6, 6, 192) | 0 |
| conv2d_3 (Conv2D) | (None, 6, 6, 192) | 331968 |
| conv2d_4 (Conv2D) | (None, 6, 6, 192) | 331968 |
| conv2d_5 (Conv2D) | (None, 6, 6, 128) | 221312 |
| max_pooling2d_3 (MaxPooling2D) | (None, 2, 2, 128) | 0 |
| flatten (Flatten) | (None, 512) | 0 |
| alpha_dropout (AlphaDropout) | (None, 512) | 0 |
| dense (Dense) | (None, 2048) | 1050624 |
| alpha_dropout_1 (AlphaDropout) | (None, 2048) | 0 |
| dense_1 (Dense) | (None, 2048) | 4196352 |
| dense_2 (Dense) | (None, 10) | 20490 |
| softmax (Softmax) | (None, 10) | 0 |

Total params: 6545290 (24.97 MB)

Trainable params: 6545290 (24.97 MB)

Non-trainable params: 0 (0.00 Byte)
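The parameter counts in the summary follow from two small formulas: a convolution has kernel x kernel x in_channels x out_channels weights plus one bias per output channel, and a dense layer has in_units x out_units weights plus one bias per output unit. A minimal sketch in plain Python, with the hypothetical helper names `conv_params` and `dense_params`:

```python
def conv_params(kernel, in_ch, out_ch):
    """Weights plus biases of a square-kernel Conv2D layer."""
    return kernel * kernel * in_ch * out_ch + out_ch


def dense_params(in_units, out_units):
    """Weights plus biases of a Dense layer."""
    return in_units * out_units + out_units


layer_params = {
    "conv2d":   conv_params(11, 3, 48),
    "conv2d_1": conv_params(5, 48, 128),
    "conv2d_2": conv_params(3, 128, 192),
    "conv2d_3": conv_params(3, 192, 192),
    "conv2d_4": conv_params(3, 192, 192),
    "conv2d_5": conv_params(3, 192, 128),
    "dense":    dense_params(2 * 2 * 128, 2048),  # Flatten output is 2*2*128
    "dense_1":  dense_params(2048, 2048),
    "dense_2":  dense_params(2048, 10),
}

total = sum(layer_params.values())
size_mb = total * 4 / 2**20   # float32 weights take 4 bytes each

for name, count in layer_params.items():
    print(f"{name:10s} {count}")
print(total, round(size_mb, 2))
```

Pooling, padding, dropout, flatten and softmax layers have no trainable weights, which is why they show 0 in the summary.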

In [6]:

# Train
history = model.fit(train_generator, steps_per_epoch=train_generator.samples//batch_size, epochs=150,
                    validation_data=valid_generator, validation_steps=valid_generator.samples//batch_size)

Epoch 1/150
34/34 [==============================] - 20s 561ms/step - loss: 23.3229 - acc: 0.0901 - val_loss: 5.9779 - val_acc: 0.1016
Epoch 2/150
34/34 [==============================] - 19s 559ms/step - loss: 4.7627 - acc: 0.0769 - val_loss: 2.6323 - val_acc: 0.1016
Epoch 3/150
34/34 [==============================] - 19s 570ms/step - loss: 2.6522 - acc: 0.0910 - val_loss: 2.6220 - val_acc: 0.1094
Epoch 4/150
34/34 [==============================] - 19s 539ms/step - loss: 2.4508 - acc: 0.0919 - val_loss: 2.3987 - val_acc: 0.1016
Epoch 5/150
34/34 [==============================] - 18s 539ms/step - loss: 2.5152 - acc: 0.0985 - val_loss: 2.5122 - val_acc: 0.1094
......
Epoch 65/150
34/34 [==============================] - 18s 536ms/step - loss: 2.0745 - acc: 0.2693 - val_loss: 2.2072 - val_acc: 0.3086
Epoch 66/150
34/34 [==============================] - 18s 526ms/step - loss: 1.9485 - acc: 0.2983 - val_loss: 1.9713 - val_acc: 0.3867
Epoch 67/150
34/34 [==============================] - 18s 541ms/step - loss: 2.0011 - acc: 0.2777 - val_loss: 2.0079 - val_acc: 0.3672
Epoch 68/150
34/34 [==============================] - 18s 533ms/step - loss: 1.9882 - acc: 0.2936 - val_loss: 2.3509 - val_acc: 0.3320
Epoch 69/150
34/34 [==============================] - 18s 539ms/step - loss: 2.0199 - acc: 0.2871 - val_loss: 2.0326 - val_acc: 0.3555
Epoch 70/150
.......
Epoch 146/150
34/34 [==============================] - 18s 533ms/step - loss: 1.3461 - acc: 0.5235 - val_loss: 1.6899 - val_acc: 0.5898
Epoch 147/150
34/34 [==============================] - 18s 543ms/step - loss: 1.4630 - acc: 0.5122 - val_loss: 1.6531 - val_acc: 0.6172
Epoch 148/150
34/34 [==============================] - 18s 530ms/step - loss: 1.4329 - acc: 0.5056 - val_loss: 1.6652 - val_acc: 0.5898
Epoch 149/150
34/34 [==============================] - 19s 545ms/step - loss: 1.3375 - acc: 0.5281 - val_loss: 1.8808 - val_acc: 0.5273
Epoch 150/150
34/34 [==============================] - 18s 539ms/step - loss: 1.4911 - acc: 0.5159 - val_loss: 1.4701 - val_acc: 0.5859

In [7]:

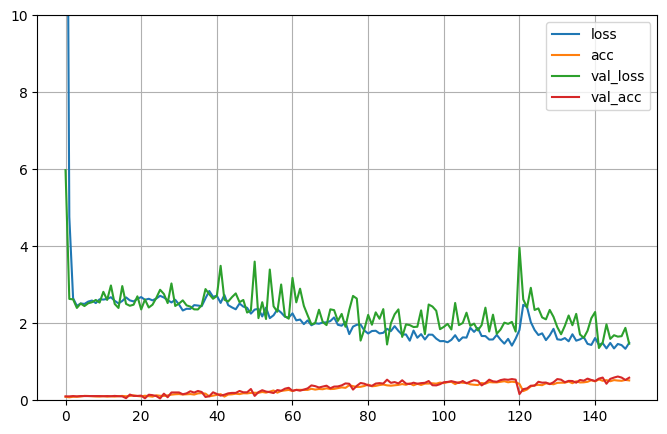

pd.DataFrame(history.history).plot(figsize=(8, 5))

plt.grid()

plt.gca().set_ylim(0, 10)

plt.show()

In [8]:

model.evaluate(valid_generator)

9/9 [==============================] - 2s 255ms/step - loss: 1.4895 - acc: 0.5699

Out[8]:

[1.4895002841949463, 0.5698529481887817]
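The progress bars show 34 steps per training epoch and 9 steps for `model.evaluate`, while `validation_steps` during `fit` was `272 // 32`, which is 8. The sketch below works through that batch arithmetic in plain Python; `batch_plan` is a hypothetical helper. It suggests that validation inside `fit` saw 256 images and the final `evaluate` call saw all 272, which would account for the gap between the last `val_acc` (0.5859) and the evaluated accuracy (0.5699).

```python
import math


def batch_plan(num_samples, batch_size):
    """Return (full batches, batches needed to cover everything, samples left over)."""
    full_steps = num_samples // batch_size
    all_steps = math.ceil(num_samples / batch_size)
    leftover = num_samples - full_steps * batch_size
    return full_steps, all_steps, leftover


train_plan = batch_plan(1098, 32)   # training images found by flow_from_directory
valid_plan = batch_plan(272, 32)    # validation images found by flow_from_directory

print("train:", train_plan)
print("valid:", valid_plan)

# The reported accuracies are consistent with whole-image counts:
print(150 / 256)   # compare with val_acc of epoch 150
print(155 / 272)   # compare with the accuracy returned by model.evaluate
```

Using `math.ceil(samples / batch_size)` for both step counts would make validation inside `fit` cover the same images as `evaluate`, at the cost of one smaller final batch.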
