celery

Celery is a Python-based module that helps us distribute and process tasks.
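To make "distribute and process" concrete, here is a toy model of the moving parts, using only the standard library. It is not Celery itself: the `broker` queue stands in for Redis/RabbitMQ, the `backend` dict stands in for the result store, and the names `delay`, `worker` and `TASKS` are illustrative assumptions.

```python
import queue
import threading
import uuid

broker = queue.Queue()  # stands in for the message broker (Redis/RabbitMQ)
backend = {}            # stands in for the result backend


def add(x, y):
    return x + y


TASKS = {"add": add}    # registry of task names known to the worker


def delay(name, *args):
    """Producer side: enqueue a message and return a task id immediately."""
    task_id = str(uuid.uuid4())
    broker.put((task_id, name, args))
    return task_id


def worker():
    """Worker side: pull messages, run the task, store the result."""
    while True:
        message = broker.get()
        if message is None:  # sentinel value: stop the worker
            broker.task_done()
            break
        task_id, name, args = message
        backend[task_id] = TASKS[name](*args)
        broker.task_done()


thread = threading.Thread(target=worker, daemon=True)
thread.start()

task_id = delay("add", 4, 4)  # returns at once; the work happens in the worker
broker.join()                 # wait until the queued message has been processed
print(backend[task_id])

broker.put(None)              # shut the worker down
thread.join()
```

The caller only ever holds a task id; the result is looked up later by that id, which is the same pattern the s2.py / s3.py pair in the quick start follows.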

1.1 Setting up the environment

pip3 install celery==4.4

Install a broker: Redis or RabbitMQ.

pip3 install redis / pika

On Windows, additionally install: pip install eventlet

1.2 Quick start

- s1.py

    from celery import Celery

    app = Celery('tasks', broker='redis://192.168.10.48:6379', backend='redis://192.168.10.48:6379')


    @app.task
    def x1(x, y):
        return x + y


    @app.task
    def x2(x, y):
        return x - y

- s2.py

    import datetime

    from s1 import x1

    # Run immediately
    # result = x1.delay(4, 4)

    # Run at a scheduled time (the ETA is passed as a UTC datetime)
    ctime = datetime.datetime.now()
    utc_ctime = datetime.datetime.utcfromtimestamp(ctime.timestamp())
    s10 = datetime.timedelta(seconds=10)
    ctime_x = utc_ctime + s10
    result = x1.apply_async(args=[4, 5], eta=ctime_x)
    print(result.id)

- s3.py

    from celery.result import AsyncResult

    from s1 import app

    result_object = AsyncResult(id="task ID", app=app)
    print(result_object.status)
    data = result_object.get()
    print(data)

Running the program:

- Start Redis

- Start the worker

    # Change into the current directory first
    celery worker -A s1 -l info
    celery worker -A s1 -l info -P eventlet

  Note for Windows environments, where the following error can appear:

    Traceback (most recent call last):
      File "d:\wupeiqi\py_virtual_envs\auction\lib\site-packages\billiard\pool.py", line 362, in workloop
        result = (True, prepare_result(fun(*args, **kwargs)))
      File "d:\wupeiqi\py_virtual_envs\auction\lib\site-packages\celery\app\trace.py", line 546, in _fast_trace_task
        tasks, accept, hostname = _loc
    ValueError: not enough values to unpack (expected 3, got 0)

  Install eventlet and start the worker with the eventlet pool:

    pip install eventlet
    celery worker -A s1 -l info -P eventlet

- Create a task

    python s2.py

- Check the task status

    # Fill in the task ID in s3.py first
    python s3.py

1.3 Using Celery in Django

Next, the code has to be written the way the Django/Celery integration expects.

- Step 1: add the configuration to [project/project/settings.py]

    CELERY_BROKER_URL = 'redis://192.168.16.85:6379'
    CELERY_ACCEPT_CONTENT = ['json']
    CELERY_RESULT_BACKEND = 'redis://192.168.16.85:6379'
    CELERY_TASK_SERIALIZER = 'json'

- Step 2: create celery.py in the directory that shares the project's name [project/project/celery.py]

    import os

    from celery import Celery

    # set the default Django settings module for the 'celery' program.
    os.environ.setdefault('DJANGO_SETTINGS_MODULE', 'auction.settings')

    app = Celery('django_celery_demo')  # this name is arbitrary

    # Using a string here means the worker doesn't have to serialize
    # the configuration object to child processes.
    # - namespace='CELERY' means all celery-related configuration keys
    #   should have a `CELERY_` prefix.
    app.config_from_object('django.conf:settings', namespace='CELERY')

    # Load task modules from all registered Django app configs,
    # i.e. read the tasks.py file of every registered app.
    app.autodiscover_tasks()

- Step 3: [project/app name/tasks.py]

    from celery import shared_task


    @shared_task
    def add(x, y):
        return x + y


    @shared_task
    def mul(x, y):
        return x * y

- Step 4: [project/project/__init__.py]

    from .celery import app as celery_app

    __all__ = ('celery_app',)

- Start the worker

    # Change into the project directory
    celery worker -A demos -l info -P eventlet

  Starting with Celery 5.0, the -A option must be given as a global option, before the worker subcommand rather than after it:

    celery -A aiko5_celery worker -l info -P eventlet

- Write view functions that call Celery to create tasks.

  - url

    url(r'^create/task/$', task.create_task),
    url(r'^get/result/$', task.get_result),

  - View functions

    from celery.result import AsyncResult
    from django.shortcuts import HttpResponse

    from api.tasks import add
    from demos import celery_app  # same object as: from demos.celery import app


    def create_task(request):
        print('request received')
        result = add.delay(2, 2)
        print('done')
        return HttpResponse(result.id)


    def get_result(request):
        nid = request.GET.get('nid')
        result_object = AsyncResult(id=nid, app=celery_app)
        # print(result_object.status)
        data = result_object.get()  # fetch the result (waits until the task has finished)
        # result_object.forget()  # remove the data from the backend
        # result_object.revoke()  # cancel the task
        # result_object.revoke(terminate=True)  # forcibly cancel the task
        if result_object.successful():
            result_object.get()
            result_object.forget()
        elif result_object.failed():
            pass
        else:
            pass
        return HttpResponse(data)

- Start the Django application

    python manage.py ....
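The `get_result` view calls `get()`, which waits for the task to finish. A minimal sketch of a non-blocking alternative is shown here, assuming the result object exposes the `AsyncResult` members `state`, `ready()`, `successful()` and `result`. The helper name `summarize` and the `FakeResult` stand-in are hypothetical; the stand-in exists only so the sketch runs without a broker.

```python
def summarize(result):
    """Build a response payload without waiting on an unfinished task."""
    if not result.ready():
        # Still pending, started or retrying: report the state only.
        return {"state": result.state, "data": None}
    if result.successful():
        return {"state": result.state, "data": result.result}
    # Finished without success: `result.result` holds the exception instance.
    return {"state": result.state, "error": str(result.result)}


class FakeResult:
    """Stand-in for celery.result.AsyncResult (assumption: same member names)."""

    def __init__(self, state, result=None):
        self.state = state
        self.result = result

    def ready(self):
        return self.state in {"SUCCESS", "FAILURE", "REVOKED"}

    def successful(self):
        return self.state == "SUCCESS"


print(summarize(FakeResult("PENDING")))
print(summarize(FakeResult("SUCCESS", 4)))
print(summarize(FakeResult("FAILURE", ValueError("boom"))))
```

In a view, the same function could be applied to `AsyncResult(id=nid, app=celery_app)`, so the client polls until the state reports completion instead of holding the request open.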

1.4 Scheduled execution with Celery

    # Scheduled execution: run the task 10 seconds from now (ETA given in UTC)
    ctime = datetime.datetime.now()
    utc_ctime = datetime.datetime.utcfromtimestamp(ctime.timestamp())
    s10 = datetime.timedelta(seconds=10)
    ctime_x = utc_ctime + s10
    result = add.apply_async(args=[4, 5], eta=ctime_x)
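The same ETA can also be built as a timezone-aware UTC datetime, which avoids `datetime.utcfromtimestamp` (deprecated since Python 3.12). This is a sketch using only the standard library; the helper name `eta_in` is an assumption, and the `apply_async` call is left commented out because it needs a running broker.

```python
from datetime import datetime, timedelta, timezone


def eta_in(seconds):
    """Return a timezone-aware UTC datetime `seconds` from now."""
    return datetime.now(timezone.utc) + timedelta(seconds=seconds)


eta = eta_in(10)
print(eta.isoformat())

# Hypothetical usage with the task from this section:
# result = add.apply_async(args=[4, 5], eta=eta)
```

For a plain "run in N seconds" delay, `apply_async` also accepts `countdown=N`, which skips the datetime arithmetic entirely.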

1.5 Periodic scheduled tasks

- celery

- It can also be used together with Django

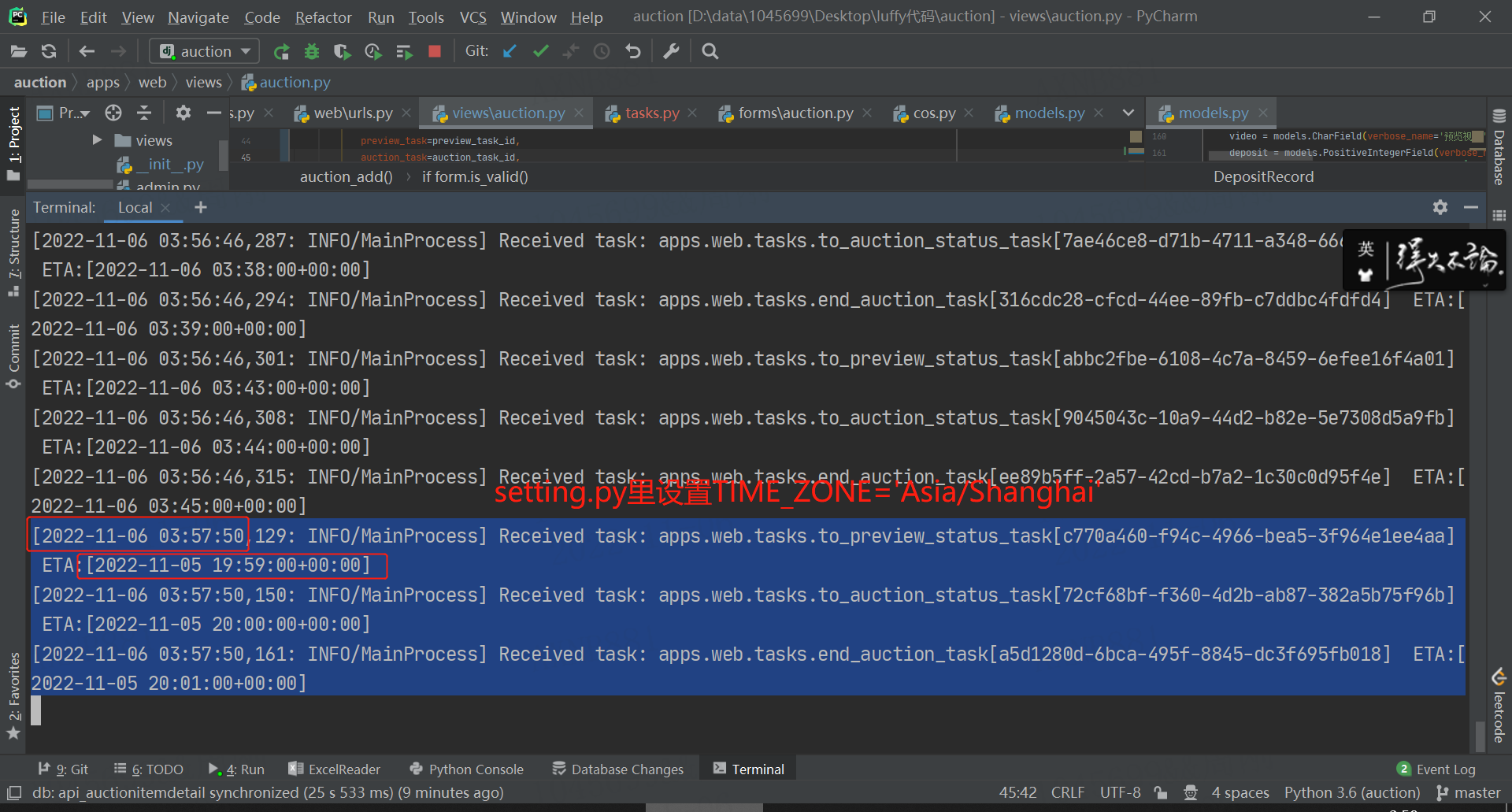

Example: creating an auction session with three scheduled tasks

models.py

    from django.db import models


    class Auction(models.Model):
        """
        Auction session series
        """
        status_choices = (
            (1, 'Not started'),
            (2, 'In preview'),
            (3, 'In auction'),
            (4, 'Ended'),
        )
        status = models.PositiveSmallIntegerField(verbose_name='Status', choices=status_choices, default=1)
        title = models.CharField(verbose_name='Title', max_length=32)
        # FileField = a CharField that stores the file path
        #             + a file input when rendered by a ModelForm
        #             + saving the file on ModelForm.save()
        cover = models.FileField(verbose_name='Cover', max_length=128)
        video = models.CharField(verbose_name='Preview video', max_length=128, null=True, blank=True)

        preview_start_time = models.DateTimeField(verbose_name='Preview start time')
        preview_end_time = models.DateTimeField(verbose_name='Preview end time')

        auction_start_time = models.DateTimeField(verbose_name='Auction start time')
        auction_end_time = models.DateTimeField(verbose_name='Auction end time')

        deposit = models.PositiveIntegerField(verbose_name='Session deposit', default=1000)
        total_price = models.PositiveIntegerField(verbose_name='Total sales', null=True, blank=True)
        goods_count = models.PositiveIntegerField(verbose_name='Number of lots', default=0)
        bid_count = models.PositiveIntegerField(verbose_name='Number of bids', default=0)
        look_count = models.PositiveIntegerField(verbose_name='Number of views', default=0)

        create_time = models.DateTimeField(verbose_name='Created at', auto_now_add=True)

        class Meta:
            verbose_name_plural = 'Auction series'

        def __str__(self):
            return self.title


    class AuctionTask(models.Model):
        """ Scheduled tasks """
        auction = models.OneToOneField(verbose_name='Session', to='Auction', on_delete=models.CASCADE)
        preview_task = models.CharField(verbose_name='Celery preview task ID', max_length=64)
        auction_task = models.CharField(verbose_name='Celery auction task ID', max_length=64)
        auction_end_task = models.CharField(verbose_name='Celery auction end task ID', max_length=64)

views.py

    import datetime

    from django.shortcuts import render, redirect

    # `models`, `tasks` and `AuctionModelForm` come from the project's own modules;
    # their import paths are not shown here.


    def auction_add(request):
        """Add an auction session"""
        if request.method == "GET":
            form = AuctionModelForm()
            return render(request, 'web/auction_form.html', {'form': form})

        form = AuctionModelForm(data=request.POST, files=request.FILES)
        if form.is_valid():
            instance = form.save()

            # Create the three scheduled tasks
            # Scheduled task 1: not started -> in preview
            preview_utc_datetime = datetime.datetime.utcfromtimestamp(form.instance.preview_start_time.timestamp())
            preview_task_id = tasks.to_preview_status_task.apply_async(args=[instance.id, ], eta=preview_utc_datetime).id

            # Scheduled task 2: in preview -> in auction
            auction_utc_datetime = datetime.datetime.utcfromtimestamp(form.instance.auction_start_time.timestamp())
            auction_task_id = tasks.to_auction_status_task.apply_async(args=[instance.id, ], eta=auction_utc_datetime).id

            # Scheduled task 3: in auction -> ended
            auction_end_utc_datetime = datetime.datetime.utcfromtimestamp(form.instance.auction_end_time.timestamp())
            auction_end_task_id = tasks.end_auction_task.apply_async(args=[instance.id, ], eta=auction_end_utc_datetime).id

            # Store the task IDs so the tasks can be looked up later
            models.AuctionTask.objects.create(
                auction=instance,
                preview_task=preview_task_id,
                auction_task=auction_task_id,
                auction_end_task=auction_end_task_id
            )

            return redirect('auction_list')

        return render(request, 'web/auction_form.html', {'form': form})
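The view repeats the same local-to-UTC conversion three times. A small helper can express it once; the sketch below uses only the standard library. The name `to_naive_utc` is hypothetical, and the UTC+8 offset in the usage lines is an assumption chosen to match the worker log in this section, where a task that ran at 04:08 local time was scheduled with ETA 20:08 UTC.

```python
from datetime import datetime, timedelta, timezone


def to_naive_utc(dt):
    """Convert a datetime to a naive UTC datetime.

    Gives the same value as datetime.utcfromtimestamp(dt.timestamp()),
    without relying on the deprecated utcfromtimestamp().
    """
    return datetime.fromtimestamp(dt.timestamp(), tz=timezone.utc).replace(tzinfo=None)


# Assumed local zone: UTC+8
local_zone = timezone(timedelta(hours=8))
preview_start = datetime(2022, 11, 6, 4, 8, tzinfo=local_zone)

print(to_naive_utc(preview_start))
```

With such a helper, each `eta=` argument in the view would read `eta=to_naive_utc(instance.preview_start_time)` and so on for the other two fields.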

tasks.py

    import uuid

    from celery import shared_task

    from apps.api import models


    @shared_task
    def to_preview_status_task(auction_id):
        """Not started -> in preview"""
        models.Auction.objects.filter(id=auction_id).update(status=2)
        models.AuctionItem.objects.filter(auction_id=auction_id).update(status=2)


    @shared_task
    def to_auction_status_task(auction_id):
        """In preview -> in auction"""
        models.Auction.objects.filter(id=auction_id).update(status=3)
        models.AuctionItem.objects.filter(auction_id=auction_id).update(status=3)


    @shared_task
    def end_auction_task(auction_id):
        """In auction -> ended"""
        models.Auction.objects.filter(id=auction_id).update(status=4)
        models.AuctionItem.objects.filter(auction_id=auction_id).update(status=4)
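The three tasks together describe a linear status flow: 1 -> 2 -> 3 -> 4. As written, each task sets its target status unconditionally. The sketch below shows one possible way to make the expected step explicit. It is plain Python for illustration, not the tasks' actual behavior, and the names `NEXT_STATUS` and `advance` are assumptions.

```python
# Status codes as defined in Auction.status_choices
NOT_STARTED, IN_PREVIEW, IN_AUCTION, ENDED = 1, 2, 3, 4

# Each scheduled task moves an auction exactly one step forward.
NEXT_STATUS = {
    NOT_STARTED: IN_PREVIEW,
    IN_PREVIEW: IN_AUCTION,
    IN_AUCTION: ENDED,
}


def advance(current, target):
    """Return `target` if current -> target is the expected step, else keep `current`."""
    if NEXT_STATUS.get(current) == target:
        return target
    return current


print(advance(NOT_STARTED, IN_PREVIEW))  # expected step
print(advance(NOT_STARTED, IN_AUCTION))  # skips a step, so the status is kept
```

In the ORM, the equivalent guard would be to add the expected current status to the filter, for example `filter(id=auction_id, status=1).update(status=2)`, so a task that fires out of order changes nothing.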

Celery reports an error at startup: django.db.utils.DatabaseError: DatabaseWrapper objects created in a thread can only be used in that same thread. The object with alias 'default' was created in thread id 2201580366656 and this is thread id 2201786259232

Change the startup command to: celery -A yourapp.celery worker --loglevel=info --pool=solo

celery worker -A auction -l info --pool=solo

     -------------- celery@AXNB881 v4.4.0 (cliffs)
    --- ***** -----
    -- ******* ---- Windows-10-10.0.19041-SP0 2022-11-06 04:05:27
    - *** --- * ---
    - ** ---------- [config]
    - ** ---------- .> app:         django_celery_demo:0x2f429c457b8
    - ** ---------- .> transport:   redis://127.0.0.1:6379//
    - ** ---------- .> results:     redis://127.0.0.1:6379/
    - *** --- * --- .> concurrency: 8 (solo)
    -- ******* ---- .> task events: OFF (enable -E to monitor tasks in this worker)
    --- ***** -----
     -------------- [queues]
                    .> celery           exchange=celery(direct) key=celery

    [tasks]
      . apps.api.tasks.add
      . apps.api.tasks.mul
      . apps.web.tasks.end_auction_task
      . apps.web.tasks.to_auction_status_task
      . apps.web.tasks.to_preview_status_task

    [2022-11-06 04:05:27,539: INFO/MainProcess] Connected to redis://127.0.0.1:6379//
    [2022-11-06 04:05:27,556: INFO/MainProcess] mingle: searching for neighbors
    [2022-11-06 04:05:28,650: INFO/MainProcess] mingle: all alone
    [2022-11-06 04:05:28,751: INFO/MainProcess] celery@AXNB881 ready.
    [2022-11-06 04:06:28,294: INFO/MainProcess] Received task: apps.web.tasks.to_preview_status_task[99f50a50-39d7-43ec-9fda-03f80fc9e42f] ETA:[2022-11-05 20:08:00+00:00]
    [2022-11-06 04:06:28,309: INFO/MainProcess] Received task: apps.web.tasks.to_auction_status_task[efe22ea6-1b9c-4bc0-af0e-40f8c2c7e8fa] ETA:[2022-11-05 20:09:00+00:00]
    [2022-11-06 04:06:28,328: INFO/MainProcess] Received task: apps.web.tasks.end_auction_task[88fc1448-76f7-4da3-8863-f90667e8b172] ETA:[2022-11-05 20:10:00+00:00]
    [2022-11-06 04:08:00,040: INFO/MainProcess] Task apps.web.tasks.to_preview_status_task[99f50a50-39d7-43ec-9fda-03f80fc9e42f] succeeded in 0.030999999988125637s: None
    [2022-11-06 04:09:00,032: INFO/MainProcess] Task apps.web.tasks.to_auction_status_task[efe22ea6-1b9c-4bc0-af0e-40f8c2c7e8fa] succeeded in 0.014999999984866008s: None
    [2022-11-06 04:10:00,021: INFO/MainProcess] Task apps.web.tasks.end_auction_task[88fc1448-76f7-4da3-8863-f90667e8b172] succeeded in 0.030999999988125637s: None

    worker: Hitting Ctrl+C again will terminate all running tasks!

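Note 1: mind the time settings in the configuration; the ETA values above are given in UTC.

Note 2: Celery tasks can call one another directly.

    @shared_task
    def f1(order_id):
        result = f2.delay(...)
        result.id


    @shared_task
    def f2(order_id):
        pass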

Note 3: the arguments passed when calling a Celery task must be JSON-serializable.

    task_id = twenty_four_hour.apply_async(args=[order_object.id], eta=date).id


    @shared_task
    def twenty_four_hour(order_id):  # the argument must be JSON-serializable
        pass
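Whether an argument list is JSON-serializable can be checked up front with the standard `json` module. The following is a sketch under stated assumptions: the helper `check_task_args` and the `Order` class are hypothetical stand-ins, not part of Celery or of the project above.

```python
import json


def check_task_args(args):
    """Return `args` unchanged, or raise TypeError if they cannot be encoded as JSON."""
    json.dumps(args)
    return args


class Order:
    """Hypothetical stand-in for a model instance."""

    def __init__(self, id):
        self.id = id


order_object = Order(7)

# Passing the primary key works: an int is JSON-serializable.
print(check_task_args([order_object.id]))

# Passing the object itself does not.
try:
    check_task_args([order_object])
except TypeError as exc:
    print("rejected:", exc)
```

This is why the call above passes `order_object.id` rather than `order_object`: the task receives the ID and loads the row itself.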

Official documentation: https://docs.celeryq.dev/en/latest/django/first-steps-with-django.html#using-celery-with-django

