Handwritten Digit Recognition with ResNet18

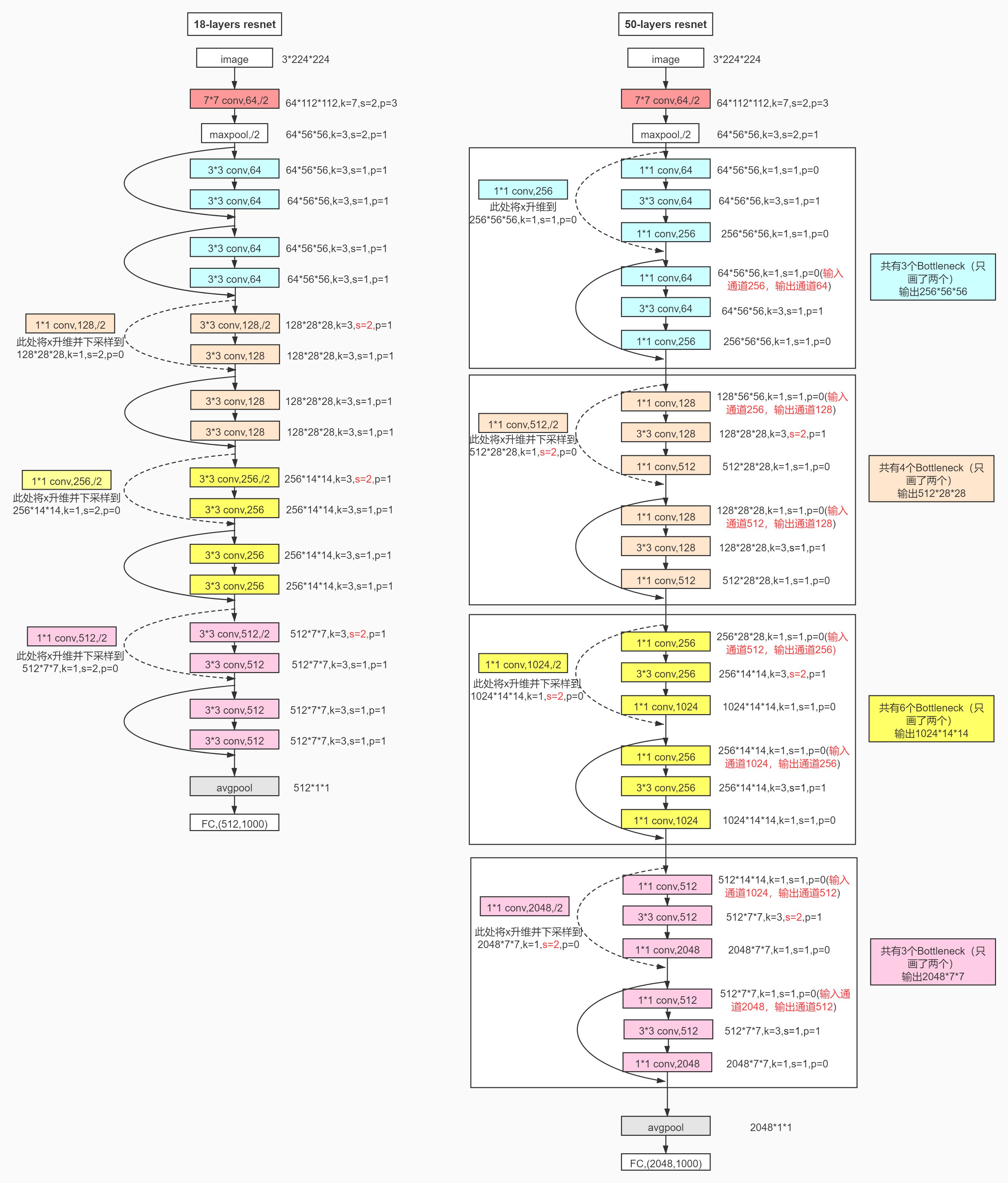

- ResNet18 model architecture diagram

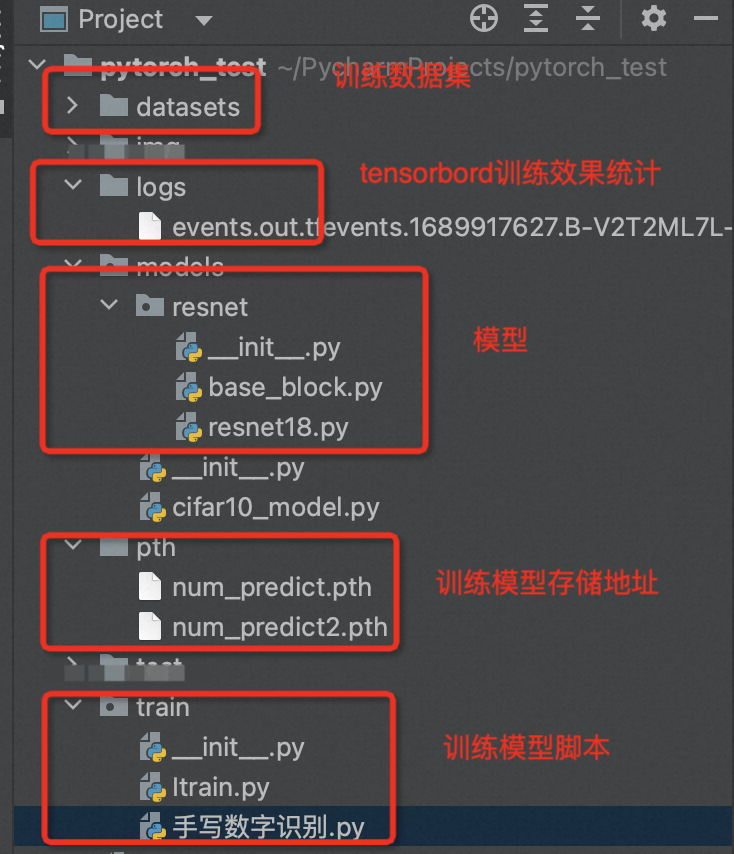
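You can check the spatial sizes in the diagram by hand. The usual output-size formula for a convolution or pooling layer is floor((size + 2·padding - kernel) / stride) + 1.

The plain-Python sketch below applies that formula. It assumes the hyperparameters used in the model code of this post: a 7×7 stride-2 stem convolution, a 3×3 stride-2 max-pool, and a stride-2 first convolution in layer2 to layer4. It also assumes a 224×224 input, which is the size the training transform resizes MNIST digits to. The function names are illustrative only.

```python
# Trace feature-map side lengths through the ResNet18 variant in this post.
# Assumes a square 224x224 input and the layer settings used in RestNEt18.


def conv_out(size, kernel, stride, padding):
    """Side length after a conv/pool layer: floor((size + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_resnet18(size=224):
    sizes = {"input": size}
    size = conv_out(size, kernel=7, stride=2, padding=3)  # conv1
    sizes["conv1"] = size
    size = conv_out(size, kernel=3, stride=2, padding=1)  # maxpool
    sizes["maxpool"] = size
    # Only the first 3x3 conv of each stage may have stride 2; every other
    # 3x3 conv uses stride 1 and padding 1, which keeps the side length.
    for name, stride in [("layer1", 1), ("layer2", 2), ("layer3", 2), ("layer4", 2)]:
        size = conv_out(size, kernel=3, stride=stride, padding=1)
        sizes[name] = size
    sizes["avg_pool"] = 1  # AdaptiveAvgPool2d((1, 1)) always outputs 1x1
    return sizes


if __name__ == "__main__":
    for name, side in trace_resnet18().items():
        print(f"{name}: {side}x{side}")
```

For a 224×224 input, the successive stages come out at 112, 56, 56, 28, 14, 7 and 1. In other words, layer4 hands a 512×7×7 feature map to the pooling layer.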

- Project structure

- Building the ResNet18 model

```python
# models/resnet/base_block.py
from torch import nn
from torch.nn.functional import relu


class BaseBlock(nn.Module):
    """Basic residual block: two 3x3 convs whose output is added to the
    unchanged input (identity shortcut)."""

    def __init__(self, in_channels, out_channels, stride):
        super(BaseBlock, self).__init__()
        self.conv1 = nn.Conv2d(in_channels, out_channels, kernel_size=3, stride=stride, padding=1)
        self.bn1 = nn.BatchNorm2d(out_channels)
        # The second conv always uses stride 1 so the output shape matches the shortcut
        self.conv2 = nn.Conv2d(out_channels, out_channels, kernel_size=3, stride=1, padding=1)
        self.bn2 = nn.BatchNorm2d(out_channels)

    def forward(self, x):
        out_put = self.conv1(x)
        out_put = relu(self.bn1(out_put))
        out_put = self.conv2(out_put)
        out_put = self.bn2(out_put)
        # Residual connection: add the block input x to the branch output
        out_put = relu(out_put + x)
        return out_put


class DownBlock(nn.Module):
    """Downsampling residual block: the first conv uses stride[0] (2 in this model),
    and a 1x1 conv + BN projects the input so it can be added to the branch output."""

    def __init__(self, in_channels, out_channels, stride):
        super(DownBlock, self).__init__()
        self.conv1 = nn.Conv2d(in_channels, out_channels, kernel_size=3, stride=stride[0], padding=1)
        self.bn1 = nn.BatchNorm2d(out_channels)
        self.conv2 = nn.Conv2d(out_channels, out_channels, kernel_size=3, stride=stride[1], padding=1)
        self.bn2 = nn.BatchNorm2d(out_channels)
        # Projection shortcut: matches the channels and spatial size of the main branch
        self.extra = nn.Sequential(
            nn.Conv2d(in_channels, out_channels, kernel_size=1, stride=stride[0], padding=0),
            nn.BatchNorm2d(out_channels)
        )

    def forward(self, x):
        extra_x = self.extra(x)
        out_put = self.conv1(x)
        out_put = relu(self.bn1(out_put))
        out_put = self.conv2(out_put)
        # The second conv is followed by the second BatchNorm
        out_put = self.bn2(out_put)
        out_put = relu(out_put + extra_x)
        return out_put
```
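Each block computes relu(F(x) + shortcut(x)). The identity shortcut in `BaseBlock` only works when the branch output has the same shape as x. When a stage changes the channel count or downsamples, `DownBlock` instead projects x with its 1×1 convolution (`extra`).

The plain-Python sketch below encodes that rule for the stage configuration used in `RestNEt18`. It also counts the weighted layers that give ResNet18 its name: the stem conv, 16 block convs and the final fc. By convention, the 1×1 shortcut convs are not counted. `STAGES`, `needs_projection`, `block_plan` and `weighted_depth` are hypothetical helpers for illustration.

```python
# Decide which residual block each stage starts with, and count the
# weighted layers behind the name "ResNet18". Plain Python, no PyTorch.

# (in_channels, out_channels, stride of the first block) for layer1..layer4,
# copied from the RestNEt18 definition in this post
STAGES = [(64, 64, 1), (64, 128, 2), (128, 256, 2), (256, 512, 2)]


def needs_projection(in_channels, out_channels, stride):
    """x can be added to F(x) directly only if its shape is unchanged;
    otherwise a 1x1 conv + BN shortcut (DownBlock.extra) must project it."""
    return stride != 1 or in_channels != out_channels


def block_plan(stages=STAGES, blocks_per_stage=2):
    plan = []
    for in_c, out_c, stride in stages:
        first = "DownBlock" if needs_projection(in_c, out_c, stride) else "BaseBlock"
        # Later blocks in a stage keep channels and size, so identity works
        plan.append([first] + ["BaseBlock"] * (blocks_per_stage - 1))
    return plan


def weighted_depth(stages=STAGES, blocks_per_stage=2, convs_per_block=2):
    # stem conv + two 3x3 convs per block + final fully connected layer
    return 1 + len(stages) * blocks_per_stage * convs_per_block + 1


if __name__ == "__main__":
    for i, blocks in enumerate(block_plan(), start=1):
        print(f"layer{i}: {blocks}")
    print("weighted layers:", weighted_depth())
```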

```python
# models/resnet/resnet18.py
from torch import nn
from torch.nn.functional import relu

from .base_block import BaseBlock, DownBlock


class RestNEt18(nn.Module):
    def __init__(self, in_channels):
        super(RestNEt18, self).__init__()
        # Stem: 7x7 conv with stride 2, then 3x3 max-pool with stride 2
        self.conv1 = nn.Conv2d(in_channels, 64, kernel_size=7, stride=2, padding=3)
        self.bn1 = nn.BatchNorm2d(64)
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        # Four stages of two residual blocks; stages 2 to 4 halve the size and double the channels
        self.layer1 = nn.Sequential(BaseBlock(64, 64, 1), BaseBlock(64, 64, 1))
        self.layer2 = nn.Sequential(DownBlock(64, 128, [2, 1]), BaseBlock(128, 128, 1))
        self.layer3 = nn.Sequential(DownBlock(128, 256, [2, 1]), BaseBlock(256, 256, 1))
        self.layer4 = nn.Sequential(DownBlock(256, 512, [2, 1]), BaseBlock(512, 512, 1))
        # 2D adaptive average pooling: from the input size and the requested output size,
        # it works out which input region each output element covers, then averages over that region
        self.agv_pool = nn.AdaptiveAvgPool2d((1, 1))
        self.fc = nn.Linear(512, 10)

    def forward(self, x):
        # Shape comments assume an (N, 1, 224, 224) input
        out_put = self.conv1(x)                          # (N, 64, 112, 112)
        out_put = relu(self.bn1(out_put))
        out_put = self.maxpool(out_put)                  # (N, 64, 56, 56)
        out_put = self.layer1(out_put)                   # (N, 64, 56, 56)
        out_put = self.layer2(out_put)                   # (N, 128, 28, 28)
        out_put = self.layer3(out_put)                   # (N, 256, 14, 14)
        out_put = self.layer4(out_put)                   # (N, 512, 7, 7)
        out_put = self.agv_pool(out_put)                 # (N, 512, 1, 1)
        out_put = out_put.reshape(out_put.shape[0], -1)  # (N, 512)
        out_put = self.fc(out_put)                       # (N, 10) class scores
        return out_put
```
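The comment on `nn.AdaptiveAvgPool2d` can be made concrete with the window rule that, as far as we know, PyTorch's adaptive average pooling uses. Output cell i covers input indices from floor(i·in/out) up to ceil((i+1)·in/out), exclusive. Neighbouring windows may therefore overlap when the sizes do not divide evenly. With an output size of (1, 1), the single window is the whole map, so the layer reduces to global average pooling. That is how the 512×7×7 map becomes a 512-dimensional vector for `fc`.

The sketch below works on one channel stored as a list of lists. `adaptive_avg_pool2d` and `_window` are illustrative names, not PyTorch functions.

```python
# Plain-Python sketch of 2D adaptive average pooling on a single channel.
# Window rule (assumed to match PyTorch): output cell i spans input indices
# floor(i * in / out) up to ceil((i + 1) * in / out), exclusive.


def _window(i, in_size, out_size):
    start = (i * in_size) // out_size
    end = ((i + 1) * in_size + out_size - 1) // out_size  # integer ceil
    return start, end


def adaptive_avg_pool2d(grid, out_h, out_w):
    in_h, in_w = len(grid), len(grid[0])
    out = []
    for i in range(out_h):
        h0, h1 = _window(i, in_h, out_h)
        row = []
        for j in range(out_w):
            w0, w1 = _window(j, in_w, out_w)
            cells = [grid[r][c] for r in range(h0, h1) for c in range(w0, w1)]
            row.append(sum(cells) / len(cells))  # average over the window
        out.append(row)
    return out


if __name__ == "__main__":
    feature_map = [[r * 7 + c for c in range(7)] for r in range(7)]  # one 7x7 channel
    print(adaptive_avg_pool2d(feature_map, 1, 1))  # [[24.0]], the global average
    print(adaptive_avg_pool2d([[0, 1, 2, 3, 4]], 1, 3))  # [[0.5, 2.0, 3.5]], windows overlap
```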

- Model training script

```python
# Itrain.py
from abc import ABCMeta, abstractmethod


class TrainBase(metaclass=ABCMeta):
    @abstractmethod
    def _get_data_loader(self, is_train=True):
        """
        Get the training or test data
        :param is_train: True for training data, False for test data
        :return: (feature, target)
        """
        pass

    @abstractmethod
    def _train(self, my_model, loss_fn, optimizer, train_data):
        """
        Train the model
        :param my_model: model
        :param loss_fn: loss function
        :param optimizer: optimizer
        :param train_data: training data
        :return:
        """
        pass

    @abstractmethod
    def _test(self, my_model, test_data):
        """
        Evaluate the model
        :param my_model: model
        :param test_data: test data
        :return:
        """
        pass

    @abstractmethod
    def predict(self, img_path):
        """
        Predict with the trained model
        :param img_path: path to the image file
        :return: predicted value
        """
        pass

    def run(self):
        """
        Run the full training procedure
        :return:
        """
        pass
```
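`TrainBase` only declares the steps. `NumPredict` below fills them in and overrides `run`. A common alternative is to make `run` a template method in the base class itself.

The sketch below is a hypothetical variant of that idea; `TrainTemplate`, `Incomplete` and `FakeTrainer` are illustrative names, and `predict` is omitted for brevity. It shows two things:

- `abc` refuses to instantiate a subclass that leaves an abstract method unimplemented.
- The base class, not the subclass, fixes the order in which the steps are called.

```python
from abc import ABC, abstractmethod


class TrainTemplate(ABC):
    """Hypothetical variant of TrainBase where run() is a template method."""

    @abstractmethod
    def _get_data_loader(self, is_train=True): ...

    @abstractmethod
    def _train(self, my_model, loss_fn, optimizer, train_data): ...

    @abstractmethod
    def _test(self, my_model, test_data): ...

    def run(self, my_model=None, loss_fn=None, optimizer=None, epochs=1):
        # The base class owns the control flow; subclasses only supply the steps
        train_data = self._get_data_loader(is_train=True)
        test_data = self._get_data_loader(is_train=False)
        results = []
        for _ in range(epochs):
            self._train(my_model, loss_fn, optimizer, train_data)
            results.append(self._test(my_model, test_data))
        return results


class Incomplete(TrainTemplate):
    # _train and _test are still abstract, so this class cannot be instantiated
    def _get_data_loader(self, is_train=True):
        return []


class FakeTrainer(TrainTemplate):
    """Toy subclass that records calls instead of training a network."""

    def __init__(self):
        self.calls = []

    def _get_data_loader(self, is_train=True):
        self.calls.append("train_data" if is_train else "test_data")
        return [1, 2, 3] if is_train else [4, 5]

    def _train(self, my_model, loss_fn, optimizer, train_data):
        self.calls.append(f"train on {len(train_data)}")

    def _test(self, my_model, test_data):
        self.calls.append(f"test on {len(test_data)}")
        return len(test_data)


if __name__ == "__main__":
    trainer = FakeTrainer()
    print(trainer.run(epochs=2))
    print(trainer.calls)
```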

```python
import os.path

import torch
from torch import nn, optim
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter
from torchvision import datasets, transforms
from PIL import Image

from models.resnet.resnet18 import RestNEt18
from Itrain import TrainBase


class NumPredict(TrainBase):
    def __init__(self, model_path, epoch=100, lr=0.001):
        # Hyperparameters
        self.epoch = epoch
        self.lr = lr
        self.model_path = model_path
        # Training state
        self.current_train_step = 0
        self.test_count = 0
        self.writer = None
        self.transforms = transforms.Compose([
            transforms.Resize((224, 224)),
            transforms.ToTensor(),
            # MNIST mean and std, given as one-element sequences (one value per channel)
            transforms.Normalize(mean=(0.1307,), std=(0.3081,))
        ])

    def _get_data_loader(self, is_train=True):
        # Returns the MNIST Dataset; run() wraps it in a DataLoader
        data = datasets.MNIST(root="../datasets", train=is_train, transform=self.transforms, download=True)
        return data

    def _train(self, my_model, loss_fn, optimizer, train_data):
        for imgs, target in train_data:
            # Forward pass
            predict = my_model(imgs)
            # Compute the loss
            loss = loss_fn(predict, target)
            # Reset gradients from the previous step
            optimizer.zero_grad()
            # Backpropagate
            loss.backward()
            # Update the weights
            optimizer.step()
            # Log the loss every 10 steps
            self.current_train_step += 1
            if self.current_train_step % 10 == 0:
                print("Step %s, loss: %s" % (self.current_train_step, loss.item()))
                self.writer.add_scalar("train_loss", loss.item(), global_step=self.current_train_step)

    def _test(self, my_model, test_data):
        total_acc = 0
        with torch.no_grad():
            for imgs, target in test_data:
                predict = my_model(imgs)
                # Number of correct predictions in this batch
                acc = (predict.argmax(1) == target).sum().item()
                print("Batch accuracy: %s" % (acc / imgs.shape[0]))
                total_acc += acc
        # Accuracy over the whole test set
        test_acc = total_acc / self.test_count
        print("Test accuracy: %s" % test_acc)
        self.writer.add_scalar("test_acc", test_acc, global_step=self.current_train_step)
        return test_acc

    def run(self):
        # Prepare the data
        train_data = self._get_data_loader()
        train_data = DataLoader(train_data, batch_size=64, shuffle=True)
        test_data = self._get_data_loader(is_train=False)
        self.test_count = len(test_data)  # number of test samples, not batches
        test_data = DataLoader(test_data, batch_size=1000, shuffle=False)
        # Build the network
        my_model = RestNEt18(in_channels=1)
        # Resume training from a saved checkpoint if one exists
        if os.path.exists(self.model_path):
            my_model.load_state_dict(torch.load(self.model_path))
        # Loss function
        loss_fn = nn.CrossEntropyLoss()
        # Optimizer
        optimizer = optim.SGD(my_model.parameters(), lr=self.lr)
        self.writer = SummaryWriter(log_dir="../logs")
        for item in range(self.epoch):
            print("----- Epoch %s -----" % item)
            my_model.train()
            self._train(my_model, loss_fn, optimizer, train_data)
            my_model.eval()
            self._test(my_model, test_data)
            # Save the weights after every epoch
            torch.save(my_model.state_dict(), self.model_path)
        self.writer.close()

    def predict(self, img_path):
        """
        Predict the digit in an image with the trained model
        :param img_path: path to the image file
        :return: predicted digit
        """
        img = Image.open(img_path)
        # PNG images may have 4 channels (RGBA); convert to 3-channel RGB first
        img = img.convert("RGB")
        # Then convert to grayscale
        img = img.convert("L")
        # Binarize
        img = img.point(lambda x: 255 if x >= 100 else 0)
        # MNIST digits are white on a black background, while the input image is
        # dark on white, so invert the pixel values
        img = img.point(lambda x: 255 - x)
        # Resize and convert to a normalized tensor of shape (1, 224, 224)
        img = self.transforms(img)
        # Load the trained weights
        my_model = RestNEt18(in_channels=1)
        my_model.load_state_dict(torch.load(self.model_path))
        my_model.eval()
        # Add a batch dimension: (1, 1, 224, 224)
        img = img.unsqueeze(0)
        with torch.no_grad():
            predict = my_model(img)
        print(predict)
        predict = predict.argmax(1).item()
        print(predict)
        return predict


if __name__ == "__main__":
    # Train the model
    # pth_path = "../pth/num_predict2.pth"
    # num_predict = NumPredict(pth_path, epoch=1)
    # num_predict.run()

    # Predict
    img_path = "../img/9.png"
    pth_path = "../pth/num_predict2.pth"
    num_predict = NumPredict(pth_path)
    num_predict.predict(img_path)
```
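The preprocessing in `predict` and the accuracy bookkeeping in `_test` can both be checked without a network. The plain-Python sketch below mirrors them on a flat list of 0 to 255 grayscale values, in four steps:

1. Binarize at threshold 100.
2. Invert, so the digit is white on black like MNIST.
3. Scale to [0, 1], as `ToTensor` does for 8-bit images.
4. Normalize with the MNIST mean and std.

The sketch skips the 224×224 resize. `accuracy` counts argmax hits over all samples and divides by the sample count, which is what `total_acc / self.test_count` computes. Both function names are hypothetical.

```python
# Plain-Python mirror of NumPredict.predict preprocessing and _test accuracy.
# Works on a flat list of 8-bit grayscale pixels; the resize step is skipped.

MNIST_MEAN, MNIST_STD = 0.1307, 0.3081


def preprocess_pixels(gray, threshold=100):
    """Dark-ink-on-white pixels -> normalized MNIST-style values."""
    binary = [255 if p >= threshold else 0 for p in gray]  # img.point binarization
    inverted = [255 - p for p in binary]  # white digit on black background
    scaled = [p / 255 for p in inverted]  # ToTensor maps 0..255 to 0.0..1.0
    return [(v - MNIST_MEAN) / MNIST_STD for v in scaled]  # Normalize


def accuracy(logits, targets):
    """Fraction of samples whose argmax over class scores equals the label."""
    correct = 0
    for scores, label in zip(logits, targets):
        predicted = max(range(len(scores)), key=scores.__getitem__)  # argmax
        correct += int(predicted == label)
    return correct / len(targets)


if __name__ == "__main__":
    print(preprocess_pixels([255, 30]))  # a background pixel and an ink pixel
    print(accuracy([[0.1, 0.9], [0.8, 0.2], [0.3, 0.7]], [1, 0, 0]))
```

The ink pixel (30) ends up as the bright value and the white background as the dark one. This is the polarity the network saw during training.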
