Hadoop学习---Eclipse中hadoop环境的搭建

在eclipse中建立hadoop环境的支持

1.需要下载安装eclipse

2.需要hadoop-eclipse-plugin-2.6.0.jar插件,插件的终极解决方案是https://github.com/winghc/hadoop2x-eclipse-plugin下载并编译。也是可用提供好的插件。

3.复制编译好的hadoop-eclipse-plugin-2.6.0.jar复制到eclipse插件目录(plugins目录)下,如图所示

重启eclipse

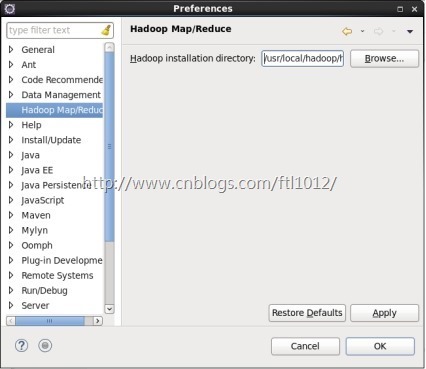

4.在eclipse中配置hadoop安装目录

windows ->preference -> hadoop Map/Reduce -> Hadoop installation directory在此处指定hadoop的安装目录

点击Apply,点击OK确定

5.配置Map Reduce视图

window -> Open Perspective ->other-> Map/Reduce -> 点击“OK”

window -> show view -> other -> Map/Reduce Locations -> 点击“OK”

6.在“Map/Reduce Location”Tab页点击图标<大象+>或者在空白的地方右键,选择“New Hadoop location...”,弹出对话框“New hadoop location...”,进行相应的配置

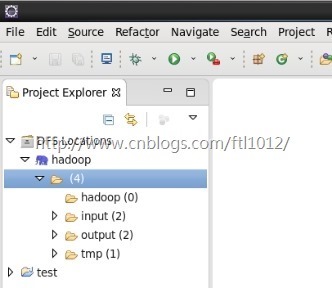

设置Location name为任意都可以,Host为hadoop集群中主节点所在主机的ip地址或主机名,这里MR Master的Port需mapred-site.xml配置文件一致为10020,DFS Master的Port需和core-site.xml配置文件的一致为9000,User name为root(安装hadoop集群的用户名)。之后点击finish。在eclipse的DFS Location目录下出现刚刚创建的Location name(这里为hadoop),eclipse就与hadoop集群连接成功,如图所示。

7.打开Project Explorers查看HDFS文件系统,如图所示

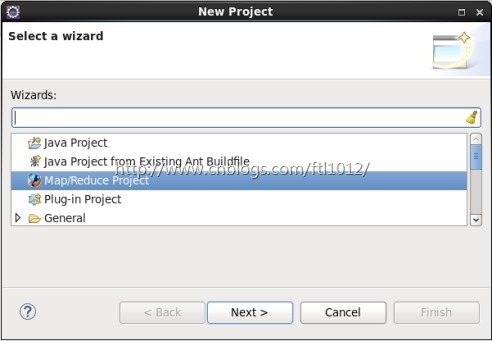

8.新建Map/Reduce任务

需要先启动Hadoop服务

File -> New -> project -> Map Reduce Project ->Next

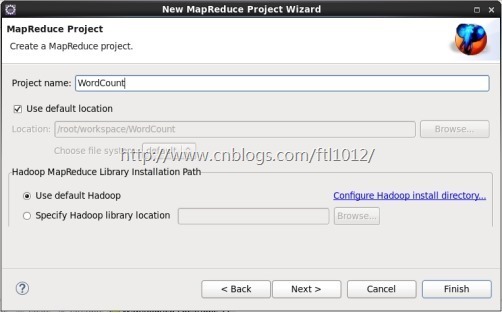

填写项目名称

编写WordCount类:

package test;

import java.io.IOException;

import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.util.GenericOptionsParser;

public class WordCount {

public static class MyMap extends Mapper<Object, Text, Text, IntWritable> {

private final static IntWritable one = new IntWritable(1);

private Text word = new Text();

@Override

public void map(Object key, Text value, Context context)

throws IOException, InterruptedException {

StringTokenizer itr = new StringTokenizer(value.toString());

while (itr.hasMoreTokens()) {

word.set(itr.nextToken());

context.write(word, one);

}

}

}

public static class MyReduce extends

Reducer<Text, IntWritable, Text, IntWritable> {

private IntWritable result = new IntWritable();

@Override

public void reduce(Text key, Iterable<IntWritable> values,

Context context)

throws IOException, InterruptedException {

int sum = 0;

for (IntWritable val : values) {

sum += val.get();

}

result.set(sum);

context.write(key, result);

}

}

public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

String[] otherArgs = new GenericOptionsParser(conf, args)

if (otherArgs.length != 2) {

System.err.println("Usage: wordcount <in> <out>");

System.exit(2);

}

Job job = new Job(conf, "word count");

job.setJarByClass(WordCount.class);

job.setMapperClass(MyMap.class);

job.setReducerClass(MyReduce.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

FileInputFormat.addInputPath(job, new Path(otherArgs[0]));

FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));

System.exit(job.waitForCompletion(true) ? 0 : 1);

}

}

运行WordCount程序:

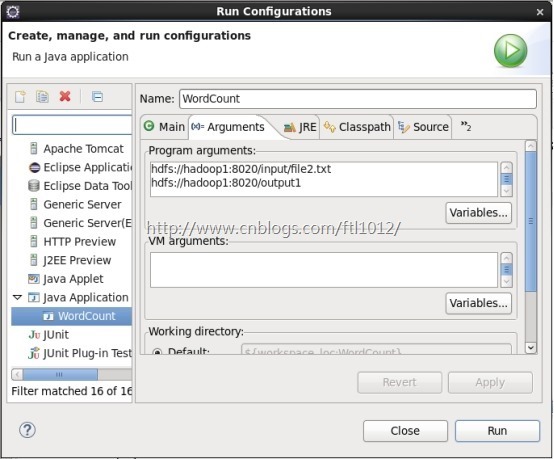

右键单击Run As -> Run Configurations

选择Java Applications ->WordCount(要运行的类)->Arguments

在Program arguments中填写输入输出路径,点击Run

-------------------------------------------

个性签名: 所有的事情到最後都是好的,如果不好,那說明事情還沒有到最後~

本文版权归作者【小a玖拾柒】和【博客园】共有,欢迎转载,但未经作者同意必须保留此段声明,且在文章页面明显位置给出原文连接,否则保留追究法律责任的权利!

浙公网安备 33010602011771号

浙公网安备 33010602011771号