HBase Pseudo-Distributed Deployment

1. Basic Configuration

# Set the hostname (run on each host with its own name: master, node1, or node2)

hostnamectl set-hostname master

# Configure /etc/hosts

cat <<EOF >> /etc/hosts

192.168.2.124 master

192.168.2.125 node1

192.168.2.126 node2

EOF
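Because the heredoc above appends to /etc/hosts, running it twice leaves duplicate entries. A minimal sketch of an append-if-missing alternative follows; `add_host_entry` is a hypothetical helper name, not part of any tool, and the file path is passed in so it can be tried on a scratch file first.

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP NAME (hypothetical helper)
# Appends "IP NAME" to FILE unless a line already maps that IP to that name.
add_host_entry() {
    local file=$1 ip=$2 name=$3
    # awk exits 0 only when a line starts with IP and lists NAME as a host.
    if ! awk -v ip="$ip" -v name="$name" \
        '$1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
         END { exit !found }' "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# On the target host (as root), for example:
# add_host_entry /etc/hosts 192.168.2.124 master
# add_host_entry /etc/hosts 192.168.2.125 node1
# add_host_entry /etc/hosts 192.168.2.126 node2
```

Calling the helper repeatedly with the same arguments leaves a single line in the file.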

# Install the JDK

yum install -y java-1.8.0-openjdk-devel.x86_64

# Configure the Java path

cat <<EOF | sudo tee /etc/profile.d/hbase-env.sh

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.222.b10-1.el7_7.x86_64

export CLASSPATH=.:\$JAVA_HOME/lib/dt.jar:\$JAVA_HOME/lib/tools.jar

export PATH=\$PATH:\$JAVA_HOME/bin

EOF

source /etc/profile.d/hbase-env.sh
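The JAVA_HOME value above hard-codes one specific OpenJDK build, and the directory name changes whenever yum installs a different build. As a hedged sketch, the path can instead be derived from the resolved location of `javac`; `java_home_from_javac` is a hypothetical helper name introduced only for illustration.

```shell
#!/usr/bin/env bash
# java_home_from_javac PATH_TO_JAVAC (hypothetical helper)
# Strips the trailing /bin/javac from an already-resolved javac path.
java_home_from_javac() {
    local javac_path=$1
    printf '%s\n' "${javac_path%/bin/javac}"
}

# On the target host (commented out so the sketch has no side effects):
# JAVA_HOME=$(java_home_from_javac "$(readlink -f "$(command -v javac)")")
# echo "$JAVA_HOME"
```

`readlink -f` follows the alternatives symlinks to the real JDK directory, so the result should match the directory under /usr/lib/jvm that the package actually installed.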

# Create a user

adduser hadoop

echo "123456" | passwd --stdin hadoop

usermod -aG wheel hadoop

su - hadoop

# SSH key-based authentication

ssh-keygen -t rsa -P ""

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

chmod 0600 ~/.ssh/authorized_keys
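The Hadoop start scripts log in over SSH, so it can help to confirm the key setup before going further. The sketch below checks the conventional permissions (700 on the directory, 600 on authorized_keys); `ssh_perms_ok` is a hypothetical helper name, and it assumes GNU `stat` as found on CentOS.

```shell
#!/usr/bin/env bash
# ssh_perms_ok DIR (hypothetical helper)
# Succeeds when DIR is mode 700 and DIR/authorized_keys is mode 600.
ssh_perms_ok() {
    local dir=$1
    [ "$(stat -c %a "$dir")" = "700" ] &&
        [ "$(stat -c %a "$dir/authorized_keys")" = "600" ]
}

# On the target host, as the hadoop user:
# ssh_perms_ok ~/.ssh && ssh -o BatchMode=yes localhost true && echo "passwordless SSH works"
```

With `BatchMode=yes`, ssh fails instead of prompting, so if the host key for localhost has not been accepted yet, connect once interactively first.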

2. Hadoop Deployment

# Download Hadoop

wget http://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/hadoop-3.1.2/hadoop-3.1.2.tar.gz

tar -xzvf hadoop-3.1.2.tar.gz

rm hadoop-3.1.2.tar.gz

sudo mv hadoop-3.1.2/ /usr/local/

# Set environment variables

cat <<EOF | sudo tee /etc/profile.d/hbase-env.sh

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.222.b10-1.el7_7.x86_64

export CLASSPATH=.:\$JAVA_HOME/lib/dt.jar:\$JAVA_HOME/lib/tools.jar

export HADOOP_HOME=/usr/local/hadoop-3.1.2

export HADOOP_HDFS_HOME=\$HADOOP_HOME

export HADOOP_MAPRED_HOME=\$HADOOP_HOME

export YARN_HOME=\$HADOOP_HOME

export HADOOP_COMMON_HOME=\$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=\$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=\$HADOOP_HOME/lib:\$HADOOP_COMMON_LIB_NATIVE_DIR"

export PATH=\$PATH:\$JAVA_HOME/bin:\$HADOOP_HOME/bin:\$HADOOP_HOME/sbin

EOF

source /etc/profile.d/hbase-env.sh
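Whether a variable inside the heredoc is expanded when the file is written or later, when the file is sourced, depends on how it is escaped. With an unquoted `EOF`, every `$` that should survive into the file needs a backslash. Quoting the delimiter (`'EOF'`) writes the text literally and removes the need for backslashes. The sketch below shows the difference on a scratch file.

```shell
#!/usr/bin/env bash
out=$(mktemp)

# Unquoted delimiter: $HOME is expanded while the file is being written.
cat <<EOF > "$out"
expanded=$HOME
EOF
unquoted_result=$(cat "$out")

# Quoted delimiter: the text is written literally, no backslashes needed.
cat <<'EOF' > "$out"
literal=$HOME
EOF
quoted_result=$(cat "$out")

rm -f "$out"
printf '%s\n%s\n' "$unquoted_result" "$quoted_result"
```

For a profile script, the literal form is usually what is wanted, because values such as `$HADOOP_HOME` should be resolved each time the script is sourced rather than frozen at write time.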

# Check the version

hadoop version

sudo mkdir -p /hadoop/hdfs/{namenode,datanode}

# Edit the configuration files

vi /usr/local/hadoop-3.1.2/etc/hadoop/hadoop-env.sh

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.222.b10-1.el7_7.x86_64
export HADOOP_PID_DIR=/hadoop/pids

vi /usr/local/hadoop-3.1.2/etc/hadoop/core-site.xml

<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://localhost:9000</value>
    <description>The default file system URI</description>
  </property>
</configuration>

sudo mkdir -p /hadoop/hdfs/{namenode,datanode}

sudo chown -R hadoop:hadoop /hadoop

vi /usr/local/hadoop-3.1.2/etc/hadoop/hdfs-site.xml

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.name.dir</name>
    <value>file:///hadoop/hdfs/namenode</value>
  </property>
  <property>
    <name>dfs.data.dir</name>
    <value>file:///hadoop/hdfs/datanode</value>
  </property>
</configuration>

vi /usr/local/hadoop-3.1.2/etc/hadoop/mapred-site.xml

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>

vi /usr/local/hadoop-3.1.2/etc/hadoop/yarn-site.xml

<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>

# Format the NameNode

hdfs namenode -format
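Formatting is a one-time step: re-running it on an existing NameNode directory discards the file system metadata and generates a new cluster ID, which existing DataNodes will then reject. A minimal guard, assuming the storage path configured above, is sketched below; `namenode_is_formatted` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# namenode_is_formatted DIR (hypothetical helper)
# A formatted NameNode storage directory contains current/VERSION.
namenode_is_formatted() {
    [ -f "$1/current/VERSION" ]
}

# On the target host, format only when no metadata exists yet:
# namenode_is_formatted /hadoop/hdfs/namenode || hdfs namenode -format
```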

# Start and stop the Hadoop services

start-dfs.sh

start-yarn.sh

stop-dfs.sh

stop-yarn.sh
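After start-dfs.sh and start-yarn.sh, the JDK's `jps` tool lists the running Java processes, which gives a quick check that every daemon came up. The sketch below reads `jps` output on stdin and reports which expected daemons are absent; `missing_daemons` is a hypothetical helper name, and the daemon list in the usage comment is the usual set for a single-node HDFS plus YARN setup.

```shell
#!/usr/bin/env bash
# missing_daemons NAME... (hypothetical helper)
# Reads `jps` output ("PID ClassName" per line) on stdin and prints
# each expected daemon name that does not appear in it.
missing_daemons() {
    local listing name
    listing=$(cat)
    for name in "$@"; do
        printf '%s\n' "$listing" |
            awk -v n="$name" '$2 == n { found = 1 } END { exit !found }' ||
            printf '%s\n' "$name"
    done
}

# On the target host, after the start scripts:
# jps | missing_daemons NameNode DataNode SecondaryNameNode ResourceManager NodeManager
```

Empty output means every listed daemon is running. Any name printed points to the daemon whose log under the Hadoop logs directory is worth reading.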

# Check the NameNode status

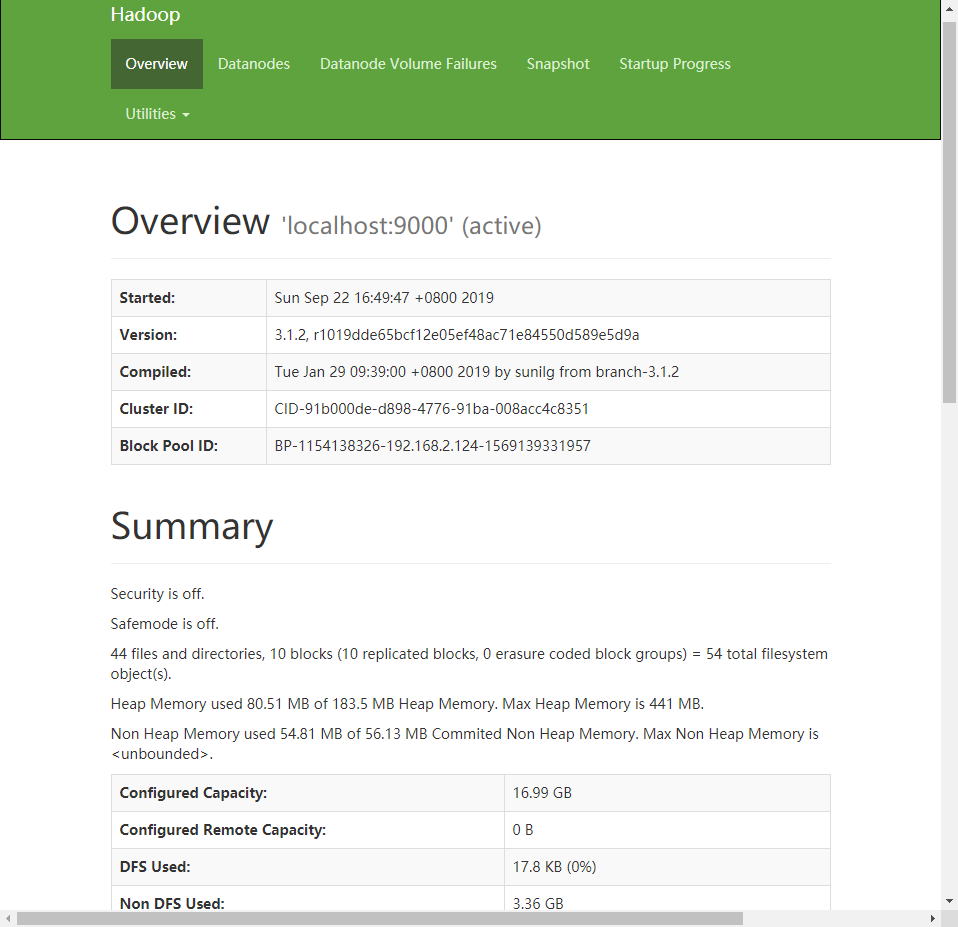

http://192.168.2.124:9870

# Check the ResourceManager status

http://192.168.2.124:8088

3. HBase Deployment

# Download HBase

wget https://mirrors.tuna.tsinghua.edu.cn/apache/hbase/stable/hbase-1.4.10-bin.tar.gz

tar -zxf hbase-1.4.10-bin.tar.gz

sudo mv hbase-1.4.10 /usr/local/

# Set environment variables

cat <<EOF | sudo tee /etc/profile.d/hbase-env.sh

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.222.b10-1.el7_7.x86_64

export CLASSPATH=.:\$JAVA_HOME/lib/dt.jar:\$JAVA_HOME/lib/tools.jar

export HADOOP_HOME=/usr/local/hadoop-3.1.2

export HADOOP_HDFS_HOME=\$HADOOP_HOME

export HADOOP_MAPRED_HOME=\$HADOOP_HOME

export YARN_HOME=\$HADOOP_HOME

export HADOOP_COMMON_HOME=\$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=\$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=\$HADOOP_HOME/lib:\$HADOOP_COMMON_LIB_NATIVE_DIR"

export ZOOKEEPER_HOME=/usr/local/zookeeper-3.5.5

export HBASE_HOME=/usr/local/hbase-1.4.10

export PATH=\$PATH:\$JAVA_HOME/bin:\$HADOOP_HOME/bin:\$HADOOP_HOME/sbin:\$ZOOKEEPER_HOME/bin:\$HBASE_HOME/bin

EOF

source /etc/profile.d/hbase-env.sh

# Edit the HBase environment file

vi /usr/local/hbase-1.4.10/conf/hbase-env.sh

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.222.b10-1.el7_7.x86_64

#export HBASE_MANAGES_ZK=false

export HBASE_PID_DIR=/hadoop/pids

sudo mkdir -p /hadoop/{zookeeper,pids}

sudo chown -R hadoop:hadoop /hadoop

# Edit the HBase configuration file

vi /usr/local/hbase-1.4.10/conf/hbase-site.xml

<configuration>
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://localhost:9000/hbase</value>
  </property>
  <property>
    <name>hbase.zookeeper.property.dataDir</name>
    <value>/hadoop/zookeeper</value>
  </property>
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
  </property>
</configuration>

# Use the HBase shell

hbase shell

status
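Beyond `status`, a short write-and-read round trip confirms that HBase can actually store data in HDFS. The sketch below only prints the HBase shell commands; `hbase_smoke_commands` is a hypothetical helper name, the table name and column family `cf` are arbitrary, and piping into `hbase shell -n` assumes the shell's non-interactive mode is available in the installed version.

```shell
#!/usr/bin/env bash
# hbase_smoke_commands TABLE (hypothetical helper)
# Prints HBase shell commands that create a table, write one cell,
# read it back, and drop the table again.
hbase_smoke_commands() {
    local table=$1
    cat <<EOF
create '$table', 'cf'
put '$table', 'row1', 'cf:a', 'value1'
scan '$table'
disable '$table'
drop '$table'
EOF
}

# On the target host, with HDFS and HBase running:
# hbase_smoke_commands smoke_test | hbase shell -n
```

The same commands can also be typed one by one in the interactive shell. A table must be disabled before it can be dropped, which is why `disable` precedes `drop`.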

# Start/stop the HBase services (start-all.sh and stop-all.sh are Hadoop's own scripts for HDFS and YARN)

start-all.sh

start-hbase.sh

stop-hbase.sh

stop-all.sh

4. Final Result
