K8s Study Notes

1. Master node configuration

Add host name resolution

vi /etc/hosts

192.168.2.121 master

192.168.2.122 node1

192.168.2.123 node2
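The same three entries have to be added on every node, so a small helper that appends a line only when it is missing can be handy. This is a minimal sketch: the function name add_host is hypothetical, and it assumes the entry is written as exactly "IP NAME".

```shell
# add_host FILE IP NAME: append "IP NAME" to FILE unless that exact line exists.
add_host() {
  grep -qxF "$2 $3" "$1" 2>/dev/null || printf '%s %s\n' "$2" "$3" >> "$1"
}

# Usage on a node (as root):
#   add_host /etc/hosts 192.168.2.121 master
#   add_host /etc/hosts 192.168.2.122 node1
#   add_host /etc/hosts 192.168.2.123 node2
```

Running it twice leaves the file unchanged, which makes it safe to reuse when a node is set up again.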

Stop the firewall

systemctl stop firewalld

systemctl disable firewalld

Configure the yum repositories

cd /etc/yum.repos.d/

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

vi /etc/yum.repos.d/kubernetes.repo

[Kubernetes]

name=Kubernetes Repo

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

enabled=1

wget https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

rpm --import yum-key.gpg

Use the Aliyun registry mirror (image accelerator)

mkdir -p /etc/docker

tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://wgcscytr.mirror.aliyuncs.com"]
}
EOF

systemctl daemon-reload

Install the packages

yum install docker-ce kubelet kubeadm kubectl -y

------------------------------------------------------------

Pull images through a proxy

vi /usr/lib/systemd/system/docker.service

[Service]

Environment="HTTPS_PROXY=http://www.ik8s.io:10080"

Environment="NO_PROXY=127.0.0.0/8,172.20.0.0/16"

systemctl daemon-reload

Ignore the swap error, or disable swap with swapoff -a

vi /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--fail-swap-on=false"

-------------------------------------------------------------

Disable swap

swapoff -a
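swapoff -a only lasts until the next reboot. One way to keep swap off is to comment out the swap entries in /etc/fstab. The following is a sketch under that assumption; the function name disable_swap_in_fstab is hypothetical, and it is worth backing up the file before running it on a real node.

```shell
# disable_swap_in_fstab FILE: comment out every active swap entry in FILE.
# Lines that already start with '#' are left alone.
disable_swap_in_fstab() {
  sed -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1" > "$1.tmp" &&
    mv "$1.tmp" "$1"
}

# Usage on a node (as root), after taking a backup:
#   cp /etc/fstab /etc/fstab.bak
#   disable_swap_in_fstab /etc/fstab
```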

--------------------------------------------------------------

Start Docker

systemctl start docker

systemctl enable docker

docker info

Enable bridge-nf-call-iptables

echo "1" > /proc/sys/net/bridge/bridge-nf-call-iptables

echo "1" > /proc/sys/net/bridge/bridge-nf-call-ip6tables
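Values written to /proc/sys are lost on reboot. A common way to make them persistent is a file under /etc/sysctl.d. The sketch below only writes that file; the file name k8s.conf and the function name write_bridge_sysctl are assumptions, not something the notes prescribe.

```shell
# write_bridge_sysctl FILE: write the two bridge netfilter settings to FILE.
write_bridge_sysctl() {
  printf '%s\n' \
    'net.bridge.bridge-nf-call-iptables = 1' \
    'net.bridge.bridge-nf-call-ip6tables = 1' > "$1"
}

# Usage on a node (as root), assuming the br_netfilter module is available:
#   modprobe br_netfilter
#   write_bridge_sysctl /etc/sysctl.d/k8s.conf
#   sysctl --system
```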

Start kubelet

systemctl start kubelet.service

systemctl enable kubelet.service

Initialize the cluster with kubeadm

kubeadm init --kubernetes-version=v1.15.1 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --ignore-preflight-errors=Swap

List the images that need to be pulled

kubeadm config images list

Pull the images manually

docker pull mirrorgooglecontainers/kube-apiserver:v1.15.1

docker pull mirrorgooglecontainers/kube-controller-manager:v1.15.1

docker pull mirrorgooglecontainers/kube-scheduler:v1.15.1

docker pull mirrorgooglecontainers/kube-proxy:v1.15.1

docker pull mirrorgooglecontainers/pause:3.1

docker pull mirrorgooglecontainers/etcd:3.3.10

docker pull coredns/coredns:1.3.1

docker pull jmgao1983/flannel

Retag the images

docker tag docker.io/mirrorgooglecontainers/kube-apiserver:v1.15.1 k8s.gcr.io/kube-apiserver:v1.15.1

docker tag docker.io/mirrorgooglecontainers/kube-controller-manager:v1.15.1 k8s.gcr.io/kube-controller-manager:v1.15.1

docker tag docker.io/mirrorgooglecontainers/kube-scheduler:v1.15.1 k8s.gcr.io/kube-scheduler:v1.15.1

docker tag docker.io/mirrorgooglecontainers/kube-proxy:v1.15.1 k8s.gcr.io/kube-proxy:v1.15.1

docker tag docker.io/mirrorgooglecontainers/pause:3.1 k8s.gcr.io/pause:3.1

docker tag docker.io/mirrorgooglecontainers/etcd:3.3.10 k8s.gcr.io/etcd:3.3.10

docker tag docker.io/coredns/coredns:1.3.1 k8s.gcr.io/coredns:1.3.1

docker tag jmgao1983/flannel:latest quay.io/coreos/flannel:v0.11.0-amd64

Remove the redundant images

docker rmi mirrorgooglecontainers/kube-apiserver:v1.15.1

docker rmi mirrorgooglecontainers/kube-controller-manager:v1.15.1

docker rmi mirrorgooglecontainers/kube-scheduler:v1.15.1

docker rmi mirrorgooglecontainers/kube-proxy:v1.15.1

docker rmi mirrorgooglecontainers/pause:3.1

docker rmi mirrorgooglecontainers/etcd:3.3.10

docker rmi coredns/coredns:1.3.1

docker rmi jmgao1983/flannel:latest
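The pull, tag and rmi steps repeat the same pattern for each image, so they can be generated by a loop. The sketch below only prints the commands so they can be reviewed first; the function names mirror_cmds and print_all are hypothetical. The flannel image is left out because its source and target names do not follow the pattern.

```shell
# mirror_cmds SRC DST: print the pull, tag and rmi commands for one image.
mirror_cmds() {
  printf 'docker pull %s\n' "$1"
  printf 'docker tag %s %s\n' "$1" "$2"
  printf 'docker rmi %s\n' "$1"
}

# print_all: emit the commands for the control-plane images listed above.
print_all() {
  for name in kube-apiserver kube-controller-manager kube-scheduler kube-proxy; do
    mirror_cmds "mirrorgooglecontainers/$name:v1.15.1" "k8s.gcr.io/$name:v1.15.1"
  done
  mirror_cmds mirrorgooglecontainers/pause:3.1 k8s.gcr.io/pause:3.1
  mirror_cmds mirrorgooglecontainers/etcd:3.3.10 k8s.gcr.io/etcd:3.3.10
  mirror_cmds coredns/coredns:1.3.1 k8s.gcr.io/coredns:1.3.1
}

# Review the output first, then execute it with:  print_all | sh -e
```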

List the images

docker images

Run the kubeadm initialization again

kubeadm init --kubernetes-version=v1.15.1 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --ignore-preflight-errors=Swap

Record the join command for the worker nodes

kubeadm join 192.168.2.121:6443 --token 1i9d6a.o328eax5vrs2x7xf --discovery-token-ca-cert-hash sha256:c143e64032b929079f97282256c72d11eacb8c5874527de0e466ed1b47f5f5bf
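Bootstrap tokens expire (24 hours by default), and `kubeadm token create --print-join-command` on the master prints a fresh join command. When a recorded command is reused, a quick sanity check on the token can catch copy errors. This is a sketch; the function names join_token and is_bootstrap_token are hypothetical.

```shell
# join_token ARGS...: print the value that follows --token in a join command.
join_token() {
  while [ $# -gt 0 ]; do
    if [ "$1" = "--token" ]; then
      printf '%s\n' "$2"
      return 0
    fi
    shift
  done
  return 1
}

# is_bootstrap_token TOKEN: succeed if TOKEN has the form
# <6 lowercase alphanumerics>.<16 lowercase alphanumerics>.
is_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}
```

For example, `is_bootstrap_token "$(join_token kubeadm join 192.168.2.121:6443 --token 1i9d6a.o328eax5vrs2x7xf)"` succeeds for the token recorded above.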

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

Check the cluster component status

kubectl get cs

Deploy flannel

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

List the images downloaded so far

docker images

---------------------

REPOSITORY                           TAG             IMAGE ID       CREATED         SIZE
k8s.gcr.io/kube-proxy                v1.15.1         89a062da739d   2 weeks ago     82.4MB
k8s.gcr.io/kube-controller-manager   v1.15.1         d75082f1d121   2 weeks ago     159MB
k8s.gcr.io/kube-apiserver            v1.15.1         68c3eb07bfc3   2 weeks ago     207MB
k8s.gcr.io/kube-scheduler            v1.15.1         b0b3c4c404da   2 weeks ago     81.1MB
quay.io/coreos/flannel               v0.11.0-amd64   ff281650a721   6 months ago    52.6MB
k8s.gcr.io/coredns                   1.3.1           eb516548c180   6 months ago    40.3MB
k8s.gcr.io/etcd                      3.3.10          2c4adeb21b4f   8 months ago    258MB
k8s.gcr.io/pause                     3.1             da86e6ba6ca1   19 months ago   742kB

List the namespaces

kubectl get ns

Check pod status in a given namespace

kubectl get pods -n kube-system -o wide

Show pod details

kubectl describe pods kube-proxy-tsnpd -n kube-system

Check the node status

kubectl get nodes

NAME     STATUS   ROLES    AGE    VERSION
master   Ready    master   102m   v1.15.1
node1    Ready    <none>   51m    v1.15.1
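When the cluster grows, it can help to filter this output for nodes that are not ready. A minimal sketch, assuming the default column layout shown above; the function name not_ready_nodes is hypothetical. A status such as Ready,SchedulingDisabled is also reported, since it is not exactly Ready.

```shell
# not_ready_nodes: read `kubectl get nodes` output on stdin and print the
# names of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage on the master:
#   kubectl get nodes | not_ready_nodes
```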

2. Worker node configuration

Add host name resolution

vi /etc/hosts

192.168.2.121 master master.com

192.168.2.122 node1 node1.com

192.168.2.123 node2 node2.com

Configure the yum repositories

cd /etc/yum.repos.d/

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

vi /etc/yum.repos.d/kubernetes.repo

[Kubernetes]

name=Kubernetes Repo

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

enabled=1

wget https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

rpm --import yum-key.gpg

Install the packages

yum install -y docker-ce kubelet kubeadm

Disable swap

swapoff -a

systemctl restart docker.service kubelet.service

systemctl enable docker.service kubelet.service

Join the cluster

kubeadm join 192.168.2.121:6443 --token 1i9d6a.o328eax5vrs2x7xf --discovery-token-ca-cert-hash sha256:c143e64032b929079f97282256c72d11eacb8c5874527de0e466ed1b47f5f5bf

Pull the images manually

docker pull mirrorgooglecontainers/kube-proxy:v1.15.1

docker pull mirrorgooglecontainers/pause:3.1

docker pull jmgao1983/flannel

Retag the images

docker tag mirrorgooglecontainers/kube-proxy:v1.15.1 k8s.gcr.io/kube-proxy:v1.15.1

docker tag mirrorgooglecontainers/pause:3.1 k8s.gcr.io/pause:3.1

docker tag jmgao1983/flannel:latest quay.io/coreos/flannel:v0.11.0-amd64

Remove the redundant images

docker rmi mirrorgooglecontainers/kube-proxy:v1.15.1

docker rmi mirrorgooglecontainers/pause:3.1

docker rmi jmgao1983/flannel:latest

3. Container orchestration management

kubectl run nginx-deploy --image=nginx:1.14-alpine --port=80 --replicas=1 --dry-run=true  # dry-run mode: no pod is created

kubectl run nginx-deploy --image=nginx:1.14-alpine --port=80 --replicas=1

--------------------------------------------------

kubectl get deployment -o wide

NAME           READY   UP-TO-DATE   AVAILABLE   AGE   CONTAINERS     IMAGES              SELECTOR
nginx-deploy   1/1     1            1           52m   nginx-deploy   nginx:1.14-alpine   run=nginx-deploy

-------------------------------------------------

kubectl get pods -o wide

NAME                            READY   STATUS    RESTARTS   AGE   IP           NODE    NOMINATED NODE   READINESS GATES
nginx-deploy-7689897d8d-ldgxj   1/1     Running   0          51m   10.244.1.2   node1   <none>           <none>

--------------------------------------------------------

Expose the service (as a ClusterIP service it is reachable only from the cluster nodes)

kubectl expose deployment nginx-deploy --name=nginx --port=80 --target-port=80

Show pod information

kubectl get pods -n kube-system -o wide

Show service information

kubectl get svc -n kube-system

Show DNS information

cat /etc/resolv.conf

Create a client pod

[root@master ~]# kubectl run client --image=busybox --replicas=1 -it --restart=Never

Resolve the service name

[root@master ~]# dig -t A nginx.default.svc.cluster.local @10.96.0.10
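The name queried above follows the pattern service.namespace.svc.cluster-domain. A small helper can build it for other services; this is a sketch, the function name svc_fqdn is hypothetical, and cluster.local is assumed as the cluster domain because that is what the dig command above uses.

```shell
# svc_fqdn NAME [NAMESPACE] [DOMAIN]: print the cluster DNS name of a service.
# NAMESPACE defaults to "default", DOMAIN to "cluster.local".
svc_fqdn() {
  printf '%s.%s.svc.%s\n' "$1" "${2:-default}" "${3:-cluster.local}"
}

# Usage on the master, with the kube-dns service address used above:
#   dig -t A "$(svc_fqdn nginx)" @10.96.0.10
```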

Show service information

---------------------------------------

[root@master ~]# kubectl get svc

NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1      <none>        443/TCP   28h
nginx        ClusterIP   10.99.55.181   <none>        80/TCP    36m

-----------------------------------------

Show service details

----------------------------------------

[root@master ~]# kubectl describe svc nginx

Name: nginx

Namespace: default

Labels: run=nginx-deploy

Annotations: <none>

Selector: run=nginx-deploy

Type: ClusterIP

IP: 10.99.55.181

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.1.2:80

Session Affinity: None

Events: <none>

--------------------------------------------

Scale the deployment up

kubectl scale --replicas=5 deployment nginx-deploy

Scale the deployment down

kubectl scale --replicas=3 deployment nginx-deploy

Upgrade the deployment

kubectl set image deployment nginx-deploy nginx-deploy=nginx:1.15

Roll back the deployment

kubectl rollout undo deployment nginx-deploy

Change type in the service to NodePort to make it reachable from outside the cluster

kubectl edit svc nginx

type: NodePort

Access from an external browser: http://192.168.2.123:30719/
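The node port in the URL is assigned by the cluster and appears in the PORT(S) column of kubectl get svc as port:nodePort/protocol, for example 80:30719/TCP. The sketch below extracts it from such a value; the function name node_port is hypothetical.

```shell
# node_port PORTS: print the node port from a PORT(S) value such as
# 80:30719/TCP. Fails when the value has no node port (for example 80/TCP).
node_port() {
  case "$1" in
    *:*) p=${1#*:}                 # drop "80:"   -> 30719/TCP
         printf '%s\n' "${p%%/*}"  # drop "/TCP"  -> 30719
         ;;
    *)   return 1 ;;
  esac
}

# Alternative that asks the API directly:
#   kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'
```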

kubectl command completion

echo "source <(kubectl completion bash)" >> ~/.bashrc

source ~/.bashrc

Show pod details in YAML format

kubectl get pod nginx-deploy-7689897d8d-ldgxj -o yaml

Show the pod access log

kubectl logs pod-demo nginx

Create a pod from YAML

mkdir manifests

vi pod-demo.yml

apiVersion: v1
kind: Pod
metadata:
  name: pod-demo
  namespace: default
  labels:
    app: nginx
    tier: frontend
  annotations:
    nginx/create-by: "trent hu"
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
    ports:
    - name: http
      containerPort: 80
    - name: https
      containerPort: 443
    livenessProbe:  # first probe type: httpGet
      httpGet:
        port: http
        path: /index.html
      initialDelaySeconds: 1
      periodSeconds: 3
  - name: busybox
    image: busybox:latest
    command: ["/bin/sh","-c","touch /tmp/healthy; sleep 30; rm -f /tmp/healthy; sleep 3600"]
    livenessProbe:  # second probe type: exec
      exec:
        command: ["test","-e","/tmp/healthy"]
      initialDelaySeconds: 1
      periodSeconds: 3
  nodeSelector:
    disktype: ssd
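An exec liveness probe is judged by the exit status of its command: 0 counts as healthy, anything else as a failure. The following sketch reproduces the busybox check locally with an ordinary file, to show what the kubelet sees before and after the marker file is removed. The function names exec_probe and probe_demo are hypothetical.

```shell
# exec_probe FILE: the same check as the probe command; status 0 = healthy.
exec_probe() {
  test -e "$1"
}

# probe_demo FILE: report the probe result before and after FILE is removed.
probe_demo() {
  touch "$1"
  exec_probe "$1" && echo "healthy"
  rm -f "$1"
  exec_probe "$1" || echo "unhealthy"
}

# Usage:
#   probe_demo /tmp/healthy.demo
```

In the pod above the file disappears after 30 seconds, so the probe starts failing and the busybox container is restarted.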

Create the pod

kubectl create -f pod-demo.yml

Delete the pod

kubectl delete -f pod-demo.yml

Show pod labels

kubectl get pods --show-labels

kubectl get pods -l app --show-labels

Add a new label to a pod

kubectl label pods pod-demo release=canary

kubectl label pods pod-demo release=stable --overwrite  # change the label value

Find pods by label

kubectl get pods -l release

kubectl get pods -l "release in (alpha,stable)"

Label a node

kubectl label nodes node1 disktype=ssd

Show pod details

kubectl describe pod pod-demo

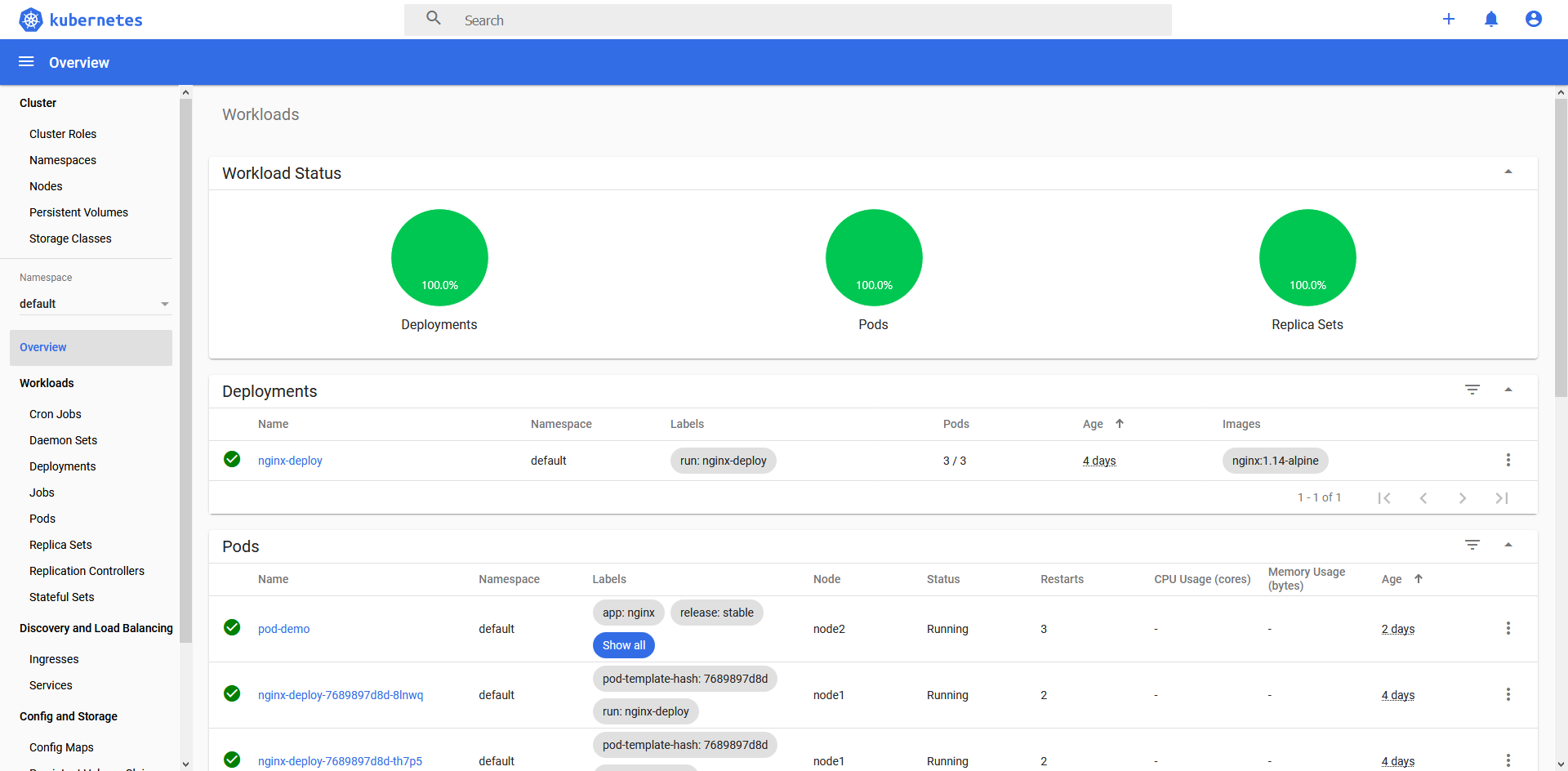

Deploy the dashboard

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta1/aio/deploy/recommended.yaml

Show pod information

kubectl get pods -n kube-system

Pull the images manually

kubernetesui/dashboard:v2.0.0-beta1

kubernetesui/metrics-scraper:v1.0.0

docker pull kubernetes-dashboard-amd64

Retag the image

docker tag k8s.gcr.io/kubernetes-dashboard-amd64:v2.0.0-beta1 kubernetesui/dashboard:v2.0.0-beta1

Remove the redundant image

docker rmi k8s.gcr.io/kubernetes-dashboard-amd64:v2.0.0-beta1

Edit the dashboard resource manifest

----------------------------------------------

vi kube-dashboard.yml

apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque
---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.0.0-beta1
          imagePullPolicy: Never
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: kubernetes-metrics-scraper
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-metrics-scraper
  name: kubernetes-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: kubernetes-metrics-scraper
    spec:
      containers:
        - name: kubernetes-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.0
          imagePullPolicy: Never
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule

Reinstall the dashboard

kubectl apply -f kube-dashboard.yml

Check the dashboard pods

kubectl get pods --all-namespaces

kubectl get pods -n kubernetes-dashboard

kubectl describe pods kubernetes-dashboard-b799bf77c-7v429 -n kubernetes-dashboard

View the dashboard logs

kubectl logs -f kubernetes-dashboard-b799bf77c-7v429 --namespace=kubernetes-dashboard

Check the dashboard service

kubectl get svc --all-namespaces

kubectl get svc -n kubernetes-dashboard

Publish the dashboard on an external address

kubectl patch svc kubernetes-dashboard -p '{"spec":{"type":"NodePort"}}' -n kubernetes-dashboard

Open the dashboard UI

https://192.168.2.122:32352/#/login

Create the manifest for the dashboard admin user

vi dashboard-admin-user.yml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system

Create the manifest that binds the user to the cluster-admin role

vi cluster-role-binding.yml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system

kubectl apply -f dashboard-admin-user.yml

kubectl apply -f cluster-role-binding.yml

Get the login token

kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')
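The describe output contains several fields, and only the token: line is needed for the login page. A minimal sketch that filters it out, assuming the field is printed as "token:" followed by the value on one line; the function name secret_token is hypothetical.

```shell
# secret_token: read `kubectl describe secret` output on stdin and print
# only the value of the "token:" field.
secret_token() {
  awk '$1 == "token:" { print $2 }'
}

# Usage on the master: append "| secret_token" to the describe command above.
```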

Open the dashboard UI and choose the token login method

https://192.168.2.122:32352/#/login

Build an image

cd /home/hello/

--------------------------------------------

vi server.js

var http = require('http');

var handleRequest = function(request, response) {
  console.log('Received request for URL: ' + request.url);
  response.writeHead(200);
  response.end('Hello World!');
};

var www = http.createServer(handleRequest);

www.listen(8080);

------------------------------------------------

vi Dockerfile

FROM node:6.14.2

EXPOSE 8080

COPY server.js .

CMD node server.js

Build the image

docker build /home/hello/ --tag=hello-node

Common management commands

Show the Kubernetes version

kubectl version

Show cluster information

kubectl cluster-info

Check the node status

kubectl get nodes

Deploy an application

kubectl run kubernetes-bootcamp --image=fernandox/kubernetes-bootcamp:v1 --port=8080

Check the deployment status

kubectl get deployments

Check the replica set status

kubectl get rs -o wide

Watch pod updates as they happen

kubectl get pod -w

Check the pod status of the deployed application

kubectl get pods

Show pod details

kubectl describe pods kubernetes-bootcamp-8644c875c7-jds9m

Start the proxy

kubectl proxy

Query the API through the proxy

curl http://localhost:8001/version

Get the pod name

export POD_NAME=$(kubectl get pods -o go-template --template '{{range .items}}{{.metadata.name}}{{"\n"}}{{end}}')

echo Name of the Pod: $POD_NAME

Access the deployed application

curl http://localhost:8001/api/v1/namespaces/default/pods/kubernetes-bootcamp-8644c875c7-jds9m/proxy/
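The proxy URL has a fixed shape: API prefix, namespace, pod name, then /proxy/. A helper can assemble it from the pod name so it does not have to be typed by hand. This is a sketch; the function name pod_proxy_url is hypothetical, and port 8001 is assumed because that is where kubectl proxy listens in the commands above.

```shell
# pod_proxy_url POD [NAMESPACE] [PORT]: print the kubectl-proxy URL of a pod.
# NAMESPACE defaults to "default", PORT to 8001.
pod_proxy_url() {
  printf 'http://localhost:%s/api/v1/namespaces/%s/pods/%s/proxy/\n' \
    "${3:-8001}" "${2:-default}" "$1"
}

# Usage while `kubectl proxy` is running in another terminal:
#   curl "$(pod_proxy_url kubernetes-bootcamp-8644c875c7-jds9m)"
```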

Show the pod access log

kubectl logs kubernetes-bootcamp-8644c875c7-jds9m

Show the pod environment variables

kubectl exec kubernetes-bootcamp-8644c875c7-jds9m env

Open a shell in the pod's container

kubectl exec -it kubernetes-bootcamp-8644c875c7-jds9m bash

View the application code

root@kubernetes-bootcamp-8644c875c7-jds9m:/# cat server.js

Access the application from inside the container

root@kubernetes-bootcamp-8644c875c7-jds9m:/# curl localhost:8080

Exit the container

root@kubernetes-bootcamp-8644c875c7-jds9m:/# exit

Show service information

kubectl get services

Expose the service externally

kubectl expose deployment kubernetes-bootcamp --type="NodePort" --port 8080

Access the deployed application from outside or from any node

http://192.168.2.123:31751/

Show service details

kubectl describe services kubernetes-bootcamp

Show deployment details

kubectl describe deployments kubernetes-bootcamp

Query pods, deployments and services by label

kubectl get pods,deployments,services -l run=kubernetes-bootcamp

Add a label to a resource

kubectl label pod kubernetes-bootcamp-8644c875c7-jds9m app=v1

Show resource labels

kubectl get pods kubernetes-bootcamp-8644c875c7-jds9m --show-labels

Delete the service

kubectl delete service -l run=kubernetes-bootcamp  # delete by label

kubectl delete service kubernetes-bootcamp  # delete by name

Change the number of replicas

kubectl scale deployments kubernetes-bootcamp --replicas=4

kubectl scale deployments kubernetes-bootcamp --replicas=2

kubectl patch deployments kubernetes-bootcamp -p '{"spec":{"replicas":2}}'
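The patch argument is a small JSON document, so the replica count can be passed in as a parameter rather than edited by hand. A minimal sketch that builds the same JSON and rejects anything that is not a non-negative integer; the function name replicas_patch is hypothetical.

```shell
# replicas_patch N: print the JSON patch that sets spec.replicas to N.
replicas_patch() {
  case "$1" in
    ''|*[!0-9]*)
      echo "replica count must be a non-negative integer" >&2
      return 1
      ;;
  esac
  printf '{"spec":{"replicas":%s}}\n' "$1"
}

# Usage on the master:
#   kubectl patch deployments kubernetes-bootcamp -p "$(replicas_patch 2)"
```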

Show extended pod information

kubectl get pods -l run=kubernetes-bootcamp -o wide

Update the application

kubectl set image deployments/kubernetes-bootcamp kubernetes-bootcamp=mricheng/kubernetes-bootcamp:v2

Roll back the application version

kubectl rollout undo deployments kubernetes-bootcamp --to-revision=1  # roll back to a specific revision

Watch the rollout progress

kubectl rollout status deployment kubernetes-bootcamp

kubectl get pods -w
