Rewriting the Low-Level C++ Code of the OpenVINO Object Detection Sample (To Be Optimized)

System: CentOS 7.4

I: Installing OpenVINO

Reference: https://docs.openvinotoolkit.org/latest/_docs_install_guides_installing_openvino_linux.html

II: Converting the TensorFlow model with the OpenVINO Model Optimizer (reference, SSD part)

https://www.cnblogs.com/fourmi/p/10888513.html

Working directory: /opt/intel/openvino/deployment_tools/model_optimizer

Command:

python3.6 mo_tf.py --input_model=/home/gsj/object-detection/test_models/ssd_inception_v2_coco_2018_01_28/frozen_inference_graph.pb --tensorflow_use_custom_operations_config /opt/intel/openvino_2019.2.201/deployment_tools/model_optimizer/extensions/front/tf/ssd_v2_support.json --tensorflow_object_detection_api_pipeline_config /home/gsj/object-detection/test_models/ssd_inception_v2_coco_2018_01_28/pipeline.config --reverse_input_channels --batch 32

III: Path of the rewritten files:

/opt/intel/openvino/inference_engine/samples/self_object_detection

Files included:

main.cpp, self_object_detection_engine_head.h, self_object_detection.h, CMakeLists.txt, README.md

1. main.cpp

// Copyright (C) 2018-2019 Intel Corporation
// SPDX-License-Identifier: Apache-2.0
//
/*******************************************************************
 * Copyright: 2019-2030, CC
 * File Name: main.cpp
 * Description: main function includes ObjectDetection,
 *              Initialize_Check, readInputimagesNames,
 *              load_inference_engine, readIRfiles,
 *              prepare_input_blobs, load_and_create_request,
 *              prepare_input, process
 * Author: Gao Shengjun
 * Date: 2019-07-19
 *******************************************************************/
#include <gflags/gflags.h>
#include <cstdlib>
#include <iostream>
#include <string>
#include <memory>
#include <vector>
#include <algorithm>
#include <map>

#include <format_reader_ptr.h>
#include <inference_engine.hpp>
#include <ext_list.hpp>

#include <samples/common.hpp>
#include <samples/slog.hpp>
#include <samples/args_helper.hpp>

#include <vpu/vpu_tools_common.hpp>
#include <vpu/vpu_plugin_config.hpp>

#include "self_object_detection_engine_head.h"

// Returns false only when -h was given (usage has then been printed);
// throws if a required parameter is missing.
bool ParseAndCheckCommandLine(int argc, char *argv[]) {
    gflags::ParseCommandLineNonHelpFlags(&argc, &argv, true);
    if (FLAGS_h) {
        showUsage();
        showAvailableDevices();
        return false;
    }

    slog::info << "Parsing input parameters" << slog::endl;

    if (FLAGS_i.empty()) {
        throw std::logic_error("Parameter -i is not set");
    }

    if (FLAGS_m.empty()) {
        throw std::logic_error("Parameter -m is not set");
    }

    return true;
}

static std::map<std::string, std::string> configure(const std::string& confFileName) {
    auto config = parseConfig(confFileName);
    return config;
}

void Initialize_Check_params(int argc, char* argv[]) {
    slog::info << "InferenceEngine: " << GetInferenceEngineVersion() << "\n";
    if (!ParseAndCheckCommandLine(argc, argv)) {
        // -h was given: the usage text has been printed, so stop here
        // instead of continuing into inference with no inputs.
        std::exit(EXIT_SUCCESS);
    }
}

std::vector<std::string> readInputimagesNames() {
    std::vector<std::string> images;
    parseInputFilesArguments(images);
    if (images.empty()) throw std::logic_error("No suitable images were found");
    return images;
}

void load_inference_engine(Core &ie) {
    slog::info << "Loading Inference Engine" << slog::endl;
    slog::info << "Device info:" << slog::endl;
    std::cout << ie.GetVersions(FLAGS_d);
    if (FLAGS_p_msg) {
        ie.SetLogCallback(error_listener);
    }
    if (FLAGS_d.find("CPU") != std::string::npos) {
        ie.AddExtension(std::make_shared<Extensions::Cpu::CpuExtensions>(), "CPU");
    }
    if (!FLAGS_l.empty()) {
        IExtensionPtr extension_ptr = make_so_pointer<IExtension>(FLAGS_l);
        ie.AddExtension(extension_ptr, "CPU");
        slog::info << "CPU Extension loaded: " << FLAGS_l << slog::endl;
    }
    if (!FLAGS_c.empty()) {
        ie.SetConfig({{PluginConfigParams::KEY_CONFIG_FILE, FLAGS_c}}, "GPU");
        slog::info << "GPU Extension loaded: " << FLAGS_c << slog::endl;
    }
}

struct NetworkReader_networkinfo readIRfiles() {
    struct NetworkReader_networkinfo nettools;
    // The .bin weights file is expected next to the .xml file, with the same base name.
    std::string binFileName = fileNameNoExt(FLAGS_m) + ".bin";
    slog::info << "Loading network files:"
        "\n\t" << FLAGS_m <<
        "\n\t" << binFileName <<
        slog::endl;
    CNNNetReader networkReader;
    networkReader.ReadNetwork(FLAGS_m);
    networkReader.ReadWeights(binFileName);
    CNNNetwork network = networkReader.getNetwork();
    nettools.networkReader = networkReader;
    nettools.network = network;
    return nettools;
}

struct inputInfo_imageName prepare_input_blobs(CNNNetwork &network, CNNNetReader &networkReader, InputsDataMap &inputsInfo) {
    slog::info << "Preparing input blobs" << slog::endl;
    struct inputInfo_imageName res;
    if (inputsInfo.size() != 1 && inputsInfo.size() != 2) throw std::logic_error("Sample supports topologies only with 1 or 2 inputs");
    std::string imageInputName, imInfoInputName;
    InputInfo::Ptr inputInfo = nullptr;
    SizeVector inputImageDims;
    for (auto &item : inputsInfo) {
        if (item.second->getInputData()->getTensorDesc().getDims().size() == 4) {
            // 4-D input: the image tensor (NCHW), fed as U8
            imageInputName = item.first;
            inputInfo = item.second;
            slog::info << "Batch size is " << std::to_string(networkReader.getNetwork().getBatchSize()) << slog::endl;
            Precision inputPrecision = Precision::U8;
            item.second->setPrecision(inputPrecision);
        } else if (item.second->getInputData()->getTensorDesc().getDims().size() == 2) {
            // 2-D input: the image-info tensor, fed as FP32
            imInfoInputName = item.first;
            Precision inputPrecision = Precision::FP32;
            item.second->setPrecision(inputPrecision);
            if ((item.second->getTensorDesc().getDims()[1] != 3 && item.second->getTensorDesc().getDims()[1] != 6)) {
                throw std::logic_error("Invalid input info. Should be 3 or 6 values length");
            }
        }
    }
    if (inputInfo == nullptr) {
        inputInfo = inputsInfo.begin()->second;
    }
    res.inputInfo = inputInfo;
    res.InputName = imageInputName;
    res.imInfoInputName = imInfoInputName;
    return res;
}

struct outputInfoStruct prepare_output_blobs(CNNNetwork &network) {
    struct outputInfoStruct res_output;
    slog::info << "Preparing output blobs" << slog::endl;
    OutputsDataMap outputsInfo(network.getOutputsInfo());
    std::string outputName;
    DataPtr outputInfo;
    for (const auto& out : outputsInfo) {
        if (out.second->getCreatorLayer().lock()->type == "DetectionOutput") {
            outputName = out.first;
            outputInfo = out.second;
        }
    }
    if (outputInfo == nullptr) {
        throw std::logic_error("Can't find a DetectionOutput layer in the topology");
    }
    const SizeVector outputDims = outputInfo->getTensorDesc().getDims();
    // Check the rank before indexing dimensions 2 and 3.
    if (outputDims.size() != 4) {
        throw std::logic_error("Incorrect output dimensions for SSD model");
    }
    res_output.maxProposalCount = outputDims[2];
    res_output.objectSize = outputDims[3];
    res_output.outputName = outputName;
    if (res_output.objectSize != 7) {
        throw std::logic_error("Output item should have 7 as a last dimension");
    }

    outputInfo->setPrecision(Precision::FP32);
    return res_output;
}

struct exenet_requests load_and_create_request(CNNNetwork& network, Core &ie) {
    struct exenet_requests res;
    slog::info << "Loading model to the device" << slog::endl;
    res.executable_network = ie.LoadNetwork(network, FLAGS_d, configure(FLAGS_config));
    slog::info << "Create infer request" << slog::endl;
    res.infer_request = res.executable_network.CreateInferRequest();
    return res;
}

struct res_outputStruct prepare_input(std::vector<std::string>& images, CNNNetwork& network, struct inputInfo_imageName& res, InferRequest& infer_request, InputsDataMap& inputsInfo) {
    struct res_outputStruct output_res2;
    std::vector<std::shared_ptr<unsigned char>> imageData, originalImagesData;
    std::vector<size_t> imageWidths, imageHeights;
    for (auto &i : images) {
        FormatReader::ReaderPtr reader(i.c_str());
        if (reader.get() == nullptr) {
            slog::warn << "Image " + i + " cannot be read!" << slog::endl;
            continue;
        }
        /** Keep the original image for drawing and a copy resized to the network input **/
        std::shared_ptr<unsigned char> originalData(reader->getData());
        std::shared_ptr<unsigned char> data(reader->getData(res.inputInfo->getTensorDesc().getDims()[3], res.inputInfo->getTensorDesc().getDims()[2]));
        if (data.get() != nullptr) {
            originalImagesData.push_back(originalData);
            imageData.push_back(data);
            imageWidths.push_back(reader->width());
            imageHeights.push_back(reader->height());
        }
    }
    if (imageData.empty()) throw std::logic_error("Valid input images were not found!");
    size_t batchSize = network.getBatchSize();
    slog::info << "Batch Size is " << std::to_string(batchSize) << slog::endl;
    if (batchSize != imageData.size()) {
        slog::warn << "Number of images " + std::to_string(imageData.size()) +
            " doesn't match batch size " + std::to_string(batchSize) << slog::endl;
        batchSize = std::min(batchSize, imageData.size());
        slog::warn << "Number of images to be processed is " << std::to_string(batchSize) << slog::endl;
    }
    Blob::Ptr imageInput = infer_request.GetBlob(res.InputName);
    size_t num_channels = imageInput->getTensorDesc().getDims()[1];
    size_t image_size = imageInput->getTensorDesc().getDims()[3] * imageInput->getTensorDesc().getDims()[2];
    unsigned char* data = static_cast<unsigned char*>(imageInput->buffer());
    /** Copy every image from interleaved (HWC) order into the planar (NCHW) input blob **/
    for (size_t image_id = 0; image_id < std::min(imageData.size(), batchSize); ++image_id) {
        for (size_t pid = 0; pid < image_size; pid++) {
            for (size_t ch = 0; ch < num_channels; ++ch) {
                data[image_id * image_size * num_channels + ch * image_size + pid] = imageData.at(image_id).get()[pid * num_channels + ch];
            }
        }
    }
    if (res.imInfoInputName != "") {
        Blob::Ptr input2 = infer_request.GetBlob(res.imInfoInputName);
        auto imInfoDim = inputsInfo.find(res.imInfoInputName)->second->getTensorDesc().getDims()[1];
        float *p = input2->buffer().as<PrecisionTrait<Precision::FP32>::value_type*>();
        /** Fill the image-info input: height, width, then 1.0 for the remaining values **/
        for (size_t image_id = 0; image_id < std::min(imageData.size(), batchSize); ++image_id) {
            p[image_id * imInfoDim + 0] = static_cast<float>(inputsInfo[res.InputName]->getTensorDesc().getDims()[2]);
            p[image_id * imInfoDim + 1] = static_cast<float>(inputsInfo[res.InputName]->getTensorDesc().getDims()[3]);
            for (size_t k = 2; k < imInfoDim; ++k) {
                p[image_id * imInfoDim + k] = 1.0f;
            }
        }
    }
    output_res2.originalImagesData = originalImagesData;
    output_res2.imageWidths = imageWidths;
    output_res2.imageHeights = imageHeights;
    output_res2.batchSize = batchSize;
    slog::info << "Start inference" << slog::endl;
    infer_request.Infer();
    return output_res2;
}

void process(InferRequest& infer_request, std::string& outputName, size_t& batchSize, const int& maxProposalCount, const int& objectSize, std::vector<size_t>& imageWidths, std::vector<size_t>& imageHeights, std::vector<std::shared_ptr<unsigned char>>& originalImagesData) {
    slog::info << "Processing output blobs" << slog::endl;
    const Blob::Ptr output_blob = infer_request.GetBlob(outputName);
    const float* detection = static_cast<PrecisionTrait<Precision::FP32>::value_type*>(output_blob->buffer());
    std::vector<std::vector<int>> boxes(batchSize);
    std::vector<std::vector<int>> classes(batchSize);
    std::cout << imageWidths[0] << "--" << imageHeights[0] << " " << detection[3] << std::endl;

    /** Each detection is a row of objectSize (7) floats:
        [image_id, label, confidence, xmin, ymin, xmax, ymax], coordinates normalized to [0, 1] **/
    for (int curProposal = 0; curProposal < maxProposalCount; curProposal++) {
        auto image_id = static_cast<int>(detection[curProposal * objectSize + 0]);
        if (image_id < 0) { break; }
        /** Skip rows that point at a batch slot for which no image was loaded **/
        if (static_cast<size_t>(image_id) >= batchSize) { continue; }
        float confidence = detection[curProposal * objectSize + 2];
        auto label = static_cast<int>(detection[curProposal * objectSize + 1]);
        auto xmin = static_cast<int>(detection[curProposal * objectSize + 3] * imageWidths[image_id]);
        auto ymin = static_cast<int>(detection[curProposal * objectSize + 4] * imageHeights[image_id]);
        auto xmax = static_cast<int>(detection[curProposal * objectSize + 5] * imageWidths[image_id]);
        auto ymax = static_cast<int>(detection[curProposal * objectSize + 6] * imageHeights[image_id]);
        std::cout << "[" << curProposal << "," << label << "] element, prob = " << confidence <<
            " (" << xmin << "," << ymin << ")-(" << xmax << "," << ymax << ")" << " batch id : " << image_id;
        if (confidence > 0.3) {
            /** Boxes are stored as (x, y, width, height) for addRectangles **/
            classes[image_id].push_back(label);
            boxes[image_id].push_back(xmin);
            boxes[image_id].push_back(ymin);
            boxes[image_id].push_back(xmax - xmin);
            boxes[image_id].push_back(ymax - ymin);
            std::cout << " WILL BE PRINTED!";
        }
        std::cout << std::endl;
    }
    for (size_t batch_id = 0; batch_id < batchSize; ++batch_id) {
        addRectangles(originalImagesData[batch_id].get(), imageHeights[batch_id], imageWidths[batch_id], boxes[batch_id], classes[batch_id], BBOX_THICKNESS);
        const std::string image_path = "out_" + std::to_string(batch_id) + ".bmp";
        if (writeOutputBmp(image_path, originalImagesData[batch_id].get(), imageHeights[batch_id], imageWidths[batch_id])) {
            slog::info << "Image " + image_path + " created!" << slog::endl;
        } else {
            throw std::logic_error(std::string("Can't create a file: ") + image_path);
        }
    }
}

/****************************************MAIN***************************************************/

int main(int argc, char *argv[]) {
    try {
        /** This sample covers certain topology and cannot be generalized for any object detection one **/
        // --------------------------- 1. Parsing and validation of input args ---------------------------------
        Initialize_Check_params(argc, argv);
        // --------------------------- 2. Read input -----------------------------------------------------------
        /** This vector stores paths to the processed images **/
        std::vector<std::string> images = readInputimagesNames();
        // -----------------------------------------------------------------------------------------------------

        // --------------------------- 3. Load inference engine -------------------------------------
        Core ie;
        load_inference_engine(ie);

        // --------------------------- 4. Read IR Generated by ModelOptimizer (.xml and .bin files) ------------
        /** Read the IR once; calling readIRfiles() for each member would load the network twice **/
        struct NetworkReader_networkinfo ir = readIRfiles();
        CNNNetwork network = ir.network;
        CNNNetReader networkReader = ir.networkReader;
        // -----------------------------------------------------------------------------------------------------

        // --------------------------- 5. Prepare input blobs --------------------------------------------------
        /** Taking information about all topology inputs **/
        InputsDataMap inputsInfo(network.getInputsInfo());

        struct inputInfo_imageName res = prepare_input_blobs(network, networkReader, inputsInfo);
        InputInfo::Ptr inputInfo = res.inputInfo;
        std::string imageInputName = res.InputName;
        std::string imInfoInputName = res.imInfoInputName;
        // -----------------------------------------------------------------------------------------------------

        // --------------------------- 6. Prepare output blobs -------------------------------------------------
        struct outputInfoStruct res_output = prepare_output_blobs(network);
        const int maxProposalCount = res_output.maxProposalCount;
        const int objectSize = res_output.objectSize;
        std::string outputName = res_output.outputName;
        // -----------------------------------------------------------------------------------------------------

        // --------------------------- 7. Loading model to the device ------------------------------------------
        struct exenet_requests exe_req = load_and_create_request(network, ie);
        ExecutableNetwork executable_network = exe_req.executable_network;
        InferRequest infer_request = exe_req.infer_request;
        // -----------------------------------------------------------------------------------------------------
        // --------------------------- 8. Prepare input --------------------------------------------------------
        struct res_outputStruct out_struct = prepare_input(images, network, res, infer_request, inputsInfo);
        std::vector<std::shared_ptr<unsigned char>> originalImagesData = out_struct.originalImagesData;
        std::vector<size_t> imageWidths = out_struct.imageWidths;
        std::vector<size_t> imageHeights = out_struct.imageHeights;
        size_t batchSize = out_struct.batchSize;
        // -----------------------------------------------------------------------------------------------------
        // --------------------------- 9. Process output -------------------------------------------------------
        process(infer_request, outputName, batchSize, maxProposalCount, objectSize, imageWidths, imageHeights, originalImagesData);
        // -----------------------------------------------------------------------------------------------------
    }
    catch (const std::exception& error) {
        slog::err << error.what() << slog::endl;
        return 1;
    }
    catch (...) {
        slog::err << "Unknown/internal exception happened." << slog::endl;
        return 1;
    }

    slog::info << "Execution successful" << slog::endl;
    slog::info << slog::endl << "This sample is an API example, for any performance measurements "
        "please use the dedicated benchmark_app tool" << slog::endl;
    return 0;
}
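The copy loop in prepare_input() reorders each image from interleaved (pixel-by-pixel, HWC) storage into the planar NCHW layout of the input blob. The following is a minimal standalone sketch of that index arithmetic only; `packBatchNCHW` is a hypothetical helper written for illustration, not an OpenVINO function, and it works on plain `std::vector` buffers instead of Inference Engine blobs.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of OpenVINO): copies a batch of interleaved
// HWC images into one planar NCHW buffer, using the same index formula as
// the loop in prepare_input().
std::vector<unsigned char> packBatchNCHW(
        const std::vector<std::vector<unsigned char>>& images,  // each holds H*W*C bytes
        std::size_t channels,
        std::size_t imageSize /* H * W */) {
    std::vector<unsigned char> blob(images.size() * channels * imageSize);
    for (std::size_t n = 0; n < images.size(); ++n) {
        // Every image must already be resized to the network input size.
        assert(images[n].size() == channels * imageSize);
        for (std::size_t pid = 0; pid < imageSize; ++pid) {
            for (std::size_t ch = 0; ch < channels; ++ch) {
                // Destination: image n, channel plane ch, pixel pid.
                // Source: pixel pid, channel ch (interleaved).
                blob[n * channels * imageSize + ch * imageSize + pid] =
                    images[n][pid * channels + ch];
            }
        }
    }
    return blob;
}
```

For a two-pixel, three-channel image stored as `{1,2,3, 4,5,6}`, the function returns `{1,4, 2,5, 3,6}`: all values of channel 0 first, then channel 1, then channel 2.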

2. self_object_detection_engine_head.h

/*******************************************************************
 * Copyright: 2019-2030, CC
 * File Name: self_object_detection_engine_head.h
 * Description: main function includes ObjectDetection,
 *              Initialize_Check, readInputimagesNames,
 *              load_inference_engine, readIRfiles,
 *              prepare_input_blobs, load_and_create_request,
 *              prepare_input, process
 * Author: Gao Shengjun
 * Date: 2019-07-19
 *******************************************************************/
#ifndef SELF_OBJECT_DETECTION_ENGINE_HEAD_H
#define SELF_OBJECT_DETECTION_ENGINE_HEAD_H

#include "self_object_detection.h"
#include <gflags/gflags.h>
#include <iostream>
#include <string>
#include <memory>
#include <vector>
#include <algorithm>
#include <map>

#include <format_reader_ptr.h>
#include <inference_engine.hpp>
#include <ext_list.hpp>

#include <samples/common.hpp>
#include <samples/slog.hpp>
#include <samples/args_helper.hpp>

#include <vpu/vpu_tools_common.hpp>
#include <vpu/vpu_plugin_config.hpp>
using namespace InferenceEngine;
ConsoleErrorListener error_listener;

typedef struct NetworkReader_networkinfo {
    CNNNetReader networkReader;
    CNNNetwork network;
} NetworkReader_networkinfo;

typedef struct inputInfo_imageName {
    InputInfo::Ptr inputInfo;
    std::string InputName;
    std::string imInfoInputName;
} inputInfo_imageName;

typedef struct outputInfoStruct {
    int maxProposalCount;
    int objectSize;
    std::string outputName;
} outputInfoStruct;

typedef struct exenet_requests {
    ExecutableNetwork executable_network;
    InferRequest infer_request;
} exenet_requests;

typedef struct res_outputStruct {
    std::vector<std::shared_ptr<unsigned char>> originalImagesData;
    std::vector<size_t> imageWidths;
    std::vector<size_t> imageHeights;
    size_t batchSize;
} res_outputStruct;

#ifdef __cplusplus
extern "C"
{
#endif

/**
 * @brief Print the InferenceEngine version and parse the input parameters.
 *        Prints the help text if requested and checks the required
 *        parameters: both the model file and the input images must be given.
 * @param[in] argc: the number of input parameters,
 *            argv: the array that stores the input parameters
 * @param[out] None
 */
void Initialize_Check_params(int argc, char* argv[]);

/**
 * @brief Get the file names of the input images and store them in a vector.
 *        The image arguments are parsed by calling OpenVINO's built-in
 *        "parseInputFilesArguments".
 * @param[in] None
 * @param[out] returns the file names of the images
 */
std::vector<std::string> readInputimagesNames();

/**
 * @brief Load the extension plugins for the selected device, e.g. CPU or GPU.
 * @param[in] Core &ie
 * @param[out] None
 */
void load_inference_engine(Core& ie);

/**
 * @brief Read the network's topology and weights files produced by the OpenVINO Model Optimizer.
 * @param[in] FLAGS_m: the model files *.xml, *.bin
 * @param[out] struct containing the NetworkReader and the structure info of the model
 */
struct NetworkReader_networkinfo readIRfiles();

/**
 * @brief Find the image input and the image-info input in inputsInfo and set the precision
 *        of each input according to its format, e.g. [NCHW]: U8, [HW]: FP32.
 * @param[in] network, CNNNetReader, inputsInfo
 * @param[out] struct containing the inputInfo, the image input name and the image-info input name
 */
struct inputInfo_imageName prepare_input_blobs(CNNNetwork &network, CNNNetReader &networkReader, InputsDataMap &inputsInfo);

/**
 * @brief Get the output info of the network by calling OpenVINO's "getOutputsInfo()".
 * @param[in] network
 * @param[out] struct containing the model's maxProposalCount, objectSize, outputName
 */
struct outputInfoStruct prepare_output_blobs(CNNNetwork &network);

/**
 * @brief Load the network to the device and create the infer request.
 * @param[in] network, Core
 * @param[out] struct containing the executable network and the infer request
 */
struct exenet_requests load_and_create_request(CNNNetwork& network, Core &ie);

/**
 * @brief Read the input images, fill the input blob and start the infer request.
 * @param[in] images: the paths of the images, network: the info of the model,
 *            inputInfo_imageName, infer_request, inputsInfo
 * @param[out] returns the data of the input images with their heights, widths and the batch size
 */
struct res_outputStruct prepare_input(std::vector<std::string>& images, CNNNetwork& network, struct inputInfo_imageName& res, InferRequest& infer_request, InputsDataMap& inputsInfo);

/**
 * @brief Read the output blob and extract (label, xmin, ymin, xmax, ymax) for each detection box.
 *        The confidence threshold is set to 0.3, which gave the better result.
 * @param[in] infer_request, outputName, batchSize, maxProposalCount, objectSize, imageWidths, imageHeights, originalImagesData
 * @param[out] None
 */
void process(InferRequest& infer_request, std::string& outputName, size_t& batchSize, const int& maxProposalCount, const int& objectSize, std::vector<size_t>& imageWidths, std::vector<size_t>& imageHeights, std::vector<std::shared_ptr<unsigned char>>& originalImagesData);
#ifdef __cplusplus
}
#endif
#endif
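readIRfiles() receives only the .xml path from -m and expects the .bin weights file next to it under the same base name. A minimal sketch of that path derivation follows, assuming a hypothetical helper `binPathFor` (it is not an OpenVINO or sample function). Unlike a plain "cut at the last dot", it ignores dots that belong to a directory name, which matters for versioned paths such as openvino_2019.2.201.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Hypothetical helper (illustration only): derives the weights path from the
// topology path by replacing the file extension with ".bin".
std::string binPathFor(const std::string& xmlPath) {
    assert(!xmlPath.empty());
    const std::size_t slash = xmlPath.find_last_of("/\\");
    const std::size_t dot = xmlPath.rfind('.');
    // Only a dot after the last path separator counts as an extension.
    const bool hasExt = dot != std::string::npos &&
                        (slash == std::string::npos || dot > slash);
    return (hasExt ? xmlPath.substr(0, dot) : xmlPath) + ".bin";
}
```

Called with `/opt/intel/openvino_2019.2.201/deployment_tools/model_optimizer/./frozen_inference_graph.xml`, it returns the same path ending in `frozen_inference_graph.bin`.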

3. self_object_detection.h

// Copyright (C) 2018-2019 Intel Corporation
// SPDX-License-Identifier: Apache-2.0
//

#pragma once

#include <string>
#include <vector>
#include <gflags/gflags.h>
#include <iostream>

/* thickness of a line (in pixels) to be used for bounding boxes */
#define BBOX_THICKNESS 2

/// @brief message for help argument
static const char help_message[] = "Print a usage message.";

/// @brief message for images argument
static const char image_message[] = "Required. Path to an .bmp image.";

/// @brief message for model argument
static const char model_message[] = "Required. Path to an .xml file with a trained model.";

/// @brief message for plugin argument
static const char plugin_message[] = "Plugin name. For example MKLDNNPlugin. If this parameter is pointed, " \
"the sample will look for this plugin only";

/// @brief message for assigning cnn calculation to device
static const char target_device_message[] = "Optional. Specify the target device to infer on (the list of available devices is shown below). " \
"Default value is CPU. Use \"-d HETERO:<comma-separated_devices_list>\" format to specify HETERO plugin. " \
"Sample will look for a suitable plugin for device specified";

/// @brief message for clDNN custom kernels desc
static const char custom_cldnn_message[] = "Required for GPU custom kernels. "\
"Absolute path to the .xml file with the kernels descriptions.";

/// @brief message for user library argument
static const char custom_cpu_library_message[] = "Required for CPU custom layers. " \
"Absolute path to a shared library with the kernels implementations.";

/// @brief message for plugin messages
static const char plugin_err_message[] = "Optional. Enables messages from a plugin";

/// @brief message for config argument
static constexpr char config_message[] = "Path to the configuration file. Default value: \"config\".";

/// \brief Define flag for showing help message <br>
DEFINE_bool(h, false, help_message);

/// \brief Define parameter for set image file <br>
/// It is a required parameter
DEFINE_string(i, "", image_message);

/// \brief Define parameter for set model file <br>
/// It is a required parameter
DEFINE_string(m, "", model_message);

/// \brief device the target device to infer on <br>
DEFINE_string(d, "CPU", target_device_message);

/// @brief Define parameter for clDNN custom kernels path <br>
/// Default is ./lib
DEFINE_string(c, "", custom_cldnn_message);

/// @brief Absolute path to CPU library with user layers <br>
/// It is a optional parameter
DEFINE_string(l, "", custom_cpu_library_message);

/// @brief Enable plugin messages
DEFINE_bool(p_msg, false, plugin_err_message);

/// @brief Define path to plugin config
DEFINE_string(config, "", config_message);

/**
 * \brief This function show a help message
 */
static void showUsage() {
    std::cout << std::endl;
    std::cout << "object_detection_sample_ssd [OPTION]" << std::endl;
    std::cout << "Options:" << std::endl;
    std::cout << std::endl;
    std::cout << "    -h                      " << help_message << std::endl;
    std::cout << "    -i \"<path>\"             " << image_message << std::endl;
    std::cout << "    -m \"<path>\"             " << model_message << std::endl;
    std::cout << "      -l \"<absolute_path>\"  " << custom_cpu_library_message << std::endl;
    std::cout << "          Or" << std::endl;
    std::cout << "      -c \"<absolute_path>\"  " << custom_cldnn_message << std::endl;
    std::cout << "    -d \"<device>\"           " << target_device_message << std::endl;
    std::cout << "    -p_msg                  " << plugin_err_message << std::endl;
}

IV: Build

Working directory:

/opt/intel/openvino/inference_engine/samples

sh ./build_samples.sh

V: Test

Working directory:

/root/inference_engine_samples_build/intel64/Release

Command:

./self_object_detection -m /opt/intel/openvino_2019.2.201/deployment_tools/model_optimizer/./frozen_inference_graph.xml -d CPU -i /home/gsj/dataset/coco_val/val8

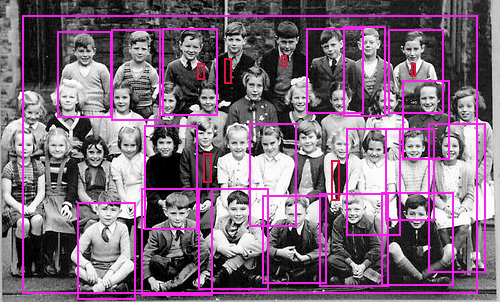

VI: Result

[ INFO ] InferenceEngine:
    API version ............ 2.0
    Build .................. custom_releases/2019/R2_3044732e25bc7dfbd11a54be72e34d512862b2b3
    Description ....... API
Parsing input parameters
[ INFO ] Files were added: 8
[ INFO ] /home/gsj/dataset/coco_val/val8/000000000785.jpg
[ INFO ] /home/gsj/dataset/coco_val/val8/000000000139.jpg
[ INFO ] /home/gsj/dataset/coco_val/val8/000000000724.jpg
[ INFO ] /home/gsj/dataset/coco_val/val8/000000000285.jpg
[ INFO ] /home/gsj/dataset/coco_val/val8/000000000802.jpg
[ INFO ] /home/gsj/dataset/coco_val/val8/000000000632.jpg
[ INFO ] /home/gsj/dataset/coco_val/val8/000000000872.jpg
[ INFO ] /home/gsj/dataset/coco_val/val8/000000000776.jpg
[ INFO ] Loading Inference Engine
[ INFO ] Device info:
    CPU
    MKLDNNPlugin version ......... 2.0
    Build ........... 26451
[ INFO ] Loading network files:
    /opt/intel/openvino_2019.2.201/deployment_tools/model_optimizer/./frozen_inference_graph.xml
    /opt/intel/openvino_2019.2.201/deployment_tools/model_optimizer/./frozen_inference_graph.bin
[ INFO ] Loading network files:
    /opt/intel/openvino_2019.2.201/deployment_tools/model_optimizer/./frozen_inference_graph.xml
    /opt/intel/openvino_2019.2.201/deployment_tools/model_optimizer/./frozen_inference_graph.bin
[ INFO ] Preparing input blobs
[ INFO ] Batch size is 32
[ INFO ] Preparing output blobs
[ INFO ] Loading model to the device
[ INFO ] Create infer request
[ WARNING ] Image is resized from (640, 425) to (300, 300)
[ WARNING ] Image is resized from (640, 426) to (300, 300)
[ WARNING ] Image is resized from (375, 500) to (300, 300)
[ WARNING ] Image is resized from (586, 640) to (300, 300)
[ WARNING ] Image is resized from (424, 640) to (300, 300)
[ WARNING ] Image is resized from (640, 483) to (300, 300)
[ WARNING ] Image is resized from (621, 640) to (300, 300)
[ WARNING ] Image is resized from (428, 640) to (300, 300)
[ INFO ] Batch Size is 32
[ WARNING ] Number of images 8dosen't match batch size 32
[ WARNING ] Number of images to be processed is 8
[ INFO ] Start inference
[ INFO ] Processing output blobs
640--425 0.452099
[0,1] element, prob = 0.851202 (289,42)-(485,385) batch id : 0 WILL BE PRINTED!
[1,35] element, prob = 0.495117 (205,360)-(600,397) batch id : 0 WILL BE PRINTED!
[2,1] element, prob = 0.376695 (380,177)-(398,208) batch id : 1 WILL BE PRINTED!
[3,1] element, prob = 0.337178 (398,159)-(459,292) batch id : 1 WILL BE PRINTED!
[4,62] element, prob = 0.668834 (365,214)-(428,310) batch id : 1 WILL BE PRINTED!
[5,62] element, prob = 0.558071 (294,220)-(365,321) batch id : 1 WILL BE PRINTED!
[6,62] element, prob = 0.432652 (388,205)-(440,307) batch id : 1 WILL BE PRINTED!
[7,62] element, prob = 0.313619 (218,228)-(300,319) batch id : 1 WILL BE PRINTED!
[8,64] element, prob = 0.488229 (217,178)-(269,217) batch id : 1 WILL BE PRINTED!
[9,72] element, prob = 0.885867 (9,165)-(149,263) batch id : 1 WILL BE PRINTED!
[10,86] element, prob = 0.305516 (239,196)-(255,217) batch id : 1 WILL BE PRINTED!
[11,3] element, prob = 0.332538 (117,284)-(146,309) batch id : 2 WILL BE PRINTED!
[12,13] element, prob = 0.992781 (122,73)-(254,223) batch id : 2 WILL BE PRINTED!
[13,23] element, prob = 0.988277 (4,75)-(580,632) batch id : 3 WILL BE PRINTED!
[14,79] element, prob = 0.981469 (29,292)-(161,516) batch id : 4 WILL BE PRINTED!
[15,82] element, prob = 0.94848 (244,189)-(412,525) batch id : 4 WILL BE PRINTED!
[16,62] element, prob = 0.428106 (255,233)-(348,315) batch id : 5 WILL BE PRINTED!
[17,64] element, prob = 0.978832 (336,217)-(429,350) batch id : 5 WILL BE PRINTED!
[18,64] element, prob = 0.333557 (192,148)-(235,234) batch id : 5 WILL BE PRINTED!
[19,65] element, prob = 0.985633 (-5,270)-(404,477) batch id : 5 WILL BE PRINTED!
[20,84] element, prob = 0.882272 (461,246)-(472,288) batch id : 5 WILL BE PRINTED!
[21,84] element, prob = 0.874527 (494,192)-(503,223) batch id : 5 WILL BE PRINTED!
[22,84] element, prob = 0.850498 (482,247)-(500,285) batch id : 5 WILL BE PRINTED!
[23,84] element, prob = 0.844409 (461,296)-(469,335) batch id : 5 WILL BE PRINTED!
[24,84] element, prob = 0.787552 (414,24)-(566,364) batch id : 5 WILL BE PRINTED!
[25,84] element, prob = 0.748578 (524,189)-(533,224) batch id : 5 WILL BE PRINTED!
[26,84] element, prob = 0.735457 (524,98)-(535,133) batch id : 5 WILL BE PRINTED!
[27,84] element, prob = 0.712015 (528,49)-(535,84) batch id : 5 WILL BE PRINTED!
[28,84] element, prob = 0.689215 (496,51)-(504,82) batch id : 5 WILL BE PRINTED!
[29,84] element, prob = 0.620327 (456,192)-(467,224) batch id : 5 WILL BE PRINTED!
[30,84] element, prob = 0.614535 (481,154)-(514,173) batch id : 5 WILL BE PRINTED!
[31,84] element, prob = 0.609089 (506,151)-(537,172) batch id : 5 WILL BE PRINTED!
[32,84] element, prob = 0.604894 (456,148)-(467,181) batch id : 5 WILL BE PRINTED!
[33,84] element, prob = 0.554959 (485,102)-(505,125) batch id : 5 WILL BE PRINTED!
[34,84] element, prob = 0.549844 (508,244)-(532,282) batch id : 5 WILL BE PRINTED!
[35,84] element, prob = 0.404613 (437,143)-(443,180) batch id : 5 WILL BE PRINTED!
[36,84] element, prob = 0.366167 (435,245)-(446,287) batch id : 5 WILL BE PRINTED!
[37,84] element, prob = 0.320608 (438,191)-(446,226) batch id : 5 WILL BE PRINTED!
[38,1] element, prob = 0.996094 (197,115)-(418,568) batch id : 6 WILL BE PRINTED!
[39,1] element, prob = 0.9818 (266,99)-(437,542) batch id : 6 WILL BE PRINTED!
[40,1] element, prob = 0.517957 (152,117)-(363,610) batch id : 6 WILL BE PRINTED!
[41,40] element, prob = 0.302339 (154,478)-(192,542) batch id : 6 WILL BE PRINTED!
[42,88] element, prob = 0.98227 (8,24)-(372,533) batch id : 7 WILL BE PRINTED!
[43,88] element, prob = 0.924668 (0,268)-(323,640) batch id : 7 WILL BE PRINTED!
[ INFO ] Image out_0.bmp created!
[ INFO ] Image out_1.bmp created!
[ INFO ] Image out_2.bmp created!
[ INFO ] Image out_3.bmp created!
[ INFO ] Image out_4.bmp created!
[ INFO ] Image out_5.bmp created!
[ INFO ] Image out_6.bmp created!
[ INFO ] Image out_7.bmp created!
[ INFO ] Execution successful
[ INFO ] This sample is an API example, for any performance measurements please use the dedicated benchmark_app tool
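Each line of the form "[n,label] element, prob = ..." in the log comes from one row of seven floats in the output blob: image id, label, confidence, then xmin, ymin, xmax, ymax normalized to [0, 1]. The sketch below shows how such rows turn into pixel boxes. It is a standalone illustration on a plain float vector; `Box` and `decodeDetections` are hypothetical names introduced here, and the guard against out-of-range image ids is an added assumption for batches that are only partly filled.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical result type for this illustration.
struct Box {
    int imageId;
    int label;
    float confidence;
    int xmin, ymin, xmax, ymax;  // pixel coordinates in the original image
};

// Hypothetical helper: decodes rows of 7 floats
// [image_id, label, confidence, xmin, ymin, xmax, ymax] into pixel boxes
// and keeps only those above the confidence threshold.
std::vector<Box> decodeDetections(const std::vector<float>& detection,
                                  const std::vector<std::size_t>& widths,
                                  const std::vector<std::size_t>& heights,
                                  float threshold) {
    assert(widths.size() == heights.size());
    const std::size_t objectSize = 7;
    std::vector<Box> kept;
    for (std::size_t i = 0; i + objectSize <= detection.size(); i += objectSize) {
        const float* row = &detection[i];
        const int imageId = static_cast<int>(row[0]);
        if (imageId < 0) break;  // treated as the end of valid rows, as in main.cpp
        if (static_cast<std::size_t>(imageId) >= widths.size()) continue;  // no such image
        if (row[2] <= threshold) continue;  // below the confidence threshold
        const float w = static_cast<float>(widths[imageId]);
        const float h = static_cast<float>(heights[imageId]);
        kept.push_back({imageId, static_cast<int>(row[1]), row[2],
                        static_cast<int>(row[3] * w), static_cast<int>(row[4] * h),
                        static_cast<int>(row[5] * w), static_cast<int>(row[6] * h)});
    }
    return kept;
}
```

The log prints "640--425 0.452099" before the first detection, i.e. a 640-pixel-wide first image and a normalized xmin of 0.452099. Multiplying and truncating gives 289, which is the xmin shown in the first logged box, (289,42).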
