Hadoop Distributed Cluster Installation

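Before building the cluster, set up passwordless SSH authentication from the NameNode (NN) node to every other node, and disable SELinux and the firewall on all servers.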

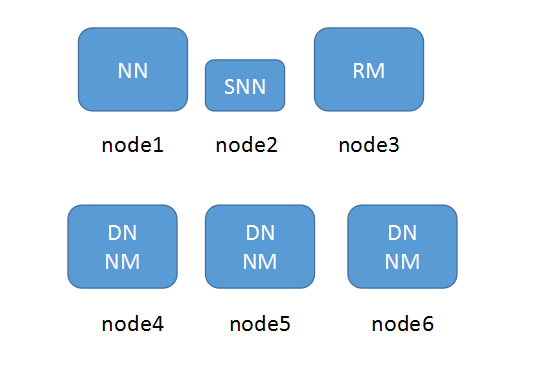

Architecture diagram

1. Set the hostname on every server and add the matching entries to the hosts file

    [root@localhost ~]# hostnamectl set-hostname node1
    [root@localhost ~]# cat /etc/hosts
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.159.129 node1
    192.168.159.130 node2
    192.168.159.132 node3
    192.168.159.133 node4
    192.168.159.136 node5
    192.168.159.137 node6
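The six node entries above can be generated instead of typed by hand on each server. This is a minimal sketch that assumes the IP addresses listed in the hosts file above; the function name `gen_hosts` is hypothetical.

```shell
# Hypothetical helper: print one "IP hostname" line per cluster node.
# The addresses are the ones shown in /etc/hosts above.
gen_hosts() {
    n=1
    for ip in 192.168.159.129 192.168.159.130 192.168.159.132 \
              192.168.159.133 192.168.159.136 192.168.159.137; do
        printf '%s node%d\n' "$ip" "$n"
        n=$((n + 1))
    done
}

# Print the entries; nothing is written to /etc/hosts here.
gen_hosts
```

To apply it, the output could be appended on each server with `gen_hosts >> /etc/hosts`.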

2. Set up passwordless SSH login from the NameNode node to all hosts

    [root@localhost ~]# ssh-keygen
    Generating public/private rsa key pair.
    Enter file in which to save the key (/root/.ssh/id_rsa):
    Created directory '/root/.ssh'.
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /root/.ssh/id_rsa.
    Your public key has been saved in /root/.ssh/id_rsa.pub.
    The key fingerprint is:
    SHA256:lIvGygyJHycNTZJ0KeuE/BM0BWGGq/UTgMUQNo7Qm2M root@node1
    The key's randomart image is:
    +---[RSA 2048]----+
    |+@=**o |
    |*.XB. . |
    |oo+*o o |
    |.+E=.. o . |
    |o=*o+.+ S |
    |...Xoo |
    | . =. |
    | |
    | |
    +----[SHA256]-----+
    [root@localhost ~]# for i in $(seq 1 6); do ssh-copy-id root@node$i; done
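After the keys are copied, it can help to confirm that every node really accepts key-based login before moving on. The following is a sketch only: the function name `check_ssh` is hypothetical, and it assumes the node names node1 to node6 resolve as configured in step 1.

```shell
# Hypothetical helper: report which nodes accept key-based login.
# BatchMode=yes makes ssh fail instead of prompting for a password.
check_ssh() {
    failed=0
    for i in $(seq 1 6); do
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "root@node$i" true; then
            echo "node$i: ok"
        else
            echo "node$i: FAILED"
            failed=1
        fi
    done
    # Non-zero exit status if any node refused the login.
    return "$failed"
}
```

Running `check_ssh` on node1 prints one line per node and returns a non-zero status if any login failed.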

3. Synchronize the time on all servers

    [root@node1 ~]# ansible all -m shell -o -a 'ntpdate ntp1.aliyun.com'
    node4 | CHANGED | rc=0 | (stdout) 20 Feb 16:08:37 ntpdate[2477]: adjust time server 120.25.115.20 offset 0.001546 sec
    node6 | CHANGED | rc=0 | (stdout) 20 Feb 16:08:37 ntpdate[2470]: adjust time server 120.25.115.20 offset 0.000220 sec
    node2 | CHANGED | rc=0 | (stdout) 20 Feb 16:08:37 ntpdate[2406]: adjust time server 120.25.115.20 offset -0.002414 sec
    node3 | CHANGED | rc=0 | (stdout) 20 Feb 16:08:37 ntpdate[2465]: adjust time server 120.25.115.20 offset -0.001185 sec
    node5 | CHANGED | rc=0 | (stdout) 20 Feb 16:08:37 ntpdate[2466]: adjust time server 120.25.115.20 offset 0.005768 sec
    node7 | CHANGED | rc=0 | (stdout) 20 Feb 16:08:43 ntpdate[2503]: adjust time server 120.25.115.20 offset 0.000703 sec
    node8 | CHANGED | rc=0 | (stdout) 20 Feb 16:08:43 ntpdate[2426]: adjust time server 120.25.115.20 offset -0.001338 sec

4. Install the JDK on all servers and configure its environment variables

    [root@node1 ~]# tar -xf jdk-8u144-linux-x64.gz -C /usr/
    [root@node1 ~]# ln -sv /usr/jdk1.8.0_144/ /usr/java
    "/usr/java" -> "/usr/jdk1.8.0_144/"
    [root@node1 ~]# cat /etc/profile.d/java.sh
    export JAVA_HOME=/usr/java
    export PATH=$PATH:$JAVA_HOME/bin
    [root@node1 ~]# source /etc/profile.d/java.sh
    [root@node1 ~]# java -version
    java version "1.8.0_144"
    Java(TM) SE Runtime Environment (build 1.8.0_144-b01)
    Java HotSpot(TM) 64-Bit Server VM (build 25.144-b01, mixed mode)

5. Configure the Hadoop environment on all servers

    [root@node1 ~]# tar xf hadoop-2.9.2.tar.gz -C /usr
    [root@node1 ~]# ln -sv /usr/hadoop-2.9.2/ /usr/hadoop
    "/usr/hadoop" -> "/usr/hadoop-2.9.2/"
    [root@node1 ~]# cat /etc/profile.d/hadoop.sh
    export HADOOP_HOME=/usr/hadoop-2.9.2
    export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

Set the JAVA_HOME environment variable in hadoop-env.sh, mapred-env.sh, and yarn-env.sh inside the Hadoop package:

    [root@node1 ~]# grep 'export JAVA_HOME' /usr/hadoop/etc/hadoop/{hadoop-env.sh,mapred-env.sh,yarn-env.sh}
    /usr/hadoop/etc/hadoop/hadoop-env.sh:export JAVA_HOME=/usr/java
    /usr/hadoop/etc/hadoop/mapred-env.sh:export JAVA_HOME=/usr/java
    /usr/hadoop/etc/hadoop/yarn-env.sh:export JAVA_HOME=/usr/java
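The grep above only shows the end result. One possible way to make the change is with sed, sketched below. The function name `set_java_home` is hypothetical, and the sketch assumes each file already contains an `export JAVA_HOME=` line, either active or commented out with `#`.

```shell
# Hypothetical helper: point JAVA_HOME at /usr/java in one env script.
# Rewrites an existing "export JAVA_HOME=..." line, commented out or not.
set_java_home() {
    file=$1
    tmp=$(mktemp) || return 1
    if sed 's|^#\{0,1\} *export JAVA_HOME=.*|export JAVA_HOME=/usr/java|' "$file" > "$tmp"; then
        # Overwrite in place so the file keeps its owner and permissions.
        cat "$tmp" > "$file"
    fi
    rm -f "$tmp"
}

# Apply to the three scripts named above, skipping any that are absent.
for f in hadoop-env.sh mapred-env.sh yarn-env.sh; do
    env_file=/usr/hadoop/etc/hadoop/$f
    if [ -f "$env_file" ]; then
        set_java_home "$env_file"
    fi
done
```

Files without any `export JAVA_HOME=` line are left unchanged, so the grep shown above is still a useful check afterwards.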

6. core-site.xml

    <configuration>
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://node1:9000</value>
      </property>
      <property>
        <name>hadoop.tmp.dir</name>
        <value>/usr/data/hadoop-new</value>
      </property>
    </configuration>

6.1 Create the Hadoop data directory (the hadoop.tmp.dir path set above, /usr/data/hadoop-new) on every server
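No command is shown for this step. A minimal sketch, assuming the passwordless SSH from step 2 is in place and the directory is the `hadoop.tmp.dir` value from core-site.xml; the function name `make_data_dirs` is hypothetical.

```shell
# Hypothetical helper: create the hadoop.tmp.dir directory on node1..node6.
# mkdir -p succeeds even if the directory already exists.
make_data_dirs() {
    for i in $(seq 1 6); do
        ssh "root@node$i" mkdir -p /usr/data/hadoop-new || return 1
    done
}
```

Calling `make_data_dirs` from node1 stops at the first node where the directory cannot be created.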

7. hdfs-site.xml

    <configuration>
      <property>
        <name>dfs.replication</name>
        <value>3</value>
      </property>
      <property>
        <name>dfs.namenode.secondary.http-address</name>
        <value>node2:50090</value>
      </property>
    </configuration>
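One way to confirm that the values from core-site.xml and hdfs-site.xml are actually picked up is the `hdfs getconf -confKey` command. This is a sketch, not part of the original procedure: the function name `show_conf` is hypothetical, and it assumes `hdfs` is on the PATH as configured in step 5.

```shell
# Hypothetical helper: print the effective value of each key set above.
show_conf() {
    for key in fs.defaultFS hadoop.tmp.dir dfs.replication \
               dfs.namenode.secondary.http-address; do
        printf '%s = %s\n' "$key" "$(hdfs getconf -confKey "$key")"
    done
}
```

Running `show_conf` on any node should echo back the values from the two files; a different value suggests that node is reading another configuration directory.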

8. mapred-site.xml

    <configuration>
      <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
      </property>
    </configuration>

9. yarn-site.xml

    <configuration>
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
      <property>
        <name>yarn.resourcemanager.hostname</name>
        <value>node3</value>
      </property>
    </configuration>

10. slaves

    node4
    node5
    node6
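The configuration files from steps 5 to 10 need to be identical on every node. If they were edited on node1 only, one way to distribute them is with scp, sketched below. The function name `sync_conf` and the `CONF_DIR` variable are hypothetical; the default path is the configuration directory used in step 5, so adjust it if your install path differs.

```shell
# Hypothetical helper: copy the config files from node1 to node2..node6.
# CONF_DIR defaults to the directory used in step 5.
sync_conf() {
    conf=${CONF_DIR:-/usr/hadoop/etc/hadoop}
    for i in $(seq 2 6); do
        scp -r "$conf"/* "root@node$i:$conf/" || return 1
    done
}
```

Run `sync_conf` on node1 after the last configuration change and before formatting HDFS.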

11. Format HDFS on the NameNode node to initialize the cluster metadata

    [root@node1 hadoop-new]# hdfs namenode -format

12. Start all nodes. Run from node1, start-all.sh launches the ResourceManager on the local host (see the resourcemanager-node1.out log line in the output) instead of on node3, so the ResourceManager must be started by hand on the RM node

    [root@node1 hadoop-new]# sbin/start-all.sh
    This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh
    Starting namenodes on [node1]
    node1: starting namenode, logging to /usr/hadoop-new/logs/hadoop-root-namenode-node1.out
    node5: starting datanode, logging to /usr/hadoop-new/logs/hadoop-root-datanode-node5.out
    node4: starting datanode, logging to /usr/hadoop-new/logs/hadoop-root-datanode-node4.out
    node6: starting datanode, logging to /usr/hadoop-new/logs/hadoop-root-datanode-node6.out
    Starting secondary namenodes [node2]
    node2: starting secondarynamenode, logging to /usr/hadoop-new/logs/hadoop-root-secondarynamenode-node2.out
    starting yarn daemons
    starting resourcemanager, logging to /usr/hadoop-new/logs/yarn-root-resourcemanager-node1.out
    node4: starting nodemanager, logging to /usr/hadoop-new/logs/yarn-root-nodemanager-node4.out
    node5: starting nodemanager, logging to /usr/hadoop-new/logs/yarn-root-nodemanager-node5.out
    node6: starting nodemanager, logging to /usr/hadoop-new/logs/yarn-root-nodemanager-node6.out

13. Start the ResourceManager manually on its node

    [root@node3 ~]# /usr/hadoop-new/sbin/yarn-daemon.sh start resourcemanager
    starting resourcemanager, logging to /usr/hadoop-new/logs/yarn-root-resourcemanager-node3.out
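Steps 12 and 13 can also be driven from node1 without the deprecated start-all.sh. The sketch below is an alternative, not the procedure used above: it runs start-dfs.sh, starts the NodeManagers with yarn-daemons.sh, and starts the ResourceManager on node3 over SSH. The function name `start_cluster` and the `HADOOP_SBIN` variable are hypothetical; the default path is the sbin directory that appears in the logs above.

```shell
# Hypothetical helper: start HDFS and YARN from node1.
# HADOOP_SBIN defaults to the sbin path seen in the logs above.
start_cluster() {
    sbin=${HADOOP_SBIN:-/usr/hadoop-new/sbin}
    # NameNode, DataNodes, and SecondaryNameNode.
    "$sbin/start-dfs.sh" || return 1
    # NodeManagers on every host listed in the slaves file.
    "$sbin/yarn-daemons.sh" start nodemanager || return 1
    # ResourceManager on the host named in yarn.resourcemanager.hostname.
    ssh root@node3 "$sbin/yarn-daemon.sh start resourcemanager"
}
```

Because the ResourceManager is started directly on node3, no stray ResourceManager start is attempted on node1.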

14. Check the running processes on every node

    [root@node1 hadoop-new]# jps
    5043 NameNode
    5503 Jps
    [root@node2 ~]# jps
    1396 SecondaryNameNode
    1557 Jps
    [root@node3 ~]# jps
    1414 ResourceManager
    1967 Jps
    [root@node4 ~]# jps
    1505 NodeManager
    1686 Jps
    1401 DataNode
    [root@node5 ~]# jps
    1508 NodeManager
    1404 DataNode
    1678 Jps
    [root@node6 ~]# jps
    1409 DataNode
    1690 Jps
    1519 NodeManager
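Logging in to each node to run jps gets tedious, so the same check can be scripted from node1. This is a sketch under stated assumptions: the function names `expected_daemons` and `check_daemons` are hypothetical, the role mapping is the one shown in the jps output above, and jps is called by its full path (/usr/java/bin/jps, from step 4) because a non-interactive SSH session may not load /etc/profile.d.

```shell
# Daemons each node is expected to run, per the jps output above.
expected_daemons() {
    case $1 in
        node1) echo "NameNode" ;;
        node2) echo "SecondaryNameNode" ;;
        node3) echo "ResourceManager" ;;
        node4|node5|node6) echo "DataNode NodeManager" ;;
    esac
}

# Run jps on every node over SSH and report any expected daemon that is absent.
check_daemons() {
    missing=0
    for i in $(seq 1 6); do
        running=$(ssh "root@node$i" /usr/java/bin/jps)
        for d in $(expected_daemons "node$i"); do
            # jps prints "PID Name"; match the whole name at end of line.
            if printf '%s\n' "$running" | grep -q " $d\$"; then
                echo "node$i: $d running"
            else
                echo "node$i: $d MISSING"
                missing=1
            fi
        done
    done
    return "$missing"
}
```

`check_daemons` returns a non-zero status when any expected daemon is missing, which makes it usable in a larger script.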

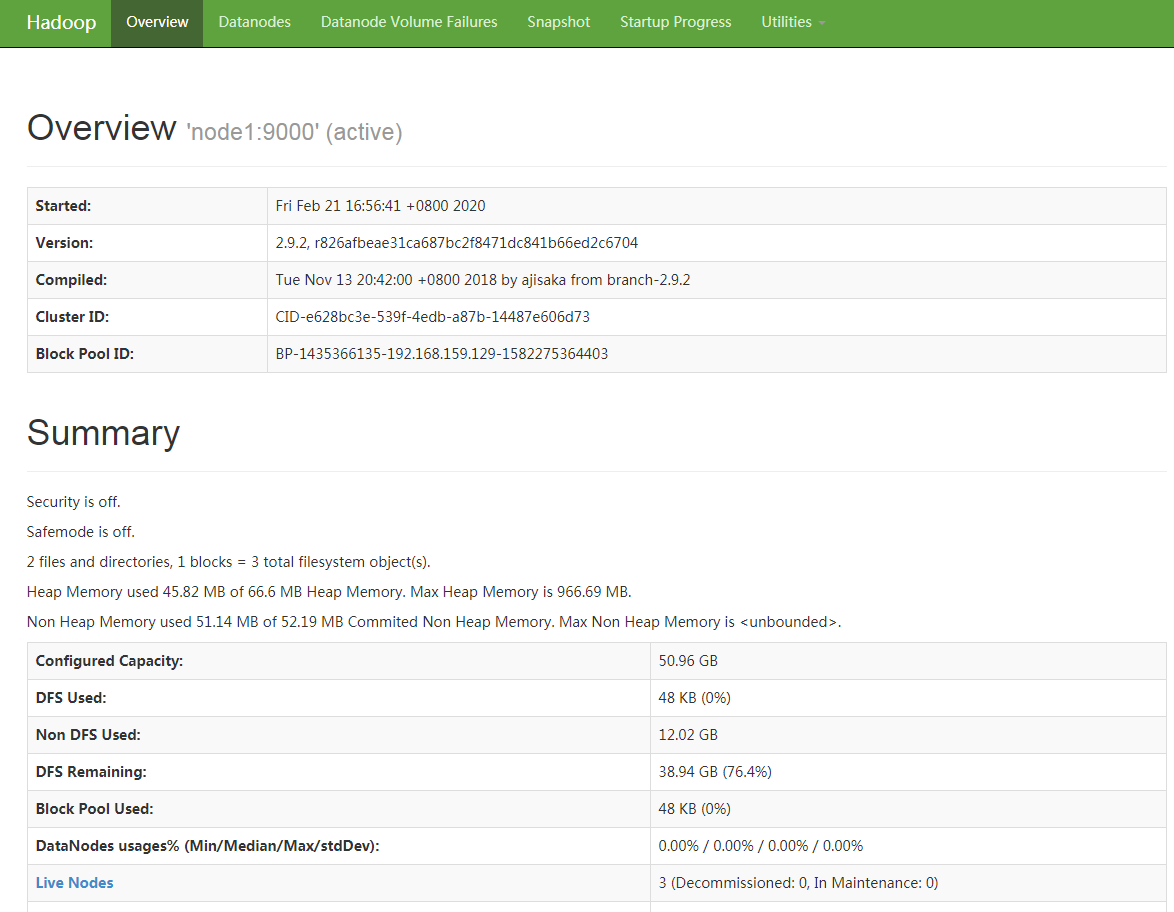

- View the NameNode web UI (port 50070 by default in Hadoop 2.x)

- View the ResourceManager web UI (port 8088 by default)

- Upload a file to HDFS

    /usr/hadoop-new/bin/hdfs dfs -put /etc/fstab /

- Word count example

    /usr/hadoop-new/bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.9.2.jar wordcount /fstab /word.count
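To sanity-check the job result, the same count can be approximated locally on the original file. This is a rough local equivalent, not the MapReduce code itself: it assumes the example splits words on whitespace, and the function name `local_wordcount` is hypothetical.

```shell
# Hypothetical helper: count whitespace-separated words in a local file,
# printing "word<TAB>count" sorted by word in byte order.
local_wordcount() {
    tr -s '[:space:]' '\n' < "$1" | sed '/^$/d' | LC_ALL=C sort | uniq -c |
        awk '{ printf "%s\t%d\n", $2, $1 }'
}
```

For example, `local_wordcount /etc/fstab` can be compared with the job output, which with a single reducer is typically readable via `hdfs dfs -cat /word.count/part-r-00000`.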

I am new to Linux and write things down as I learn them. If anything here is wrong, please bear with me!
