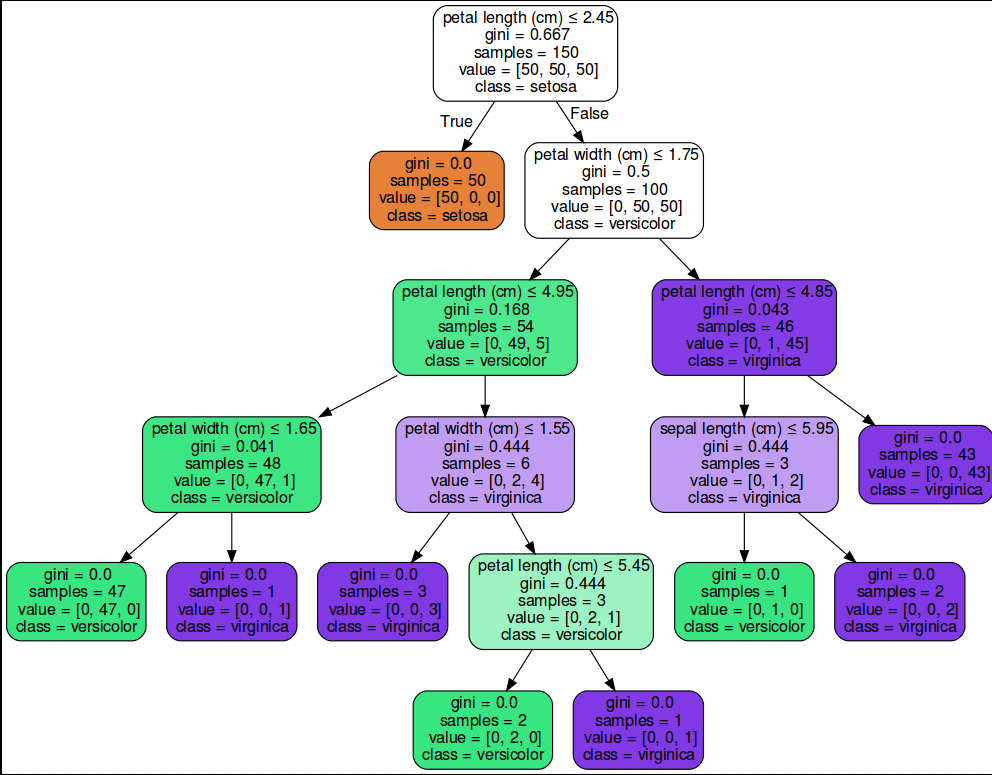

Plotting decision trees

Drawing the decision tree

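Once a decision tree has been trained, it can be exported in Graphviz format with the `export_graphviz` exporter. If you use conda to manage packages, you can install the graphviz binaries and the Python package with: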

```shell
conda install python-graphviz
```

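Alternatively, download the graphviz binaries from the Graphviz project homepage and install the Python wrapper from PyPI with `pip install graphviz`. Below is an example of a graphviz export of a tree trained on the entire iris dataset; the result is saved in `iris.pdf`: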

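```python
from sklearn.datasets import load_iris
from sklearn import tree

iris = load_iris()

# Train a decision tree on the full iris dataset
clf_iris = tree.DecisionTreeClassifier()
clf_iris = clf_iris.fit(iris.data, iris.target)
```

The `export_graphviz` exporter also supports a variety of aesthetic options. These include coloring nodes by their class (or by value for regression) and, if desired, using explicit feature and class names.

*Note: `graph.render("iris")` writes the rendered graph to a file (`iris.pdf`) rather than displaying it.*

*For more details, see the official scikit-learn documentation for `sklearn.tree.export_graphviz`.*

Jupyter notebooks also render these plots inline automatically. The code below exports our decision tree: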

```python
import graphviz

# Export the trained tree as DOT source (out_file=None returns it as a string)
dot_data = tree.export_graphviz(clf_iris, out_file=None)
graph = graphviz.Source(dot_data)
graph.render("iris")  # writes the rendered tree to iris.pdf

# Export again, coloring nodes by class and using explicit feature/class names
dot_data = tree.export_graphviz(clf_iris, out_file=None,
                                feature_names=iris.feature_names,
                                class_names=iris.target_names,
                                filled=True, rounded=True,
                                special_characters=True)
graph = graphviz.Source(dot_data)
graph  # in Jupyter, the last expression in a cell is displayed inline
```

*After that, the classifier can still be used for prediction and other operations as usual:*

```python
>>> clf_iris.predict(iris.data[:1, :])
array([0])
```
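A prediction like the one above sends the sample from the root down one branch per split until it reaches a leaf. The sketch below prints that path. It assumes the same iris setup, with `random_state=0` added only to make the fitted tree reproducible. It uses scikit-learn's `apply`, `decision_path` and the low-level `tree_` arrays; the printed format is illustrative, not part of any API.

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier

iris = load_iris()
# random_state=0 is an addition here, only to make the tree reproducible
clf_iris = DecisionTreeClassifier(random_state=0).fit(iris.data, iris.target)

sample = iris.data[:1, :]  # first flower in the dataset, shape (1, 4)

# apply() returns the index of the leaf node each sample ends up in
leaf_id = clf_iris.apply(sample)[0]

# decision_path() returns a sparse indicator matrix whose entry (i, j) is
# nonzero when sample i passes through node j; for one row, .indices holds
# the node ids visited from the root to the leaf
node_indices = clf_iris.decision_path(sample).indices

tree_ = clf_iris.tree_
for node in node_indices:
    if node == leaf_id:
        predicted = iris.target_names[clf_iris.predict(sample)[0]]
        print(f"leaf {node}: predicted class {predicted}")
    else:
        feat = tree_.feature[node]      # feature tested at this node
        thr = tree_.threshold[node]     # split threshold
        goes_left = sample[0, feat] <= thr  # scikit-learn sends <= to the left child
        print(f"node {node}: {iris.feature_names[feat]} = {sample[0, feat]} "
              f"{'<=' if goes_left else '>'} {thr:.2f}")
```

If you pass `node_ids=True` to `export_graphviz`, each box in the drawing is labeled with the same node ID. This makes it easy to match the printed path against the rendered tree.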

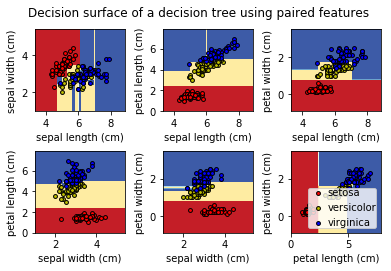
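You may prefer to keep the DOT source as a file, for example to render it later with the Graphviz command-line tools installed earlier. In that case, `export_graphviz` also accepts a file name for `out_file`. The minimal sketch below assumes the file name `iris.dot` (an arbitrary choice) and adds `random_state=0` for reproducibility; it writes the same DOT text that `out_file=None` would return.

```python
from sklearn import tree
from sklearn.datasets import load_iris

iris = load_iris()
clf_iris = tree.DecisionTreeClassifier(random_state=0).fit(iris.data, iris.target)

# Shared styling options so both exports produce identical DOT text
export_kwargs = dict(feature_names=iris.feature_names,
                     class_names=iris.target_names,
                     filled=True, rounded=True)

# out_file=None: the DOT source is returned as a string
dot_data = tree.export_graphviz(clf_iris, out_file=None, **export_kwargs)
print(dot_data.splitlines()[0])  # first line of the DOT graph definition

# A file name instead writes the DOT source to disk ("iris.dot" is arbitrary)
tree.export_graphviz(clf_iris, out_file="iris.dot", **export_kwargs)
```

You can then render the file with the Graphviz `dot` program, e.g. `dot -Tpdf iris.dot -o iris.pdf`. The Python `graphviz` package is not needed for this route.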

Plotting the decision regions of a decision tree

```python
import numpy as np
import matplotlib.pyplot as plt

from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier

# Parameters
n_classes = 3
plot_colors = "ryb"
plot_step = 0.02

# Load data
iris = load_iris()

for pairidx, pair in enumerate([[0, 1], [0, 2], [0, 3],
                                [1, 2], [1, 3], [2, 3]]):
    # We only take the two corresponding features
    X = iris.data[:, pair]
    y = iris.target

    # Train
    clf = DecisionTreeClassifier().fit(X, y)

    # Plot the decision boundary
    plt.subplot(2, 3, pairidx + 1)

    x_min, x_max = X[:, 0].min() - 1, X[:, 0].max() + 1
    y_min, y_max = X[:, 1].min() - 1, X[:, 1].max() + 1
    xx, yy = np.meshgrid(np.arange(x_min, x_max, plot_step),
                         np.arange(y_min, y_max, plot_step))
    plt.tight_layout(h_pad=0.5, w_pad=0.5, pad=2.5)

    # Classify every grid point and color the plane by the predicted class
    Z = clf.predict(np.c_[xx.ravel(), yy.ravel()])
    Z = Z.reshape(xx.shape)
    cs = plt.contourf(xx, yy, Z, cmap=plt.cm.RdYlBu)

    plt.xlabel(iris.feature_names[pair[0]])
    plt.ylabel(iris.feature_names[pair[1]])

    # Plot the training points, one color per class
    # (no cmap here: each class is drawn with a single fixed color)
    for i, color in zip(range(n_classes), plot_colors):
        idx = np.where(y == i)
        plt.scatter(X[idx, 0], X[idx, 1], c=color, label=iris.target_names[i],
                    edgecolor='black', s=15)

plt.suptitle("Decision surface of a decision tree using paired features")
plt.legend(loc='lower right', borderpad=0, handletextpad=0)
plt.axis("tight")
plt.show()
```
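The core of the plot above is the grid-prediction step: the tree classifies every point of a fine `meshgrid`, and `contourf` colors the grid by the predicted label. The sketch below isolates that step for a single feature pair, without plotting. The following are illustrative additions, not part of the original example: the choice of petal length and petal width (`pair = [2, 3]`), `random_state=0`, and the per-class area summary.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier

iris = load_iris()
pair = [2, 3]  # illustrative choice: petal length, petal width
X = iris.data[:, pair]
y = iris.target
clf = DecisionTreeClassifier(random_state=0).fit(X, y)

plot_step = 0.02
x_min, x_max = X[:, 0].min() - 1, X[:, 0].max() + 1
y_min, y_max = X[:, 1].min() - 1, X[:, 1].max() + 1

# Grid covering the feature range plus a margin of 1 on each side
xx, yy = np.meshgrid(np.arange(x_min, x_max, plot_step),
                     np.arange(y_min, y_max, plot_step))

# Flatten the grid into an (n_points, 2) matrix, predict, restore the grid shape
grid = np.c_[xx.ravel(), yy.ravel()]
Z = clf.predict(grid).reshape(xx.shape)

print(xx.shape, Z.shape)
# Fraction of the plotted area assigned to each class
for cls, name in enumerate(iris.target_names):
    print(f"{name}: {np.mean(Z == cls):.1%} of the grid")
```

A decision tree splits on one feature at a time, so the regions in `Z` are unions of axis-aligned rectangles. This is why the boundaries in the figure consist only of horizontal and vertical segments.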