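Once a model has been trained, we need to save its parameters (or the entire model) so that it can be reused later without retraining. PyTorch provides two ways to save a trained model quickly and conveniently.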

Steps

- Train the model

- Save the model

- Load the model

Training the model

We use a binary classification problem as the example and train a small neural network. The code is as follows:

    import torch
    import torch.nn.functional as F
    import matplotlib.pyplot as plt

    # Two Gaussian clusters of 100 points each:
    # class 0 is centered at (2, 2), class 1 at (-2, -2).
    n_data = torch.ones(100, 2)
    x0 = torch.normal(2 * n_data, 1)
    y0 = torch.zeros(100)
    x1 = torch.normal(-2 * n_data, 1)
    y1 = torch.ones(100)
    x = torch.cat((x0, x1), 0).type(torch.FloatTensor)
    y = torch.cat((y0, y1)).type(torch.LongTensor)


    class Net(torch.nn.Module):
        def __init__(self, input_size, hidden_size, output_size):
            super().__init__()
            self.linear1 = torch.nn.Linear(input_size, hidden_size)
            self.pred = torch.nn.Linear(hidden_size, output_size)

        def forward(self, x):
            output = F.relu(self.linear1(x))
            output = self.pred(output)
            return output


    def train(epochs):
        model = Net(2, 64, 2)
        optimi = torch.optim.SGD(model.parameters(), lr=0.001)
        loss_fn = torch.nn.CrossEntropyLoss()

        for epoch in range(epochs):
            out = model(x)
            loss = loss_fn(out, y)  # the prediction must come first, the target second

            optimi.zero_grad()
            loss.backward()
            optimi.step()

            if (epoch + 1) % 50 == 0:
                print("epoch [{}]/[{}], loss: {:.4f}".format(epoch + 1, epochs, loss.item()))

            if epoch == epochs - 1:
                draw(out, y)  # draw() is defined in the complete code at the end
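The comment on the loss call is about argument order: `torch.nn.CrossEntropyLoss` takes the raw scores first and the class indices second. The following is a minimal sketch of that convention, using made-up values rather than the tutorial's data, and comparing the result with the same quantity computed by hand.

```python
import torch

loss_fn = torch.nn.CrossEntropyLoss()

# Raw scores (logits) for 3 samples and 2 classes: shape (N, C), floating point.
# These numbers are illustrative only.
logits = torch.tensor([[2.0, 0.5], [0.1, 1.5], [1.0, 1.0]])

# Class indices for the same 3 samples: shape (N,), dtype int64 (LongTensor).
targets = torch.tensor([0, 1, 1])

# Prediction first, target second.
loss = loss_fn(logits, targets)

# The same value by hand: the mean negative log-probability of the true class.
log_probs = torch.log_softmax(logits, dim=1)
manual = -log_probs[torch.arange(len(targets)), targets].mean()

print(loss.item(), manual.item())
```

This is also why `y` is cast to `LongTensor` in the data setup: the target is a vector of class indices, not a one-hot matrix.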

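Saving the model

- Method 1: torch.save(model, "model_name.pkl"). This pickles the entire model object, so the structure and the parameters are saved together.

- Method 2: torch.save(model.state_dict(), "model_name.pkl"). This saves only the model's parameters.

The second method is recommended. It is reportedly faster, and it is also the approach the PyTorch documentation recommends, because a file holding a whole pickled model is tied to the exact class definition and project layout that existed when it was saved.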
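To make "only the parameters" concrete, here is a small sketch that prints what `state_dict()` holds for the `Net` class from this tutorial and then saves it. The temporary directory and the untrained model are assumptions made so the snippet runs on its own.

```python
import os
import tempfile

import torch
import torch.nn.functional as F


class Net(torch.nn.Module):
    def __init__(self, input_size, hidden_size, output_size):
        super().__init__()
        self.linear1 = torch.nn.Linear(input_size, hidden_size)
        self.pred = torch.nn.Linear(hidden_size, output_size)

    def forward(self, x):
        return self.pred(F.relu(self.linear1(x)))


model = Net(2, 64, 2)  # untrained stand-in for the trained model

# state_dict() is an ordered mapping from parameter name to tensor.
state = model.state_dict()
for name, tensor in state.items():
    print(name, tuple(tensor.shape))

# Save only that mapping; the Net class itself is not stored in the file.
with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "model_param.pkl")
    torch.save(state, path)
    size_bytes = os.path.getsize(path)

print("saved", size_bytes, "bytes")
```

The four entries are the weight and bias of `linear1` and of `pred`, which is all that is needed to restore the network once the class definition is available.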

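Loading the model

Method 1:

    print("Loading the whole model...")
    # The Net class must be defined or importable here, because the file
    # stores a pickled Net object.
    # Note: PyTorch 2.6 and later default to weights_only=True, so a whole
    # pickled model needs torch.load("model.pkl", weights_only=False).
    model = torch.load("model.pkl")
    pred = model(x)

Method 2:

    print("Loading the model parameters...")
    model2 = Net(2, 64, 2)  # rebuild the same architecture first
    model2.load_state_dict(torch.load("model_param.pkl"))
    pred2 = model2(x)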
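When the loaded model is only used for prediction, a slightly more defensive version of method 2 can be sketched as follows. The `map_location` and `weights_only` arguments, the call to `eval()`, and the random input are additions that are not part of the original code; `weights_only=True` assumes PyTorch 1.13 or newer.

```python
import os
import tempfile

import torch
import torch.nn.functional as F


class Net(torch.nn.Module):
    def __init__(self, input_size, hidden_size, output_size):
        super().__init__()
        self.linear1 = torch.nn.Linear(input_size, hidden_size)
        self.pred = torch.nn.Linear(hidden_size, output_size)

    def forward(self, x):
        return self.pred(F.relu(self.linear1(x)))


with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "model_param.pkl")
    torch.save(Net(2, 64, 2).state_dict(), path)  # stand-in for a trained model

    # map_location="cpu" lets a file saved on a GPU machine load on a CPU-only one.
    # weights_only=True restricts unpickling to tensors and plain containers.
    state = torch.load(path, map_location="cpu", weights_only=True)

model2 = Net(2, 64, 2)
result = model2.load_state_dict(state)  # reports missing or unexpected keys
model2.eval()  # switch layers such as dropout or batch norm to inference mode

x = torch.randn(5, 2)  # illustrative input with the same feature size
with torch.no_grad():  # no gradient bookkeeping is needed for prediction
    pred2 = model2(x)

print(result.missing_keys, result.unexpected_keys, pred2.shape)
```

For this particular network `eval()` changes nothing, since it has no dropout or batch normalization, but calling it is a harmless habit that matters as soon as such layers are added.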

示例

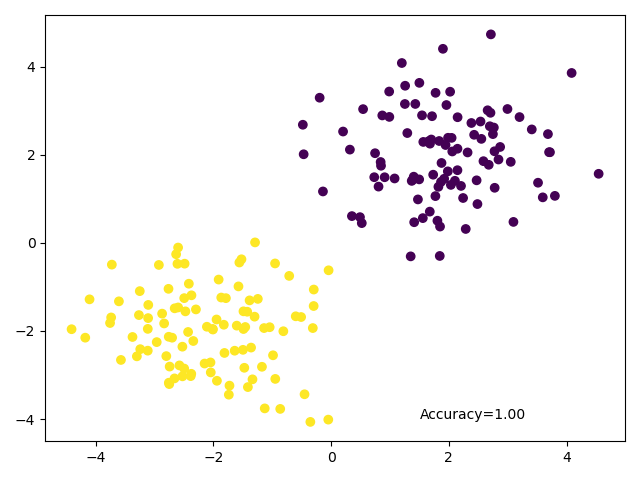

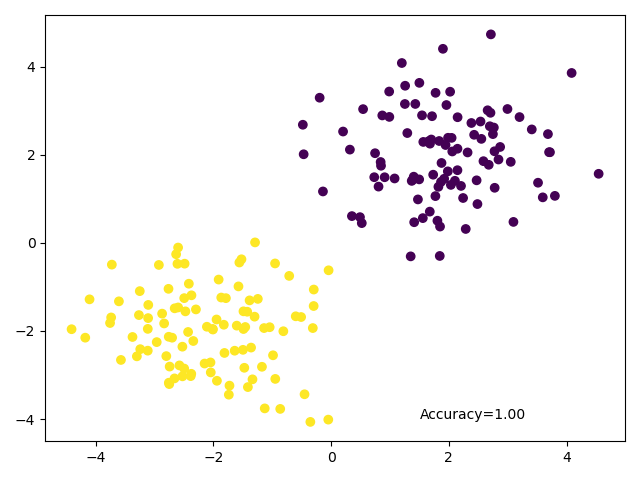

用上述方法加载的两个模型,在同一数据集上运行结果应该是一样的:

|

|

| 加载整个模型的绘图结果 | 加载模型参数的绘图结果 |
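The claim that both loading methods give the same result can also be checked numerically instead of by comparing plots. This is a sketch under a few assumptions: a `torch.nn.Sequential` with the same layer sizes stands in for `Net` so the snippet is self-contained, the input is random, the files go to a temporary directory, and the `weights_only` argument requires PyTorch 1.13 or newer.

```python
import os
import tempfile

import torch

torch.manual_seed(0)


def build_model():
    # Same layer sizes as Net(2, 64, 2), built from stock modules.
    return torch.nn.Sequential(
        torch.nn.Linear(2, 64),
        torch.nn.ReLU(),
        torch.nn.Linear(64, 2),
    )


model = build_model()
x = torch.randn(200, 2)  # illustrative input, not the tutorial's dataset

with tempfile.TemporaryDirectory() as tmp_dir:
    whole_path = os.path.join(tmp_dir, "model.pkl")
    param_path = os.path.join(tmp_dir, "model_param.pkl")

    torch.save(model, whole_path)               # method 1: whole model
    torch.save(model.state_dict(), param_path)  # method 2: parameters only

    # Method 1: unpickle the whole object (only do this for files you trust).
    loaded_whole = torch.load(whole_path, weights_only=False)

    # Method 2: rebuild the architecture, then fill in the parameters.
    loaded_params = build_model()
    loaded_params.load_state_dict(torch.load(param_path, weights_only=True))

with torch.no_grad():
    pred = loaded_whole(x)
    pred2 = loaded_params(x)

same = torch.equal(pred, pred2)
print("identical outputs:", same)
```

Both loaded models hold the same weights and run the same operations on the same input, so the outputs are expected to match exactly.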

Complete code

    import torch
    import torch.nn.functional as F
    import matplotlib.pyplot as plt

    # Two Gaussian clusters of 100 points each:
    # class 0 is centered at (2, 2), class 1 at (-2, -2).
    n_data = torch.ones(100, 2)
    x0 = torch.normal(2 * n_data, 1)
    y0 = torch.zeros(100)
    x1 = torch.normal(-2 * n_data, 1)
    y1 = torch.ones(100)
    x = torch.cat((x0, x1), 0).type(torch.FloatTensor)
    y = torch.cat((y0, y1)).type(torch.LongTensor)


    class Net(torch.nn.Module):
        def __init__(self, input_size, hidden_size, output_size):
            super().__init__()
            self.linear1 = torch.nn.Linear(input_size, hidden_size)
            self.pred = torch.nn.Linear(hidden_size, output_size)

        def forward(self, x):
            output = F.relu(self.linear1(x))
            output = self.pred(output)
            return output


    def draw(pred, y):
        # Predicted class = index of the largest log-probability in each row.
        pred = torch.max(F.log_softmax(pred, dim=1), 1)[1]
        pred_y = pred.data.numpy().squeeze()
        target_y = y.numpy()
        plt.scatter(x.data.numpy()[:, 0], x.data.numpy()[:, 1], c=pred_y)
        accuracy = sum(pred_y == target_y) / len(target_y)
        plt.text(1.5, -4, "Accuracy=%.2f" % accuracy)
        plt.show()


    def train(epochs):
        model = Net(2, 64, 2)
        optimi = torch.optim.SGD(model.parameters(), lr=0.001)
        loss_fn = torch.nn.CrossEntropyLoss()

        for epoch in range(epochs):
            out = model(x)
            loss = loss_fn(out, y)  # the prediction must come first, the target second

            optimi.zero_grad()
            loss.backward()
            optimi.step()

            if (epoch + 1) % 50 == 0:
                print("epoch [{}]/[{}], loss: {:.4f}".format(epoch + 1, epochs, loss.item()))

            if epoch == epochs - 1:
                draw(out, y)

        torch.save(model, "model.pkl")  # save the whole network
        torch.save(model.state_dict(), "model_param.pkl")  # save only the parameters
        print("Model saved successfully!")


    if __name__ == "__main__":
        # train(100)  # uncomment on the first run to train and save the model

        print("Loading the whole model...")
        # PyTorch 2.6 and later default to weights_only=True, so a whole
        # pickled model needs torch.load("model.pkl", weights_only=False).
        model = torch.load("model.pkl")
        pred = model(x)
        draw(pred, y)

        print("Loading the model parameters...")
        model2 = Net(2, 64, 2)
        model2.load_state_dict(torch.load("model_param.pkl"))
        pred2 = model2(x)
        draw(pred2, y)