Decision Trees

Decision Tree (Wine Dataset)

In [ ]:

from sklearn import tree # import the tree module

from sklearn.datasets import load_wine # import the wine dataset loader

from sklearn.model_selection import train_test_split # import the helper that splits data into training and test sets

In [3]:

wine = load_wine()

In [4]:

wine

Out[4]:

In [5]:

wine.data # the feature matrix we need

Out[5]:

In [6]:

wine.target # the dataset labels (three classes)

Out[6]:

In [7]:

import pandas as pd

pd.concat([pd.DataFrame(wine.data),pd.DataFrame(wine.target)],axis=1) # axis=1 concatenates column-wise (side by side), joining the features and the labels into one table

Out[7]:
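The concatenated table above has integer column headers, which makes it hard to read. A minimal sketch of an alternative, assuming you want the columns labeled with `wine.feature_names` and the labels in a column called `target` (that column name is an illustrative choice, not something from the dataset):

```python
import pandas as pd
from sklearn.datasets import load_wine

wine = load_wine()

# Build the feature table with the dataset's own feature names as headers.
df = pd.DataFrame(wine.data, columns=wine.feature_names)

# Append the labels as one extra column; "target" is an illustrative name.
df["target"] = wine.target

print(df.shape)
print(df.head())
```

This gives one row per wine sample, with the 13 features followed by the class label.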

In [8]:

wine.feature_names # feature names

Out[8]:

In [9]:

wine.target_names

Out[9]:

In [10]:

Xtrain, Xtest, Ytrain, Ytest = train_test_split(wine.data,wine.target,test_size=0.3) # split the dataset into training and test sets at a 7:3 ratio
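Because `train_test_split` shuffles the data, the call above produces a different split on every run. A hedged sketch of a reproducible variant: `random_state=0` is an arbitrary seed chosen for illustration, and `stratify` is an optional extra that keeps the class proportions similar in both parts.

```python
from sklearn.datasets import load_wine
from sklearn.model_selection import train_test_split

wine = load_wine()

# random_state fixes the shuffle; stratify preserves the class balance.
# Both arguments are additions for illustration, not part of the original call.
Xtrain, Xtest, Ytrain, Ytest = train_test_split(
    wine.data,
    wine.target,
    test_size=0.3,
    random_state=0,
    stratify=wine.target,
)

print(Xtrain.shape, Xtest.shape)
```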

In [11]:

Xtrain.shape # shape of the training set

Out[11]:

In [12]:

wine.data.shape # shape of the full dataset: 178 rows, 13 features

Out[12]:

In [13]:

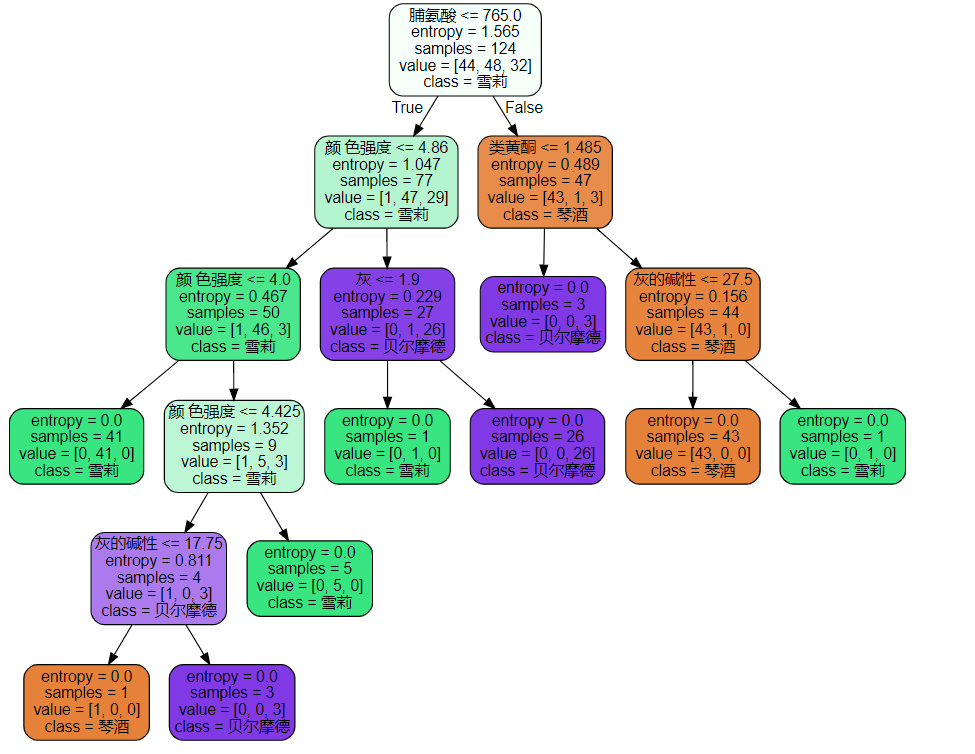

clf = tree.DecisionTreeClassifier(criterion="entropy") # instantiate the classifier

clf = clf.fit(Xtrain, Ytrain) # train the model

score = clf.score(Xtest, Ytest) # evaluate on the test set; returns the mean accuracy
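To make `criterion="entropy"` more concrete, here is a small standalone sketch of the Shannon entropy that this criterion measures at a node. The helper `entropy` is a hypothetical function written for illustration, not part of scikit-learn; the counts 59, 71, 48 are the class sizes of the wine dataset.

```python
import math

def entropy(class_counts):
    """Shannon entropy (in bits) of a node with the given per-class sample counts."""
    total = sum(class_counts)
    result = 0.0
    for count in class_counts:
        if count > 0:  # empty classes contribute nothing
            p = count / total
            result -= p * math.log2(p)
    return result

# Entropy of the whole wine dataset before any split.
root_entropy = entropy([59, 71, 48])
print(root_entropy)

# A pure node has zero entropy; an even two-class node has exactly one bit.
print(entropy([10, 0]), entropy([5, 5]))
```

A split is useful when the weighted entropy of the child nodes is lower than the entropy of the parent.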

In [14]:

score

Out[14]:
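A single test-set score depends on which samples happened to land in the test set, so it can vary noticeably between runs. One common way to get a steadier estimate is cross-validation. The sketch below assumes 5 folds and `random_state=0`, both arbitrary illustrative choices.

```python
from sklearn import tree
from sklearn.datasets import load_wine
from sklearn.model_selection import cross_val_score

wine = load_wine()

# random_state fixes the tree's internal tie-breaking; the value 0 is arbitrary.
clf = tree.DecisionTreeClassifier(criterion="entropy", random_state=0)

# Fit and score the tree on 5 different train/validation partitions.
scores = cross_val_score(clf, wine.data, wine.target, cv=5)

print(scores)
print(scores.mean())
```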

In [17]:

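feature_name = ['alcohol','malic acid','ash','alkalinity of ash','magnesium','total phenols','flavonoids','non-flavonoid phenols','proanthocyanins','color intensity','hue','OD280/OD315 of diluted wines','proline']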

import graphviz

dot_data = tree.export_graphviz(clf

,feature_names = feature_name

,class_names=["Gin","Sherry","Vermouth"]

,filled=True

,rounded=True

,out_file=None

)

graph = graphviz.Source(dot_data)

graph

Out[17]:
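Besides drawing the tree, it can help to see which features the fitted tree actually relied on. A minimal sketch using the classifier's `feature_importances_` attribute; it refits the model so that it runs on its own, and `random_state=0` is an arbitrary seed added for repeatability.

```python
from sklearn import tree
from sklearn.datasets import load_wine
from sklearn.model_selection import train_test_split

wine = load_wine()
Xtrain, Xtest, Ytrain, Ytest = train_test_split(
    wine.data, wine.target, test_size=0.3, random_state=0
)

clf = tree.DecisionTreeClassifier(criterion="entropy", random_state=0)
clf = clf.fit(Xtrain, Ytrain)

# Pair each feature name with its importance and sort from most to least important.
ranked = sorted(
    zip(wine.feature_names, clf.feature_importances_),
    key=lambda pair: pair[1],
    reverse=True,
)

for name, importance in ranked:
    print(f"{name}: {importance:.3f}")
```

Features with an importance of 0 were never used for a split in this particular tree.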