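Deleting Data from a Large Table in Small Batches

When you delete data from a large table, always delete the rows in small batches. This has two benefits:

1: Each transaction deletes only a few rows, which avoids row locks escalating to a table lock and blocking normal business operations.

2: After each transaction commits, the log space it used becomes eligible for reuse.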
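Whether log space is actually reused after a commit depends on the recovery model: under SIMPLE it is released after a checkpoint, while under FULL or BULK_LOGGED it is released only after a log backup. The queries below are a minimal sketch for checking this on the test database. The database name AdventureWorks is taken from the test setup in this post; adjust it for your own environment.

```sql
-- Recovery model, and the current reason (if any) that log space cannot be reused.
SELECT name, recovery_model_desc, log_reuse_wait_desc
FROM sys.databases
WHERE name = 'AdventureWorks';

-- Log file size and percentage in use for every database on the instance.
DBCC SQLPERF(LOGSPACE);
```

Running these before and after a batched delete shows whether the log keeps growing or its space is being recycled.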

Below are two ways to delete rows in small batches, followed by a test of how each performs.

First, populate the test tables:

    USE AdventureWorks
    GO

    -- Make two copies of the source table, one per test
    SELECT * INTO TransactionHistory_temp FROM Production.TransactionHistory WITH (NOLOCK)
    SELECT * INTO TransactionHistory_top FROM Production.TransactionHistory WITH (NOLOCK)

    -- Rows that the tests will delete
    SELECT * FROM TransactionHistory_temp WHERE modifiedDate < '2004-04-02 00:00:00.000'
    SELECT * FROM TransactionHistory_top WHERE modifiedDate < '2004-04-02 00:00:00.000'

    -- create index
    CREATE CLUSTERED INDEX ix_TransactionID ON TransactionHistory_temp(TransactionID)
    CREATE CLUSTERED INDEX ix_TransactionID ON TransactionHistory_top(TransactionID)
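Before timing anything, it can help to confirm that both copies hold the same number of rows to delete, so the two tests do the same amount of work. This is a small sketch that reuses the table names and cutoff date from the setup script; the column aliases are illustrative.

```sql
-- Count the rows each test will delete; the two numbers should match.
SELECT 'TransactionHistory_top' AS table_name, COUNT(*) AS rows_to_delete
FROM TransactionHistory_top
WHERE ModifiedDate < '2004-04-02T00:00:00'
UNION ALL
SELECT 'TransactionHistory_temp', COUNT(*)
FROM TransactionHistory_temp
WHERE ModifiedDate < '2004-04-02T00:00:00';
```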

Solution 1: delete rows using TOP

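    -- Clear the plan cache and the buffer pool so the test starts cold
    DBCC FREEPROCCACHE
    CHECKPOINT
    DBCC DROPCLEANBUFFERS

    DECLARE @start_top datetime = getdate();
    WHILE (1 = 1)
    BEGIN
        DELETE TOP (100) FROM TransactionHistory_top
        WHERE modifiedDate < '2004-04-02 00:00:00.000'

        -- A batch smaller than 100 means no matching rows remain
        IF (@@rowcount <> 100)
        BEGIN
            SELECT datediff(SECOND, @start_top, getdate()) AS "Elapsed time using [top]"
            RETURN;
        END
    END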

Elapsed time (in seconds):

Solution 2: delete rows using a temporary table:

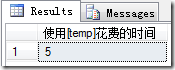

    -- Clear the plan cache and the buffer pool so the test starts cold
    DBCC FREEPROCCACHE
    CHECKPOINT
    DBCC DROPCLEANBUFFERS

    DECLARE @start_temp datetime = getdate();

    -- Collect the keys of all rows to delete
    CREATE TABLE #transaction (transactionid INT PRIMARY KEY)
    INSERT INTO #transaction
    SELECT transactionid FROM TransactionHistory_temp WITH (NOLOCK)
    WHERE modifiedDate < '2004-04-02 00:00:00.000'

    -- Holds the keys of the current batch
    DECLARE @temp TABLE (transactionid INT)

    WHILE EXISTS (SELECT TOP 1 1 FROM #transaction)
    BEGIN
        DELETE FROM @temp;
        INSERT INTO @temp SELECT TOP 100 transactionid FROM #transaction

        -- Delete the batch from the target table, then from the work list
        DELETE FROM TransactionHistory_temp
        WHERE transactionid IN (SELECT transactionid FROM @temp)

        DELETE FROM #transaction
        WHERE transactionid IN (SELECT transactionid FROM @temp)
    END

    SELECT datediff(second, @start_temp, getdate()) AS "Elapsed time using [temp]"

Elapsed time (in seconds):

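As you can see, the temporary-table approach is more than 5 times faster, but it takes more hand-written code, while the first solution is clear and simple. Large-table deletes are usually run by a scheduled job after midnight, so if elapsed time is not the main concern I prefer the first solution; if deletion speed matters, choose the second.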
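If the first solution is run from a scheduled job, the batch size and cutoff date are easier to tune as variables. The following is only a sketch of that idea, not the version that was timed above: @batch_size, @cutoff, @rows and the one-second pause are illustrative assumptions, and the table name comes from the test setup.

```sql
-- Sketch only: the variable names, values and delay are illustrative assumptions.
DECLARE @batch_size int = 100;
DECLARE @cutoff datetime = '2004-04-02T00:00:00';
DECLARE @rows int = 1;

WHILE (@rows > 0)
BEGIN
    -- Each DELETE runs as its own autocommit transaction.
    DELETE TOP (@batch_size)
    FROM TransactionHistory_top
    WHERE ModifiedDate < @cutoff;

    SET @rows = @@ROWCOUNT;

    -- Optional pause so other sessions can get their locks between batches.
    IF (@rows > 0)
        WAITFOR DELAY '00:00:01';
END
```

The loop ends after the first batch that deletes no rows. A larger batch size reduces the number of round trips, but a single statement that takes several thousand locks may trigger lock escalation, which is the problem small batches are meant to avoid.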