20-Netty TCP 粘包和拆包及解决方案

TCP粘包和拆包的基本介绍

- TCP是面向连接的, 面向流的, 提供可靠性服务, 收发两端(客户端和服务器端) 都有一一成对的Socket,因此发送端为了将多个发给接收端的包, 更有效的发给对方, 使用了优化算法(Nagle算法),将多次间隔较小且数据量小的数据,合并成一个大的数据块,然后进行封包, 这样做虽然提高了效率,但是接收端就难于分辨出完整的数据包了,因为面向流的通信是无消息保护边界的

- 由于TCP无消息保护边界, 需要在接收端处理消息边界问题, 也就是我们所说的粘包,拆包问题,看一张图

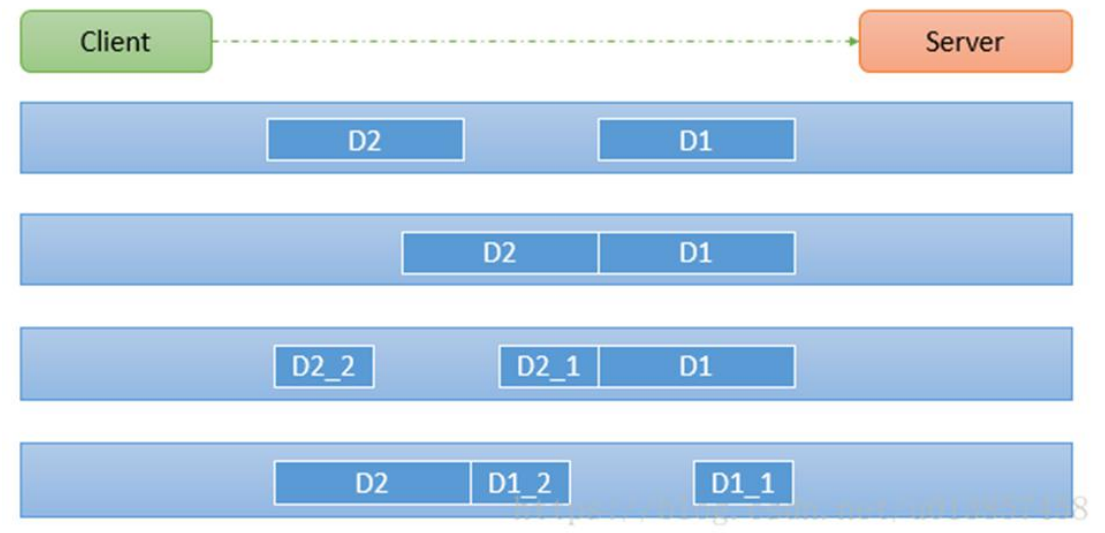

- 示意图TCP粘包,拆包图解

对图的说明

假设客户端分别发送了两个数据包D1和D2给服务端, 由于服务端一次读取到字节数是不确定的,故有可能存在以下四种情况

- 服务端分别两次读取到了两个独立的数据包, 分别是D1 和 D2, 没有粘包和拆包

- 服务端一次接收到了两个数据包D1和D2粘在了一起,称之为TCP粘包

- 服务端分两次读取到了数据包, 第一次读取到了完整的D1包和D2包的部分内容, 第二次读取到了D2包的剩余部分, 称之为TCP拆包

- 服务器分两次读取到了数据包, 第一次读取到了D1包的部分内容D1_1, 第二次读取到了D1包的剩余部分D1_2, 和完整的D2包

TCP粘包和拆包现象实例

在编写Netty程序时, 如果没有做处理,就会发生粘包和拆包问题

看一个具体的实例

NettyServer

package com.dance.netty.netty.tcp; import io.netty.bootstrap.ServerBootstrap; import io.netty.buffer.ByteBuf; import io.netty.buffer.Unpooled; import io.netty.channel.*; import io.netty.channel.nio.NioEventLoopGroup; import io.netty.channel.socket.SocketChannel; import io.netty.channel.socket.nio.NioServerSocketChannel; import java.nio.charset.StandardCharsets; import java.util.UUID; public class NettyServer { public static void main(String[] args) { NioEventLoopGroup bossGroup = new NioEventLoopGroup(1); NioEventLoopGroup workerGroup = new NioEventLoopGroup(); try { ServerBootstrap serverBootstrap = new ServerBootstrap(); serverBootstrap.group(bossGroup,workerGroup) .channel(NioServerSocketChannel.class) .option(ChannelOption.SO_BACKLOG, 128) .childOption(ChannelOption.SO_KEEPALIVE, true) .childHandler(new ChannelInitializer<SocketChannel>() { @Override protected void initChannel(SocketChannel ch) throws Exception { ChannelPipeline pipeline = ch.pipeline(); pipeline.addLast(new NettyServerHandler()); } }); ChannelFuture sync = serverBootstrap.bind("127.0.0.1", 7000).sync(); System.out.println("server is ready ......"); sync.channel().closeFuture().sync(); } catch (Exception e) { e.printStackTrace(); } finally { bossGroup.shutdownGracefully(); workerGroup.shutdownGracefully(); } } static class NettyServerHandler extends SimpleChannelInboundHandler<ByteBuf> { private int count = 0; @Override protected void channelRead0(ChannelHandlerContext ctx, ByteBuf msg) throws Exception { byte[] bytes = new byte[msg.readableBytes()]; msg.readBytes(bytes); count++; System.out.println("服务器第"+count+"次接收到来自客户端的数据:" + new String(bytes, StandardCharsets.UTF_8)); // 服务器回送数据给客户端 回送随机的UUID给客户端 ctx.writeAndFlush(Unpooled.copiedBuffer(UUID.randomUUID().toString(),StandardCharsets.UTF_8)); } @Override public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception { ctx.close(); cause.printStackTrace(); } } }

NettyClient

package com.dance.netty.netty.tcp; import io.netty.bootstrap.Bootstrap; import io.netty.buffer.ByteBuf; import io.netty.buffer.Unpooled; import io.netty.channel.*; import io.netty.channel.nio.NioEventLoopGroup; import io.netty.channel.socket.SocketChannel; import io.netty.channel.socket.nio.NioSocketChannel; import java.nio.charset.StandardCharsets; public class NettyClient { public static void main(String[] args) { NioEventLoopGroup eventExecutors = new NioEventLoopGroup(); try { Bootstrap bootstrap = new Bootstrap(); bootstrap.group(eventExecutors) .channel(NioSocketChannel.class) .handler(new ChannelInitializer<SocketChannel>() { @Override protected void initChannel(SocketChannel ch) throws Exception { ChannelPipeline pipeline = ch.pipeline(); pipeline.addLast(new NettyClientHandler()); } }); ChannelFuture sync = bootstrap.connect("127.0.0.1", 7000).sync(); sync.channel().closeFuture().sync(); } catch (Exception e) { e.printStackTrace(); } finally { eventExecutors.shutdownGracefully(); } } static class NettyClientHandler extends SimpleChannelInboundHandler<ByteBuf> { private int count = 0; @Override public void channelActive(ChannelHandlerContext ctx) throws Exception { // 连续发送10条数据 for (int i = 0; i < 10; i++) { ctx.writeAndFlush(Unpooled.copiedBuffer("hello,server!" + i, StandardCharsets.UTF_8)); } } @Override protected void channelRead0(ChannelHandlerContext ctx, ByteBuf msg) throws Exception { byte[] bytes = new byte[msg.readableBytes()]; msg.readBytes(bytes); // 接收服务器的返回 count++; System.out.println("客户端第"+count+"次接收服务端的回送:" + new String(bytes, StandardCharsets.UTF_8)); } @Override public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception { ctx.close(); cause.printStackTrace(); } } }

执行结果

Server

log4j:WARN No appenders could be found for logger (io.netty.util.internal.logging.InternalLoggerFactory). log4j:WARN Please initialize the log4j system properly. log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info. server is ready ...... 服务器第1次接收到来自客户端的数据:hello,server!0hello,server!1hello,server!2hello,server!3hello,server!4hello,server!5hello,server!6hello,server!7hello,server!8hello,server!9 服务器第1次接收到来自客户端的数据:hello,server!0 服务器第2次接收到来自客户端的数据:hello,server!1 服务器第3次接收到来自客户端的数据:hello,server!2hello,server!3hello,server!4 服务器第4次接收到来自客户端的数据:hello,server!5hello,server!6 服务器第5次接收到来自客户端的数据:hello,server!7hello,server!8hello,server!9

Client1

客户端第1次接收服务端的回送:84653e99-0e7f-431d-a897-c215af959a3b

Client2

客户端第1次接收服务端的回送:6f3b0e79-2f40-4066-bb6b-80f988ecec116b6bbd94-b345-46d6-8d36-a114534331a850628e04-ece1-4f58-b684-d30189f6cf26b2139027-6bda-4d40-9238-9fc0e59bc7a64b568ffe-f616-4f48-8f1c-05ecf3e817ee

分析:

服务器启动后到server is ready ......

第一个客户端启动后 TCP将10次发送直接封包成一次直接发送,所以导致了服务器一次就收到了所有的数据,产生了TCP粘包,拆包的问题

第二客户端启动后 TCP将10次发送分别封装成了5次请求,产生粘包,拆包问题

TCP粘包和拆包解决方案

- 使用自定义协议 + 编解码器来解决

- 关键就是要解决 服务器每次读取数据长度的问题, 这个问题解决, 就不会出现服务器多读或少读数据的问题,从而避免TCP粘包和拆包

TCP粘包, 拆包解决方案实现

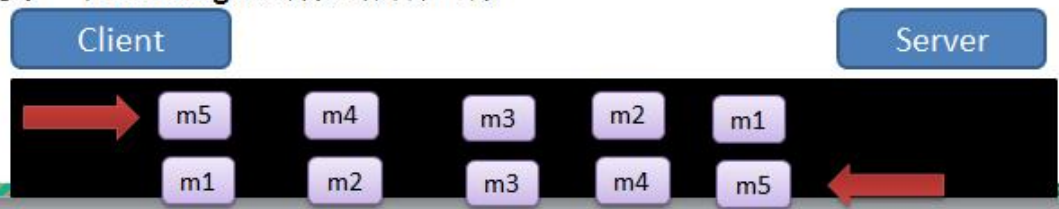

- 要求客户端发送5个Message对象, 客户端每次发送一个Message对象

- 服务器端每次接收一个Message, 分5次进行解码, 每读到一个Message, 会回复一个Message对象给客户端

新建协议MessageProtocol

package com.dance.netty.netty.protocoltcp; /** * 消息协议 */ public class MessageProtocol { private int length; private byte[] content; public MessageProtocol() { } public MessageProtocol(int length, byte[] content) { this.length = length; this.content = content; } public int getLength() { return length; } public void setLength(int length) { this.length = length; } public byte[] getContent() { return content; } public void setContent(byte[] content) { this.content = content; } }

新建编码器

package com.dance.netty.netty.protocoltcp; import io.netty.buffer.ByteBuf; import io.netty.channel.ChannelHandlerContext; import io.netty.handler.codec.MessageToByteEncoder; /** * 自定义协议编码器 */ public class MyMessageProtocolEncoder extends MessageToByteEncoder<MessageProtocol> { @Override protected void encode(ChannelHandlerContext ctx, MessageProtocol msg, ByteBuf out) throws Exception { // System.out.println("自定义协议---->开始编码"); // 开始发送数据 out.writeInt(msg.getLength()); // 优先发送长度,定义边界 out.writeBytes(msg.getContent()); // System.out.println("自定义协议---->编码完成"); } }

新建解码器

package com.dance.netty.netty.protocoltcp; import io.netty.buffer.ByteBuf; import io.netty.channel.ChannelHandlerContext; import io.netty.handler.codec.ByteToMessageDecoder; import java.util.List; public class MyMessageProtocolDecoder extends ByteToMessageDecoder { @Override protected void decode(ChannelHandlerContext ctx, ByteBuf in, List<Object> out) throws Exception { // System.out.println("自定义协议---->开始解码"); // 获取定义的边界长度 int length = in.readInt(); if(in.readableBytes() >= length){ // 根据长度读取数据 byte[] bytes = new byte[length]; in.readBytes(bytes); // 反构造成MessageProtocol MessageProtocol messageProtocol = new MessageProtocol(length, bytes); out.add(messageProtocol); // System.out.println("自定义协议---->解码完成"); }else{ // 内容长度不够 } } }

新建服务器端

package com.dance.netty.netty.protocoltcp; import io.netty.bootstrap.ServerBootstrap; import io.netty.buffer.ByteBuf; import io.netty.buffer.Unpooled; import io.netty.channel.*; import io.netty.channel.nio.NioEventLoopGroup; import io.netty.channel.socket.SocketChannel; import io.netty.channel.socket.nio.NioServerSocketChannel; import java.nio.charset.StandardCharsets; import java.util.UUID; public class NettyServer { public static void main(String[] args) { NioEventLoopGroup bossGroup = new NioEventLoopGroup(1); NioEventLoopGroup workerGroup = new NioEventLoopGroup(); try { ServerBootstrap serverBootstrap = new ServerBootstrap(); serverBootstrap.group(bossGroup,workerGroup) .channel(NioServerSocketChannel.class) .option(ChannelOption.SO_BACKLOG, 128) .childOption(ChannelOption.SO_KEEPALIVE, true) .childHandler(new ChannelInitializer<SocketChannel>() { @Override protected void initChannel(SocketChannel ch) throws Exception { ChannelPipeline pipeline = ch.pipeline(); // 加入自定义协议编解码器 pipeline.addLast(new MyMessageProtocolDecoder()); pipeline.addLast(new MyMessageProtocolEncoder()); pipeline.addLast(new NettyServerHandler()); } }); ChannelFuture sync = serverBootstrap.bind("127.0.0.1", 7000).sync(); System.out.println("server is ready ......"); sync.channel().closeFuture().sync(); } catch (Exception e) { e.printStackTrace(); } finally { bossGroup.shutdownGracefully(); workerGroup.shutdownGracefully(); } } static class NettyServerHandler extends SimpleChannelInboundHandler<MessageProtocol> { private int count = 0; @Override protected void channelRead0(ChannelHandlerContext ctx, MessageProtocol msg) throws Exception { byte[] bytes = msg.getContent(); count++; System.out.println("服务器第"+count+"次接收到来自客户端的数据:" + new String(bytes, StandardCharsets.UTF_8)); // 服务器回送数据给客户端 回送随机的UUID给客户端 byte[] s = UUID.randomUUID().toString().getBytes(StandardCharsets.UTF_8); ctx.writeAndFlush(new MessageProtocol(s.length,s)); } @Override public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception { ctx.close(); cause.printStackTrace(); } } }

新建客户端

package com.dance.netty.netty.protocoltcp; import io.netty.bootstrap.Bootstrap; import io.netty.buffer.ByteBuf; import io.netty.buffer.Unpooled; import io.netty.channel.*; import io.netty.channel.nio.NioEventLoopGroup; import io.netty.channel.socket.SocketChannel; import io.netty.channel.socket.nio.NioSocketChannel; import java.nio.charset.StandardCharsets; public class NettyClient { public static void main(String[] args) { NioEventLoopGroup eventExecutors = new NioEventLoopGroup(); try { Bootstrap bootstrap = new Bootstrap(); bootstrap.group(eventExecutors) .channel(NioSocketChannel.class) .handler(new ChannelInitializer<SocketChannel>() { @Override protected void initChannel(SocketChannel ch) throws Exception { ChannelPipeline pipeline = ch.pipeline(); // 加入自定义分割符号 // ByteBuf delimiter = Unpooled.copiedBuffer("\r\n".getBytes()); // pipeline.addFirst(new DelimiterBasedFrameDecoder(8192, delimiter)); // 添加自定义协议编解码器 pipeline.addLast(new MyMessageProtocolDecoder()); pipeline.addLast(new MyMessageProtocolEncoder()); pipeline.addLast(new NettyClientHandler()); } }); ChannelFuture sync = bootstrap.connect("127.0.0.1", 7000).sync(); sync.channel().closeFuture().sync(); } catch (Exception e) { e.printStackTrace(); } finally { eventExecutors.shutdownGracefully(); } } static class NettyClientHandler extends SimpleChannelInboundHandler<MessageProtocol> { private int count = 0; @Override public void channelActive(ChannelHandlerContext ctx) throws Exception { // 连续发送10条数据 for (int i = 0; i < 10; i++) { String msg = "今天天气冷, 打火锅" + i; byte[] bytes = msg.getBytes(StandardCharsets.UTF_8); // 使用自定义协议 MessageProtocol messageProtocol = new MessageProtocol(bytes.length, bytes); ctx.writeAndFlush(messageProtocol); } } @Override protected void channelRead0(ChannelHandlerContext ctx, MessageProtocol msg) throws Exception { byte[] bytes = msg.getContent(); // 接收服务器的返回 count++; System.out.println("客户端第"+count+"次接收服务端的回送:" + new String(bytes, StandardCharsets.UTF_8)); } @Override public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception { ctx.close(); cause.printStackTrace(); } } }

测试

发送10次

服务器端

log4j:WARN No appenders could be found for logger (io.netty.util.internal.logging.InternalLoggerFactory). log4j:WARN Please initialize the log4j system properly. log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info. server is ready ...... 服务器第1次接收到来自客户端的数据:今天天气冷, 打火锅0 ...... 服务器第10次接收到来自客户端的数据:今天天气冷, 打火锅9

客户端

log4j:WARN No appenders could be found for logger (io.netty.util.internal.logging.InternalLoggerFactory). log4j:WARN Please initialize the log4j system properly. log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info. 客户端第1次接收服务端的回送:a6b69f1c-daba-435a-802a-c19a6350ca94 ...... 客户端第10次接收服务端的回送:5af5c297-8668-48aa-b8c4-35656142f591

ok,没有问题, 但是真的没有问题吗?答案是有问题

FAQ

发送1000次

修改客户端发送消息数量

@Override public void channelActive(ChannelHandlerContext ctx) throws Exception { // 连续发送10条数据 for (int i = 0; i < 1000; i++) { ...... } }

重新测试

服务器端

log4j:WARN No appenders could be found for logger (io.netty.util.internal.logging.InternalLoggerFactory). log4j:WARN Please initialize the log4j system properly. log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info. server is ready ...... 服务器第1次接收到来自客户端的数据:今天天气冷, 打火锅0 ...... 服务器第31次接收到来自客户端的数据:今天天气冷, 打火锅30 服务器第32次接收到来自客户端的数据:今天天气冷, 打火锅31 io.netty.handler.codec.DecoderException: java.lang.NegativeArraySizeException at io.netty.handler.codec.ByteToMessageDecoder.callDecode(ByteToMessageDecoder.java:459) at io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:265) at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362) at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348) at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340) at io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1412) at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362) at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348) at io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:943) at io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:141) at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:645) at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:580) at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:497) at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:459) at io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:886) at io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30) at java.lang.Thread.run(Thread.java:748) Caused by: java.lang.NegativeArraySizeException at com.dance.netty.netty.protocoltcp.MyMessageProtocolDecoder.decode(MyMessageProtocolDecoder.java:17) at io.netty.handler.codec.ByteToMessageDecoder.decodeRemovalReentryProtection(ByteToMessageDecoder.java:489) at io.netty.handler.codec.ByteToMessageDecoder.callDecode(ByteToMessageDecoder.java:428) ... 16 more io.netty.handler.codec.DecoderException: java.lang.IndexOutOfBoundsException: readerIndex(1022) + length(4) exceeds writerIndex(1024): PooledUnsafeDirectByteBuf(ridx: 1022, widx: 1024, cap: 1024) at io.netty.handler.codec.ByteToMessageDecoder.callDecode(ByteToMessageDecoder.java:459) at io.netty.handler.codec.ByteToMessageDecoder.channelInputClosed(ByteToMessageDecoder.java:392) at io.netty.handler.codec.ByteToMessageDecoder.channelInputClosed(ByteToMessageDecoder.java:359) at io.netty.handler.codec.ByteToMessageDecoder.channelInactive(ByteToMessageDecoder.java:342) at io.netty.channel.AbstractChannelHandlerContext.invokeChannelInactive(AbstractChannelHandlerContext.java:245) at io.netty.channel.AbstractChannelHandlerContext.invokeChannelInactive(AbstractChannelHandlerContext.java:231) at io.netty.channel.AbstractChannelHandlerContext.fireChannelInactive(AbstractChannelHandlerContext.java:224) at io.netty.channel.DefaultChannelPipeline$HeadContext.channelInactive(DefaultChannelPipeline.java:1407) at io.netty.channel.AbstractChannelHandlerContext.invokeChannelInactive(AbstractChannelHandlerContext.java:245) at io.netty.channel.AbstractChannelHandlerContext.invokeChannelInactive(AbstractChannelHandlerContext.java:231) at io.netty.channel.DefaultChannelPipeline.fireChannelInactive(DefaultChannelPipeline.java:925) at io.netty.channel.AbstractChannel$AbstractUnsafe$8.run(AbstractChannel.java:822) at io.netty.util.concurrent.AbstractEventExecutor.safeExecute(AbstractEventExecutor.java:163) at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:404) at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:463) at io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:886) at io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30) at java.lang.Thread.run(Thread.java:748) Caused by: java.lang.IndexOutOfBoundsException: readerIndex(1022) + length(4) exceeds writerIndex(1024): PooledUnsafeDirectByteBuf(ridx: 1022, widx: 1024, cap: 1024) at io.netty.buffer.AbstractByteBuf.checkReadableBytes0(AbstractByteBuf.java:1403) at io.netty.buffer.AbstractByteBuf.readInt(AbstractByteBuf.java:786) at com.dance.netty.netty.protocoltcp.MyMessageProtocolDecoder.decode(MyMessageProtocolDecoder.java:14) at io.netty.handler.codec.ByteToMessageDecoder.decodeRemovalReentryProtection(ByteToMessageDecoder.java:489) at io.netty.handler.codec.ByteToMessageDecoder.callDecode(ByteToMessageDecoder.java:428) ... 17 more

what ? 直接报错了, 数组下标越界, 读索引1022 + 长度4 > 写缩影1024了

这个是什么问题呢 ? 我看网上关于这个BUG的解决方案很少,基本没有, 好多都是贴问题的, 我翻了将近1个小时,才找到一个大佬写的一篇文章解决了, 感谢大佬

博客地址:

https://blog.csdn.net/u011035407/article/details/80454511

问题描述:

这样在刚开始的工作中数据包传输没有问题,不过数据包的大小超过512b的时候就会抛出异常了。

解决方案

配合解码器DelimiterBasedFrameDecoder一起使用,在数据包的末尾使用换行符\n表示本次数据包已经结束,当DelimiterBasedFrameDecoder把数据切割之后,再使用ByteToMessageDecoder实现decode方法把数据流转换为Message对象。

我们在ChannelPipeline加入DelimiterBasedFrameDecoder解码器

客户端和服务器端都加

//使用\n作为分隔符 pipeline.addLast(new LoggingHandler(LogLevel.INFO)); pipeline.addLast(new DelimiterBasedFrameDecoder(8192, Delimiters.lineDelimiter()));

在MessageToByteEncoder的实现方法encode()增加out.writeBytes(new byte[]{'\n'});

//在写出字节流的末尾增加\n表示数据结束 out.writeBytes(new byte[]{'\n'});

这时候就可以愉快的继续处理数据了。

等我还没有高兴半天的时候,问题又来了。还是一样的问题

等等等,,,怎么又报错了,不是已经加了黏包处理了吗??,解决问题把,首先看解析的数据包结构

+-------------------------------------------------+

| 0 1 2 3 4 5 6 7 8 9 a b c d e f |

+--------+-------------------------------------------------+----------------+

|00000000| 01 01 01 00 00 00 06 00 00 01 0a 7b 22 69 64 22 |...........{"id"|

|00000010| 3a 33 2c 22 75 73 65 72 6e 61 6d 65 22 3a 22 31 |:3,"username":"1|

|00000020| 38 35 30 30 33 34 30 31 36 39 22 2c 22 6e 69 63 |8500340169","nic|

|00000030| 6b 6e 61 6d 65 22 3a 22 e4 bb 96 e5 9b 9b e5 a4 |kname":"........|

|00000040| a7 e7 88 b7 22 2c 22 72 6f 6f 6d 49 64 22 3a 31 |....","roomId":1|

|00000050| 35 32 37 32 33 38 35 36 39 34 37 34 2c 22 74 65 |527238569474,"te|

|00000060| 61 6d 4e 61 6d 65 22 3a 22 e4 bf 84 e7 bd 97 e6 |amName":".......|

|00000070| 96 af 22 2c 22 75 6e 69 74 73 22 3a 7b 22 75 6e |..","units":{"un|

|00000080| 69 74 31 22 3a 7b 22 78 22 3a 31 30 2e 30 2c 22 |it1":{"x":10.0,"|

|00000090| 79 22 3a 31 30 2e 30 7d 2c 22 75 6e 69 74 32 22 |y":10.0},"unit2"|

|000000a0| 3a 7b 22 78 22 3a 31 30 2e 30 2c 22 79 22 3a 31 |:{"x":10.0,"y":1|

|000000b0| 30 2e 30 7d 2c 22 75 6e 69 74 33 22 3a 7b 22 78 |0.0},"unit3":{"x|

|000000c0| 22 3a 31 30 2e 30 2c 22 79 22 3a 31 30 2e 30 7d |":10.0,"y":10.0}|

|000000d0| 2c 22 75 6e 69 74 34 22 3a 7b 22 78 22 3a 31 30 |,"unit4":{"x":10|

|000000e0| 2e 30 2c 22 79 22 3a 31 30 2e 30 7d 2c 22 75 6e |.0,"y":10.0},"un|

|000000f0| 69 74 35 22 3a 7b 22 78 22 3a 31 30 2e 30 2c 22 |it5":{"x":10.0,"|

|00000100| 79 22 3a 31 30 2e 30 7d 7d 2c 22 73 74 61 74 75 |y":10.0}},"statu|

|00000110| 73 22 3a 31 7d 0a |s":1}. |

+--------+-------------------------------------------------+----------------+

接收到的数据是完整的没错,但是还是报错了,而且数据结尾的字节的确是0a,转化成字符就是\n没有问题啊。

在ByteToMessageDecoder的decode方法里打印ByteBuf buf的长度之后,问题找到了 长度 : 10

这就是说在进入到ByteToMessageDecoder这个解码器的时候,数据包已经只剩下10个长度了,那么长的数据被上个解码器DelimiterBasedFrameDecoder隔空劈开了- -。问题出现在哪呢,看上面那块字节流的字节,找到第11个字节,是0a。。。。因为不是标准的json格式,最前面使用了3个字节 加上2个int长度的属性,所以 数据包头应该是11个字节长。

而DelimiterBasedFrameDecoder在读到第11个字节的时候读成了\n,自然而然的就认为这个数据包已经结束了,而数据进入到ByteToMessageDecoder的时候就会因为规定的body长度不等于length长度而出现问题。

思来想去 不实用\n 这样的单字节作为换行符,很容易在数据流中遇到,转而使用\r\n俩字节来处理,而这俩字节出现在前面两个int长度中的几率应该很小。

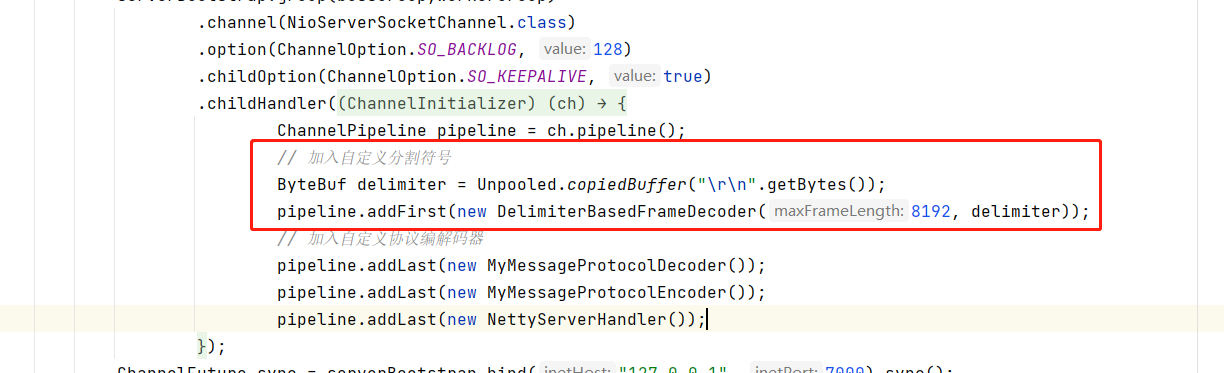

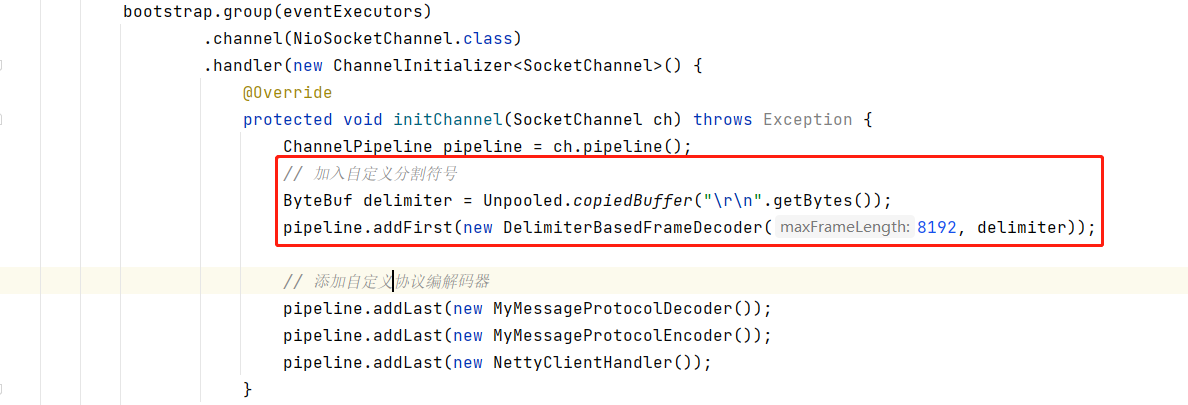

最终解决

在客户端和服务器端的pipeline中添加 以 "\r\n" 定义为边界的符号来标识数据包结束

//这里使用自定义分隔符 ByteBuf delimiter = Unpooled.copiedBuffer("\r\n".getBytes()); pipeline.addFirst(new DelimiterBasedFrameDecoder(8192, delimiter));

Server端

Client端

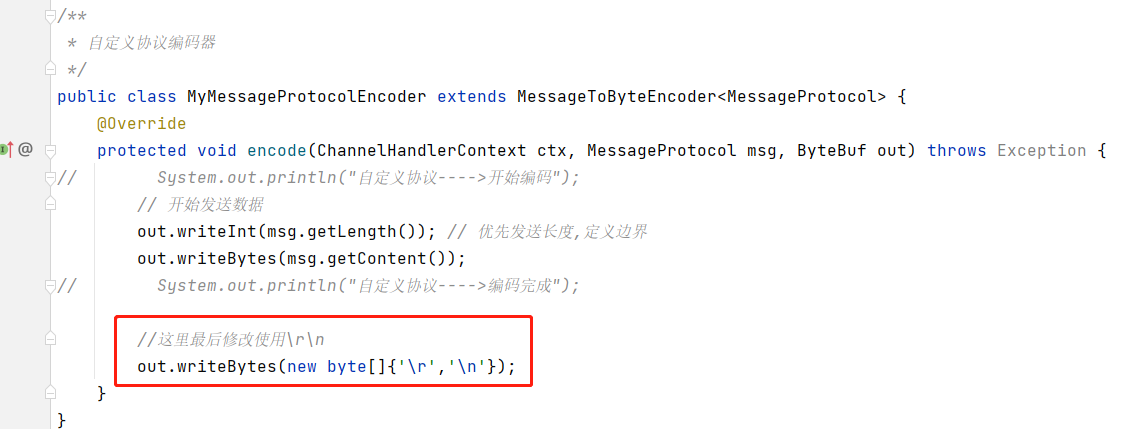

编码器中发送结束位置增加

//这里最后修改使用\r\n out.writeBytes(new byte[]{'\r','\n'});

再次运行程序 数据包可以正常接收了。

最终测试

服务器端

log4j:WARN No appenders could be found for logger (io.netty.util.internal.logging.InternalLoggerFactory). log4j:WARN Please initialize the log4j system properly. log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info. server is ready ...... 服务器第1次接收到来自客户端的数据:今天天气冷, 打火锅0 ...... 服务器第999次接收到来自客户端的数据:今天天气冷, 打火锅998 服务器第1000次接收到来自客户端的数据:今天天气冷, 打火锅999

客户端

log4j:WARN No appenders could be found for logger (io.netty.util.internal.logging.InternalLoggerFactory). log4j:WARN Please initialize the log4j system properly. log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info. 客户端第1次接收服务端的回送:48fa6d78-8079-4700-b488-ca2af9eb3f8c ...... 客户端第999次接收服务端的回送:581da47b-d77b-4972-af11-6d33057f6610 客户端第1000次接收服务端的回送:0014e906-69cb-4900-9409-f4d1af9148dd

总结

以前使用netty的时候也仅限于和硬件交互,而当时的硬件受限于成本问题是一条一条处理数据包的,所以基本上不会考虑黏包问题

然后就是ByteToMessageDecoder和MessageToByteEncoder两个类是比较底层实现数据流处理的,并没有带有拆包黏包的处理机制,需要自己在数据包头规定包的长度,而且无法处理过大的数据包,因为我一开始首先使用了这种方式处理数据,所以后来就没有再换成DelimiterBasedFrameDecoder加 StringDecoder来解析数据包,最后使用json直接转化为对象。

FAQ 参考粘贴于大佬的博客,加自己的改动

若有收获,就点个赞吧