When reposting this article, please credit the author and the source (author: finallyliuyu; source: 博客园, cnblogs).

I. Mathematical background

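Carrying mathematical knowledge, theory, and ways of thinking over to practical engineering problems often helps those problems get solved well.

But how do we bring mathematics into an engineering problem? First, it takes "mathematical thinking", for example understanding what a formula actually expresses. Second, it takes "modeling" ability: viewed from different angles, the same problem can be abstracted into very different mathematical models. Some models have ready-made solutions while others do not, and some models capture and represent the real problem better than others. If we define "mathematical thinking" as searching one's mind for the mathematical tools that fit the problem at hand, and "mathematical modeling" as the ability to abstract a real problem into a mathematical model, then real problems are solved well only when these two abilities work hand in hand. The various feature word selection methods used in text classification may give us a glimpse of this.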

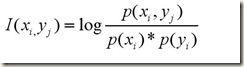

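First, let us look at what some of the formulas mean.

Note: the mutual information formula above contains some errors. The corrected version is: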

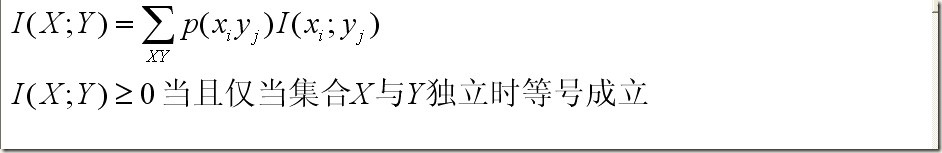

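Average mutual information (also called information gain):

Next, we introduce how average mutual information and mutual information are applied in feature word selection for text classification.

To avoid confusion, in feature word selection for text classification mutual information is called point-wise MI, and average mutual information is called IG (Information Gain).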

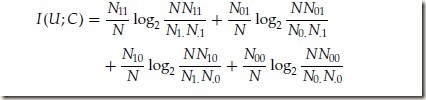

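Following the definitions on p. 189 of Manning's 《信息检索导论》 (Introduction to Information Retrieval, Chinese translation by Wang Bin):

The IG formula is: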
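For reference, IG can be written in terms of the four document counts that `CalInformationGain` in the code section takes as arguments. The notation is an assumption matched to that code: N11 is the number of documents that contain the word and belong to the class, N10 contain the word but are outside the class, N01 are in the class without the word, N00 have neither, and N is their sum. The row and column totals are N1. = N11 + N10, N0. = N01 + N00, N.1 = N11 + N01 and N.0 = N10 + N00.

$$
I(U;C)=\frac{N_{11}}{N}\log\frac{N\,N_{11}}{N_{1.}N_{.1}}
+\frac{N_{10}}{N}\log\frac{N\,N_{10}}{N_{1.}N_{.0}}
+\frac{N_{01}}{N}\log\frac{N\,N_{01}}{N_{0.}N_{.1}}
+\frac{N_{00}}{N}\log\frac{N\,N_{00}}{N_{0.}N_{.0}}
$$

A cell whose count is zero contributes nothing to the sum. The base of the logarithm only rescales every score by the same constant, so it does not change how the words are ranked.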

The point-wise MI formula is:
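Written with the same document counts, and with each probability estimated by its relative frequency, point-wise MI would take the following form. This is a reconstruction that agrees with the sum of logarithms computed by `CalPointWiseMI` in the code section; the symbols t (word) and c (class) are introduced here for illustration.

$$
\mathrm{PMI}(t,c)=\log\frac{P(t,c)}{P(t)\,P(c)}
=\log\frac{N\,N_{11}}{(N_{11}+N_{10})\,(N_{11}+N_{01})},
\qquad N=N_{11}+N_{10}+N_{01}+N_{00}
$$

Point-wise MI looks only at the cell where the word and the class occur together, whereas IG averages the corresponding log ratio over all four cells, weighted by each cell's relative frequency.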

II. C++ code implementing the two algorithms

Declarations for point-wise MI

double CalPointWiseMI(double N11,double N10,double N01,double N00); // compute the point-wise MI value

vector<pair<string,double> >PointWiseMIFeatureSelectionForPerclass(DICTIONARY& mymap,CONTINGENCY& contingencyTable,string classLabel);

void PointWiseMIFeatureSelection(vector<string > classLabels,DICTIONARY& mymap,CONTINGENCY& contingencyTable,int N,char * address);

Computing the point-wise MI value

/************************************************************************/
/* Compute the point-wise MI value                                      */
/************************************************************************/
double Preprocess::CalPointWiseMI(double N11,double N10,double N01,double N00)
{
    double pointwiseMI=0;
    // log(0) is undefined, so a word that never occurs in the class keeps the value 0
    if(N11>0)
    {
        // equals log( N*N11 / ((N11+N10)*(N11+N01)) ) with N=N11+N10+N01+N00
        pointwiseMI=log(N11+N10+N01+N00)+log(N11)-log(N11+N10)-log(N11+N01);
    }
    return pointwiseMI;
}
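To see the function at work on concrete numbers, here is a minimal standalone sketch outside the `Preprocess` class. The names `pointwiseMI` and `pointwiseMISumOfLogs` are made up for this illustration. The first uses the single-ratio form, the second the sum-of-logs form used above, and the two should agree up to rounding.

```cpp
#include <cmath>

// Point-wise MI of a word and a class from the 2x2 document counts:
//   n11: documents that contain the word and belong to the class
//   n10: documents that contain the word, outside the class
//   n01: documents in the class that do not contain the word
//   n00: documents with neither
// Natural logarithm. Returns 0 when n11 == 0, the same convention as the
// function above (the mathematical limit would be minus infinity).
double pointwiseMI(double n11, double n10, double n01, double n00) {
    if (n11 <= 0) return 0.0;
    const double n = n11 + n10 + n01 + n00;
    return std::log(n * n11 / ((n11 + n10) * (n11 + n01)));
}

// The same quantity written as a sum of logarithms.
double pointwiseMISumOfLogs(double n11, double n10, double n01, double n00) {
    if (n11 <= 0) return 0.0;
    return std::log(n11 + n10 + n01 + n00) + std::log(n11)
         - std::log(n11 + n10) - std::log(n11 + n01);
}
```

With 100 documents, 20 of them containing the word, 20 in the class and 10 in both, `pointwiseMI(10, 10, 10, 70)` evaluates log(100*10/(20*20)) = log 2.5. When the word and the class are independent, for example `pointwiseMI(25, 25, 25, 25)`, the ratio is 1 and the score is 0.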

Computing the point-wise MI of every word for each class

/************************************************************************/
/* Compute the point-wise MI of every word for one class                */
/************************************************************************/
vector<pair<string,double> > Preprocess::PointWiseMIFeatureSelectionForPerclass(DICTIONARY& mymap,CONTINGENCY& contingencyTable,string classLabel)
{
    int N=endIndex-beginIndex+1;// total number of documents
    vector<string>tempvector;// every word in the bag of words
    vector<pair<string,double> > MIInfo;
    for(map<string,vector<pair<int,int> > >::iterator it=mymap.begin();it!=mymap.end();++it)
    {
        tempvector.push_back(it->first);
    }
    // compute the point-wise MI value of each word
    for(vector<string>::iterator ittmp=tempvector.begin();ittmp!=tempvector.end();ittmp++)
    {
        int N1=mymap[*ittmp].size();// used as the number of documents that contain the word
        pair<string,string> compoundKey=make_pair(*ittmp,classLabel);
        double N11=double(contingencyTable[compoundKey].first);
        double N01=double(contingencyTable[compoundKey].second);
        double N10=double(N1-N11);
        double N00=double(N-N1-N01);
        double miValue=CalPointWiseMI(N11,N10,N01,N00);
        MIInfo.push_back(make_pair(*ittmp,miValue));
    }
    // sort the words by point-wise MI, largest first
    stable_sort(MIInfo.begin(), MIInfo.end(),isLarger);
    return MIInfo;
}
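The function above stores only two counts per (word, class) pair and derives the other two. The sketch below isolates that step and the final sort. Everything in it is an assumption made for illustration: `Counts`, `deriveCounts`, `scoreDescending` and `rankByScore` are hypothetical names, and `scoreDescending` only stands in for `isLarger`, whose definition is not shown in this article.

```cpp
#include <algorithm>
#include <string>
#include <utility>
#include <vector>

// The full 2x2 table for one (word, class) pair.
struct Counts { double n11, n10, n01, n00; };

// n   : total number of documents
// n1  : number of documents that contain the word
// n11 : documents that contain the word and belong to the class
// n01 : documents in the class that do not contain the word
Counts deriveCounts(int n, int n1, int n11, int n01) {
    Counts c;
    c.n11 = n11;
    c.n01 = n01;
    c.n10 = n1 - n11;      // contain the word, outside the class
    c.n00 = n - n1 - n01;  // neither contain the word nor belong to the class
    return c;
}

// Hypothetical comparator: higher score first.
bool scoreDescending(const std::pair<std::string, double>& a,
                     const std::pair<std::string, double>& b) {
    return a.second > b.second;
}

// Stable sort keeps words with equal scores in their original order.
std::vector<std::pair<std::string, double> >
rankByScore(std::vector<std::pair<std::string, double> > scored) {
    std::stable_sort(scored.begin(), scored.end(), scoreDescending);
    return scored;
}
```

For example, `deriveCounts(100, 20, 10, 10)` fills in n10 = 10 and n00 = 70, so the four cells add up to the 100 documents again.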

Point-wise MI feature word selection

/************************************************************************/
/* Point-wise MI feature word selection                                 */
/************************************************************************/
void Preprocess::PointWiseMIFeatureSelection(vector<string > classLabels,DICTIONARY& mymap,CONTINGENCY& contingencyTable,int N,char * address)
{
    clock_t start,finish;
    double totaltime;
    int totalTraingingCorpus=endIndex-beginIndex+1;// total number of documents in the training corpus
    set<string>finalKeywords;// the feature words finally selected
    vector<pair<string,double> >MIInfo;
    start=clock();
    for(vector<string>::iterator it=classLabels.begin();it!=classLabels.end();it++)
    {
        // number of training documents that belong to this class
        int N_subClassCnt=getCategorizationNum(*it,"TrainingCorpus");
        // threshold decides how many feature words are taken from this class
        int threshold=N_subClassCnt*N/totalTraingingCorpus;
        MIInfo=PointWiseMIFeatureSelectionForPerclass(mymap,contingencyTable,*it);
        // stop at the end of the ranking in case threshold exceeds the vocabulary size
        for(vector<pair<string,double> >::size_type j=0;j<MIInfo.size()&&(int)j<threshold;j++)
        {
            finalKeywords.insert(MIInfo[j].first);
        }
        MIInfo.clear();
    }
    ofstream outfile(address);
    int finalKeyWordsCount=finalKeywords.size();
    for (set<string>::iterator it=finalKeywords.begin();it!=finalKeywords.end();it++)
    {
        outfile<<*it<<endl;
    }
    outfile.close();
    cout<<"Total feature words selected: "<<finalKeyWordsCount<<endl;
    finish=clock();
    totaltime=(double)(finish-start)/CLOCKS_PER_SEC;
    cout<<"Feature word selection took "<<totaltime<<" s"<<endl;
}
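The selection routine gives each class a share of the word budget proportional to its number of training documents, then pools the top-ranked words of all classes into one set. The following is a minimal sketch of just that logic. It assumes that the parameter `N` of the routine is the overall number of feature words requested, which is how the threshold formula reads. `classQuota` and `takeTop` are hypothetical names.

```cpp
#include <cstddef>
#include <set>
#include <string>
#include <utility>
#include <vector>

// Share of the word budget for one class, proportional to the class size.
// Integer division rounds down, so the quotas may add up to slightly less
// than the budget.
int classQuota(int classDocs, int budget, int totalDocs) {
    if (totalDocs <= 0) return 0;
    return static_cast<int>(static_cast<long long>(classDocs) * budget / totalDocs);
}

// Insert the first `quota` words of a ranking (best first) into `selected`.
// A word picked by several classes is stored once, because `selected` is a set.
void takeTop(const std::vector<std::pair<std::string, double> >& ranked,
             int quota, std::set<std::string>& selected) {
    for (std::size_t j = 0; j < ranked.size() && static_cast<int>(j) < quota; ++j) {
        selected.insert(ranked[j].first);
    }
}
```

Because the set removes duplicates, the final number of feature words can be smaller than the requested budget whenever two classes rank the same word highly. That would explain why the routine prints the actual count at the end.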

Information gain feature word selection algorithm

double CalInformationGain(double N11,double N10,double N01,double N00); // compute the IG value

vector<pair<string,double> >InformationGainFeatureSelectionForPerclass(DICTIONARY& mymap,CONTINGENCY& contingencyTable,string classLabel);

void InformationGainFeatureSelection(vector<string > classLabels,DICTIONARY& mymap,CONTINGENCY& contingencyTable,int N,char * address);

Computing the IG value

/************************************************************************/
/* Compute the IG value                                                 */
/************************************************************************/
double Preprocess::CalInformationGain(double N11,double N10,double N01,double N00)
{
    double IG=0;
    double Ntotal=N11+N10+N01+N00;
    double N1_=N11+N10;// documents that contain the word
    double N0_=N01+N00;// documents that do not contain the word
    double N_0=N10+N00;// documents outside the class
    double N_1=N11+N01;// documents in the class
    // each non-empty cell adds (Nij/N)*log(N*Nij/(row total*column total));
    // empty cells are skipped because log(0) is undefined
    if(N11>0)
    {
        IG+=N11/Ntotal*log(Ntotal*N11/(N1_*N_1));
    }
    if(N10>0)
    {
        IG+=N10/Ntotal*log(Ntotal*N10/(N1_*N_0));
    }
    if(N01>0)
    {
        IG+=N01/Ntotal*log(Ntotal*N01/(N0_*N_1));
    }
    if(N00>0)
    {
        IG+=N00/Ntotal*log(Ntotal*N00/(N0_*N_0));
    }
    return IG;
}
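The four branches above all follow one pattern, which the standalone sketch below factors into a helper so the structure of the sum is easier to see. `cellTerm` and `informationGain` are hypothetical names used only for this illustration. The arithmetic is meant to match the function above, with natural logarithms.

```cpp
#include <cmath>

// Contribution of one cell of the 2x2 table:
//   (nij / n) * log( n * nij / (rowTotal * colTotal) )
// An empty cell contributes 0.
static double cellTerm(double nij, double rowTotal, double colTotal, double n) {
    if (nij <= 0) return 0.0;
    return nij / n * std::log(n * nij / (rowTotal * colTotal));
}

// Information gain (average mutual information) of a word and a class.
double informationGain(double n11, double n10, double n01, double n00) {
    const double n = n11 + n10 + n01 + n00;
    if (n <= 0) return 0.0;
    const double wordYes = n11 + n10;   // documents that contain the word
    const double wordNo = n01 + n00;    // documents that do not
    const double classYes = n11 + n01;  // documents in the class
    const double classNo = n10 + n00;   // documents outside the class
    return cellTerm(n11, wordYes, classYes, n) + cellTerm(n10, wordYes, classNo, n)
         + cellTerm(n01, wordNo, classYes, n) + cellTerm(n00, wordNo, classNo, n);
}
```

Two boundary cases make a quick sanity check. If the word is independent of the class, as in `informationGain(25, 25, 25, 25)`, every log ratio is log 1 and the result is 0. If the word identifies the class perfectly, as in `informationGain(50, 0, 0, 50)`, the two non-empty cells each contribute 0.5*log 2, so the result is log 2.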

Computing and sorting the IG value of every word for one class

/************************************************************************/
/* Compute the IG value of every word for one class, then sort          */
/************************************************************************/
vector<pair<string,double> > Preprocess::InformationGainFeatureSelectionForPerclass(DICTIONARY& mymap,CONTINGENCY& contingencyTable,string classLabel)
{
    int N=endIndex-beginIndex+1;// total number of documents
    vector<string>tempvector;// every word in the bag of words
    vector<pair<string,double> > IGInfo;
    for(map<string,vector<pair<int,int> > >::iterator it=mymap.begin();it!=mymap.end();++it)
    {
        tempvector.push_back(it->first);
    }
    // compute the IG value of each word
    for(vector<string>::iterator ittmp=tempvector.begin();ittmp!=tempvector.end();ittmp++)
    {
        int N1=mymap[*ittmp].size();// used as the number of documents that contain the word
        pair<string,string> compoundKey=make_pair(*ittmp,classLabel);
        double N11=double(contingencyTable[compoundKey].first);
        double N01=double(contingencyTable[compoundKey].second);
        double N10=double(N1-N11);
        double N00=double(N-N1-N01);
        //double chiValue=CalChiSquareValue(N11,N10,N01,N00);
        double igValue=CalInformationGain(N11,N10,N01,N00);
        IGInfo.push_back(make_pair(*ittmp,igValue));
    }
    // sort the words by IG value, largest first
    stable_sort(IGInfo.begin(),IGInfo.end(),isLarger);
    return IGInfo;
}

Information gain feature word selection

/************************************************************************/
/* Information gain feature word selection                              */
/************************************************************************/
void Preprocess::InformationGainFeatureSelection(vector<string > classLabels,DICTIONARY& mymap,CONTINGENCY& contingencyTable,int N,char * address)
{
    clock_t start,finish;
    double totaltime;
    int totalTraingingCorpus=endIndex-beginIndex+1;// total number of documents in the training corpus
    set<string>finalKeywords;// the feature words finally selected
    vector<pair<string,double> >IGInfo;
    start=clock();
    for(vector<string>::iterator it=classLabels.begin();it!=classLabels.end();it++)
    {
        // number of training documents that belong to this class
        int N_subClassCnt=getCategorizationNum(*it,"TrainingCorpus");
        // threshold decides how many feature words are taken from this class
        int threshold=N_subClassCnt*N/totalTraingingCorpus;
        IGInfo=InformationGainFeatureSelectionForPerclass(mymap,contingencyTable,*it);
        // stop at the end of the ranking in case threshold exceeds the vocabulary size
        for(vector<pair<string,double> >::size_type j=0;j<IGInfo.size()&&(int)j<threshold;j++)
        {
            finalKeywords.insert(IGInfo[j].first);
        }
        IGInfo.clear();
    }
    ofstream outfile(address);
    int finalKeyWordsCount=finalKeywords.size();
    for (set<string>::iterator it=finalKeywords.begin();it!=finalKeywords.end();it++)
    {
        outfile<<*it<<endl;
    }
    outfile.close();
    cout<<"Total feature words selected: "<<finalKeyWordsCount<<endl;
    finish=clock();
    totaltime=(double)(finish-start)/CLOCKS_PER_SEC;
    cout<<"Feature word selection took "<<totaltime<<" s"<<endl;
}

Appendix: the DF feature word selection algorithm