Syncing Data from Canal to Kafka

1. Environment preparation

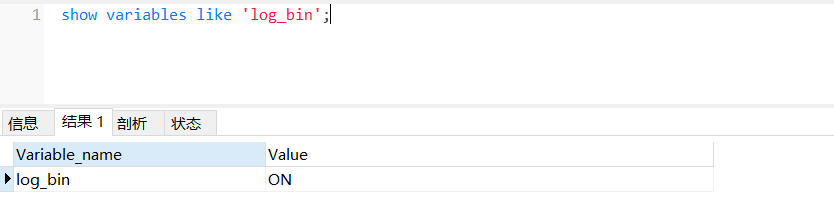

1. Enable binlog on the MySQL database

show variables like 'log_%';

show variables like '%binlog_format%';
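The two queries above should show that binary logging is switched on and which binlog format is in use. As a rough sketch of how to read the results, the helper below takes the variable names and values returned by those queries as a plain dict and lists anything that looks wrong. The function name `check_binlog_settings` is hypothetical, and the ROW requirement is an assumption based on Canal's usual setup guidance, not something this post states.

```python
def check_binlog_settings(variables):
    """Return a list of problems found in MySQL binlog-related variables.

    `variables` maps variable names to values, as returned by
    SHOW VARIABLES LIKE 'log_%' and SHOW VARIABLES LIKE '%binlog_format%'.
    """
    problems = []
    # log_bin must be ON, otherwise MySQL writes no binlog at all.
    if str(variables.get("log_bin", "")).upper() != "ON":
        problems.append("log_bin is not ON")
    # Assumption: row-based logging is what Canal needs to see row changes.
    if str(variables.get("binlog_format", "")).upper() != "ROW":
        problems.append("binlog_format is not ROW")
    return problems


if __name__ == "__main__":
    # Made-up sample values; replace with what your server reports.
    sample = {"log_bin": "ON", "binlog_format": "MIXED"}
    for problem in check_binlog_settings(sample):
        print(problem)
```

An empty list means both settings look as expected.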

Create the following t_user table in the test database:

-- ----------------------------
-- Table structure for t_user
-- ----------------------------
DROP TABLE IF EXISTS `t_user`;
CREATE TABLE `t_user` (
  `id` bigint(20) NOT NULL AUTO_INCREMENT,
  `name` varchar(255) NOT NULL COMMENT 'User name',
  `gender` tinyint(4) DEFAULT NULL COMMENT 'Gender 1: male 2: female',
  `phone` varchar(20) NOT NULL COMMENT 'Phone number',
  `email` varchar(50) DEFAULT NULL COMMENT 'Email',
  `status` tinyint(4) NOT NULL DEFAULT '1' COMMENT 'Status 1: enabled 2: disabled',
  `birthday` date DEFAULT NULL COMMENT 'Date of birth',
  `id_card` varchar(20) DEFAULT NULL COMMENT 'ID number',
  `head_portrait` varchar(255) DEFAULT NULL COMMENT 'Avatar',
  `create_time` datetime NOT NULL DEFAULT CURRENT_TIMESTAMP COMMENT 'Creation time',
  `update_time` datetime NOT NULL DEFAULT CURRENT_TIMESTAMP COMMENT 'Last modified time',
  PRIMARY KEY (`id`),
  UNIQUE KEY `uk_user_phone` (`phone`) USING BTREE
) ENGINE=InnoDB AUTO_INCREMENT=12 DEFAULT CHARSET=utf8mb4 COMMENT='User table';

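Note: 1. Enable binlog. 2. Create the test database. My Canal configuration points at the test database by default; the table can be anything, because no table-specific matching regex is configured in Canal.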

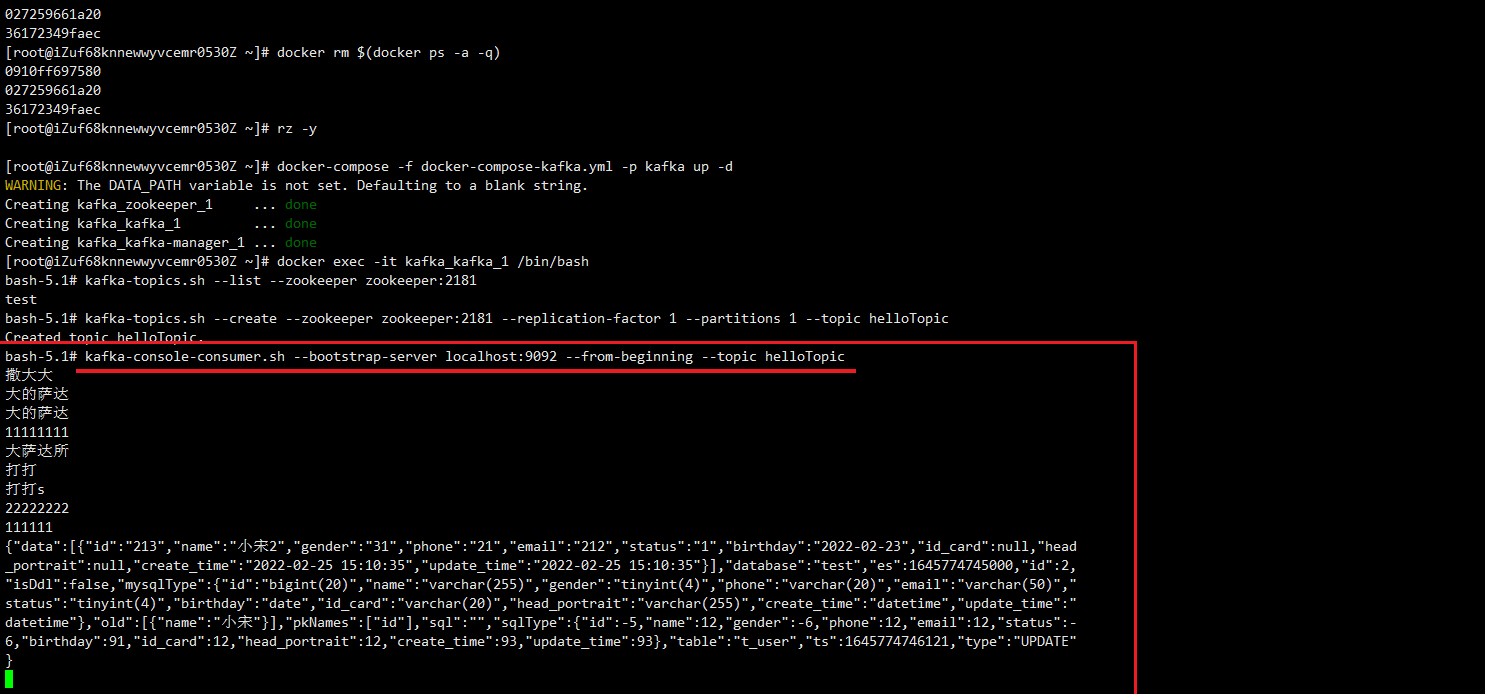

2. Kafka installation

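Note: 1. Make sure the helloTopic topic has been created, and that you can open two terminal windows with the Kafka shell scripts on Linux, one producing and one consuming messages. Keep both windows open so you can watch messages being produced and consumed in real time and confirm that the queue service is working.

2. Open port 9092 in the firewall so Kafka is reachable from outside. I verified this with a C# sample; a Kafka GUI tool works too.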
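A quick way to confirm the firewall rule from another machine is to attempt a plain TCP connection to the broker port. The sketch below is one way to do that; `port_is_open` is a hypothetical helper name, and a successful connection only shows that the port is reachable, not that Kafka is fully usable from that client.

```python
import socket


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    # 47.102.117.31:9092 is the broker address used later in this post.
    print(port_is_open("47.102.117.31", 9092))
```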

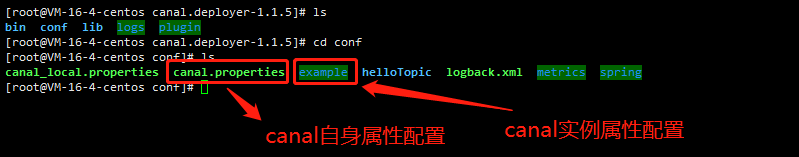

3. Canal middleware

1. Edit the instance properties file conf/example/instance.properties

cat conf/example/instance.properties

Changed parts

The four changes:

Set the address of the master database

canal.instance.master.address=47.102.117.31:3306

Set the database username and password

canal.instance.dbUsername=root

canal.instance.dbPassword=abc123

New setting: the default database to connect to

canal.instance.defaultDatabaseName=test

Change the message topic

canal.mq.topic=helloTopic
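After editing, it can help to confirm that the file really contains the values listed above. The following is a minimal sketch, assuming the file uses simple `key=value` lines; `parse_properties` and `find_mismatches` are hypothetical helper names, and the parser ignores features of the full Java properties format such as line continuations.

```python
def parse_properties(text):
    """Parse simple key=value lines, skipping blank lines and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


# The values changed in this post.
EXPECTED = {
    "canal.instance.master.address": "47.102.117.31:3306",
    "canal.instance.dbUsername": "root",
    "canal.instance.dbPassword": "abc123",
    "canal.instance.defaultDatabaseName": "test",
    "canal.mq.topic": "helloTopic",
}


def find_mismatches(props, expected=EXPECTED):
    """Return {key: (expected, actual)} for keys whose value differs."""
    return {
        key: (want, props.get(key))
        for key, want in expected.items()
        if props.get(key) != want
    }


if __name__ == "__main__":
    with open("conf/example/instance.properties", encoding="utf-8") as fh:
        print(find_mismatches(parse_properties(fh.read())))
```

An empty dict means all of the changed values are in place.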

The full file is as follows:

#################################################
## mysql serverId , v1.0.26+ will autoGen
# canal.instance.mysql.slaveId=0

# enable gtid use true/false
canal.instance.gtidon=false

# position info
canal.instance.master.address=47.102.117.31:3306
canal.instance.master.journal.name=
canal.instance.master.position=
canal.instance.master.timestamp=
canal.instance.master.gtid=

# rds oss binlog
canal.instance.rds.accesskey=
canal.instance.rds.secretkey=
canal.instance.rds.instanceId=

# table meta tsdb info
canal.instance.tsdb.enable=true
#canal.instance.tsdb.url=jdbc:mysql://127.0.0.1:3306/canal_tsdb
#canal.instance.tsdb.dbUsername=canal
#canal.instance.tsdb.dbPassword=canal

#canal.instance.standby.address =
#canal.instance.standby.journal.name =
#canal.instance.standby.position =
#canal.instance.standby.timestamp =
#canal.instance.standby.gtid=

# username/password
canal.instance.dbUsername=root
canal.instance.dbPassword=abc123
canal.instance.defaultDatabaseName=test
canal.instance.connectionCharset = UTF-8
# enable druid Decrypt database password
canal.instance.enableDruid=false
#canal.instance.pwdPublicKey=MFwwDQYJKoZIhvcNAQEBBQADSwAwSAJBALK4BUxdDltRRE5/zXpVEVPUgunvscYFtEip3pmLlhrWpacX7y7GCMo2/JM6LeHmiiNdH1FWgGCpUfircSwlWKUCAwEAAQ==

# table regex
canal.instance.filter.regex=.*\\..*
# table black regex
canal.instance.filter.black.regex=mysql\\.slave_.*
# table field filter(format: schema1.tableName1:field1/field2,schema2.tableName2:field1/field2)
#canal.instance.filter.field=test1.t_product:id/subject/keywords,test2.t_company:id/name/contact/ch
# table field black filter(format: schema1.tableName1:field1/field2,schema2.tableName2:field1/field2)
#canal.instance.filter.black.field=test1.t_product:subject/product_image,test2.t_company:id/name/contact/ch

# mq config
canal.mq.topic=helloTopic
# dynamic topic route by schema or table regex
#canal.mq.dynamicTopic=mytest1.user,mytest2\\..*,.*\\..*
canal.mq.partition=0
# hash partition config
#canal.mq.partitionsNum=3
#canal.mq.partitionHash=test.table:id^name,.*\\..*
#canal.mq.dynamicTopicPartitionNum=test.*:4,mycanal:6
#################################################

2. Configure the conf/canal.properties file

cat conf/canal.properties

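Three changes:

Set the destination. Note that canal.destinations lists Canal instance names, each of which maps to a directory under conf/ (for example conf/example); the Kafka topic itself is set by canal.mq.topic in instance.properties.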

canal.destinations = helloTopic

Set the Kafka address

kafka.bootstrap.servers = 47.102.117.31:9092

Set the server mode

canal.serverMode = kafka
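With canal.serverMode = kafka and canal.mq.flatMessage = true (the latter appears in the full file below), each record that Canal writes to the topic is a JSON document describing one batch of row changes. The sketch below shows how a consumer might summarize such a record once it has the value as a string. The field names (`database`, `table`, `type`, `data`, `isDdl`) reflect my understanding of Canal's flat message layout, the sample payload is made up for illustration, and `summarize_flat_message` is a hypothetical helper name. Fetching the record from Kafka is left to whichever client library you use.

```python
import json


def summarize_flat_message(raw):
    """Turn one Canal flat-message JSON string into a list of row summaries."""
    msg = json.loads(raw)
    table = f'{msg.get("database")}.{msg.get("table")}'
    if msg.get("isDdl"):
        # DDL events carry the statement in "sql" and have no row data.
        return [{"table": table, "type": msg.get("type"), "row": None}]
    rows = msg.get("data") or []
    return [{"table": table, "type": msg.get("type"), "row": row} for row in rows]


# Made-up sample in the assumed flat-message shape (column values as strings).
SAMPLE = (
    '{"data":[{"id":"12","name":"Tom","phone":"13800000000"}],'
    '"database":"test","table":"t_user","type":"INSERT",'
    '"isDdl":false,"old":null,"pkNames":["id"]}'
)

if __name__ == "__main__":
    for item in summarize_flat_message(SAMPLE):
        print(item["type"], item["table"], item["row"])
```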

The full file content is as follows:

#################################################

######### common argument #############

#################################################

# tcp bind ip

canal.ip =

# register ip to zookeeper

canal.register.ip =

canal.port = 11111

canal.metrics.pull.port = 11112

# canal instance user/passwd

# canal.user = canal

# canal.passwd = E3619321C1A937C46A0D8BD1DAC39F93B27D4458

# canal admin config

#canal.admin.manager = 127.0.0.1:8089

canal.admin.port = 11110

canal.admin.user = admin

canal.admin.passwd = 4ACFE3202A5FF5CF467898FC58AAB1D615029441

# admin auto register

#canal.admin.register.auto = true

#canal.admin.register.cluster =

#canal.admin.register.name =

canal.zkServers =

# flush data to zk

canal.zookeeper.flush.period = 1000

canal.withoutNetty = false

# tcp, kafka, rocketMQ, rabbitMQ

canal.serverMode = kafka

# flush meta cursor/parse position to file

canal.file.data.dir = ${canal.conf.dir}

canal.file.flush.period = 1000

## memory store RingBuffer size, should be Math.pow(2,n)

canal.instance.memory.buffer.size = 16384

## memory store RingBuffer used memory unit size , default 1kb

canal.instance.memory.buffer.memunit = 1024

## meory store gets mode used MEMSIZE or ITEMSIZE

canal.instance.memory.batch.mode = MEMSIZE

canal.instance.memory.rawEntry = true

## detecing config

canal.instance.detecting.enable = false

#canal.instance.detecting.sql = insert into retl.xdual values(1,now()) on duplicate key update x=now()

canal.instance.detecting.sql = select 1

canal.instance.detecting.interval.time = 3

canal.instance.detecting.retry.threshold = 3

canal.instance.detecting.heartbeatHaEnable = false

# support maximum transaction size, more than the size of the transaction will be cut into multiple transactions delivery

canal.instance.transaction.size = 1024

# mysql fallback connected to new master should fallback times

canal.instance.fallbackIntervalInSeconds = 60

# network config

canal.instance.network.receiveBufferSize = 16384

canal.instance.network.sendBufferSize = 16384

canal.instance.network.soTimeout = 30

# binlog filter config

canal.instance.filter.druid.ddl = true

canal.instance.filter.query.dcl = false

canal.instance.filter.query.dml = false

canal.instance.filter.query.ddl = false

canal.instance.filter.table.error = false

canal.instance.filter.rows = false

canal.instance.filter.transaction.entry = false

canal.instance.filter.dml.insert = false

canal.instance.filter.dml.update = false

canal.instance.filter.dml.delete = false

# binlog format/image check

canal.instance.binlog.format = ROW,STATEMENT,MIXED

canal.instance.binlog.image = FULL,MINIMAL,NOBLOB

# binlog ddl isolation

canal.instance.get.ddl.isolation = false

# parallel parser config

canal.instance.parser.parallel = true

## concurrent thread number, default 60% available processors, suggest not to exceed Runtime.getRuntime().availableProcessors()

#canal.instance.parser.parallelThreadSize = 16

## disruptor ringbuffer size, must be power of 2

canal.instance.parser.parallelBufferSize = 256

# table meta tsdb info

canal.instance.tsdb.enable = true

canal.instance.tsdb.dir = ${canal.file.data.dir:../conf}/${canal.instance.destination:}

canal.instance.tsdb.url = jdbc:h2:${canal.instance.tsdb.dir}/h2;CACHE_SIZE=1000;MODE=MYSQL;

canal.instance.tsdb.dbUsername = canal

canal.instance.tsdb.dbPassword = canal

# dump snapshot interval, default 24 hour

canal.instance.tsdb.snapshot.interval = 24

# purge snapshot expire , default 360 hour(15 days)

canal.instance.tsdb.snapshot.expire = 360

#################################################

######### destinations #############

#################################################

canal.destinations = helloTopic

# conf root dir

canal.conf.dir = ../conf

# auto scan instance dir add/remove and start/stop instance

canal.auto.scan = true

canal.auto.scan.interval = 5

# set this value to 'true' means that when binlog pos not found, skip to latest.

# WARN: pls keep 'false' in production env, or if you know what you want.

canal.auto.reset.latest.pos.mode = false

canal.instance.tsdb.spring.xml = classpath:spring/tsdb/h2-tsdb.xml

#canal.instance.tsdb.spring.xml = classpath:spring/tsdb/mysql-tsdb.xml

canal.instance.global.mode = spring

canal.instance.global.lazy = false

canal.instance.global.manager.address = ${canal.admin.manager}

#canal.instance.global.spring.xml = classpath:spring/memory-instance.xml

canal.instance.global.spring.xml = classpath:spring/file-instance.xml

#canal.instance.global.spring.xml = classpath:spring/default-instance.xml

##################################################

######### MQ Properties #############

##################################################

# aliyun ak/sk , support rds/mq

canal.aliyun.accessKey =

canal.aliyun.secretKey =

canal.aliyun.uid=

canal.mq.flatMessage = true

canal.mq.canalBatchSize = 50

canal.mq.canalGetTimeout = 100

# Set this value to "cloud", if you want open message trace feature in aliyun.

canal.mq.accessChannel = local

canal.mq.database.hash = true

canal.mq.send.thread.size = 30

canal.mq.build.thread.size = 8

##################################################

######### Kafka #############

##################################################

kafka.bootstrap.servers = 47.102.117.31:9092

kafka.acks = all

kafka.compression.type = none

kafka.batch.size = 16384

kafka.linger.ms = 1

kafka.max.request.size = 1048576

kafka.buffer.memory = 33554432

kafka.max.in.flight.requests.per.connection = 1

kafka.retries = 0

kafka.kerberos.enable = false

kafka.kerberos.krb5.file = "../conf/kerberos/krb5.conf"

kafka.kerberos.jaas.file = "../conf/kerberos/jaas.conf"

##################################################

######### RocketMQ #############

##################################################

rocketmq.producer.group = test

rocketmq.enable.message.trace = false

rocketmq.customized.trace.topic =

rocketmq.namespace =

rocketmq.namesrv.addr = 127.0.0.1:9876

rocketmq.retry.times.when.send.failed = 0

rocketmq.vip.channel.enabled = false

rocketmq.tag =

##################################################

######### RabbitMQ #############

##################################################

rabbitmq.host =

rabbitmq.virtual.host =

rabbitmq.exchange =

rabbitmq.username =

rabbitmq.password =

rabbitmq.deliveryMode =

Online YAML/YML editor (validator)

https://www.bejson.com/validators/yaml_editor

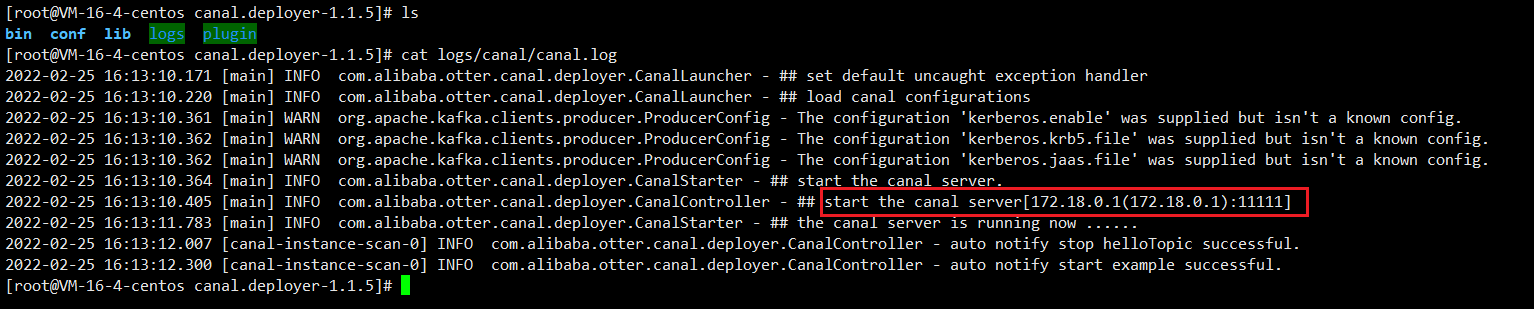

About the startup logs

View the Canal log

tail -f logs/canal/canal.log

View the instance log

tail -f logs/example/example.log

As shown in the figure:

Grant the MySQL account that Canal connects with the privileges it needs to act as a MySQL slave:

CREATE USER canal IDENTIFIED BY 'canal';
GRANT SELECT, REPLICATION SLAVE, REPLICATION CLIENT ON *.* TO 'canal'@'%';
-- GRANT ALL PRIVILEGES ON *.* TO 'canal'@'%' ;
FLUSH PRIVILEGES;
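To double-check the result, you can run SHOW GRANTS for the account and compare the output with the three privileges granted above. The sketch below parses a single line of that output; `missing_privileges` is a hypothetical helper name, the sample line is an assumption about what the server prints, and the check looks only at the privilege names, not at the scope after ON.

```python
import re

# The privileges granted to the canal account above.
REQUIRED = {"SELECT", "REPLICATION SLAVE", "REPLICATION CLIENT"}


def missing_privileges(grant_line, required=REQUIRED):
    """Return the required privileges absent from one SHOW GRANTS line."""
    match = re.match(r"\s*GRANT\s+(.*?)\s+ON\s", grant_line, re.IGNORECASE)
    if not match:
        # Not a GRANT line at all, so nothing can be confirmed.
        return set(required)
    granted = {p.strip().upper() for p in match.group(1).split(",")}
    if "ALL PRIVILEGES" in granted:
        return set()
    return set(required) - granted


if __name__ == "__main__":
    line = "GRANT SELECT, REPLICATION SLAVE ON *.* TO `canal`@`%`"
    print(missing_privileges(line))
```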

That's all for now.
