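Kubernetes Networking

Kubernetes-Service

1. Why Services exist

1. Prevent losing contact with Pods when they are recreated and their IPs change (service discovery)

2. Define an access policy for a group of Pods (load balancing)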

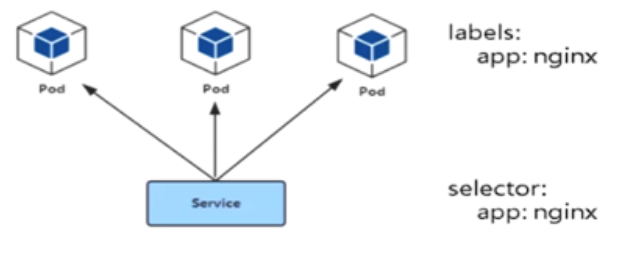

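2. Relationship between Pods and Services

1. A Service is associated with its Pods through a label selector

2. The Service load-balances traffic across those Pods (TCP/UDP, layer 4)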
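The label-selector relationship can be modeled in a few lines of Python. This is an illustrative sketch, not Kubernetes code. It assumes equality-based selectors only and treats only ready Pods as endpoints. The `Pod` class, the function names and the IP addresses are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict = field(default_factory=dict)
    ready: bool = True


def selector_matches(selector: dict, labels: dict) -> bool:
    """Equality-based selector: every key=value pair must be present in the Pod's labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def endpoints(selector: dict, pods: list, target_port: int) -> list:
    """Addresses the Service forwards to: ready Pods whose labels match the selector."""
    return [
        f"{pod.ip}:{target_port}"
        for pod in pods
        if pod.ready and selector_matches(selector, pod.labels)
    ]


pods = [
    Pod("web-1", "10.244.1.10", {"app": "web"}),
    Pod("web-2", "10.244.2.11", {"app": "web", "tier": "frontend"}),  # extra labels are fine
    Pod("db-1", "10.244.1.12", {"app": "db"}),                        # different app: excluded
    Pod("web-3", "10.244.2.13", {"app": "web"}, ready=False),         # not ready: excluded
]
print(endpoints({"app": "web"}, pods, 8080))  # ['10.244.1.10:8080', '10.244.2.11:8080']
```

Membership is recomputed from labels rather than from fixed IPs. A Pod that is recreated with a new IP is therefore picked up automatically, which is the service-discovery point made above.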

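Load balancer types

1. Layer 4: transport layer, forwards based on IP address and port

2. Layer 7: application layer, forwards based on the application protocol, e.g. HTTP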

3. service三种常用类型

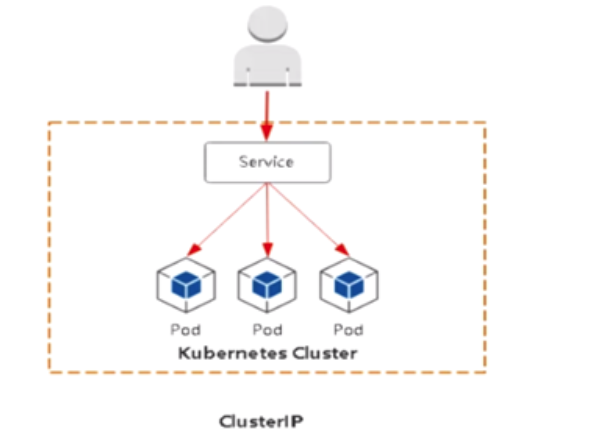

1.Cluster:集群内部使用

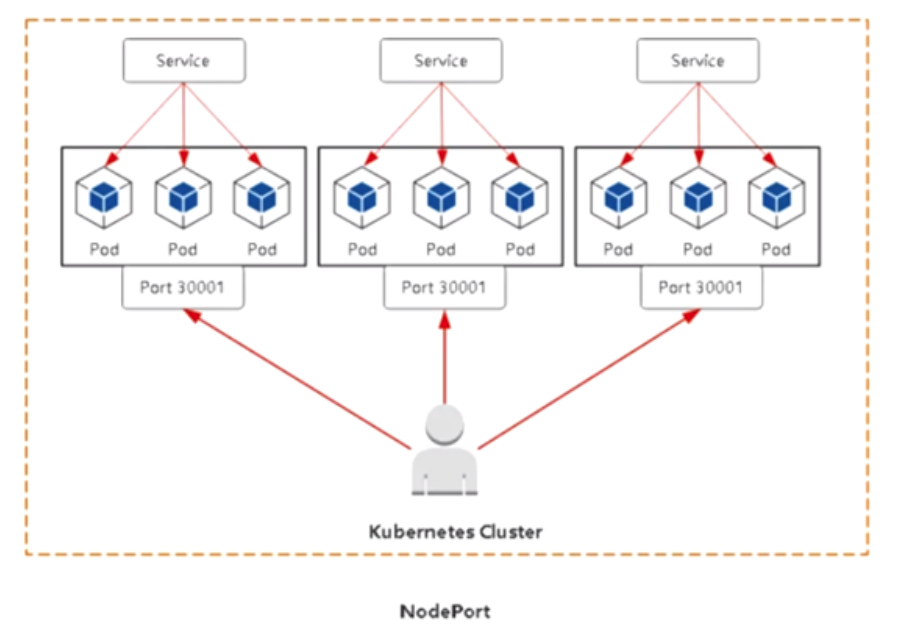

2.Node port:对外暴露应用

3.Loadbalancer: 对外暴露应用,适合公有云(腾讯云、阿里云、亚马逊等)

ClusterIP:

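The default type. It allocates a stable IP address, a virtual IP (VIP), that is reachable only from inside the cluster (for example, from Pods).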

NodePort:

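Opens a port on every node to expose the Service, so it can be reached from outside the cluster. A stable internal cluster IP is also allocated. Access address: <nodeIP>:<nodePort> (by default, nodePort is taken from the range 30000-32767).

port: the port on the ClusterIP; targetPort: the port the application in the container image listens on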

Generate the Service YAML

kubectl expose deploy web --port=80 --target-port=8080 --type=NodePort -o yaml --dry-run=client >service.yaml

cat service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: web
      name: web
    spec:
      ports:
      - port: 80          # port on the ClusterIP
        protocol: TCP
        targetPort: 8080  # port the application listens on inside the container
        nodePort: 30012   # port opened on every node (a fixed value added by hand)
      selector:
        app: web
      type: NodePort
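The three port fields form a chain: `<nodeIP>:<nodePort>` forwards to `<clusterIP>:<port>`, which forwards to `<podIP>:<targetPort>`. Below is a hedged Python sketch of that mapping for the `web` Service above. The ClusterIP, node IPs and Pod IPs are hypothetical, and the range check assumes the default kube-apiserver `--service-node-port-range` of 30000-32767.

```python
# Default kube-apiserver --service-node-port-range (30000-32767 inclusive)
NODE_PORT_RANGE = range(30000, 32768)


def service_hops(node_ips, node_port, cluster_ip, port, pod_ips, target_port):
    """Return the addresses a request passes through for a NodePort Service."""
    if node_port not in NODE_PORT_RANGE:
        raise ValueError(f"nodePort {node_port} is outside 30000-32767")
    return {
        # reachable from outside the cluster; this is also the backend list
        # a cloud load balancer receives for a LoadBalancer Service
        "node": [f"{ip}:{node_port}" for ip in node_ips],
        # reachable only from inside the cluster
        "cluster": f"{cluster_ip}:{port}",
        # where the application actually listens
        "pods": [f"{ip}:{target_port}" for ip in pod_ips],
    }


hops = service_hops(
    node_ips=["192.168.10.111", "192.168.10.112"],  # hypothetical nodes
    node_port=30012,
    cluster_ip="10.96.0.100",                      # hypothetical ClusterIP
    port=80,
    pod_ips=["10.244.1.10", "10.244.2.11"],        # hypothetical Pod IPs
    target_port=8080,
)
for hop, addresses in hops.items():
    print(hop, addresses)
```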

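LoadBalancer (cloud platforms only)

Similar to NodePort: a port is opened on every node to expose the Service. In addition, Kubernetes asks the underlying cloud platform to create a load balancer and adds every node (<nodeIP>:<nodePort>) to it as a backend.

User ---> domain name ---> load balancer (public IP) ---> nodePort (all nodes) ---> Pod

Each time an application is deployed, the load balancer has to be configured by hand, which is tedious.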

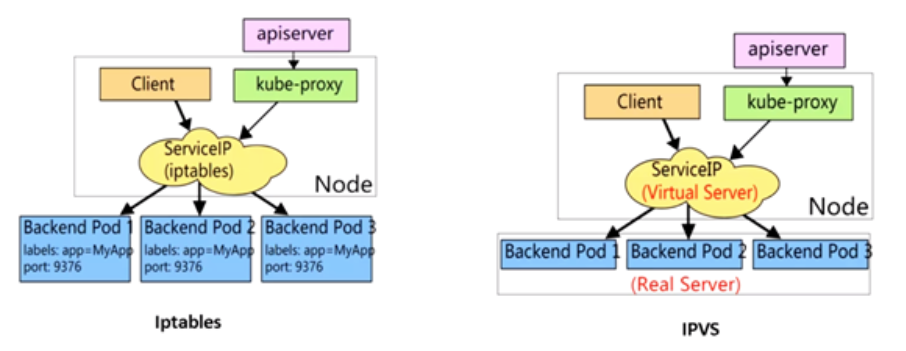

4. Service proxy modes

Service traffic is forwarded by kube-proxy using either iptables or IPVS.

Switching to IPVS mode (kubeadm clusters)

    kubectl edit configmap kube-proxy -n kube-system
    ...
        mode: "ipvs"
    ...
    kubectl delete pod kube-proxy-btz4p -n kube-system   # delete the Pod; it is recreated automatically
    kubectl logs kube-proxy-wwqbh -n kube-system         # check that IPVS mode is enabled

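Notes:

1. The kube-proxy configuration is stored as a ConfigMap.

2. For the change to take effect on every node, the kube-proxy Pod on every node must be recreated.

Switching to IPVS mode (binary installations):

    vim kube-proxy-config.yaml      # note: the file name may differ between installation guides
    # set:
    mode: ipvs
    ipvs:
      scheduler: "rr"
    # then restart kube-proxy
    systemctl restart kube-proxy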

Install the ipvsadm tool to inspect IPVS:

    yum install -y ipvsadm
    lsmod | grep ip_vs   # check whether the ip_vs kernel module is loaded
    modprobe ip_vs       # load it if it is not
    yum update -y        # or update the kernel

View the rules:

    [root@k8s-node ~]# ipvsadm -L -n
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  172.17.0.1:32487 rr
      -> 10.244.84.176:80             Masq    1      0          0
    TCP  192.168.10.111:30001 rr
      -> 10.244.247.3:8443            Masq    1      0          0
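The `rr` value in the Scheduler column is round robin: each new connection goes to the next real server in turn. Here is a minimal Python model of that selection order only. Real IPVS does this in the kernel, per connection. The class name and the extra backend addresses are hypothetical.

```python
from itertools import cycle


class RoundRobin:
    """Hand out real servers in turn, like the IPVS 'rr' scheduler."""

    def __init__(self, real_servers):
        self._next = cycle(real_servers)

    def pick(self):
        return next(self._next)


# hypothetical virtual service with three Pod backends
rr = RoundRobin(["10.244.84.176:80", "10.244.84.177:80", "10.244.247.3:80"])
for _ in range(5):
    print(rr.pick())  # .176, .177, .3, then .176 and .177 again
```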

Packet flow: client --> clusterIP (iptables/IPVS load-balancing rules) --> Pods spread across the nodes

Viewing the load-balancing rules:

iptables

iptables-save |grep <service-name>

ipvs

ipvsadm -L -n

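iptables vs. IPVS

| Mode | Characteristics |
| --- | --- |
| iptables | 1. Flexible and powerful. 2. Rules are matched and updated by traversing them one by one, so latency grows linearly with the number of rules. |
| IPVS | 1. Works in kernel space and looks services up in hash tables, giving better performance. 2. Rich scheduling algorithms: rr, wrr, lc, wlc, sh (source hashing, similar to ip hash), etc. |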
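The iptables row can be made concrete. In iptables mode, kube-proxy gives each Service a chain with one rule per endpoint. The rules are tried in order, and the `statistic` match fires with probability 1/n, 1/(n-1), ..., 1. The Python model below checks that this yields an even split and shows that a connection walks through several rules before it matches. It is an illustration, not kube-proxy code, and the endpoint names are hypothetical.

```python
import random
from collections import Counter
from fractions import Fraction


def build_rules(endpoints):
    """One rule per endpoint; rule i matches with probability 1/(n - i), so the last one always matches."""
    n = len(endpoints)
    return [(Fraction(1, n - i), ep) for i, ep in enumerate(endpoints)]


def exact_shares(rules):
    """Overall probability that each endpoint is chosen when rules are tried top to bottom."""
    shares, not_matched_yet = [], Fraction(1)
    for probability, endpoint in rules:
        shares.append((endpoint, not_matched_yet * probability))
        not_matched_yet *= 1 - probability
    return shares


def pick(rules, rng):
    """Simulate one new connection: walk the chain and stop at the first rule that matches."""
    for checked, (probability, endpoint) in enumerate(rules, start=1):
        if rng.random() < probability:
            return endpoint, checked
    raise AssertionError("unreachable: the last rule matches with probability 1")


rules = build_rules(["pod-a", "pod-b", "pod-c", "pod-d"])  # hypothetical endpoints
print([str(p) for p, _ in rules])  # ['1/4', '1/3', '1/2', '1']
print(exact_shares(rules))         # every endpoint ends up with 1/4
rng = random.Random(42)
results = [pick(rules, rng) for _ in range(10000)]
print(Counter(ep for ep, _ in results))
# expected value is (n + 1) / 2 = 2.5 rules per connection for n = 4
print("average rules checked:", sum(c for _, c in results) / len(results))
```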

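5. Service DNS names

The cluster DNS service (e.g. CoreDNS) watches the Kubernetes API and creates a DNS record for every Service so that its name can be resolved.

A record format for a ClusterIP Service: <service-name>.<namespace-name>.svc.cluster.local

Example: my-svc.my-namespace.svc.cluster.local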
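Here is a small Python sketch of how these names are formed and why short names work inside Pods. It assumes the default cluster domain `cluster.local`. It also assumes the search list the kubelet typically writes into a Pod's `/etc/resolv.conf` (`<namespace>.svc.cluster.local svc.cluster.local cluster.local`). The function names are hypothetical.

```python
def service_fqdn(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """A-record name for a ClusterIP Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def search_candidates(name: str, pod_namespace: str, cluster_domain: str = "cluster.local") -> list:
    """Names tried, in order, when a Pod looks up a short name (assumed typical search list)."""
    search = [
        f"{pod_namespace}.svc.{cluster_domain}",
        f"svc.{cluster_domain}",
        cluster_domain,
    ]
    return [f"{name}.{domain}" for domain in search]


print(service_fqdn("my-svc", "my-namespace"))
# my-svc.my-namespace.svc.cluster.local
print(search_candidates("my-svc", "my-namespace")[0])
# my-svc.my-namespace.svc.cluster.local  (same namespace: the bare name is enough)
print(search_candidates("my-svc.other-ns", "my-namespace")[1])
# my-svc.other-ns.svc.cluster.local      (other namespace: <service>.<namespace>)
```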

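Summary:

1. Expose applications with NodePort and put a load balancer in front of them as a single entry point.

2. Prefer the IPVS proxy mode.

3. Inside the cluster, access applications by their DNS names.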

-------------------------------------------------------

Kubernetes-Ingress

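1. Ingress exists to make up for the shortcomings of NodePort

Shortcomings of NodePort:

1. Each port can be used by only one Service, so ports have to be planned in advance.

2. Only layer-4 load balancing is supported.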
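To illustrate the first limitation, here is a toy Python allocator. Each nodePort belongs to exactly one Service cluster-wide, so a second Service cannot reuse it. The class is hypothetical. The range assumes the default 30000-32767. The lowest-free-port choice is a simplification, since the real API server uses its own allocation strategy.

```python
class NodePortAllocator:
    """Toy model: a nodePort in the range can be owned by only one Service."""

    def __init__(self, low=30000, high=32767):
        self.low, self.high = low, high
        self.owners = {}  # nodePort -> Service name

    def allocate(self, service, port=None):
        if port is None:
            # lowest free port; kept simple for the illustration
            port = next((p for p in range(self.low, self.high + 1) if p not in self.owners), None)
            if port is None:
                raise RuntimeError("no free nodePort left")
        if not self.low <= port <= self.high:
            raise ValueError(f"nodePort {port} is outside {self.low}-{self.high}")
        if port in self.owners:
            raise ValueError(f"nodePort {port} is already used by {self.owners[port]}")
        self.owners[port] = service
        return port


ports = NodePortAllocator()
print(ports.allocate("web", 30012))   # 30012
print(ports.allocate("java-demo"))    # 30000
try:
    ports.allocate("another-web", 30012)
except ValueError as err:
    print(err)                        # nodePort 30012 is already used by web
```

An Ingress Controller sidesteps this limitation. Many host names share ports 80/443 and are told apart at layer 7.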

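2. Relationship between Pods and Ingress

1. Pods are associated through a Service, and the Ingress Controller load-balances traffic across them.

2. Supports TCP/UDP (layer 4) and HTTP (layer 7).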

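3. Deploying the Ingress Controller

1. Deploy the Ingress Controller

2. Create Ingress rules

There are many Ingress Controller implementations. Here we use the NGINX controller maintained by the Kubernetes project.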

GitHub repository:

https://github.com/kubernetes/ingress-nginx

Download the manifest to the master node:

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/nginx-0.30.0/deploy/static/mandatory.yaml

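Notes:

1. Change the image address. If the node can reach the external registry you can leave it unchanged, but the default image is usually hard to pull. A mirror is available at lizhenliang/nginx-ingress-controller:0.30.0.

2. Exposing the controller directly on the host network is recommended: hostNetwork: true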

Set/view node labels

    # format
    kubectl label nodes <node-name> <label-key>=<label-value>
    # example
    kubectl label nodes k8s-node1 disktype=ssd
    # verify
    kubectl get nodes --show-labels

Modify mandatory.yaml

    # mainly the Deployment resource
    tolerations:            # the only edge node here is the master, so a toleration is required
    - operator: "Exists"
    nodeSelector:           # pin the Pod to the edge node
      #disktype: ssd
      kubernetes.io/os: linux
      #kubernetes.io/hostname: k8s-master
    hostNetwork: true       # run the Pod in the host's network namespace
    containers:
      - name: nginx-ingress-controller
        image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.26.1
        args:
          - /nginx-ingress-controller
          - --configmap=$(POD_NAMESPACE)/nginx-configuration
          - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
          - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
          - --publish-service=$(POD_NAMESPACE)/ingress-nginx
          - --annotations-prefix=nginx.ingress.kubernetes.io   # must match the annotation prefix used by the Ingress resources created later
        securityContext:

Other popular controllers

1. Traefik: HTTP reverse proxy and load balancer

2. Istio: service governance and control of ingress traffic

Start the Controller

    kubectl apply -f mandatory.yaml
    # check the Pod status
    kubectl get pods -n ingress-nginx -o wide
    kubectl describe pod xxxx -n ingress-nginx

Set up service discovery

1. Start a Pod

    # export the YAML file (or create the Deployment directly)
    kubectl create deploy java-demo --image=lizhenliang/java-demo -o yaml --dry-run=client > java-demo.yaml
    kubectl apply -f java-demo.yaml

2. Create a Service for the Pod

    kubectl expose deployment java-demo --port=80 --target-port=8080 --type=NodePort -o yaml --dry-run=client > java-demo-svc.yaml
    kubectl apply -f java-demo-svc.yaml

Contents of java-demo-svc.yaml:

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: java-demo
      name: java-demo
    spec:
      ports:
      - port: 80
        protocol: TCP
        targetPort: 8080
      selector:
        app: java-demo
      type: NodePort

3. Create an Ingress rule (HTTP)

cat java-demo-ingress.yaml

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: snippet-test
      annotations:
        # the nginx.ingress.kubernetes.io prefix must match --annotations-prefix in mandatory.yaml
        nginx.ingress.kubernetes.io/server-snippet: |
          if ($http_user_agent ~* '(Android|iPhone)') {
            rewrite ^/(.*) http://m.baidu.com break;
          }
    spec:
      rules:
      - host: foo.bar.com
        http:
          paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: java-demo
                port:
                  number: 80

Apply the Ingress resource

kubectl apply -f java-demo-ingress.yaml
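The way the controller applies this rule can be approximated in Python. The sketch assumes Kubernetes `Prefix` semantics: path elements are compared on `/` boundaries and the longest match wins. The `server-snippet` is modeled as a case-insensitive User-Agent check. Because the `rewrite` target is an absolute URL, nginx answers it with a redirect (302 by default). The second rule (`/api` to `api-svc:80`) is hypothetical and added only to show longest-prefix matching. Unknown hosts fall through to a default backend that returns 404.

```python
import re

# server-snippet: if ($http_user_agent ~* '(Android|iPhone)') -- "~*" is a case-insensitive regex
MOBILE_UA = re.compile(r"(Android|iPhone)", re.IGNORECASE)

# (host, path, backend); every rule uses pathType: Prefix
RULES = [
    ("foo.bar.com", "/", "java-demo:80"),   # from the Ingress above
    ("foo.bar.com", "/api", "api-svc:80"),  # hypothetical extra rule
]


def prefix_match(rule_path: str, request_path: str) -> bool:
    """Prefix matching on path elements: /api matches /api and /api/users but not /apiv2."""
    rule_parts = [p for p in rule_path.split("/") if p]
    request_parts = [p for p in request_path.split("/") if p]
    return request_parts[: len(rule_parts)] == rule_parts


def route(host: str, path: str, user_agent: str = "") -> str:
    if host not in {rule_host for rule_host, _, _ in RULES}:
        return "404 default backend"
    # the snippet runs at server level, before a location is selected
    if MOBILE_UA.search(user_agent):
        return "302 http://m.baidu.com"
    matches = [(p, backend) for h, p, backend in RULES if h == host and prefix_match(p, path)]
    if not matches:
        return "404 default backend"
    # the longest matching path wins
    return max(matches, key=lambda m: len(m[0]))[1]


print(route("foo.bar.com", "/", "Mozilla/5.0 (iPhone; CPU iPhone OS 16_0 like Mac OS X)"))  # 302 http://m.baidu.com
print(route("foo.bar.com", "/index.html", "curl/7.29.0"))  # java-demo:80
print(route("foo.bar.com", "/api/users"))                  # api-svc:80
print(route("foo.bar.com", "/apiv2"))                      # java-demo:80
print(route("example.com", "/"))                           # 404 default backend
```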

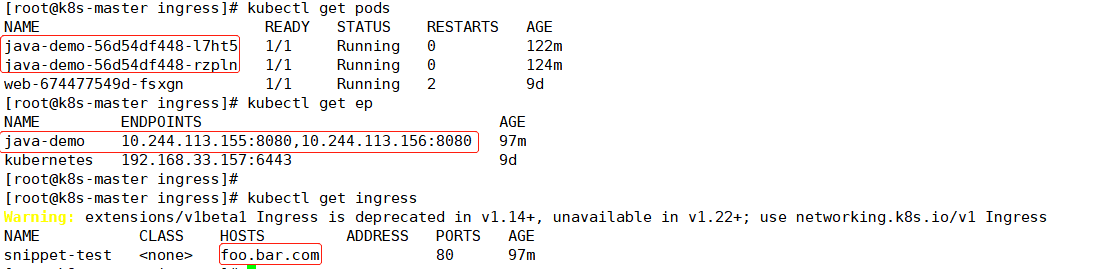

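4. Scale out the Pod replicas and check the Ingress and Service resources

    # scale out to 2 replicas
    kubectl scale deploy java-demo --replicas=2
    # check that both Pods are now behind the Service (its endpoints)
    kubectl get ep
    # view the Ingress resources
    kubectl get ingress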

Access test:

Add a hosts entry that resolves foo.bar.com to the IP of the node running the Ingress Controller, then:

curl foo.bar.com
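The hosts entry matters because the controller chooses the virtual host from the HTTP `Host` header, not from the IP address it was reached on. The Python sketch below illustrates this. A local stub server (hypothetical, standing in for the controller) echoes back the Host header it receives. `get_with_host` (also hypothetical) connects to an IP while sending `Host: foo.bar.com`. Against a real cluster you would pass the node's IP and port 80. `curl -H "Host: foo.bar.com" http://<node-ip>/` does the same without editing the hosts file.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class EchoHostHandler(BaseHTTPRequestHandler):
    """Stand-in for the Ingress Controller: answers with the Host header it received."""

    def do_GET(self):
        body = self.headers.get("Host", "").encode()
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):  # silence per-request logging
        pass


def start_stub() -> HTTPServer:
    """Start the stand-in on a free local port in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), EchoHostHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def get_with_host(node_ip: str, port: int, host: str, path: str = "/") -> str:
    """Connect to node_ip but send `host` in the Host header, as a hosts entry plus `curl host` would."""
    conn = http.client.HTTPConnection(node_ip, port, timeout=5)
    try:
        conn.request("GET", path, headers={"Host": host})
        return conn.getresponse().read().decode()
    finally:
        conn.close()


stub = start_stub()
print(get_with_host("127.0.0.1", stub.server_address[1], "foo.bar.com"))  # foo.bar.com
stub.shutdown()
stub.server_close()
```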

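An Ingress rule is equivalent to a name-based virtual host in NGINX, except that it is generated automatically with no manual intervention:

    upstream web {
        server pod1;
        server pod2;
    }
    server {
        listen 80;
        server_name foo.bar.com;
        # for HTTPS: listen 443 ssl; plus ssl_certificate / ssl_certificate_key
        location / {
            proxy_pass http://web;
        }
    }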
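What the controller automates is essentially templating. It watches Ingress rules and the Service's endpoints, renders configuration, and applies it. Below is a minimal Python sketch that renders a block like the one above from the rule's host and the Pod endpoints. `render_vhost` and the endpoint addresses are hypothetical, and the real ingress-nginx template is far larger.

```python
def render_vhost(upstream: str, host: str, pod_endpoints: list, listen: int = 80) -> str:
    """Render a minimal name-based virtual host: one upstream of Pods, one server block."""
    servers = "".join(f"    server {ep};\n" for ep in pod_endpoints)
    return (
        f"upstream {upstream} {{\n"
        f"{servers}"
        "}\n"
        "server {\n"
        f"    listen {listen};\n"
        f"    server_name {host};\n"
        "    location / {\n"
        f"        proxy_pass http://{upstream};\n"
        "    }\n"
        "}\n"
    )


# hypothetical Pod endpoints after scaling java-demo to 2 replicas
print(render_vhost("web", "foo.bar.com", ["10.244.1.10:8080", "10.244.2.11:8080"]))
```

Scaling the Deployment changes only the endpoint list passed in. This is why the Ingress rule itself never needs editing when replicas are added.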

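User --> domain name --> load balancer (public IP) --> Ingress Controller (NGINX load-balances) --> Pod

Create an Ingress rule (HTTPS)

HTTPS is implemented with certificates, which are either self-signed or issued by a certificate authority (CA).

Here a self-signed certificate is used to encrypt traffic over HTTPS.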

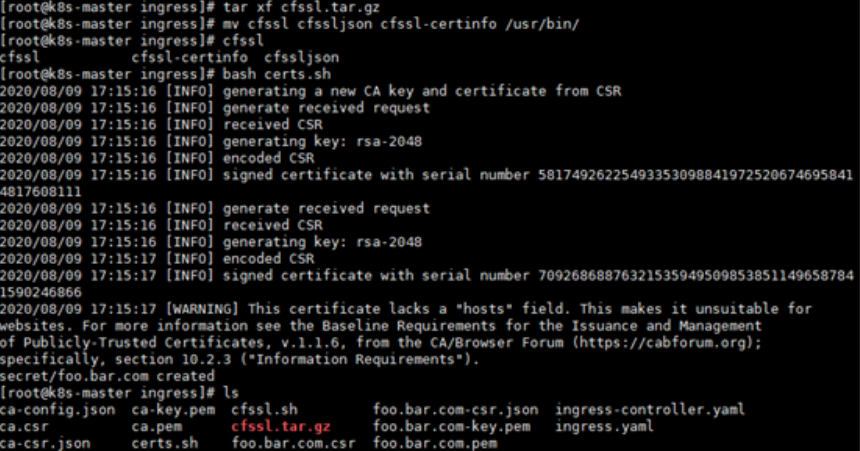

Install the certificate tools (cfssl)

    wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
    wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
    wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
    chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64
    mv cfssl_linux-amd64 /usr/local/bin/cfssl
    mv cfssljson_linux-amd64 /usr/local/bin/cfssljson
    mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

Generate the certificate with a script

    [root@k8s-master ~]# unzip ingress-https.zip
    [root@k8s-master ~]# cd ingress/
    [root@k8s-master ingress]# ls
    certs.sh  cfssl.sh  ingress-controller.yaml  ingress.yaml

Change the domain name in certs.sh to the one you want to use, then generate the certificate:

cd ingress/ && bash certs.sh   # the script stores the certificate in a Secret that the Ingress can reference

View the stored certificate

kubectl get secret

Configure HTTPS access with the certificate

cat ingress-https.yaml

    apiVersion: networking.k8s.io/v1beta1
    kind: Ingress
    metadata:
      name: tls-example-ingress
    spec:
      tls:                       # this tls block (four lines) adds the HTTPS certificate
      - hosts:
        - foo.bar.com
        secretName: foo.bar.com  # name of the Secret that holds the certificate
      rules:
      - host: foo.bar.com
        http:
          paths:
          - path: /
            backend:
              serviceName: java-demo
              servicePort: 80

Delete the HTTP rule; leaving it in place may interfere with the HTTPS rule.

kubectl delete -f ingress.yaml

Apply the HTTPS rule

kubectl apply -f ingress-https.yaml

kubectl get ing

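Test access at https://foo.bar.com (the certificate is self-signed, so browsers show a warning and curl needs -k)

Full contents of the modified mandatory.yaml (the changed parts, highlighted in orange in the original notes, are the tolerations, nodeSelector and hostNetwork settings of the Deployment):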

    apiVersion: v1
    kind: Namespace
    metadata:
      name: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: nginx-configuration
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: tcp-services
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: udp-services
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nginx-ingress-serviceaccount
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRole
    metadata:
      name: nginx-ingress-clusterrole
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    rules:
      - apiGroups:
          - ""
        resources:
          - configmaps
          - endpoints
          - nodes
          - pods
          - secrets
        verbs:
          - list
          - watch
      - apiGroups:
          - ""
        resources:
          - nodes
        verbs:
          - get
      - apiGroups:
          - ""
        resources:
          - services
        verbs:
          - get
          - list
          - watch
      - apiGroups:
          - ""
        resources:
          - events
        verbs:
          - create
          - patch
      - apiGroups:
          - "extensions"
          - "networking.k8s.io"
        resources:
          - ingresses
        verbs:
          - get
          - list
          - watch
      - apiGroups:
          - "extensions"
          - "networking.k8s.io"
        resources:
          - ingresses/status
        verbs:
          - update
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: Role
    metadata:
      name: nginx-ingress-role
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    rules:
      - apiGroups:
          - ""
        resources:
          - configmaps
          - pods
          - secrets
          - namespaces
        verbs:
          - get
      - apiGroups:
          - ""
        resources:
          - configmaps
        resourceNames:
          # Defaults to "<election-id>-<ingress-class>"
          # Here: "<ingress-controller-leader>-<nginx>"
          # This has to be adapted if you change either parameter
          # when launching the nginx-ingress-controller.
          - "ingress-controller-leader-nginx"
        verbs:
          - get
          - update
      - apiGroups:
          - ""
        resources:
          - configmaps
        verbs:
          - create
      - apiGroups:
          - ""
        resources:
          - endpoints
        verbs:
          - get
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: RoleBinding
    metadata:
      name: nginx-ingress-role-nisa-binding
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: nginx-ingress-role
    subjects:
      - kind: ServiceAccount
        name: nginx-ingress-serviceaccount
        namespace: ingress-nginx
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRoleBinding
    metadata:
      name: nginx-ingress-clusterrole-nisa-binding
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: nginx-ingress-clusterrole
    subjects:
      - kind: ServiceAccount
        name: nginx-ingress-serviceaccount
        namespace: ingress-nginx
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-ingress-controller
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    spec:
      replicas: 1
      selector:
        matchLabels:
          app.kubernetes.io/name: ingress-nginx
          app.kubernetes.io/part-of: ingress-nginx
      template:
        metadata:
          labels:
            app.kubernetes.io/name: ingress-nginx
            app.kubernetes.io/part-of: ingress-nginx
          annotations:
            prometheus.io/port: "10254"
            prometheus.io/scrape: "true"
        spec:
          # wait up to five minutes for the drain of connections
          terminationGracePeriodSeconds: 300
          serviceAccountName: nginx-ingress-serviceaccount
          tolerations:          # the only edge node here is the master, so a toleration is required
          - operator: "Exists"
          nodeSelector:         # pin the Pod to the edge node
            #disktype: ssd
            kubernetes.io/os: linux
            #kubernetes.io/hostname: k8s-master
          hostNetwork: true     # run the Pod in the host's network namespace
          containers:
            - name: nginx-ingress-controller
              image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.26.1
              args:
                - /nginx-ingress-controller
                - --configmap=$(POD_NAMESPACE)/nginx-configuration
                - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
                - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
                - --publish-service=$(POD_NAMESPACE)/ingress-nginx
                - --annotations-prefix=nginx.ingress.kubernetes.io   # must match the annotation prefix used by the Ingress resources created later
              securityContext:
                allowPrivilegeEscalation: true
                capabilities:
                  drop:
                    - ALL
                  add:
                    - NET_BIND_SERVICE
                # www-data -> 33
                runAsUser: 33
              env:
                - name: POD_NAME
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.name
                - name: POD_NAMESPACE
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.namespace
              ports:
                - name: http
                  containerPort: 80
                - name: https
                  containerPort: 443
              livenessProbe:
                failureThreshold: 3
                httpGet:
                  path: /healthz
                  port: 10254
                  scheme: HTTP
                initialDelaySeconds: 10
                periodSeconds: 10
                successThreshold: 1
                timeoutSeconds: 10
              readinessProbe:
                failureThreshold: 3
                httpGet:
                  path: /healthz
                  port: 10254
                  scheme: HTTP
                periodSeconds: 10
                successThreshold: 1
                timeoutSeconds: 10
              lifecycle:
                preStop:
                  exec:
                    command:
                      - /wait-shutdown
    ---