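Bilinear Models (2): NTN, SLM, SME

Continuing with bilinear models. The more I read, the more it seems that these "bilinear" models could all be classified as neural network (NN) models. Many of the models that survey sections list as bilinear turn out, on reading the original papers, to be better described as NN models; they are called bilinear only because their scoring functions use a bilinear form. When I write my thesis, I should recategorize these models.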

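NTN (Neural Tensor Network)

[Paper] Reasoning With Neural Tensor Networks for Knowledge Base Completion

[Overview] This is 2013 work from the Stanford group that includes Danqi Chen (Socher, Chen, Manning, and Ng), published at NIPS 2013. It proposes the Neural Tensor Network (NTN), a neural framework for knowledge base completion whose network structure and scoring function contain both a bilinear term and a linear term, and it represents each entity as the average of its word vectors.

Intro and Related Work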

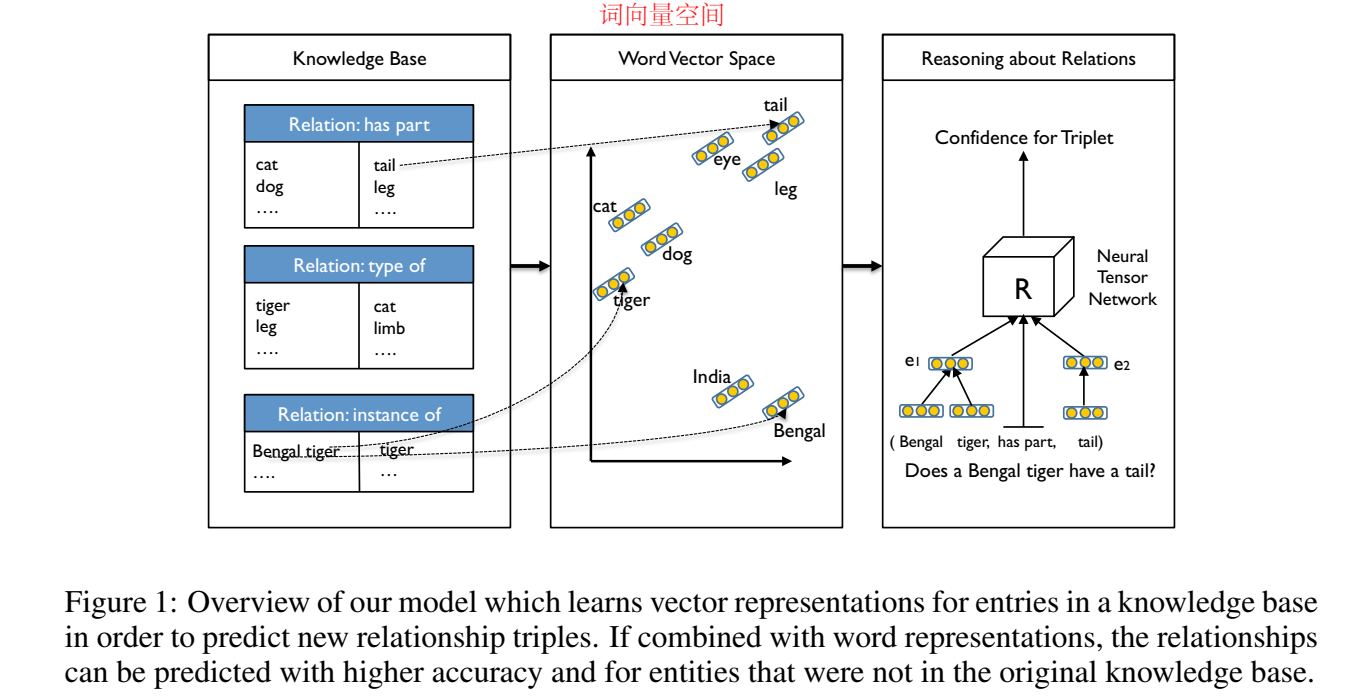

NTN 的模型结构图如下:

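Words are first mapped to vectors in the word-vector space; each entity is then represented as a composition of its words and fed into the neural tensor network, which outputs a confidence score.

The related work section is also worth studying: each paragraph introduces one family of methods and explains how the proposed method relates to it.

The paper also notes that NTN can be seen as a way of learning a tensor factorization, similar to RESCAL.

Model

Score Function Definition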

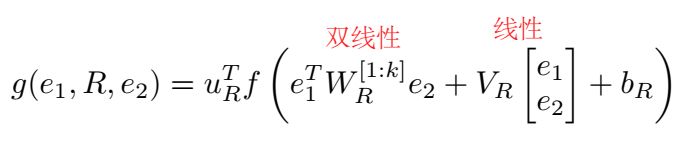

NTN defines the score function as:

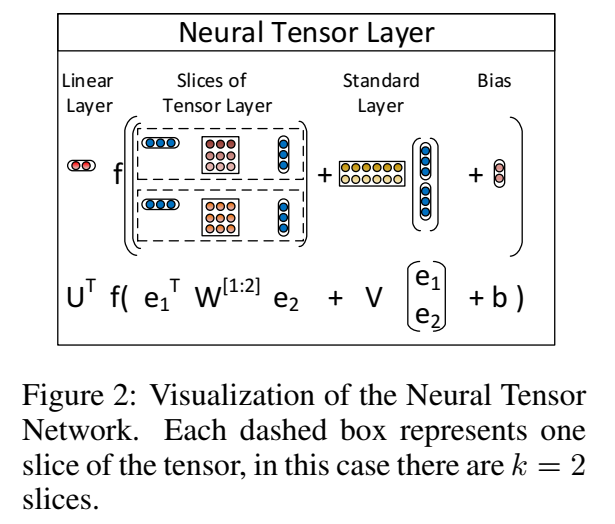

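Here f is the tanh nonlinearity and \(W_R\) is a tensor. In the model diagram:

The warm-colored matrix inside the dashed box is one slice of the relation-specific tensor \(W_R\); each slice models an interaction between the head and tail entities.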
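As a plain-text counterpart to the formula image, the score function in the NTN paper is

\[ g(e_1, R, e_2) = u_R^\top f\left( e_1^\top W_R^{[1:k]} e_2 + V_R \begin{bmatrix} e_1 \\ e_2 \end{bmatrix} + b_R \right) \]

where each of the k slices of \(W_R\) is a d×d matrix. The following is a minimal sketch of that formula in plain Python; the function names and the toy numbers are my own illustrative assumptions, not taken from the paper's code.

```python
import math

def bilinear_form(e1, W, e2):
    """Return e1^T W e2 for a single d x d slice W."""
    return sum(e1[i] * W[i][j] * e2[j]
               for i in range(len(e1)) for j in range(len(e2)))

def ntn_score(e1, e2, W_slices, V, b, u):
    """Compute u^T tanh(e1^T W^[1:k] e2 + V [e1; e2] + b)."""
    concat = e1 + e2  # list concatenation gives [e1; e2], length 2d
    hidden = []
    for W_k, V_k, b_k in zip(W_slices, V, b):
        bilinear = bilinear_form(e1, W_k, e2)             # entity interaction
        linear = sum(v * x for v, x in zip(V_k, concat))  # standard layer
        hidden.append(math.tanh(bilinear + linear + b_k))
    return sum(u_k * h_k for u_k, h_k in zip(u, hidden))

# Toy example with d = 2 and k = 2 slices (hypothetical values).
e1 = [1.0, 0.0]
e2 = [0.0, 1.0]
W_slices = [[[0.0, 1.0], [0.0, 0.0]],   # first slice couples e1[0] with e2[1]
            [[0.0, 0.0], [0.0, 0.0]]]   # second slice is all zeros
V = [[0.0, 0.0, 0.0, 0.0],
     [0.5, 0.0, 0.0, 0.5]]
b = [0.0, 0.0]
u = [1.0, 1.0]
score = ntn_score(e1, e2, W_slices, V, b, u)
print(score)
```

In this toy setup the first hidden unit is driven only by the bilinear term and the second only by the linear term, which makes the two contributions easy to tell apart.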

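Related Models and Special Cases of NTN

This part covers models related to NTN and the forms NTN reduces to in special cases.

- Distance Model (the translation-style model SE, covered earlier)

The drawback of this model is that the two entities do not interact.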

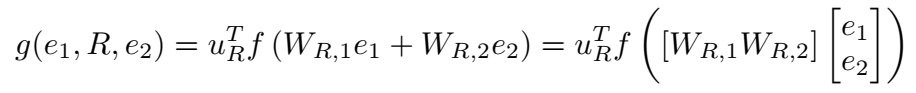

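- Single Layer Model (SLM)

SLM is a standard single-layer network, i.e. NTN with the bilinear part removed (the tensor set to 0). LFM, which I read yesterday, is a purely bilinear function with no linear part, which explains why some papers describe NTN as a combination of SLM and LFM.

- Hadamard Model

This is the UM proposed by Antoine Bordes in 2012; it can be seen as a transitional model between linear and bilinear.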

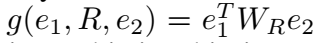

- Bilinear Model

This is the LFM bilinear model, with no linear transformation.

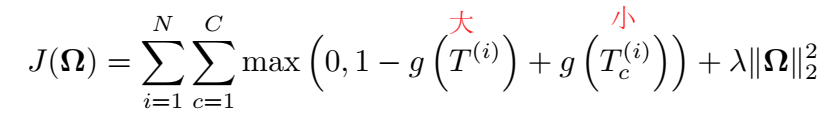
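The relationship between these models and NTN can be checked numerically. The sketch below, in plain Python with made-up toy parameters, implements the distance, single-layer and bilinear scores as I understand them from the NTN paper, and shows that NTN with an all-zero tensor and \(V = [W_1 \mid W_2]\) gives the same score as SLM. All names and values are illustrative assumptions.

```python
import math

def matvec(M, x):
    """Matrix-vector product for nested lists."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]

def distance_score(e1, e2, W1, W2):
    """Distance model: L1 distance between the two projected entities."""
    return sum(abs(a - b) for a, b in zip(matvec(W1, e1), matvec(W2, e2)))

def slm_score(e1, e2, W1, W2, u):
    """Single Layer Model: u^T tanh(W1 e1 + W2 e2)."""
    hidden = [math.tanh(a + b) for a, b in zip(matvec(W1, e1), matvec(W2, e2))]
    return sum(u_k * h_k for u_k, h_k in zip(u, hidden))

def bilinear_score(e1, e2, W):
    """Bilinear model: e1^T W e2, with no linear term and no nonlinearity."""
    return sum(a * b for a, b in zip(e1, matvec(W, e2)))

def ntn_score(e1, e2, W_slices, V, b, u):
    """NTN: u^T tanh(e1^T W^[1:k] e2 + V [e1; e2] + b)."""
    concat = e1 + e2
    hidden = [
        math.tanh(bilinear_score(e1, e2, W_k)
                  + sum(v * x for v, x in zip(V_k, concat)) + b_k)
        for W_k, V_k, b_k in zip(W_slices, V, b)
    ]
    return sum(u_k * h_k for u_k, h_k in zip(u, hidden))

# Hypothetical toy parameters (d = 2, k = 2).
e1, e2 = [1.0, 2.0], [0.5, -1.0]
W1 = [[1.0, 0.0], [0.0, 1.0]]
W2 = [[0.0, 1.0], [1.0, 0.0]]
u = [1.0, -1.0]

zero_slice = [[0.0, 0.0], [0.0, 0.0]]
V = [r1 + r2 for r1, r2 in zip(W1, W2)]  # V = [W1 | W2], shape k x 2d
ntn_as_slm = ntn_score(e1, e2, [zero_slice, zero_slice], V, [0.0, 0.0], u)
slm = slm_score(e1, e2, W1, W2, u)
dist = distance_score(e1, e2, W1, W2)
bil = bilinear_score(e1, e2, W1)
print(ntn_as_slm, slm, dist, bil)
```

The first two printed values coincide, which is the "SLM is NTN without the tensor" statement in concrete form.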

Training Objective
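For reference, the NTN paper trains with a contrastive max-margin objective: each true triple should score at least a margin of 1 higher than a corrupted triple in which an entity has been replaced at random, plus L2 regularization on all parameters. The sketch below is a minimal plain-Python version under that reading; the function name, argument layout and toy numbers are my own assumptions. Note that the paper treats a higher score as more plausible, whereas the Pykg2vec code quoted later returns the negated score.

```python
def max_margin_loss(pos_scores, neg_scores, params, lam=1e-4, margin=1.0):
    """Sum of hinge terms over (true, corrupted) pairs plus an L2 penalty.

    Convention here: higher score = more plausible triple.
    """
    hinge = sum(max(0.0, margin - p + n)
                for p, n in zip(pos_scores, neg_scores))
    l2 = sum(w * w for w in params)
    return hinge + lam * l2

# Toy values (hypothetical): the first pair already satisfies the margin,
# the second pair does not and contributes to the loss.
pos_scores = [2.0, 0.3]
neg_scores = [0.5, 0.4]
params = [1.0, -2.0]
loss = max_margin_loss(pos_scores, neg_scores, params, lam=0.1)
print(loss)
```

Only pairs that violate the margin produce a gradient, so well-separated triples stop influencing training.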

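Entity Representation Initialization

Entity vectors are the average of their word vectors. The paper also tried an RNN for composing the words, and simple averaging performed about the same.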

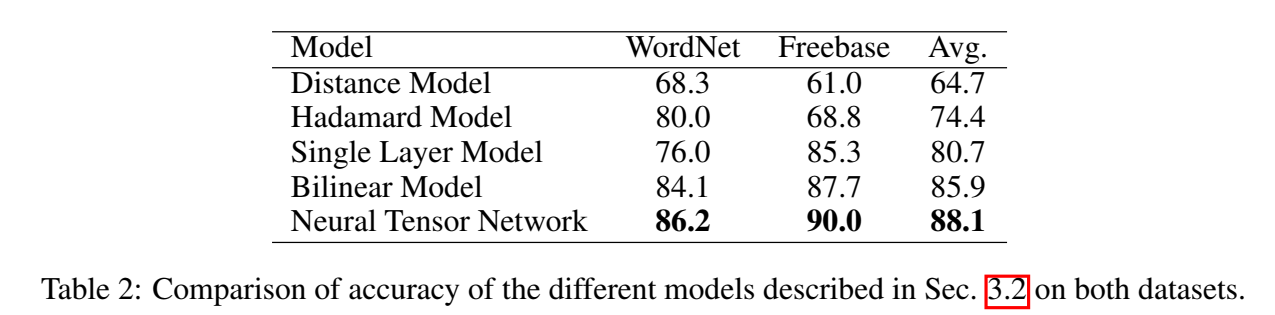
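The averaging step is simple enough to sketch directly. The snippet below uses plain Python with a tiny made-up vocabulary; the helper name and the vector values are illustrative assumptions. It shows how two entities that share a word end up with related representations.

```python
def average_word_vectors(entity_name, word_vectors):
    """Represent an entity as the mean of the vectors of its words."""
    words = entity_name.split()
    dim = len(word_vectors[words[0]])
    total = [0.0] * dim
    for word in words:
        for i, value in enumerate(word_vectors[word]):
            total[i] += value
    return [value / len(words) for value in total]

# Hypothetical 3-dimensional word vectors.
word_vectors = {
    "homo": [1.0, 0.0, 2.0],
    "sapiens": [0.0, 1.0, 4.0],
    "erectus": [0.0, 3.0, 0.0],
}
homo_sapiens = average_word_vectors("homo sapiens", word_vectors)
homo_erectus = average_word_vectors("homo erectus", word_vectors)
print(homo_sapiens, homo_erectus)
```

Because the vector for "homo" is shared, anything learned about one entity also moves the other, which is the motivation for composing entities from words.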

Experiments

Relation Triple Classification

Results on the full datasets:

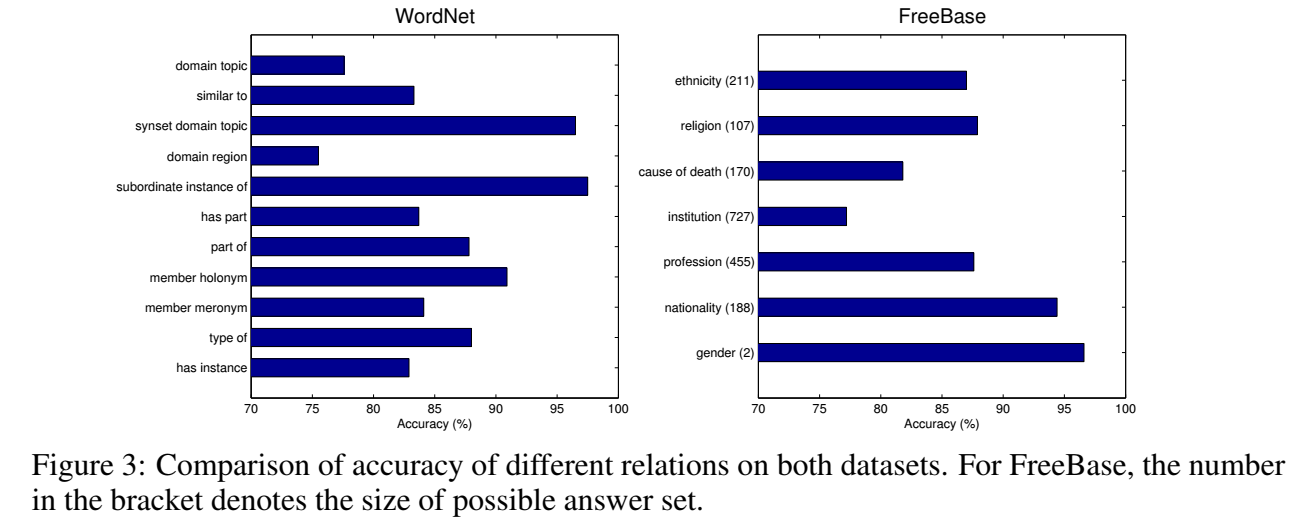

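Results per relation:

Classification accuracy varies across relations. The paper's explanation is that some relations have vague semantics, which makes them hard to infer, so accuracy is lower on those relations.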

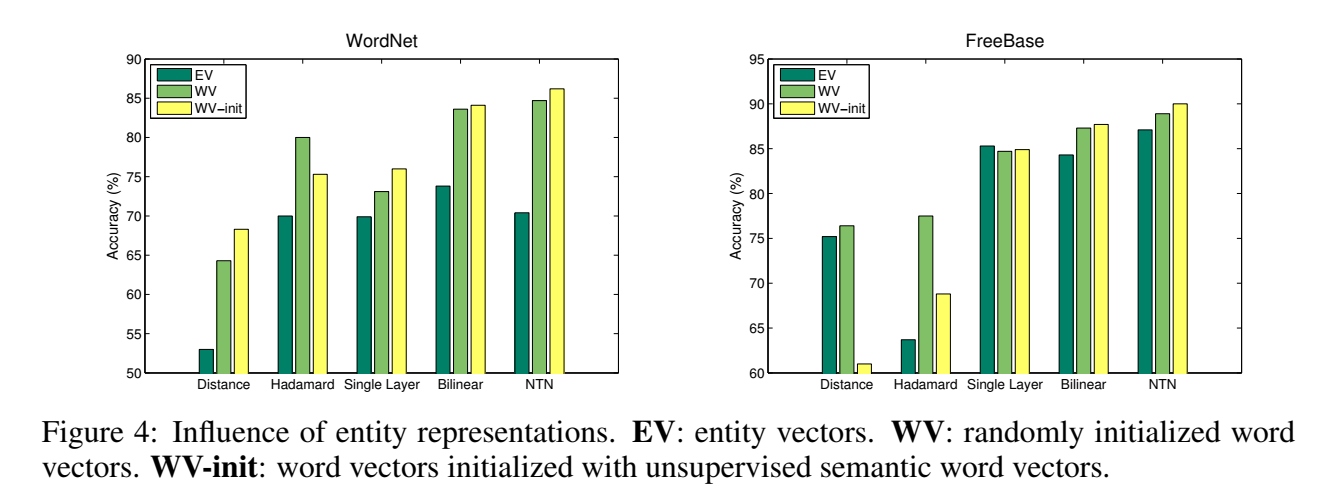

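The paper also compares three ways of obtaining entity vectors: entity vectors learned directly without word vectors (EV); randomly initialized word vectors (WV); and word vectors initialized from vectors pretrained on an unlabeled corpus (WV-init).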

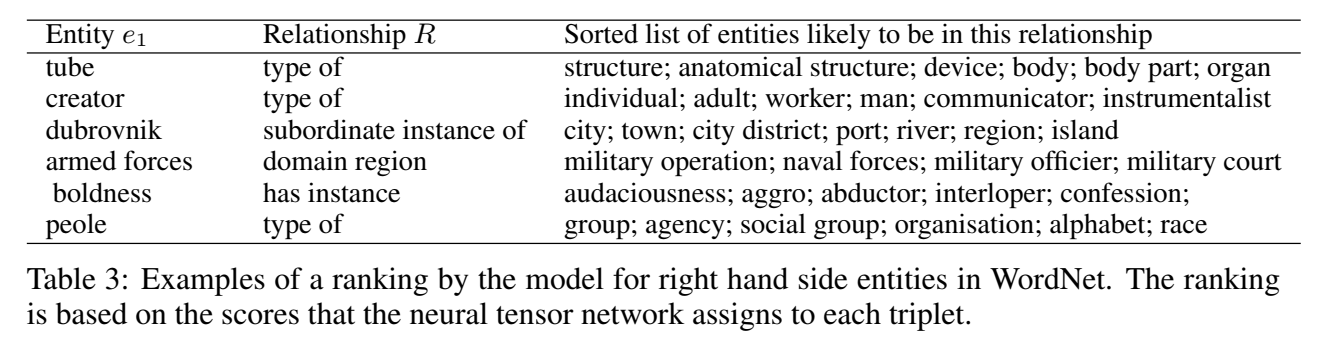

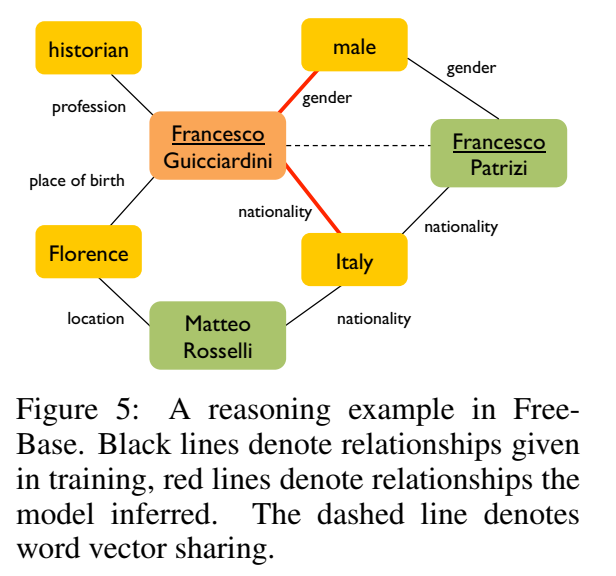

Case Study

Code

The code link given in the paper is dead. Pykg2vec provides an implementation of NTN:

    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    # PairwiseModel, NamedEmbedding and Criterion are Pykg2vec's own classes.


    class NTN(PairwiseModel):
        """
        `Reasoning With Neural Tensor Networks for Knowledge Base Completion`_ (NTN) is
        a neural tensor network which represents entities as an average of their constituting
        word vectors. It then projects entities to their vector embeddings
        in the input layer. The two entities are then combined and mapped to a non-linear hidden layer.

        Portion of the code based on `siddharth-agrawal`_.

        Args:
            config (object): Model configuration parameters.

        .. _siddharth-agrawal:
            https://github.com/siddharth-agrawal/Neural-Tensor-Network/blob/master/neuralTensorNetwork.py

        .. _Reasoning With Neural Tensor Networks for Knowledge Base Completion:
            https://nlp.stanford.edu/pubs/SocherChenManningNg_NIPS2013.pdf
        """

        def __init__(self, **kwargs):
            super(NTN, self).__init__(self.__class__.__name__.lower())
            param_list = ["tot_entity", "tot_relation", "ent_hidden_size", "rel_hidden_size", "lmbda"]
            param_dict = self.load_params(param_list, kwargs)
            self.__dict__.update(param_dict)

            self.ent_embeddings = NamedEmbedding("ent_embedding", self.tot_entity, self.ent_hidden_size)
            self.rel_embeddings = NamedEmbedding("rel_embedding", self.tot_relation, self.rel_hidden_size)
            # mr1 / mr2: linear maps for head and tail; br: bias; mr: the flattened tensor slices.
            self.mr1 = NamedEmbedding("mr1", self.ent_hidden_size, self.rel_hidden_size)
            self.mr2 = NamedEmbedding("mr2", self.ent_hidden_size, self.rel_hidden_size)
            self.br = NamedEmbedding("br", 1, self.rel_hidden_size)
            self.mr = NamedEmbedding("mr", self.rel_hidden_size, self.ent_hidden_size*self.ent_hidden_size)

            nn.init.xavier_uniform_(self.ent_embeddings.weight)
            nn.init.xavier_uniform_(self.rel_embeddings.weight)
            nn.init.xavier_uniform_(self.mr1.weight)
            nn.init.xavier_uniform_(self.mr2.weight)
            nn.init.xavier_uniform_(self.br.weight)
            nn.init.xavier_uniform_(self.mr.weight)

            self.parameter_list = [
                self.ent_embeddings,
                self.rel_embeddings,
                self.mr1,
                self.mr2,
                self.br,
                self.mr,
            ]

            self.loss = Criterion.pairwise_hinge

        def train_layer(self, h, t):
            """ Defines the forward pass training layers of the algorithm.

            Args:
                h (Tensor): Head entity embeddings, shape [m, ent_hidden_size].
                t (Tensor): Tail entity embeddings, shape [m, ent_hidden_size].
            """
            # Linear part: [m, ent_hidden_size] x [ent_hidden_size, rel_hidden_size]
            mr1h = torch.matmul(h, self.mr1.weight)
            mr2t = torch.matmul(t, self.mr2.weight)

            # Bilinear part: h^T M_k t for each of the rel_hidden_size slices.
            expanded_h = h.unsqueeze(dim=0).repeat(self.rel_hidden_size, 1, 1) # [self.rel_hidden_size, m, self.ent_hidden_size]
            expanded_t = t.unsqueeze(dim=-1) # [m, self.ent_hidden_size, 1]

            temp = (torch.matmul(expanded_h, self.mr.weight.view(self.rel_hidden_size, self.ent_hidden_size, self.ent_hidden_size))).permute(1, 0, 2) # [m, self.rel_hidden_size, self.ent_hidden_size]
            htmrt = torch.squeeze(torch.matmul(temp, expanded_t), dim=-1) # [m, self.rel_hidden_size]

            return torch.tanh(htmrt + mr1h + mr2t + self.br.weight)

        def embed(self, h, r, t):
            """Function to get the embedding value.

            Args:
                h (Tensor): Head entities ids.
                r (Tensor): Relation ids of the triple.
                t (Tensor): Tail entity ids of the triple.

            Returns:
                Tensors: Returns head, relation and tail embedding Tensors.
            """
            emb_h = self.ent_embeddings(h)
            emb_r = self.rel_embeddings(r)
            emb_t = self.ent_embeddings(t)

            return emb_h, emb_r, emb_t

        def forward(self, h, r, t):
            h_e, r_e, t_e = self.embed(h, r, t)
            norm_h = F.normalize(h_e, p=2, dim=-1)
            norm_r = F.normalize(r_e, p=2, dim=-1)
            norm_t = F.normalize(t_e, p=2, dim=-1)
            # The relation embedding plays the role of the output vector u_R.
            return -torch.sum(norm_r*self.train_layer(norm_h, norm_t), -1)

        def get_reg(self, h, r, t):
            return self.lmbda*torch.sqrt(sum([torch.sum(torch.pow(var.weight, 2)) for var in self.parameter_list]))

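[Summary] The paper proposes the Neural Tensor Network (NTN), whose scoring function combines bilinear and linear operations; the bilinear part uses a tensor to capture interactions between head and tail entities. NTN initializes entity vectors with the average of pretrained word vectors.

SLM (Single Layer Model)

SLM was introduced in the NTN paper: it is the simplest single-layer model, the special case of NTN with the bilinear part removed.

Code

Again the Pykg2vec implementation: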

    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    # PairwiseModel, NamedEmbedding and Criterion are Pykg2vec's own classes.


    class SLM(PairwiseModel):
        """
        In `Reasoning With Neural Tensor Networks for Knowledge Base Completion`_,
        SLM model is designed as a baseline of Neural Tensor Network.
        The model constructs a nonlinear neural network to represent the score function.

        Args:
            config (object): Model configuration parameters.

        .. _Reasoning With Neural Tensor Networks for Knowledge Base Completion:
            https://nlp.stanford.edu/pubs/SocherChenManningNg_NIPS2013.pdf
        """

        def __init__(self, **kwargs):
            super(SLM, self).__init__(self.__class__.__name__.lower())
            param_list = ["tot_entity", "tot_relation", "rel_hidden_size", "ent_hidden_size"]
            param_dict = self.load_params(param_list, kwargs)
            self.__dict__.update(param_dict)

            self.ent_embeddings = NamedEmbedding("ent_embedding", self.tot_entity, self.ent_hidden_size)
            self.rel_embeddings = NamedEmbedding("rel_embedding", self.tot_relation, self.rel_hidden_size)
            self.mr1 = NamedEmbedding("mr1", self.ent_hidden_size, self.rel_hidden_size)
            self.mr2 = NamedEmbedding("mr2", self.ent_hidden_size, self.rel_hidden_size)

            nn.init.xavier_uniform_(self.ent_embeddings.weight)
            nn.init.xavier_uniform_(self.rel_embeddings.weight)
            nn.init.xavier_uniform_(self.mr1.weight)
            nn.init.xavier_uniform_(self.mr2.weight)

            self.parameter_list = [
                self.ent_embeddings,
                self.rel_embeddings,
                self.mr1,
                self.mr2,
            ]

            self.loss = Criterion.pairwise_hinge

        def embed(self, h, r, t):
            """Function to get the embedding value.

            Args:
                h (Tensor): Head entities ids.
                r (Tensor): Relation ids of the triple.
                t (Tensor): Tail entity ids of the triple.

            Returns:
                Tensors: Returns head, relation and tail embedding Tensors.
            """
            emb_h = self.ent_embeddings(h)
            emb_r = self.rel_embeddings(r)
            emb_t = self.ent_embeddings(t)

            return emb_h, emb_r, emb_t

        def forward(self, h, r, t):
            h_e, r_e, t_e = self.embed(h, r, t)
            norm_h = F.normalize(h_e, p=2, dim=-1)
            norm_r = F.normalize(r_e, p=2, dim=-1)
            norm_t = F.normalize(t_e, p=2, dim=-1)

            return -torch.sum(norm_r * self.layer(norm_h, norm_t), -1)

        def layer(self, h, t):
            """Defines the forward pass layer of the algorithm.

            Args:
                h (Tensor): Head entity embeddings, shape [m, d].
                t (Tensor): Tail entity embeddings, shape [m, d].
            """
            mr1h = torch.matmul(h, self.mr1.weight) # h => [m, d], self.mr1 => [d, k]
            mr2t = torch.matmul(t, self.mr2.weight) # t => [m, d], self.mr2 => [d, k]
            # Same as NTN.train_layer without the bilinear term and the bias.
            return torch.tanh(mr1h + mr2t)

SME (Semantic Matching Energy)

[Paper] A Semantic Matching Energy Function for Learning with Multi-relational Data

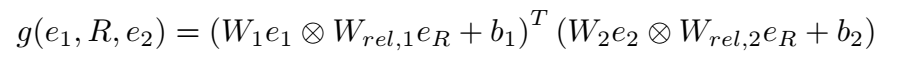

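[Overview] This paper by Antoine Bordes et al. appeared in the journal Machine Learning in 2014. The proposed model is identical to UM (Unstructured Model) from the translation-model literature, down to the variable notation; the only presentational difference is that the UM paper includes a diagram and the SME paper does not. The one substantive difference is that SME offers two forms of the g function that combines entities with the relation, linear and bilinear, whereas UM has only the bilinear form.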
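To make the two forms of g concrete, here is a minimal plain-Python sketch that mirrors the structure of the Pykg2vec code quoted in the next section: the linear form adds the two projections, the bilinear form multiplies them element-wise, and the energy is the negated dot product of the left and right terms. The helper names and the toy parameters (identity matrices, zero biases) are my own assumptions for illustration.

```python
def matvec(M, x):
    """Matrix-vector product for nested lists."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]

def g_linear(M_ent, M_rel, bias, entity, relation):
    """Linear form: M_ent * entity + M_rel * relation + bias."""
    return [a + b + c for a, b, c in
            zip(matvec(M_ent, entity), matvec(M_rel, relation), bias)]

def g_bilinear(M_ent, M_rel, bias, entity, relation):
    """Bilinear form: (M_ent * entity) element-wise times (M_rel * relation) + bias."""
    return [a * b + c for a, b, c in
            zip(matvec(M_ent, entity), matvec(M_rel, relation), bias)]

def energy(g_left, g_right):
    """Energy is the negated dot product of the (h, r) and (r, t) terms."""
    return -sum(a * b for a, b in zip(g_left, g_right))

# Hypothetical toy setup: identity projections and zero biases.
identity = [[1.0, 0.0], [0.0, 1.0]]
zero_bias = [0.0, 0.0]
h, r, t = [1.0, 2.0], [3.0, 4.0], [5.0, 6.0]

e_linear = energy(g_linear(identity, identity, zero_bias, h, r),
                  g_linear(identity, identity, zero_bias, t, r))
e_bilinear = energy(g_bilinear(identity, identity, zero_bias, h, r),
                    g_bilinear(identity, identity, zero_bias, t, r))
print(e_linear, e_bilinear)
```

In the linear form the relation only shifts each entity, while in the bilinear form it rescales each dimension, so the relation modulates the entity instead of merely being added to it.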

Code

The linear implementation:

    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    # PairwiseModel, NamedEmbedding and Criterion are Pykg2vec's own classes.


    class SME(PairwiseModel):
        """ `A Semantic Matching Energy Function for Learning with Multi-relational Data`_

        Semantic Matching Energy (SME) is an algorithm for embedding multi-relational data into vector spaces.
        SME conducts semantic matching using neural network architectures. Given a fact (h, r, t), it first projects
        entities and relations to their embeddings in the input layer. Later the relation r is combined with both h and t
        to get gu(h, r) and gv(r, t) in its hidden layer. The score is determined by calculating the matching score of gu and gv.

        There are two versions of SME: a linear version(SMELinear) as well as bilinear(SMEBilinear) version which differ in how the hidden layer is defined.

        Args:
            config (object): Model configuration parameters.

        Portion of the code based on glorotxa_.

        .. _glorotxa: https://github.com/glorotxa/SME/blob/master/model.py

        .. _A Semantic Matching Energy Function for Learning with Multi-relational Data: http://www.thespermwhale.com/jaseweston/papers/ebrm_mlj.pdf
        """

        def __init__(self, **kwargs):
            super(SME, self).__init__(self.__class__.__name__.lower())
            param_list = ["tot_entity", "tot_relation", "hidden_size"]
            param_dict = self.load_params(param_list, kwargs)
            self.__dict__.update(param_dict)

            self.ent_embeddings = NamedEmbedding("ent_embedding", self.tot_entity, self.hidden_size)
            self.rel_embeddings = NamedEmbedding("rel_embedding", self.tot_relation, self.hidden_size)
            # mu*/bu parameterize gu(h, r); mv*/bv parameterize gv(r, t).
            self.mu1 = NamedEmbedding("mu1", self.hidden_size, self.hidden_size)
            self.mu2 = NamedEmbedding("mu2", self.hidden_size, self.hidden_size)
            self.bu = NamedEmbedding("bu", self.hidden_size, 1)
            self.mv1 = NamedEmbedding("mv1", self.hidden_size, self.hidden_size)
            self.mv2 = NamedEmbedding("mv2", self.hidden_size, self.hidden_size)
            self.bv = NamedEmbedding("bv", self.hidden_size, 1)

            nn.init.xavier_uniform_(self.ent_embeddings.weight)
            nn.init.xavier_uniform_(self.rel_embeddings.weight)
            nn.init.xavier_uniform_(self.mu1.weight)
            nn.init.xavier_uniform_(self.mu2.weight)
            nn.init.xavier_uniform_(self.bu.weight)
            nn.init.xavier_uniform_(self.mv1.weight)
            nn.init.xavier_uniform_(self.mv2.weight)
            nn.init.xavier_uniform_(self.bv.weight)

            self.parameter_list = [
                self.ent_embeddings,
                self.rel_embeddings,
                self.mu1,
                self.mu2,
                self.bu,
                self.mv1,
                self.mv2,
                self.bv,
            ]

            self.loss = Criterion.pairwise_hinge

        def embed(self, h, r, t):
            """Function to get the embedding value.

            Args:
                h (Tensor): Head entities ids.
                r (Tensor): Relation ids of the triple.
                t (Tensor): Tail entity ids of the triple.

            Returns:
                Tensors: Returns head, relation and tail embedding Tensors.
            """
            emb_h = self.ent_embeddings(h)
            emb_r = self.rel_embeddings(r)
            emb_t = self.ent_embeddings(t)

            return emb_h, emb_r, emb_t

        def _gu_linear(self, h, r):
            """Function to calculate the linear term gu(h, r).

            Args:
                h (Tensor): Head entity embeddings.
                r (Tensor): Relation embeddings.

            Returns:
                Tensors: Returns the linear combination of h and r, shape [b, k].
            """
            mu1h = torch.matmul(self.mu1.weight, h.T) # [k, b]
            mu2r = torch.matmul(self.mu2.weight, r.T) # [k, b]
            return (mu1h + mu2r + self.bu.weight).T # [b, k]

        def _gv_linear(self, r, t):
            """Function to calculate the linear term gv(r, t).

            Args:
                r (Tensor): Relation embeddings.
                t (Tensor): Tail entity embeddings.

            Returns:
                Tensors: Returns the linear combination of t and r, shape [b, k].
            """
            mv1t = torch.matmul(self.mv1.weight, t.T) # [k, b]
            mv2r = torch.matmul(self.mv2.weight, r.T) # [k, b]
            return (mv1t + mv2r + self.bv.weight).T # [b, k]

        def forward(self, h, r, t):
            """Function that performs semantic matching.

            Args:
                h (Tensor): Head entities ids.
                r (Tensor): Relation ids of the triple.
                t (Tensor): Tail ids of the triple.

            Returns:
                Tensors: Returns the semantic matching score.
            """
            h_e, r_e, t_e = self.embed(h, r, t)

            norm_h = F.normalize(h_e, p=2, dim=-1)
            norm_r = F.normalize(r_e, p=2, dim=-1)
            norm_t = F.normalize(t_e, p=2, dim=-1)

            return -torch.sum(self._gu_linear(norm_h, norm_r) * self._gv_linear(norm_r, norm_t), 1)

The bilinear implementation:

    class SME_BL(SME):
        """ `A Semantic Matching Energy Function for Learning with Multi-relational Data`_

        SME_BL is an extension of SME_ that uses a bilinear function to calculate the matching scores.

        Args:
            config (object): Model configuration parameters.

        .. _`SME`: api.html#pykg2vec.models.pairwise.SME
        """

        def __init__(self, **kwargs):
            super(SME_BL, self).__init__(**kwargs)
            self.model_name = self.__class__.__name__.lower()
            self.loss = Criterion.pairwise_hinge

        def _gu_bilinear(self, h, r):
            """Function to calculate the bilinear term gu(h, r).

            Args:
                h (Tensor): Head entity embeddings.
                r (Tensor): Relation embeddings.

            Returns:
                Tensors: Returns the element-wise product of the projected h and r plus bias, shape [b, k].
            """
            mu1h = torch.matmul(self.mu1.weight, h.T) # [k, b]
            mu2r = torch.matmul(self.mu2.weight, r.T) # [k, b]
            return (mu1h * mu2r + self.bu.weight).T # [b, k]

        def _gv_bilinear(self, r, t):
            """Function to calculate the bilinear term gv(r, t).

            Args:
                r (Tensor): Relation embeddings.
                t (Tensor): Tail entity embeddings.

            Returns:
                Tensors: Returns the element-wise product of the projected t and r plus bias, shape [b, k].
            """
            mv1t = torch.matmul(self.mv1.weight, t.T) # [k, b]
            mv2r = torch.matmul(self.mv2.weight, r.T) # [k, b]
            return (mv1t * mv2r + self.bv.weight).T # [b, k]

        def forward(self, h, r, t):
            """Function that performs semantic matching.

            Args:
                h (Tensor): Head entities ids.
                r (Tensor): Relation ids of the triple.
                t (Tensor): Tail ids of the triple.

            Returns:
                Tensors: Returns the semantic matching score.
            """
            h_e, r_e, t_e = self.embed(h, r, t)

            norm_h = F.normalize(h_e, p=2, dim=-1)
            norm_r = F.normalize(r_e, p=2, dim=-1)
            norm_t = F.normalize(t_e, p=2, dim=-1)

            # Note: as quoted, this score is not negated, unlike SME.forward above.
            return torch.sum(self._gu_bilinear(norm_h, norm_r) * self._gv_bilinear(norm_r, norm_t), -1)
