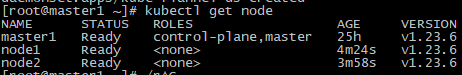

Installing Kubernetes 1.23.9 with kubeadm

Configure the yum repository

[root@master1 ~]# cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

Install Docker

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

yum list docker-ce.x86_64 --showduplicates | sort -r

# Installs the latest version by default

yum -y install docker-ce

Configure Docker:

Edit or create /etc/docker/daemon.json with the following content:

{
  "registry-mirrors": ["https://ddcy8uhg.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}

Start Docker

# Start Docker now and enable it at boot

systemctl start docker

systemctl enable docker

Choose the version to install

yum list kubelet --showduplicates | sort -r

Both master and worker nodes need kubeadm, kubelet, and kubectl:

yum install -y kubelet-1.23.9-0 kubeadm-1.23.9-0 kubectl-1.23.9-0

Pull the images

#!/bin/bash
version="1.23.9"
images=$(kubeadm config images list --kubernetes-version=${version} | awk -F'/' '{print $NF}')
for imageName in $images; do
  docker pull registry.aliyuncs.com/google_containers/$imageName
  # docker pull gcr.azk8s.cn/google-containers/$imageName
  docker tag registry.aliyuncs.com/google_containers/$imageName k8s.gcr.io/$imageName
  # docker rmi gcr.azk8s.cn/google-containers/$imageName
done
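The awk step in the script keeps only the last path component of each image reference, and that name is then appended to the mirror prefix. Below is a minimal dry-run sketch of that mapping. The `mirror_ref` helper is hypothetical, and the two sample references are illustrative rather than captured output.

```shell
#!/bin/bash
# Hypothetical helper: map an upstream image reference to its mirror path.
mirror="registry.aliyuncs.com/google_containers"

mirror_ref() {
    local name
    # Keep only the text after the last '/', e.g. "kube-apiserver:v1.23.9".
    name=$(printf '%s\n' "$1" | awk -F'/' '{print $NF}')
    printf '%s/%s\n' "$mirror" "$name"
}

# Dry run: print the pull commands instead of executing them.
# On a real host the list would come from:
#   kubeadm config images list --kubernetes-version=1.23.9
for ref in "k8s.gcr.io/kube-apiserver:v1.23.9" "k8s.gcr.io/coredns/coredns:v1.8.6"; do
    echo "docker pull $(mirror_ref "$ref")"
done
```

A nested path such as `coredns/coredns` is flattened to `coredns` by this rule, so an image re-tagged as `k8s.gcr.io/$imageName` may not match the upstream name. When `kubeadm init` is run with `--image-repository`, the re-tagging step is likely unnecessary anyway.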

Disable swap

swapoff -a
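`swapoff -a` only lasts until the next reboot. One common way to make it permanent is to comment out the swap entries in /etc/fstab. The sketch below does that with sed; the `disable_swap_entries` helper and the sample fstab lines are assumptions for illustration, and it runs against a scratch file rather than the real /etc/fstab.

```shell
#!/bin/bash
# Comment out every active swap entry in an fstab-style file.
# The path is a parameter so the function can be tried on a copy first.
disable_swap_entries() {
    local fstab="$1"
    # Match uncommented lines whose third field (filesystem type) is "swap".
    sed -i -E 's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' "$fstab"
}

# Try it on a scratch copy, not on /etc/fstab itself.
tmp=$(mktemp)
printf '%s\n' \
    '/dev/mapper/centos-root /     xfs  defaults 0 0' \
    '/dev/mapper/centos-swap swap  swap defaults 0 0' > "$tmp"
disable_swap_entries "$tmp"
cat "$tmp"
```

Once the result looks right on a copy, the same call with /etc/fstab as the argument would apply it for real.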

Initialize the cluster

[root@master ~]# cat 2.sh
#!/bin/bash
kubeadm init --kubernetes-version=v1.23.9 \
  --pod-network-cidr=10.244.0.0/16 \
  --service-cidr=10.96.0.0/12 \
  --apiserver-advertise-address=192.168.96.140 \
  --ignore-preflight-errors=NumCPU \
  --image-repository registry.aliyuncs.com/google_containers

If a different runtime is used (the note refers to CRI-O), pass its socket with --cri-socket and adjust the path to the socket your runtime actually listens on:

kubeadm init --kubernetes-version=v1.23.4 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 \
--apiserver-advertise-address=192.168.96.140 --ignore-preflight-errors=NumCPU \
--image-repository registry.aliyuncs.com/google_containers \
--cri-socket unix:///var/run/cri-docker.sock

Output log

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

  kubeadm join 192.168.3.121:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:803d49279922ae293e02b11f9e5a4a6ca86589e3d4cccbe83b57829b176fec8d \
    --control-plane --certificate-key dbf06b9cdf56046c6d1bdf2383856c374bf0a29470b66ddfc5528d1ab3c7b39c

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.3.121:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:803d49279922ae293e02b11f9e5a4a6ca86589e3d4cccbe83b57829b176fec8d

Install the flannel network add-on:

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

kubectl apply -f kube-flannel.yml

Join worker nodes to the cluster

If the token has been lost, generate a new join command. It can be used to join worker nodes:

[root@master1 ~]# kubeadm token create --print-join-command

kubeadm join 192.168.3.121:6443 --token 6a4qb1.bfqsvxoburcrfe8f --discovery-token-ca-cert-hash sha256:803d49279922ae293e02b11f9e5a4a6ca86589e3d4cccbe83b57829b176fec8d

If the certificate key for master nodes has expired, regenerate the value passed to --control-plane --certificate-key:

kubeadm init phase upload-certs --upload-certs

The same step is needed whenever another master node is added later.

(Note: older versions use kubeadm init phase upload-certs --experimental-upload-certs instead.)

Run it:

[root@master1 ~]# kubeadm init phase upload-certs --upload-certs
I0728 17:25:02.945115 13362 version.go:255] remote version is much newer: v1.24.3; falling back to: stable-1.23
[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
[upload-certs] Using certificate key:
189d848109dac424dadcaef814fffb1e5a9e344329f378927431434eadd76f04

Append --control-plane and the new --certificate-key to the worker join command, then run the result on master3:

kubeadm join 192.168.3.121:6443 --token 6a4qb1.bfqsvxoburcrfe8f \
  --discovery-token-ca-cert-hash sha256:803d49279922ae293e02b11f9e5a4a6ca86589e3d4cccbe83b57829b176fec8d \
  --control-plane --certificate-key 189d848109dac424dadcaef814fffb1e5a9e344329f378927431434eadd76f04
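Splicing the two outputs together by hand is error-prone, so here is a small sketch of how it could be scripted. Both helper names are hypothetical, and the sketch assumes the certificate key is the last line printed by the upload-certs command. The sample strings are copied from the outputs in these notes.

```shell
#!/bin/bash
# Hypothetical helper: read upload-certs output on stdin and print the
# certificate key, assumed to be the last line of that output.
cert_key_from_output() {
    tail -n 1 | tr -d '[:space:]'
}

# Hypothetical helper: append the control-plane flags to a worker join command.
control_plane_join() {
    local join_cmd="$1" cert_key="$2"
    printf '%s --control-plane --certificate-key %s\n' "$join_cmd" "$cert_key"
}

# Sample text from the notes; on a real master these would come from
#   kubeadm init phase upload-certs --upload-certs
#   kubeadm token create --print-join-command
upload_output='[upload-certs] Using certificate key:
189d848109dac424dadcaef814fffb1e5a9e344329f378927431434eadd76f04'
join_cmd='kubeadm join 192.168.3.121:6443 --token 6a4qb1.bfqsvxoburcrfe8f --discovery-token-ca-cert-hash sha256:803d49279922ae293e02b11f9e5a4a6ca86589e3d4cccbe83b57829b176fec8d'

key=$(printf '%s\n' "$upload_output" | cert_key_from_output)
control_plane_join "$join_cmd" "$key"
```

The script only prints the combined command, so it can be reviewed before being run on the new master.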

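Enable tab completion

Prerequisite: the bash-completion.noarch package is installed.

Add the following line to /etc/profile (vim /etc/profile):

source <(kubectl completion bash)

Then reload it:

source /etc/profile

vim setting for pasting without auto-indent:

set paste

Edit a ConfigMap in kube-system, then delete the DaemonSet pods so they are recreated with the change:

kubectl edit cm -n kube-system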
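Editing the profile by hand can leave duplicate lines if the step is repeated. Below is a sketch of an idempotent alternative; the `enable_kubectl_completion` helper is hypothetical and is demonstrated on a scratch file.

```shell
#!/bin/bash
# Append the kubectl completion hook to a shell rc file, once only.
enable_kubectl_completion() {
    local rcfile="$1"
    local hook='source <(kubectl completion bash)'
    # -F: fixed string, -x: whole line must match, -q: quiet.
    grep -qxF "$hook" "$rcfile" 2>/dev/null || printf '%s\n' "$hook" >> "$rcfile"
}

# Demonstrated on a scratch file; for a real login shell pass ~/.bashrc
# or /etc/profile instead.
rc=$(mktemp)
enable_kubectl_completion "$rc"
enable_kubectl_completion "$rc"   # second call is a no-op
cat "$rc"
```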

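While installing Kubernetes, the following error may appear:

failed to create kubelet: misconfiguration: kubelet cgroup driver: "cgroupfs" is different from docker cgroup driver: "systemd"

Here the kubelet is using the cgroupfs cgroup driver, while the Docker we installed uses systemd. The two must match, otherwise the kubelet cannot be created and no containers are started.

Check Docker's driver with docker info:

Cgroup Driver: systemd

There are two ways to fix this: change Docker, or change the kubelet.

Change the kubelet: set cgroupDriver: systemd in the KubeletConfiguration, as in the kubeadm-config.yaml below.

Change Docker:

Edit or create /etc/docker/daemon.json and add the following:

{ "exec-opts": ["native.cgroupdriver=systemd"] }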
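Before changing either side, it can help to confirm what each component is actually configured with. Below is a minimal sketch with two hypothetical helpers. It assumes the kubelet setting is a top-level `cgroupDriver:` key in its config file, and the Docker value would come from `docker info --format '{{.CgroupDriver}}'`.

```shell
#!/bin/bash
# Read the cgroupDriver value from a kubelet config file.
kubelet_cgroup_driver() {
    awk -F': *' '$1 == "cgroupDriver" {print $2}' "$1"
}

# Compare two driver names; returns non-zero on mismatch.
check_cgroup_drivers() {
    local docker_driver="$1" kubelet_driver="$2"
    if [ "$docker_driver" = "$kubelet_driver" ]; then
        echo "ok: both use $docker_driver"
    else
        echo "mismatch: docker=$docker_driver kubelet=$kubelet_driver"
        return 1
    fi
}

# Demo on a scratch config. On a real node (assumed paths and commands):
#   check_cgroup_drivers "$(docker info --format '{{.CgroupDriver}}')" \
#                        "$(kubelet_cgroup_driver /var/lib/kubelet/config.yaml)"
cfg=$(mktemp)
printf 'kind: KubeletConfiguration\ncgroupDriver: systemd\n' > "$cfg"
check_cgroup_drivers systemd "$(kubelet_cgroup_driver "$cfg")"
```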

Generate a kubeadm-config.yaml file (alternatively, skip the config file and run the init command shown earlier)

kubeadm config print init-defaults > kubeadm-config.yaml

# kubeadm config print init-defaults --component-configs KubeletConfiguration

Configuration file

[root@master1 ~]# cat kubeadm-config.yaml
apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.3.111
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  imagePullPolicy: IfNotPresent
  name: master1
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: "192.168.3.121:6443"   # VIP address added here
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: 1.23.9
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
scheduler: {}
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
failSwapOn: false
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs

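If kubeadm-config.yaml uses an outdated format, it can be migrated to the current one:

kubeadm config migrate --old-config kubeadm-config.yaml --new-config new.yaml

Initializing the first master generates the certificates and configuration files under /etc/kubernetes/. The other master nodes then simply join master1.

kubeadm init --config /root/new.yaml --upload-certs

With --upload-certs, the control-plane certificates are uploaded and the output includes the join commands for both master and worker nodes.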

After master3 is removed from the cluster and then joined again, an etcd error appears:

[check-etcd] Checking that the etcd cluster is healthy
error execution phase check-etcd: etcd cluster is not healthy: failed to dial endpoint https://192.168.3.113:2379 with maintenance client: context deadline exceeded

The etcd cluster still holds a member entry for master3. Remove that entry, then join the node again.

[root@master1 manifests]# kubectl exec -it etcd-master1 -n kube-system -- sh

sh-5.1# export ETCDCTL_API=3

sh-5.1# alias etcdctl='etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key'

sh-5.1# etcdctl member list

121d253eabe8363b, started, master2, https://192.168.3.112:2380, https://192.168.3.112:2379, false

653ca7d2b0854cca, started, master1, https://192.168.3.111:2380, https://192.168.3.111:2379, false

ca860714fca4f411, started, master3, https://192.168.3.113:2380, https://192.168.3.113:2379, false

sh-5.1# etcdctl member remove ca860714fca4f411

Member ca860714fca4f411 removed from cluster ab9ead666b29ed9d
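Copying the member ID by hand works, but it can also be looked up by node name. Below is a minimal sketch; the `etcd_member_id` helper is hypothetical, and it assumes the comma-separated listing format shown above.

```shell
#!/bin/bash
# Print the ID of the etcd member with the given name, reading
# "etcdctl member list" output (comma-separated) from stdin.
etcd_member_id() {
    awk -F', ' -v name="$1" '$3 == name {print $1}'
}

# Sample lines copied from the listing above.
member_list='121d253eabe8363b, started, master2, https://192.168.3.112:2380, https://192.168.3.112:2379, false
653ca7d2b0854cca, started, master1, https://192.168.3.111:2380, https://192.168.3.111:2379, false
ca860714fca4f411, started, master3, https://192.168.3.113:2380, https://192.168.3.113:2379, false'

printf '%s\n' "$member_list" | etcd_member_id master3
# Inside the etcd pod this could feed the removal step, e.g.:
#   etcdctl member remove "$(etcdctl member list | etcd_member_id master3)"
```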

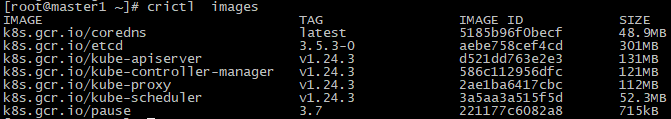

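Offline installation of 1.24.3 with kubeadm, using containerd as the runtime

1. First pull the images with crictl. Images pulled with plain ctr land in containerd's default namespace, which the kubelet does not see, whereas crictl pulls into the k8s.io namespace used by the CRI. kubeadm also looks for a specific coredns tag rather than latest; kubeadm config images list shows the exact tags.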

crictl pull registry.aliyuncs.com/google_containers/kube-apiserver:v1.24.3

crictl pull registry.aliyuncs.com/google_containers/kube-scheduler:v1.24.3

crictl pull registry.aliyuncs.com/google_containers/kube-proxy:v1.24.3

crictl pull registry.aliyuncs.com/google_containers/kube-controller-manager:v1.24.3

crictl pull registry.aliyuncs.com/google_containers/coredns:latest

crictl pull registry.aliyuncs.com/google_containers/etcd:3.5.3-0

crictl pull registry.aliyuncs.com/google_containers/pause:3.7
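The seven pull commands follow one pattern, so they can be generated from a version and a few tags. The sketch below is a dry run that only prints the commands; the `image_refs` helper is hypothetical, and the component tags are passed in because they differ between releases.

```shell
#!/bin/bash
# Build the list of image references for one Kubernetes version.
repo="registry.aliyuncs.com/google_containers"

image_refs() {
    local version="$1" etcd_tag="$2" pause_tag="$3" coredns_tag="$4"
    local name
    for name in kube-apiserver kube-controller-manager kube-scheduler kube-proxy; do
        echo "$repo/$name:$version"
    done
    echo "$repo/etcd:$etcd_tag"
    echo "$repo/pause:$pause_tag"
    echo "$repo/coredns:$coredns_tag"
}

# Dry run with the tags used above; remove the "echo" to actually pull.
image_refs v1.24.3 3.5.3-0 3.7 latest | while read -r ref; do
    echo crictl pull "$ref"
done
```

On a host with kubeadm installed, `kubeadm config images list --kubernetes-version=v1.24.3` reports the exact tags to pass in.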

Importing and exporting images with containerd

ctr -n=k8s.io image import typha.tar.gz

ctr -n k8s.io image tag nginx:1.19 1.1.1.1/bigdata/nginx:1.19

ctr -n=k8s.io image tag dockerproxy.com/bitnami/nginx-ingress-controller:1.6.4 fengjian/nginx-ingress-controller:1.6.4

Export an image:

ctr -n k8s.io image export --all-platforms typha.tar.gz docker.io/calico/typha:v3.25.0

or

ctr -n k8s.io image export --all-platforms typha.img docker.io/calico/typha:v3.25.0

# Edit the containerd configuration file

cd /etc/containerd

containerd config default | tee /etc/containerd/config.toml

sed -i "s#SystemdCgroup\ \=\ false#SystemdCgroup\ \=\ true#g" /etc/containerd/config.toml

In config.toml, change sandbox_image to the Aliyun address:

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.7"

systemctl restart containerd
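Both config.toml changes can be applied in one pass instead of editing sandbox_image by hand. Below is a sketch under that assumption. The `patch_containerd_config` helper is hypothetical, and the scratch file is a two-line fragment with an illustrative original value, not a full containerd config.

```shell
#!/bin/bash
# Patch a containerd config.toml: enable the systemd cgroup driver and
# point sandbox_image at a mirror. Both edits are plain sed substitutions.
patch_containerd_config() {
    local config="$1" sandbox="$2"
    sed -i -E \
        -e 's/^([[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*)false/\1true/' \
        -e "s#^([[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*).*#\1\"$sandbox\"#" \
        "$config"
}

# Scratch file holding only the two relevant keys.
cfg=$(mktemp)
printf '%s\n' \
    '    sandbox_image = "k8s.gcr.io/pause:3.6"' \
    '            SystemdCgroup = false' > "$cfg"
patch_containerd_config "$cfg" "registry.aliyuncs.com/google_containers/pause:3.7"
cat "$cfg"
```

On a node the first argument would be /etc/containerd/config.toml, followed by `systemctl restart containerd`.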

Install directly with kubeadm

kubeadm init --kubernetes-version=v1.24.3 \
--pod-network-cidr=10.244.0.0/16 \
--service-cidr=10.96.0.0/12 \
--apiserver-advertise-address=192.168.40.130 \
--ignore-preflight-errors=NumCPU \
--image-repository registry.aliyuncs.com/google_containers

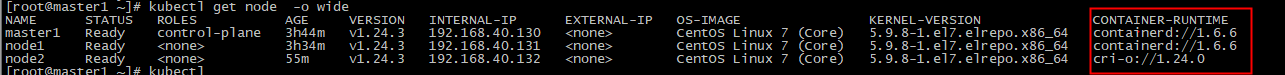

Using CRI-O as the runtime on node2

First install CRI-O and pull the images with crictl:

[root@node2 ~]# cat /etc/crictl.yaml
runtime-endpoint: "unix:///data/crio/var/run/crio/crio.sock"
image-endpoint: "unix:///data/crio/var/run/crio/crio.sock"
timeout: 10
debug: false
pull-image-on-create: true
disable-pull-on-run: false

Next, install containerd and point crictl.yaml at its socket:

runtime-endpoint: unix:///run/containerd/containerd.sock
image-endpoint: unix:///run/containerd/containerd.sock
timeout: 10
debug: false
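Switching crictl between runtimes only changes the socket path, so the file can be generated from it. Below is a minimal sketch; the `write_crictl_config` helper is hypothetical and writes to a scratch file here.

```shell
#!/bin/bash
# Write a crictl config that points both endpoints at one runtime socket.
write_crictl_config() {
    local socket="$1" out="$2"
    cat > "$out" <<EOF
runtime-endpoint: unix://$socket
image-endpoint: unix://$socket
timeout: 10
debug: false
EOF
}

# Scratch output; on a node the target would be /etc/crictl.yaml.
out=$(mktemp)
write_crictl_config /run/containerd/containerd.sock "$out"
cat "$out"
```

Calling it with /data/crio/var/run/crio/crio.sock instead would produce the CRI-O variant from the first listing, minus the two pull options.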

After pulling the images again, join node2 to the cluster:

kubeadm join 192.168.40.130:6443 --token e1s0vx.ubmtoojipk26nhut --discovery-token-ca-cert-hash sha256:2314e7be688cfb712e7ca802867955060e74075e37a42376ecaa964917a444b5

To switch from containerd to CRI-O, edit the kubelet systemd drop-in file:

[root@node2 ~]# cat /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf
# Note: This dropin only works with kubeadm and kubelet v1.11+
[Service]
Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf"
Environment="KUBELET_CONFIG_ARGS=--config=/var/lib/kubelet/config.yaml"
# This is a file that "kubeadm init" and "kubeadm join" generates at runtime, populating the KUBELET_KUBEADM_ARGS variable dynamically
EnvironmentFile=-/var/lib/kubelet/kubeadm-flags.env
# This is a file that the user can use for overrides of the kubelet args as a last resort. Preferably, the user should use
# the .NodeRegistration.KubeletExtraArgs object in the configuration files instead. KUBELET_EXTRA_ARGS should be sourced from this file.
EnvironmentFile=-/etc/sysconfig/kubelet
ExecStart=
ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS --container-runtime=remote --container-runtime-endpoint=unix:///data/crio/var/run/crio/crio.sock $KUBELET_EXTRA_ARGS
#ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS

After restarting the kubelet service, check which runtime the node is using, for example with kubectl get nodes -o wide, whose CONTAINER-RUNTIME column shows it.