flume安装

Flume安装

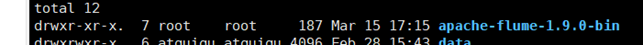

下载flume,解压到指定目录

tar -zxf apache-flume-1.9.0-bin.tar.gz -C /opt/software/

在flume的conf文件夹下新建log-kafka.properties

内容为:

agent.sources = exectail

agent.channels = memoryChannel

agent.sinks = kafkasink

# For each one of the sources, the type is defined

agent.sources.exectail.type = exec

# 下面这个路径是需要收集日志的绝对路径,改为自己的日志目录

agent.sources.exectail.command = tail -f /home/hadoop/flume/log/agent.log

agent.sources.exectail.interceptors=i1

agent.sources.exectail.interceptors.i1.type=regex_filter

# 定义日志过滤前缀的正则

agent.sources.exectail.interceptors.i1.regex=.+PRODUCT_RATING_PREFIX.+

# The channel can be defined as follows.

agent.sources.exectail.channels = memoryChannel

# Each sink's type must be defined

agent.sinks.kafkasink.type = org.apache.flume.sink.kafka.KafkaSink

agent.sinks.kafkasink.kafka.topic = log

agent.sinks.kafkasink.kafka.bootstrap.servers = localhost:9092

agent.sinks.kafkasink.kafka.producer.acks = 1

agent.sinks.kafkasink.kafka.flumeBatchSize = 20

#Specify the channel the sink should use

agent.sinks.kafkasink.channel = memoryChannel

# Each channel's type is defined.

agent.channels.memoryChannel.type = memory

# Other config values specific to each type of channel(sink or source)

# can be defined as well

# In this case, it specifies the capacity of the memory channel

agent.channels.memoryChannel.capacity = 10000

上述配置文件功能描述:

使用tail -f /home/hadoop/flume/log/agent.log命令,监听文件中改动的内容,通过正则表达式.+PRODUCT_RATING_PREFIX.+匹配内容,将匹配的结果发送到localhost:9092中Kafka的log主题中

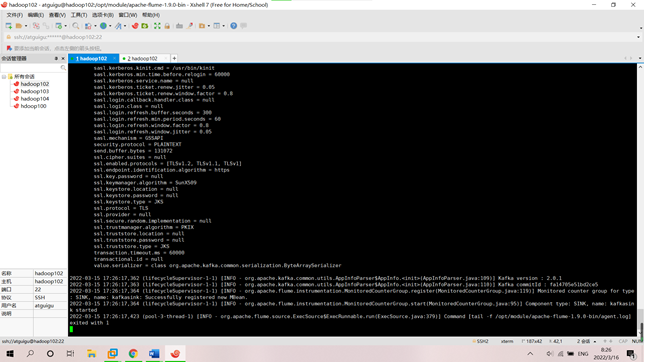

进入flume目录,执行启动命令:

cd ~/flume

./bin/flume-ng agent -c ./conf/ -f ./conf/log-kafka.properties -n agent -Dflume.root.logger=INFO,console

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· TypeScript + Deepseek 打造卜卦网站:技术与玄学的结合

· 阿里巴巴 QwQ-32B真的超越了 DeepSeek R-1吗?

· 如何调用 DeepSeek 的自然语言处理 API 接口并集成到在线客服系统

· 【译】Visual Studio 中新的强大生产力特性

· 2025年我用 Compose 写了一个 Todo App

2021-03-16 大二下学期第一次结对作业(第一阶段)