课堂测试

1.转换为csv文件

2.导入hive

创建表

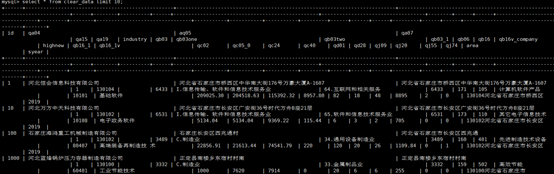

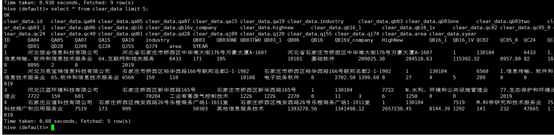

create table clear_data

(ID String, QA04 String, QA05 String,QA07 String,QA15 String, QA19 String,industry String,QB03 String,QB03ONE String,QB03TWO String,QB03_1 String,QB06 String,QB16 String,QB16V_company String,HighNew String,QB16_1 String,QB16_1V String,QC02 String,QC05_0 String,QC24 String,QC40 String,QD01 String,QD28 String,QJ09 String,QJ20 String,QJ55 String,QJ74 String,area String,SYEAR String)

ROW format delimited fields terminated by ',' STORED AS TEXTFILE;

create table enterprise_data

(state_id String, state_str String)

ROW format delimited fields terminated by ',' STORED AS TEXTFILE;

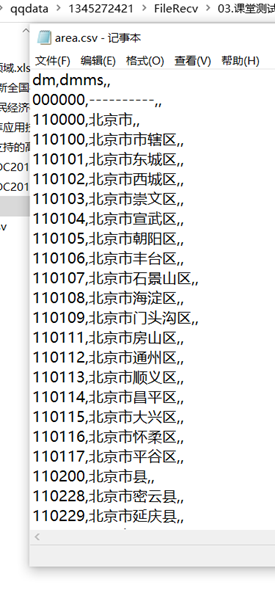

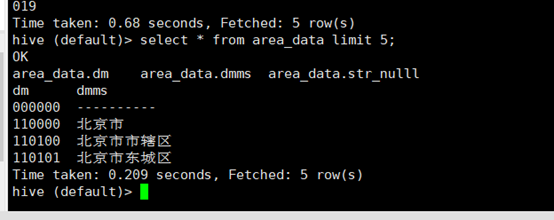

create table area_data

( String, dmms String, str_nulll String)

ROW format delimited fields terminated by ',' STORED AS TEXTFILE;

导入数据

load data local inpath '/opt/module/data/area.csv' into table area_data;

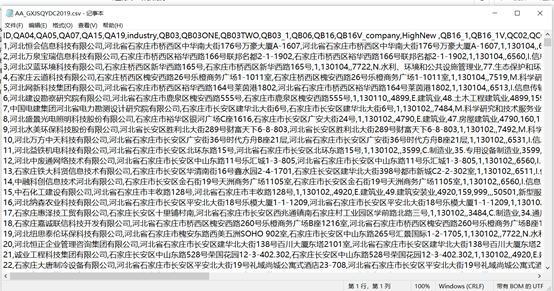

load data local inpath '/opt/module/data/ AA_GXJSQYDC2019.csv' into table clear_data;

3.数据清洗并导出数据库MySql

bin/sqoop export --connect "jdbc:mysql://hadoop102:3306/test?useUnicode=true&characterEncoding=utf-8" --username root --password 123456 --table clear_data --num-mappers 1 --export-dir /user/hive/warehouse/clear_data --input-fields-terminated-by ","

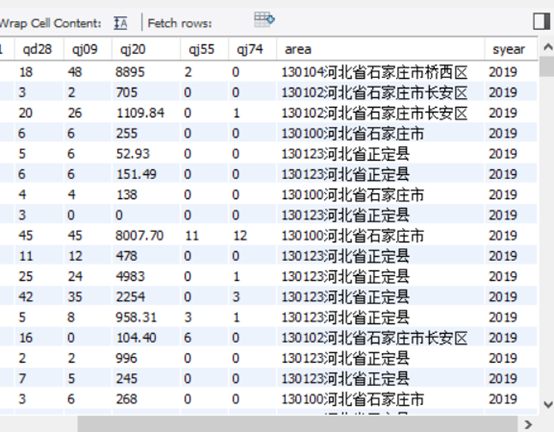

update clear_data a,area_data b set a.area=a.qa19+b.dmms where a.qa19=b.dm;