05 RDD Programming

I. Word Frequency Count:

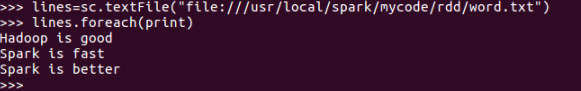

1. Read a text file to create the RDD lines

lines=sc.textFile("file:///usr/local/spark/mycode/rdd/word.txt")

lines.foreach(print)

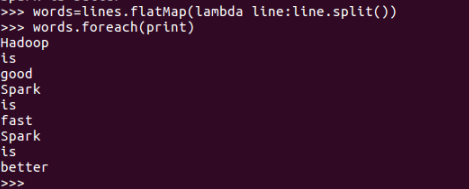

2. Split each line of text into words (RDD words) with flatMap()

words=lines.flatMap(lambda line:line.split())

words.foreach(print)

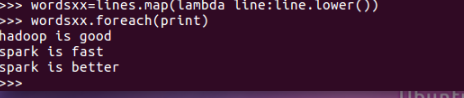
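To see why step 2 uses flatMap() rather than map(), the plain-Python sketch below mimics both operations on a small in-memory list, with no Spark required. The sample lines are made up for illustration, and `local_map` and `local_flat_map` are hypothetical helpers, not Spark APIs.

```python
from itertools import chain

# Hypothetical sample standing in for the contents of word.txt.
sample_lines = ["Hello Spark", "Hello RDD programming"]

def local_map(func, items):
    """Mimic RDD.map: exactly one output element per input element."""
    return [func(item) for item in items]

def local_flat_map(func, items):
    """Mimic RDD.flatMap: apply func, then flatten the results one level."""
    return list(chain.from_iterable(func(item) for item in items))

nested = local_map(lambda line: line.split(), sample_lines)
flat = local_flat_map(lambda line: line.split(), sample_lines)

print(nested)  # one list of words per input line
print(flat)    # a single flat list of words
```

With map() each line becomes one list, so later steps would receive lists instead of words; flatMap() yields the individual words that the filtering and counting steps expect.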

3. Convert every word to lowercase with lower()

wordsxx=words.map(lambda word:word.lower())

wordsxx.foreach(print)

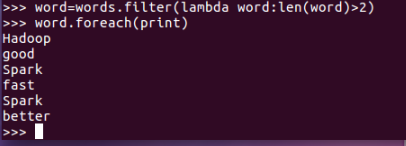

4. Remove words shorter than 3 characters with filter()

word=words.filter(lambda w:len(w)>2)

word.foreach(print)

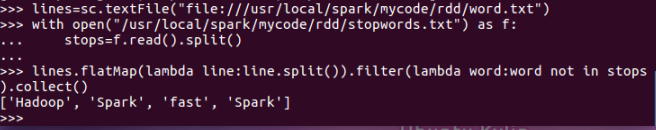

5. Remove stop words

lines=sc.textFile("file:///usr/local/spark/mycode/rdd/word.txt")

with open("/usr/local/spark/mycode/rdd/stopwords.txt") as f:
    stops=f.read().split()

lines.flatMap(lambda line:line.split()).filter(lambda word:word not in stops).collect()

6. Convert to key-value pairs with map()

words.map(lambda word:(word,1)).collect()

7. Count word frequencies with reduceByKey()

words.map(lambda word:(word,1)).reduceByKey(lambda a,b:a+b).collect()

8. Sort alphabetically with sortBy(f)

words.map(lambda word:(word,1)).reduceByKey(lambda a,b:a+b).sortBy(lambda word:word[0]).collect()

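9. Sort by word frequency with sortByKey() (swap each pair to (count, word) first, because sortByKey() sorts on the key)

words.map(lambda word:(word,1)).reduceByKey(lambda a,b:a+b).map(lambda kv:(kv[1],kv[0])).sortByKey().collect()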

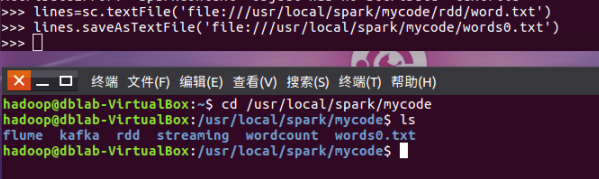

10. Save the result to a file with saveAsTextFile(out_url)

lines.saveAsTextFile('file:///usr/local/spark/mycode/words0.txt')
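The steps of this section can be traced end to end in plain Python. The sketch below is not Spark code: it chains steps 2 to 9 into a single pipeline (the notes above run each step separately), and the sample text and stop-word set are made-up stand-ins for word.txt and stopwords.txt.

```python
from collections import Counter

# Hypothetical stand-ins for word.txt and stopwords.txt.
sample_lines = ["The quick brown fox", "the lazy dog and THE fox"]
stops = {"the", "and"}

# Steps 2-3: split every line into words and lowercase them.
words = [w.lower() for line in sample_lines for w in line.split()]
# Steps 4-5: keep words of at least 3 characters that are not stop words.
kept = [w for w in words if len(w) > 2 and w not in stops]
# Steps 6-7: counting occurrences matches map((word, 1)) + reduceByKey(add).
counts = Counter(kept)
# Step 8: sort by word.
by_word = sorted(counts.items(), key=lambda kv: kv[0])
# Step 9: sort by frequency, highest first; ties broken alphabetically.
by_freq = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))

print(by_word)
print(by_freq)
```

Lowercasing before the stop-word check is a design choice of this sketch: it lets a lowercase stop list also catch "The" and "THE".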

II. Student Course Scores Case Study

lines=sc.textFile("file:///usr/local/spark/mycode/rdd/xs.txt")

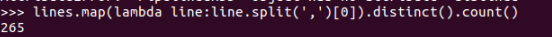

- How many students are there in total? map(), distinct(), count()

lines.map(lambda line:line.split(',')[0]).distinct().count()

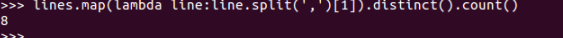

- How many courses are offered?

lines.map(lambda line:line.split(',')[1]).distinct().count()

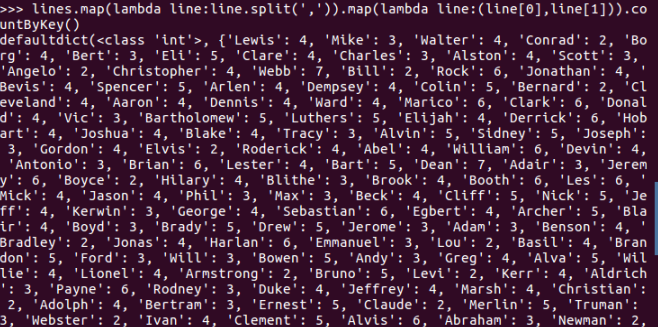

- How many courses has each student taken? map(), countByKey()

lines.map(lambda line:line.split(',')).map(lambda line:(line[0],line[1])).countByKey()

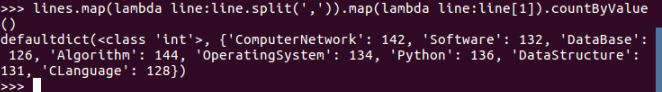

- How many students have taken each course? map(), countByValue()

lines.map(lambda line:line.split(',')).map(lambda line:line[1]).countByValue()
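The difference between countByKey() and countByValue() in the two queries above can be sketched in plain Python with `collections.Counter`. The records are hypothetical and only assume the comma-separated name, course, score layout that the split(',') calls imply for xs.txt.

```python
from collections import Counter

# Hypothetical records in the "name,course,score" layout.
records = ["Tom,Math,80", "Tom,English,90", "Jim,Math,70"]
rows = [r.split(",") for r in records]

# countByKey(): on (name, course) pairs, count the elements per key (name).
courses_per_student = Counter(name for name, course, score in rows)
# countByValue(): on an RDD of course names, count how often each element occurs.
students_per_course = Counter(course for name, course, score in rows)

print(dict(courses_per_student))
print(dict(students_per_course))
```

Both actions return their result to the driver as a dictionary-like object rather than as a new RDD, which is why no collect() follows them.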

- How many courses has Tom taken, and what is the score in each? filter(), map() (result is an RDD)

lines.filter(lambda line:"Tom" in line).map(lambda line:line.split(','))

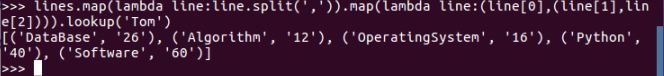

- How many courses has Tom taken, and what is the score in each? map(), lookup() (result is a list)

lines.map(lambda line:line.split(',')).map(lambda line:(line[0],(line[1],line[2]))).lookup('Tom')

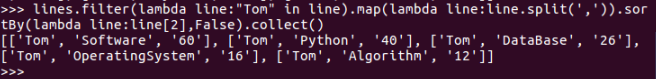

- Sort Tom's results by score, highest first. filter(), map(), sortBy()

lines.filter(lambda line:'Tom' in line).map(lambda line:line.split(',')).sortBy(lambda line:int(line[2]),False).collect()

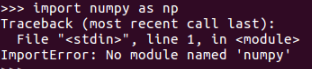
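After split(',') the score field is still a string, so the sort key matters. The plain-Python sketch below, using made-up scores, shows how sorting score strings differs from sorting them as integers.

```python
# Hypothetical score fields as they come out of split(','): still strings.
scores = ["95", "100", "80"]

# Sorting the raw strings compares them character by character.
as_text = sorted(scores, reverse=True)
# Converting with int in the key compares the numeric values instead.
as_numbers = sorted(scores, key=int, reverse=True)

print(as_text)
print(as_numbers)
```

The same applies to sortBy() on an RDD: a key function that wraps the field in int() orders the rows numerically.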

- Tom's average score. map(), lookup(), mean()

import numpy as np

name=lines.map(lambda line:line.split(',')).map(lambda line:(line[0],(line[1],line[2]))).lookup('Tom')

np.mean([int(x[1]) for x in name])

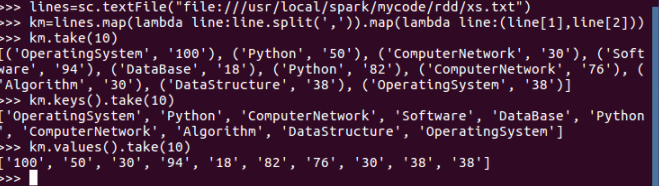
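lookup() returns the list of values stored under a key, so each element here is a (course, score) tuple and the score has to be picked out before averaging. A minimal plain-Python sketch, with hypothetical records and a hypothetical `local_lookup` helper standing in for RDD.lookup:

```python
from statistics import mean

# Hypothetical records in the "name,course,score" layout.
records = ["Tom,Math,80", "Tom,English,90", "Jim,Math,70"]
pairs = [(n, (c, s)) for n, c, s in (r.split(",") for r in records)]

def local_lookup(pairs, key):
    """Mimic RDD.lookup: return the list of values stored under key."""
    return [value for k, value in pairs if k == key]

tom = local_lookup(pairs, "Tom")  # list of (course, score) tuples
# Take the score out of each tuple and convert it before averaging.
tom_mean = mean(int(score) for course, score in tom)

print(tom, tom_mean)
```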

- Build a (course, score) RDD and inspect keys() and values()

lines=sc.textFile('file:///usr/local/spark/mycode/rdd/xs.txt')

km=lines.map(lambda line:line.split(',')).map(lambda line:(line[1],line[2]))

km.take(10)

km.keys().take(10)

km.values().take(10)


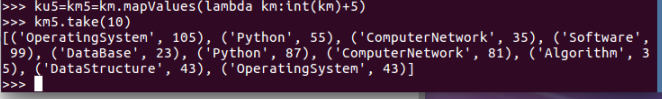

- Add 5 points to every score. mapValues(func)

km5=km.mapValues(lambda v:int(v)+5)

km5.take(10)

- For each course, find the number of students enrolled and the total of all their scores. combineByKey()

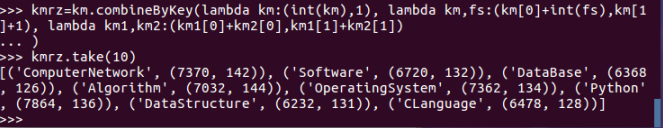

kmrz=km.combineByKey(lambda v:(int(v),1), lambda acc,v:(acc[0]+int(v),acc[1]+1), lambda a,b:(a[0]+b[0],a[1]+b[1]))

kmrz.take(10)

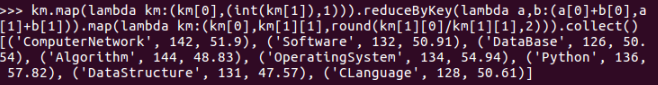

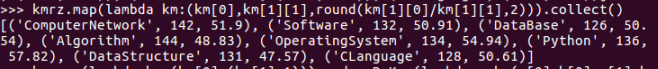
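The three functions passed to combineByKey() play different roles: the first creates an accumulator from the first value of a key within a partition, the second folds further values of that key into it, and the third merges accumulators from different partitions. The plain-Python sketch below simulates this on two hand-made partitions; `local_combine_by_key` is a hypothetical helper written for illustration, not how Spark implements it internally.

```python
def local_combine_by_key(partitions, create_combiner, merge_value, merge_combiners):
    """Mimic RDD.combineByKey over a list of partitions of (key, value) pairs."""
    per_partition = []
    for part in partitions:
        acc = {}
        for key, value in part:
            if key in acc:
                # Key already seen in this partition: fold the value in.
                acc[key] = merge_value(acc[key], value)
            else:
                # First value for this key in this partition.
                acc[key] = create_combiner(value)
        per_partition.append(acc)
    merged = {}
    for acc in per_partition:
        for key, comb in acc.items():
            # Combine accumulators that come from different partitions.
            merged[key] = merge_combiners(merged[key], comb) if key in merged else comb
    return merged

# Hypothetical (course, score) pairs split across two partitions.
partitions = [
    [("Math", "80"), ("English", "90")],
    [("Math", "70"), ("Math", "60")],
]
totals = local_combine_by_key(
    partitions,
    lambda v: (int(v), 1),                         # (sum, count) from first score
    lambda acc, v: (acc[0] + int(v), acc[1] + 1),  # add one more score
    lambda a, b: (a[0] + b[0], a[1] + b[1]),       # merge two (sum, count) pairs
)
print(totals)
```

Each resulting value is a (total score, number of students) pair, the same shape as the values in kmrz.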

- For each course, find the number of students enrolled and the average score, rounded to 2 decimal places. map(), round()

kmrz.map(lambda km:(km[0],km[1][1],round(km[1][0]/km[1][1],2))).collect()

- For each course, find the number of students enrolled and the average score. Implement it with reduceByKey() and compare it with combineByKey().

km.map(lambda km:(km[0],(int(km[1]),1))).reduceByKey(lambda a,b:(a[0]+b[0],a[1]+b[1])).map(lambda km:(km[0],km[1][1],round(km[1][0]/km[1][1],2))).collect()
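On the comparison: reduceByKey() merges values with a single function, so its result has the same type as the input values. That is why the scores are first mapped to (score, 1) pairs. combineByKey() performs that conversion itself in its first function and can therefore start from the raw scores. Both combine values within each partition before shuffling. The plain-Python sketch below mirrors the reduceByKey() version on made-up data; `local_reduce_by_key` is a hypothetical helper, not a Spark API.

```python
from functools import reduce
from itertools import groupby

# Hypothetical (course, score) pairs, scores still strings.
pairs = [("Math", "80"), ("English", "90"), ("Math", "70"), ("Math", "60")]

def local_reduce_by_key(pairs, func):
    """Mimic RDD.reduceByKey: fold all values of each key with func."""
    ordered = sorted(pairs, key=lambda kv: kv[0])
    return {
        key: reduce(func, (value for _, value in group))
        for key, group in groupby(ordered, key=lambda kv: kv[0])
    }

# Extra map step: values must already have the (sum, count) shape.
prepared = [(course, (int(score), 1)) for course, score in pairs]
totals = local_reduce_by_key(prepared, lambda a, b: (a[0] + b[0], a[1] + b[1]))
# (number of students, average rounded to 2 decimals) per course.
averages = {c: (n, round(s / n, 2)) for c, (s, n) in totals.items()}

print(totals)
print(averages)
```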