ELK: Collecting Logs into a MySQL Database

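Writing logs to a database persists the important fields, such as status codes and client browser versions, so they can be used later for monthly statistics and similar reports.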

Environment

linux-elk1: 10.0.0.22, running Kibana, Elasticsearch, Logstash, and Nginx

linux-elk2: 10.0.0.33, running MySQL 5.7

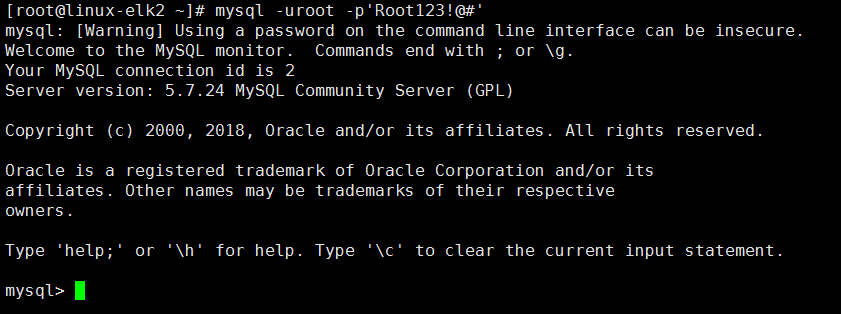

1. Configure the database on linux-elk2

After installing MySQL, create the database and grant privileges on it:

mysql -uroot -p'Root123!@#'

create database elk character set utf8 collate utf8_bin;

grant all privileges on elk.* to elk@'10.0.0.%' identified by 'Elk123!@#';

flush privileges;

# On linux-elk1, verify that you can log in to MySQL on linux-elk2

mysql -u elk -h 10.0.0.33 -p'Elk123!@#'

2. Configure the JDBC database driver

/usr/share/logstash/bin/logstash-plugin list | grep jdbc

logstash-input-jdbc # logstash-output-jdbc is not installed

# Installing the Logstash JDBC output plugin requires setting up a gem source first

yum -y install gem

gem -v

gem source list # the current source is hosted overseas; switch it to the RubyChina mirror

gem sources --add https://gems.ruby-china.org/ --remove https://rubygems.org/

Error fetching https://gems.ruby-china.org/:

bad response Not Found 404 (https://gems.ruby-china.org/specs.4.8.gz)

# The switch fails because the mirror has moved to a new address

gem sources --add https://gems.ruby-china.com/ --remove https://rubygems.org/

https://gems.ruby-china.com/ added to sources

https://rubygems.org/ removed from sources

The RubyChina mirror moved from the .org domain to .com.

Install the JDBC output plugin

Error 1: WARNING: SSLSocket#session= is not supported

Error 2: INFO: I/O exception (java.net.SocketException) caught when processing request to {s}->https://repo.maven.apache.org:443

Fix:

vim /usr/share/logstash/Gemfile

# source "https://rubygems.org" (comment out the overseas source and use the domestic mirror below instead)

source "https://gems.ruby-china.com/"

When the installation goes smoothly, it looks like this:

/usr/share/logstash/bin/logstash-plugin install logstash-output-jdbc

Validating logstash-output-jdbc

Installing logstash-output-jdbc

Installation successful

/usr/share/logstash/bin/logstash-plugin list | grep jdbc

logstash-input-jdbc

logstash-output-jdbc

# Download the MySQL JDBC driver from https://dev.mysql.com/downloads/connector/j/

# and upload it to the server. The driver path must match exactly, otherwise connecting to the database fails.

tar xf mysql-connector-java-5.1.47.tar.gz

cd mysql-connector-java-5.1.47/

mkdir -p /usr/share/logstash/vendor/jar/jdbc

cp mysql-connector-java-5.1.47-bin.jar /usr/share/logstash/vendor/jar/jdbc/

chown -R logstash:logstash /usr/share/logstash/vendor/jar/jdbc/

3. Create the table

Configure the Nginx log format

log_format access_log_json '{"host":"$http_x_real_ip","client_ip":"$remote_addr","log_time":"$time_iso8601","request":"$request","status":"$status","body_bytes_sent":"$body_bytes_sent","req_time":"$request_time","AgentVersion":"$http_user_agent"}';

access_log /var/log/nginx/access.log access_log_json;

nginx -t

nginx -s reload

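Create the table: there is no need to store the full log entry in the database; store only the important fields you actually need.

Note: the table needs a time column whose default value is CURRENT_TIMESTAMP.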

use elk;

create table nginx_log(host varchar(128),client_ip varchar(128),status int(4),req_time float(8,3),AgentVersion varchar(512), time TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP) ENGINE=InnoDB DEFAULT CHARSET=utf8 COLLATE=utf8_bin;
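Since the stated goal is monthly statistics, a query along the following lines could summarize the table once rows start arriving. This is a minimal sketch, not part of the original setup: the variable `MONTHLY_STATUS_SQL`, the function `monthly_status_report`, and the aliases `log_month` and `hits` are illustrative names, and it assumes the `elk` account created in step 1.

```shell
#!/usr/bin/env bash
# Sketch only: the names below are hypothetical; host, user, and table
# come from the steps above.

# Count requests per month and per HTTP status code.
MONTHLY_STATUS_SQL="
SELECT DATE_FORMAT(time, '%Y-%m') AS log_month,
       status,
       COUNT(*) AS hits
FROM nginx_log
GROUP BY log_month, status
ORDER BY log_month, status;"

# Run the report against the elk database on linux-elk2.
# -p without a value makes mysql prompt for the password.
monthly_status_report() {
    mysql -u elk -h 10.0.0.33 -p elk -e "$MONTHLY_STATUS_SQL"
}
```

Calling `monthly_status_report` from linux-elk1 prompts for the password and prints one row per month and status code.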

4. Configure Logstash to write logs to the database

cat /etc/logstash/conf.d/nginx_log.conf

input {
  file {
    path => "/var/log/nginx/access.log"
    start_position => "beginning"
    stat_interval => "2"
    codec => "json"
  }
}

output {
  elasticsearch {
    hosts => ["10.0.0.22:9200"]
    index => "nginx-log-%{+YYYY.MM.dd}"
  }
  jdbc {
    connection_string => "jdbc:mysql://10.0.0.33/elk?user=elk&password=Elk123!@#&useUnicode=true&characterEncoding=UTF8"
    statement => ["insert into nginx_log(host,client_ip,status,req_time,AgentVersion) VALUES(?,?,?,?,?)", "host","client_ip","status","req_time","AgentVersion"]
  }
}
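Before restarting the service, the pipeline file can be syntax-checked so that a typo does not take Logstash down. The following is a minimal sketch: `check_nginx_log_conf`, `LOGSTASH_BIN`, and `PIPELINE_CONF` are hypothetical names, and it assumes a package install with settings under /etc/logstash.

```shell
#!/usr/bin/env bash
# Sketch only: the helper and variable names are hypothetical.

LOGSTASH_BIN=/usr/share/logstash/bin/logstash
PIPELINE_CONF=/etc/logstash/conf.d/nginx_log.conf

# Parse the pipeline file and exit without starting the pipeline.
# --path.settings points Logstash at the package's settings directory.
check_nginx_log_conf() {
    "$LOGSTASH_BIN" --path.settings /etc/logstash \
        -f "$PIPELINE_CONF" --config.test_and_exit
}
```

Running `check_nginx_log_conf` prints the validation result; if it reports an error, fix the file before restarting the service.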

systemctl restart logstash.service

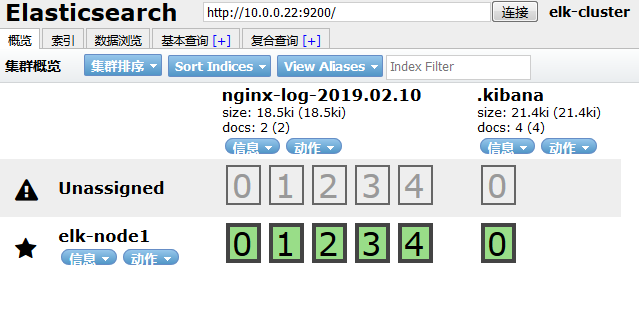

Visit http://10.0.0.22/nginxweb/; the new records then show up in the database.

Nginx logs as written to ES
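The check can also be done from the command line. The sketch below sends one request and then lists the newest rows; `show_latest_rows` and `LATEST_ROWS_SQL` are hypothetical names, and the five-second pause is an arbitrary guess at how long Logstash needs to pick up the new log line.

```shell
#!/usr/bin/env bash
# Sketch only: the helper and variable names are hypothetical.

# Newest five rows, using the columns defined in step 3.
LATEST_ROWS_SQL="
SELECT time, client_ip, status, req_time, AgentVersion
FROM nginx_log
ORDER BY time DESC
LIMIT 5;"

# Send one request to Nginx, wait briefly, then query MySQL on linux-elk2.
show_latest_rows() {
    curl -s -o /dev/null http://10.0.0.22/nginxweb/
    sleep 5   # arbitrary delay; adjust to your pipeline
    mysql -u elk -h 10.0.0.33 -p elk -e "$LATEST_ROWS_SQL"
}
```

If `show_latest_rows` returns no rows, the Logstash log is the first place to look for JDBC connection or driver errors.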
