[Paper Reading] HPT: Scaling Proprioceptive-Visual Learning with Heterogeneous Pre-trained Transformers

Scaling Proprioceptive-Visual Learning with Heterogeneous Pre-trained Transformers

Date: 2024.09

Institutions: MIT & Meta

Project page: https://liruiw.github.io/hpt/

TL;DR

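Generalizing across embodiments (e.g., differences in placement and sensor configuration) and across tasks remains a hard problem in embodied AI. This paper proposes HPT (Heterogeneous Pre-trained Transformers), which pre-trains the trunk of a policy network and shares those parameters across embodiments and tasks. Experiments show an improvement of about 20% in both real-world and simulated settings.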

Method

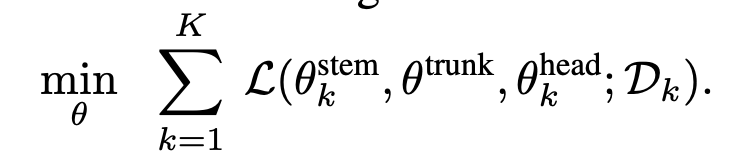

A policy network is split into three parts: stem, trunk, and head.

Stem

The stem consists of a proprioceptive tokenizer and a vision tokenizer (ResNet backbone). Together they account for only a small share of the total parameters.

Trunk

The number of trunk parameters is fixed, independent of the number of embodiments and tasks.

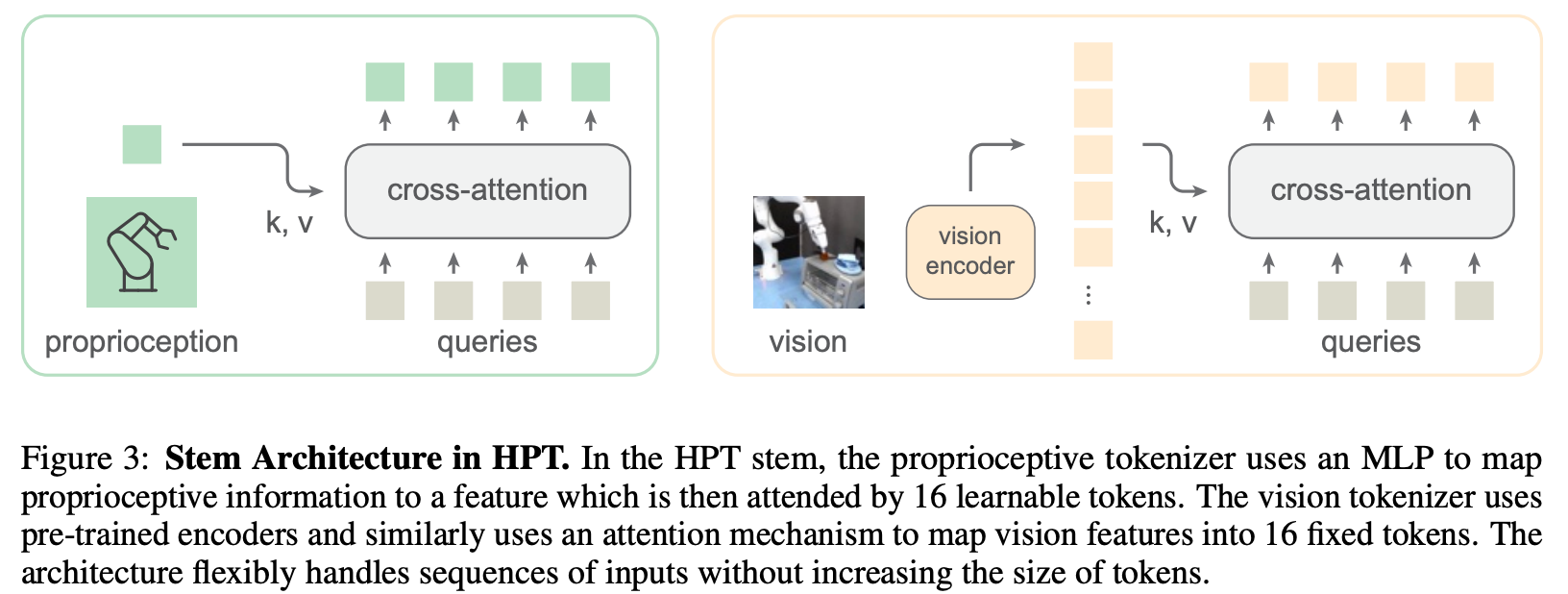

Loss

In the pre-training stage, only the trunk parameters are updated at every iteration, and the stems and heads for each heterogeneous embodiment and task are updated based on the training batch sampling.

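The trunk is the main body of pre-training. Its input and output sequence lengths are fixed, and the embodiment and task determine which stem and head are attached to it.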
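One common way to feed a fixed-length sequence into a shared trunk, even when each sensor produces a different number of features, is attention pooling against a fixed set of query vectors. The following is a minimal pure-Python sketch of that idea, not the authors' implementation: the sizes `DIM` and `NUM_TOKENS`, the random queries (which would be learned in practice), and the helper names are all assumptions made for illustration.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(features, queries):
    """Map N feature vectors to len(queries) tokens via dot-product attention."""
    dim = len(queries[0])
    tokens = []
    for q in queries:
        # Scaled dot-product score between this query and every feature.
        scores = [sum(qi * fi for qi, fi in zip(q, f)) / math.sqrt(dim)
                  for f in features]
        weights = softmax(scores)
        # Each output token is a weighted average of the input features.
        tokens.append([sum(w * f[i] for w, f in zip(weights, features))
                       for i in range(dim)])
    return tokens


random.seed(0)
DIM, NUM_TOKENS = 8, 4  # hypothetical sizes, chosen only for the demo

# Stand-in for learned query vectors (random here).
queries = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(NUM_TOKENS)]


def random_features(n):
    """Fake stem features: n vectors of size DIM."""
    return [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(n)]


tokens_a = attention_pool(random_features(49), queries)  # e.g. a 7x7 feature map
tokens_b = attention_pool(random_features(3), queries)   # e.g. a short proprio history
print(len(tokens_a), len(tokens_b))
```

Whatever the number of input features, the output always has `NUM_TOKENS` tokens, which is what lets a single trunk serve stems with very different inputs.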

Head

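When training on a mixture of datasets, only the trunk is updated on every sample. Whether a given head or stem is updated depends on which dataset the sample comes from.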
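The update rule can be illustrated with a toy loop that only counts which parameter groups would receive a gradient step. This is a sketch under stated assumptions: each batch is drawn from a single dataset, sampling is uniform, and the `Module` class and dataset names (`sim_arm`, `real_arm`, `mobile_base`) are hypothetical placeholders rather than anything from the paper.

```python
import random


class Module:
    """Stand-in for a parameter group; counts how often it gets an update."""

    def __init__(self, name):
        self.name = name
        self.updates = 0

    def step(self):
        self.updates += 1


def pretrain(datasets, num_iters, seed=0):
    rng = random.Random(seed)
    trunk = Module("trunk")                               # shared by everyone
    stems = {d: Module(f"stem/{d}") for d in datasets}    # one per dataset
    heads = {d: Module(f"head/{d}") for d in datasets}    # one per dataset
    for _ in range(num_iters):
        d = rng.choice(datasets)  # assumption: one dataset per batch, uniform
        # Forward pass would be stems[d] -> trunk -> heads[d];
        # the backward pass therefore touches exactly these three groups.
        for module in (stems[d], trunk, heads[d]):
            module.step()
    return trunk, stems, heads


datasets = ["sim_arm", "real_arm", "mobile_base"]  # hypothetical names
trunk, stems, heads = pretrain(datasets, 300)
print("trunk:", trunk.updates)
print("stems:", {d: s.updates for d, s in stems.items()})
```

The trunk's counter equals the number of iterations, while each stem and head only accumulates updates for the batches sampled from its own dataset.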

The head takes as input the pooled feature of the trunk and outputs a normalized action trajectory. The policy head is reinitialized for transferring to a new embodiment.
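Since the head outputs a normalized action trajectory, actions have to be normalized for training and mapped back to robot units at execution time. The notes do not say which normalization is used, so the sketch below assumes simple per-dimension mean/standard-deviation statistics computed per dataset; the function names and the toy action values are illustrative only.

```python
from statistics import mean, pstdev


def fit_stats(actions):
    """Per-dimension mean and std over a dataset of action vectors."""
    dims = list(zip(*actions))
    mu = [mean(d) for d in dims]
    # Guard against zero variance in a constant action dimension.
    sigma = [max(pstdev(d), 1e-6) for d in dims]
    return mu, sigma


def normalize(action, mu, sigma):
    """Map a raw action into the normalized space the head is trained on."""
    return [(a - m) / s for a, m, s in zip(action, mu, sigma)]


def denormalize(action, mu, sigma):
    """Map a predicted normalized action back to robot units."""
    return [a * s + m for a, m, s in zip(action, mu, sigma)]


# Toy 2-D actions for one hypothetical embodiment.
dataset_actions = [[0.10, -1.0], [0.30, 0.0], [0.50, 1.0]]
mu, sigma = fit_stats(dataset_actions)
normalized = [normalize(a, mu, sigma) for a in dataset_actions]
recovered = [denormalize(a, mu, sigma) for a in normalized]
print(normalized)
print(recovered)
```

Keeping the statistics per dataset means a new embodiment only needs its own `mu` and `sigma` alongside its freshly initialized head.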

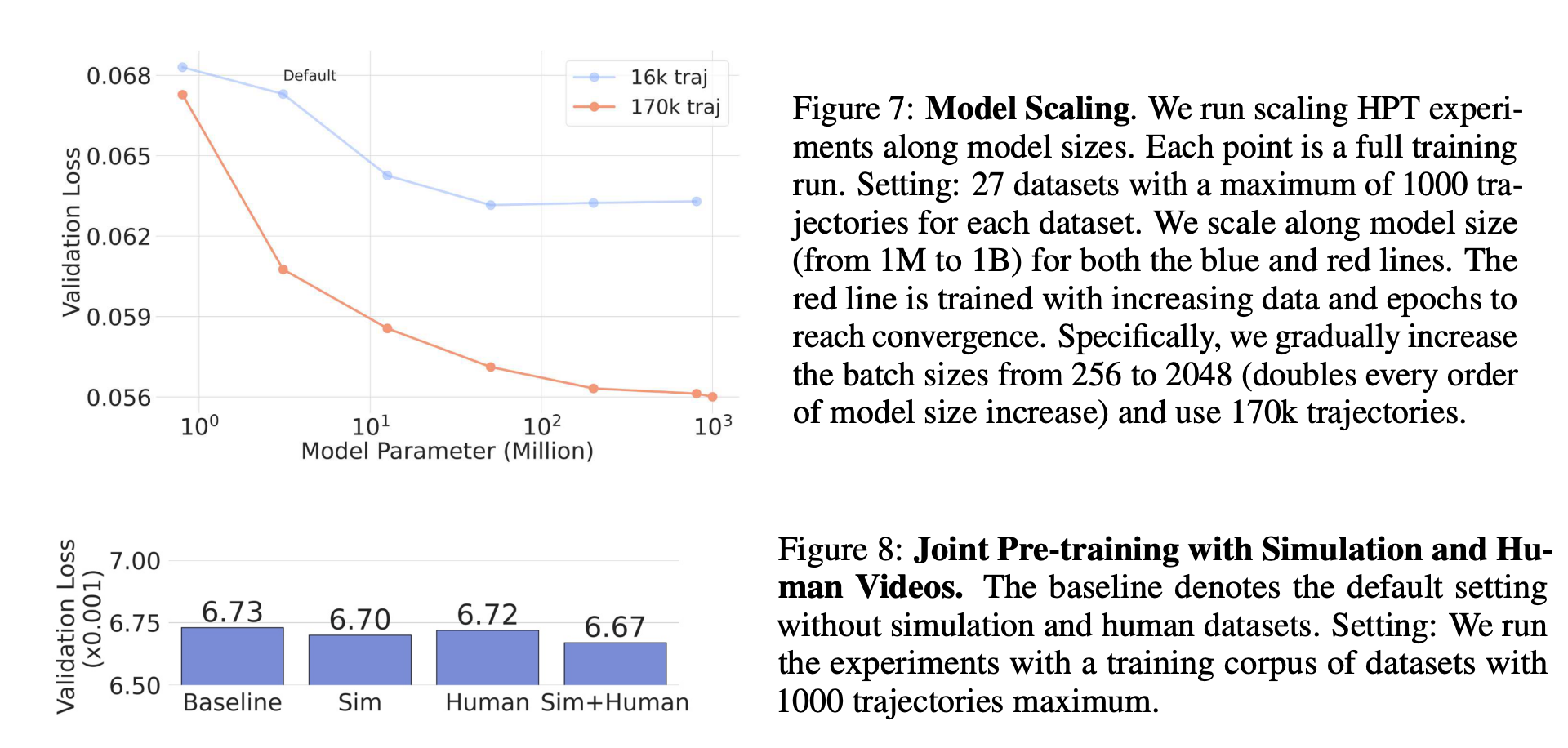

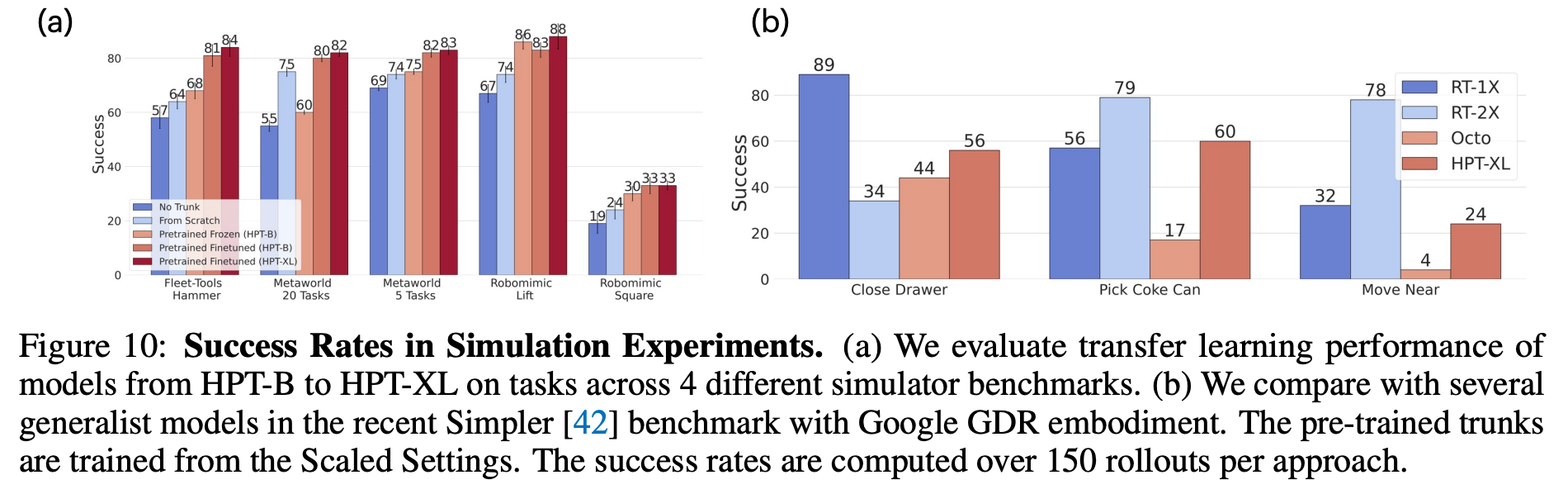

Experiment

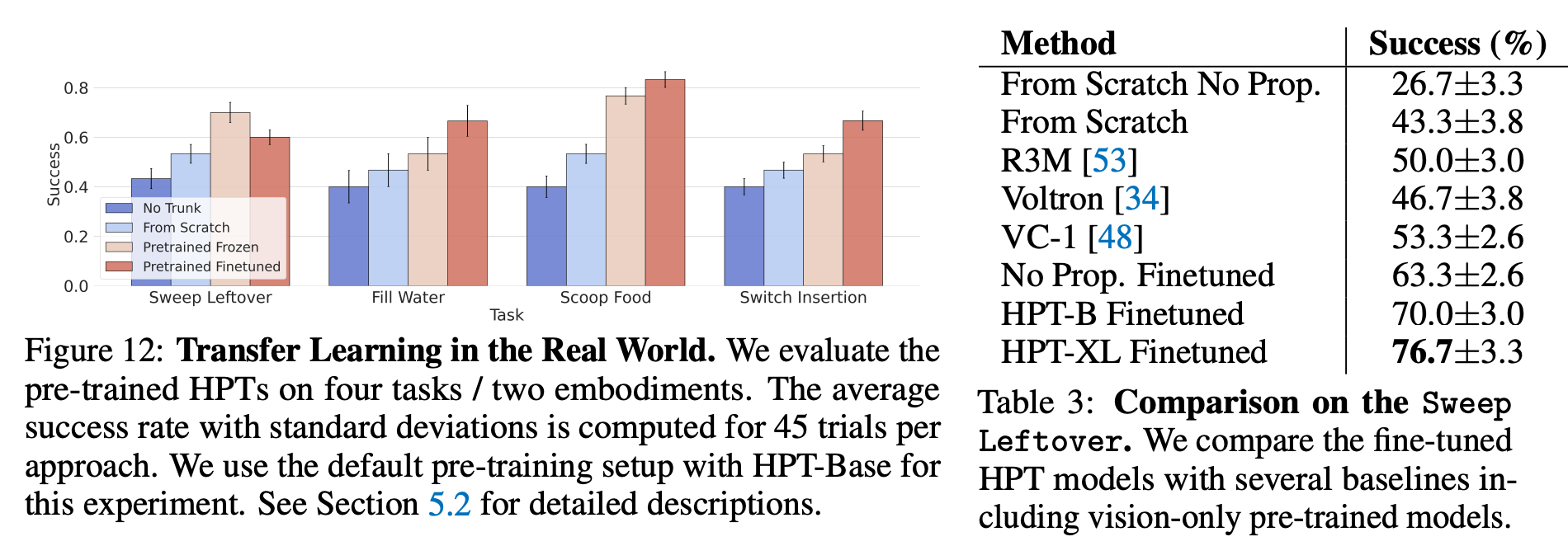

Judging from the figure, Finetuned does gain more than 20% over FromScratch.

Training Resources

The compute resources for these pre-training experiments range from 8 V-100s to 128 V-100s and the training time spans from half a day to 1 month. The total dataset disk size is around 10Tb and the RAM memory requirement is below 50Gb.
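To get a feel for the scale, the quoted ranges can be turned into rough GPU-hour bounds. This is only back-of-the-envelope arithmetic: it assumes a 30-day month and that the smallest GPU count goes with the shortest run and the largest with the longest, which the quote does not actually state.

```python
HOURS_PER_DAY = 24
DAYS_PER_MONTH = 30  # assumption: "1 month" taken as 30 days


def gpu_hours(num_gpus, days):
    """Total GPU-hours for a run on num_gpus GPUs lasting the given days."""
    return num_gpus * days * HOURS_PER_DAY


# Assumed pairing of the extremes; the source only gives the two ranges.
low = gpu_hours(8, 0.5)               # 8 V100s for half a day
high = gpu_hours(128, DAYS_PER_MONTH) # 128 V100s for one month
print(f"roughly {low:.0f} to {high:.0f} V100 GPU-hours")
```

Under these assumptions the experiments span about three orders of magnitude in compute.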

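Visualization of Results

Summary and Thoughts

Heterogeneity here refers to the diversity of robot types, tasks, and environments. The core goal is to solve the generalization problem.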

Related Links

https://zhuanlan.zhihu.com/p/899491255

https://zhuanlan.zhihu.com/p/845325482

This article comes from cnblogs (博客园), author: fariver. When reposting, please cite the original link: https://www.cnblogs.com/fariver/p/18452260