[tensorflow] Implementing the official TensorFlow example and a traditional BP network -- the importance of weight initialization

First, prepare the MNIST dataset (downloaded from the web) and input.py.

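The contents of input.py are as follows (test_soft.py loads this module with `import input_data`, so it presumably has to be saved as input_data.py):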

    # -*- coding: utf-8 -*-
    # Copyright 2015 The TensorFlow Authors. All Rights Reserved.
    #
    # Licensed under the Apache License, Version 2.0 (the "License");
    # you may not use this file except in compliance with the License.
    # You may obtain a copy of the License at
    #
    #     http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.
    # ==============================================================================
    """Functions for downloading and reading MNIST data."""
    from __future__ import absolute_import
    from __future__ import division
    from __future__ import print_function

    import gzip
    import os
    import tempfile

    import numpy
    from six.moves import urllib
    from six.moves import xrange  # pylint: disable=redefined-builtin
    import tensorflow as tf
    from tensorflow.contrib.learn.python.learn.datasets.mnist import read_data_sets

test_soft.py, the official example, is as follows (link):

    # -*- coding: utf-8 -*-
    """
    Created on Wed Nov 22 13:24:22 2017

    @author: fc
    """
    #from tensorflow.examples.tutorials.mnist import input_data
    #mnist = input_data.read_data_sets('MNIST_data', one_hot=True)
    batchsize=100
    import tensorflow as tf
    import input_data

    def train():
        mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)
        sess = tf.InteractiveSession()
        x = tf.placeholder("float", [None, 784],name='x-input')
        y_ = tf.placeholder("float", [None,10],name='y-input')
        w = tf.Variable(tf.zeros([784,10]))
        b = tf.Variable(tf.zeros([10]))
        y = tf.nn.softmax(tf.matmul(x,w) + b)
        cross_entropy = -tf.reduce_sum(y_*tf.log(y))
        train_step = tf.train.GradientDescentOptimizer(0.01).minimize(cross_entropy)
        init = tf.global_variables_initializer()
        sess.run(init)
        for i in range(1000):
            batch_xs, batch_ys = mnist.train.next_batch(batchsize)
            sess.run(train_step, feed_dict={x: batch_xs, y_: batch_ys})
        correct_prediction = tf.equal(tf.argmax(y,1), tf.argmax(y_,1))
        accuracy = tf.reduce_mean(tf.cast(correct_prediction, "float"))
        print (sess.run(accuracy, feed_dict={x: mnist.test.images, y_: mnist.test.labels}))

    if __name__ == '__main__':
        train()

Note that in the following line, the argument passed in is the actual location of the MNIST data on your machine.

mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)
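Before running the script, it can help to confirm that the directory passed to `read_data_sets` really contains the data. The sketch below is only an illustration: the helper name `missing_mnist_files` is hypothetical, and it assumes the four standard gzip archives of the MNIST distribution were downloaded unmodified into `MNIST_data/`.

```python
import os

# Archive names used by the standard MNIST distribution.
MNIST_FILES = [
    "train-images-idx3-ubyte.gz",
    "train-labels-idx1-ubyte.gz",
    "t10k-images-idx3-ubyte.gz",
    "t10k-labels-idx1-ubyte.gz",
]


def missing_mnist_files(data_dir):
    """Return the expected MNIST archives that are not present in data_dir.

    Hypothetical helper for illustration; it only checks that the files exist.
    """
    return [
        name
        for name in MNIST_FILES
        if not os.path.isfile(os.path.join(data_dir, name))
    ]


# "MNIST_data/" is the directory used in the scripts of this post.
missing = missing_mnist_files("MNIST_data/")
if missing:
    print("Missing files:", ", ".join(missing))
else:
    print("All four MNIST archives were found.")
```

If any file is reported missing, adjust the path argument of `read_data_sets` or move the downloaded archives into that directory.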

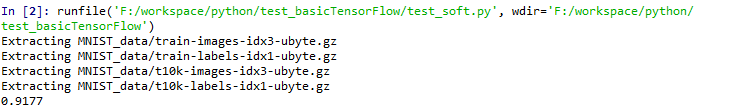

The output is:

test_nn.py, a traditional BP (backpropagation) neural network:

    # -*- coding: utf-8 -*-
    """
    Created on Wed Dec 13 23:11:09 2017

    @author: fc
    """
    #from tensorflow.examples.tutorials.mnist import input_data
    #mnist = input_data.read_data_sets('MNIST_data', one_hot=True)
    batchsize=64
    import tensorflow as tf
    from tensorflow.examples.tutorials.mnist import input_data
    mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)

    def train(init_bias):
        sess = tf.InteractiveSession()
        #------------------------- build the network structure -------------------------
        x = tf.placeholder("float", [None, 784],name='x-input')
        y_ = tf.placeholder("float", [None,10],name='y-input')
        # Hidden layer: 784 inputs -> 100 sigmoid units
        W1 = tf.Variable(tf.random_uniform([784,100],-0.5+init_bias,0.5+init_bias))
        b1 = tf.Variable(tf.random_uniform([100],-0.5+init_bias,0.5+init_bias))
        u1 = tf.matmul(x,W1) + b1
        y1 = tf.nn.sigmoid(u1)
        # y1=u1
        # Output layer: 100 hidden units -> 10 sigmoid units
        W2 = tf.Variable(tf.random_uniform([100,10],-0.5+init_bias,0.5+init_bias))
        b2 = tf.Variable(tf.random_uniform([10],-0.5+init_bias,0.5+init_bias))
        y = tf.nn.sigmoid(tf.matmul(y1,W2) + b2)
        #------------------------- set up how the network is trained -------------------------
        mse = tf.reduce_sum(tf.square(y-y_))  # squared-error loss
        # train_step = tf.train.GradientDescentOptimizer(0.02).minimize(mse)
        train_step = tf.train.AdamOptimizer(0.001).minimize(mse)
        correct_prediction = tf.equal(tf.argmax(y,1), tf.argmax(y_,1))
        accuracy = tf.reduce_mean(tf.cast(correct_prediction, "float"))
        init = tf.global_variables_initializer()
        sess.run(init)
        #------------------------- start training -------------------------
        for i in range(1001):
            batch_xs, batch_ys = mnist.train.next_batch(batchsize)
            sess.run(train_step, feed_dict={x: batch_xs, y_: batch_ys})
        print ('Weight init range [%.1f,%.1f], accuracy after 1000 training steps' %(init_bias-0.5,init_bias+0.5),sess.run(accuracy, feed_dict={x: mnist.test.images, y_: mnist.test.labels}))

    if __name__ == '__main__':
        init_bias=-0.6  # offset applied to the weight initialization range
        for i in range(11):
            init_bias+=0.1
            train(init_bias)

When I first wrote this program, the weights were initialized with:

W1 = tf.Variable(tf.random_uniform([784,100],0,1.0))

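With the weights uniformly distributed in (0.0, 1.0), training gave extremely poor results: an accuracy of about 0.1 (10%), which is no better than random guessing among ten classes.

After checking repeatedly, I confirmed that this was the problem. Changing the distribution to (-0.5, 0.5) improved the accuracy dramatically.

I have not yet worked out why.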
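One plausible explanation, offered here as an assumption rather than something the post verifies, is sigmoid saturation. With all-positive weights and non-negative pixel values, every hidden pre-activation becomes a large positive number, the sigmoid outputs sit at almost exactly 1, and the derivative `y1 * (1 - y1)` that backpropagation multiplies by is practically zero. The NumPy sketch below illustrates this for the first layer only; the input batch is synthetic (random sparse values standing in for MNIST images), and the function name `hidden_layer_stats` is hypothetical.

```python
import numpy as np


def sigmoid(u):
    return 1.0 / (1.0 + np.exp(-u))


def hidden_layer_stats(low, high, seed=0):
    """Return (mean pre-activation, mean sigmoid derivative) of the hidden layer.

    Weights and biases are drawn from uniform(low, high), mirroring the
    tf.random_uniform calls in test_nn.py.
    """
    rng = np.random.default_rng(seed)
    # Synthetic stand-in for a batch of MNIST images (an assumption): 64 images,
    # 784 pixels each, about 20% of the pixels non-zero with values in [0, 1].
    mask = rng.uniform(size=(64, 784)) < 0.2
    x = rng.uniform(0.0, 1.0, size=(64, 784)) * mask
    w1 = rng.uniform(low, high, size=(784, 100))
    b1 = rng.uniform(low, high, size=100)
    u1 = x @ w1 + b1
    y1 = sigmoid(u1)
    # Derivative of the sigmoid; backpropagated gradients are scaled by this.
    grad = y1 * (1.0 - y1)
    return u1.mean(), grad.mean()


stats_01 = hidden_layer_stats(0.0, 1.0)
stats_centered = hidden_layer_stats(-0.5, 0.5)
print("range (0.0, 1.0):  mean u1 = %.2f, mean sigmoid' = %.3g" % stats_01)
print("range (-0.5, 0.5): mean u1 = %.2f, mean sigmoid' = %.3g" % stats_centered)
```

If the same effect holds on the real data, the hidden outputs are all close to 1 regardless of the input image, so the output layer receives nearly identical inputs for every digit and the gradients are too small for 1000 steps to repair it. That would be consistent with an accuracy stuck near 10%.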

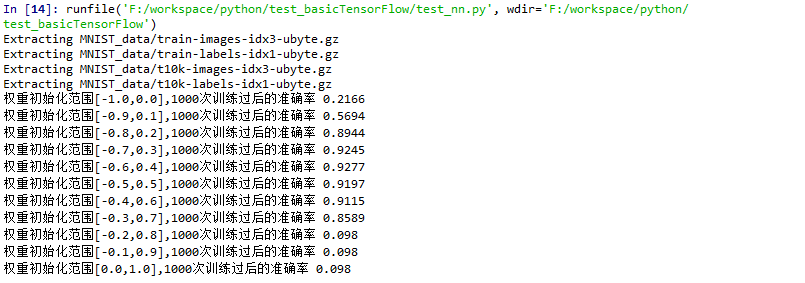

The output of test_nn.py is as follows:

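This shows that the way the weights are initialized (the initialization range is a hyperparameter; the weights themselves are learned parameters) has a very large effect on the network.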
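To make the role of the offset concrete, here is a short worked calculation. It is a sketch under stated assumptions: the input $x$ is treated as fixed, the weights $w_{ij}$ and biases $b_j$ are independent draws from the uniform distribution on $(c-0.5,\ c+0.5)$, and $c$ denotes the `init_bias` offset from test_nn.py. The symbols $u_j$ and $c$ are introduced here for illustration. Each draw has mean $c$ and variance $1/12$, so for the hidden pre-activation $u_j = \sum_{i=1}^{784} x_i w_{ij} + b_j$:

$$
\mathbb{E}[u_j] = c\left(\sum_{i=1}^{784} x_i + 1\right),
\qquad
\operatorname{Var}[u_j] = \frac{1}{12}\left(\sum_{i=1}^{784} x_i^2 + 1\right).
$$

The variance does not depend on $c$, but the mean grows linearly with it. For $c = 0$, the range $(-0.5, 0.5)$, the pre-activations are centred on zero. For $c = 0.5$, the range $(0, 1)$, the mean is half the sum of all pixel values plus one half, which for an image with many bright pixels is far into the flat region of the sigmoid.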