My First Neural Network

```python
import numpy as np


def sigmoid(x):
    # Activation function: f(x) = 1 / (1 + e^(-x))
    return 1 / (1 + np.exp(-x))


def deri_sigmoid(x):
    # Derivative of the activation: f'(x) = f(x) * (1 - f(x))
    k = sigmoid(x)
    return k * (1 - k)


def mse_loss(y_true, y_pred):
    # Mean squared error
    return ((y_true - y_pred) ** 2).mean()


class OurNeuralNetwork:
    def __init__(self, N):
        self.N = N  # number of hidden layers (2 neurons each), N >= 1
        # Hidden-layer parameters: Z = W*X + B, A = sigmoid(Z)
        self.W = [np.random.randn(2, 2) for _ in range(N)]
        self.B = [np.random.randn(2, 1) for _ in range(N)]
        self.A = [None] * N  # activations, filled in by feedforward
        self.Z = [None] * N  # pre-activations, filled in by feedforward
        self.dW = [np.zeros((2, 2)) for _ in range(N)]
        self.dB = [np.zeros((2, 1)) for _ in range(N)]
        self.dA = [None] * N
        self.dZ = [None] * N
        # Output-layer parameters
        self.WO = np.random.rand(1, 2)
        self.BO = np.random.rand(1, 1)
        self.AO = None
        self.ZO = None
        self.dWO = np.zeros((1, 2))
        self.dBO = np.zeros((1, 1))
        self.dAO = None
        self.dZO = None

    def feedforward(self, x):
        # Forward pass; x must have shape (2, m), one sample per column
        for i in range(self.N):
            if i == 0:
                self.Z[i] = np.dot(self.W[i], x) + self.B[i]  # Z[0] = W[0]*X + B[0]
            else:
                self.Z[i] = np.dot(self.W[i], self.A[i - 1]) + self.B[i]  # Z[i] = W[i]*A[i-1] + B[i]
            self.A[i] = sigmoid(self.Z[i])
        # Output layer
        self.ZO = np.dot(self.WO, self.A[self.N - 1]) + self.BO
        self.AO = sigmoid(self.ZO)
        return self.AO

    def train(self, data, all_y_trues):
        learn_rate = 0.1
        times = 5000
        m = data.shape[1]  # m is the number of samples
        for time in range(times):
            # 1. Forward pass
            self.feedforward(data)

            # 2. Output-layer error and gradients
            #    (the sigmoid derivative is evaluated at ZO, not at AO)
            self.dAO = 2 * (self.AO - all_y_trues)
            self.dZO = deri_sigmoid(self.ZO) * self.dAO
            self.dWO = np.dot(self.dZO, self.A[self.N - 1].T) / m
            self.dBO = np.sum(self.dZO, axis=1, keepdims=True) / m

            # 3. Backward pass through the hidden layers, last to first
            for i in range(self.N - 1, -1, -1):
                if i == self.N - 1:
                    # Last hidden layer: propagate from the output layer
                    self.dA[i] = np.dot(self.WO.T, self.dZO)
                else:
                    # Otherwise: propagate from the next hidden layer
                    self.dA[i] = np.dot(self.W[i + 1].T, self.dZ[i + 1])
                self.dZ[i] = deri_sigmoid(self.Z[i]) * self.dA[i]
                prev_A = data if i == 0 else self.A[i - 1]
                self.dW[i] = np.dot(self.dZ[i], prev_A.T) / m
                self.dB[i] = np.sum(self.dZ[i], axis=1, keepdims=True) / m

            # 4. Update all parameters only after every gradient is computed,
            #    so that backpropagation uses the pre-update weights
            self.WO -= learn_rate * self.dWO
            self.BO -= learn_rate * self.dBO
            for i in range(self.N):
                self.W[i] -= learn_rate * self.dW[i]
                self.B[i] -= learn_rate * self.dB[i]

            if time % 10 == 0:
                # feedforward already handles a whole (2, m) batch
                y_preds = self.feedforward(data)
                loss = mse_loss(all_y_trues, y_preds)
                print("time %d loss: %0.3f" % (time, loss))


# Define dataset
data = np.array([
    [-2, -1],   # Alice
    [25, 6],    # Bob
    [17, 4],    # Charlie
    [-15, -6],  # Diana
])
all_y_trues = np.array([
    1,  # Alice
    0,  # Bob
    0,  # Charlie
    1,  # Diana
])

network = OurNeuralNetwork(2)
# Note the transpose: samples become columns, labels become a (1, m) row
network.train(data.T, all_y_trues.reshape(1, -1))

emily = np.array([[-7], [-3]])
frank = np.array([[20], [2]])
print("Emily: %.3f" % network.feedforward(emily).item())  # close to 1 - F
print("Frank: %.3f" % network.feedforward(frank).item())  # close to 0 - M
```
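A finite-difference check is a common way to sanity-check backpropagation formulas such as the output-layer gradient. The sketch below is an illustration under simplifying assumptions, not part of the original network: it uses a single sigmoid layer with MSE loss, evaluates the sigmoid derivative at the pre-activation `Z`, and compares the analytic gradient with a numerical one. The helper names `loss_fn`, `analytic_grad_W` and `numeric_grad_W` and the random test data are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1 / (1 + np.exp(-x))


def deri_sigmoid(x):
    s = sigmoid(x)
    return s * (1 - s)


def loss_fn(W, b, X, y):
    # MSE of a single sigmoid layer: A = sigmoid(W X + b)
    A = sigmoid(W @ X + b)
    return ((A - y) ** 2).mean()


def analytic_grad_W(W, b, X, y):
    # Same formulas as the output layer: dA = 2(A - y), dZ = f'(Z) * dA, dW = dZ X^T / m
    m = X.shape[1]
    Z = W @ X + b
    A = sigmoid(Z)
    dA = 2 * (A - y)
    dZ = deri_sigmoid(Z) * dA
    return dZ @ X.T / m


def numeric_grad_W(W, b, X, y, eps=1e-6):
    # Central finite differences, one weight at a time
    grad = np.zeros_like(W)
    for idx in np.ndindex(*W.shape):
        W_plus, W_minus = W.copy(), W.copy()
        W_plus[idx] += eps
        W_minus[idx] -= eps
        grad[idx] = (loss_fn(W_plus, b, X, y) - loss_fn(W_minus, b, X, y)) / (2 * eps)
    return grad


rng = np.random.default_rng(0)        # hypothetical test data
X = rng.normal(size=(2, 4))           # 2 features, 4 samples as columns
y = np.array([[1.0, 0.0, 0.0, 1.0]])  # labels as a (1, 4) row
W = rng.normal(size=(1, 2))
b = rng.normal(size=(1, 1))

max_diff = np.abs(analytic_grad_W(W, b, X, y) - numeric_grad_W(W, b, X, y)).max()
print("largest difference between analytic and numeric gradient:", max_diff)
```

If the two gradients agree to several decimal places, the formula is very likely right. A large gap usually points to a derivative evaluated at the wrong quantity or a missing `1 / m` factor.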

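Training output (last part only; exact numbers vary from run to run because the weights are initialized randomly):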

```
time 4710 loss: 0.241
time 4720 loss: 0.241
time 4730 loss: 0.241
time 4740 loss: 0.241
time 4750 loss: 0.241
time 4760 loss: 0.241
time 4770 loss: 0.241
time 4780 loss: 0.241
time 4790 loss: 0.241
time 4800 loss: 0.241
time 4810 loss: 0.241
time 4820 loss: 0.241
time 4830 loss: 0.241
time 4840 loss: 0.241
time 4850 loss: 0.241
time 4860 loss: 0.241
time 4870 loss: 0.241
time 4880 loss: 0.241
time 4890 loss: 0.241
time 4900 loss: 0.241
time 4910 loss: 0.241
time 4920 loss: 0.241
time 4930 loss: 0.241
time 4940 loss: 0.241
time 4950 loss: 0.241
time 4960 loss: 0.241
time 4970 loss: 0.241
time 4980 loss: 0.241
time 4990 loss: 0.241
Emily: 0.983
Frank: 0.017
```
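When a printed loss looks inconsistent with the individual predictions, the shapes of the arrays passed to the forward pass are worth checking. The network expects samples as columns of shape `(2, m)`, as the `emily` and `frank` arrays are. The sketch below uses made-up weights and bias (hypothetical values, not taken from the trained network) to show what NumPy broadcasting does when a 1-D vector of shape `(2,)` is passed instead.

```python
import numpy as np

W = np.array([[1.0, 2.0], [3.0, 4.0]])  # (2, 2) weights, hypothetical values
B = np.array([[10.0], [20.0]])          # (2, 1) bias, hypothetical values

x_col = np.array([[1.0], [1.0]])        # column vector, shape (2, 1)
x_flat = np.array([1.0, 1.0])           # 1-D vector, shape (2,)

z_col = np.dot(W, x_col) + B            # (2, 1) + (2, 1) stays (2, 1)
z_flat = np.dot(W, x_flat) + B          # (2,) + (2, 1) broadcasts to (2, 2)

print(z_col.shape)   # (2, 1)
print(z_flat.shape)  # (2, 2)
print(z_flat)        # every bias is added to every neuron's weighted sum
```

The `(2, 2)` result raises no error, so a mistake of this kind only shows up as odd numbers further down the line. Reshaping a 1-D sample with `x.reshape(-1, 1)` before the forward pass avoids it.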

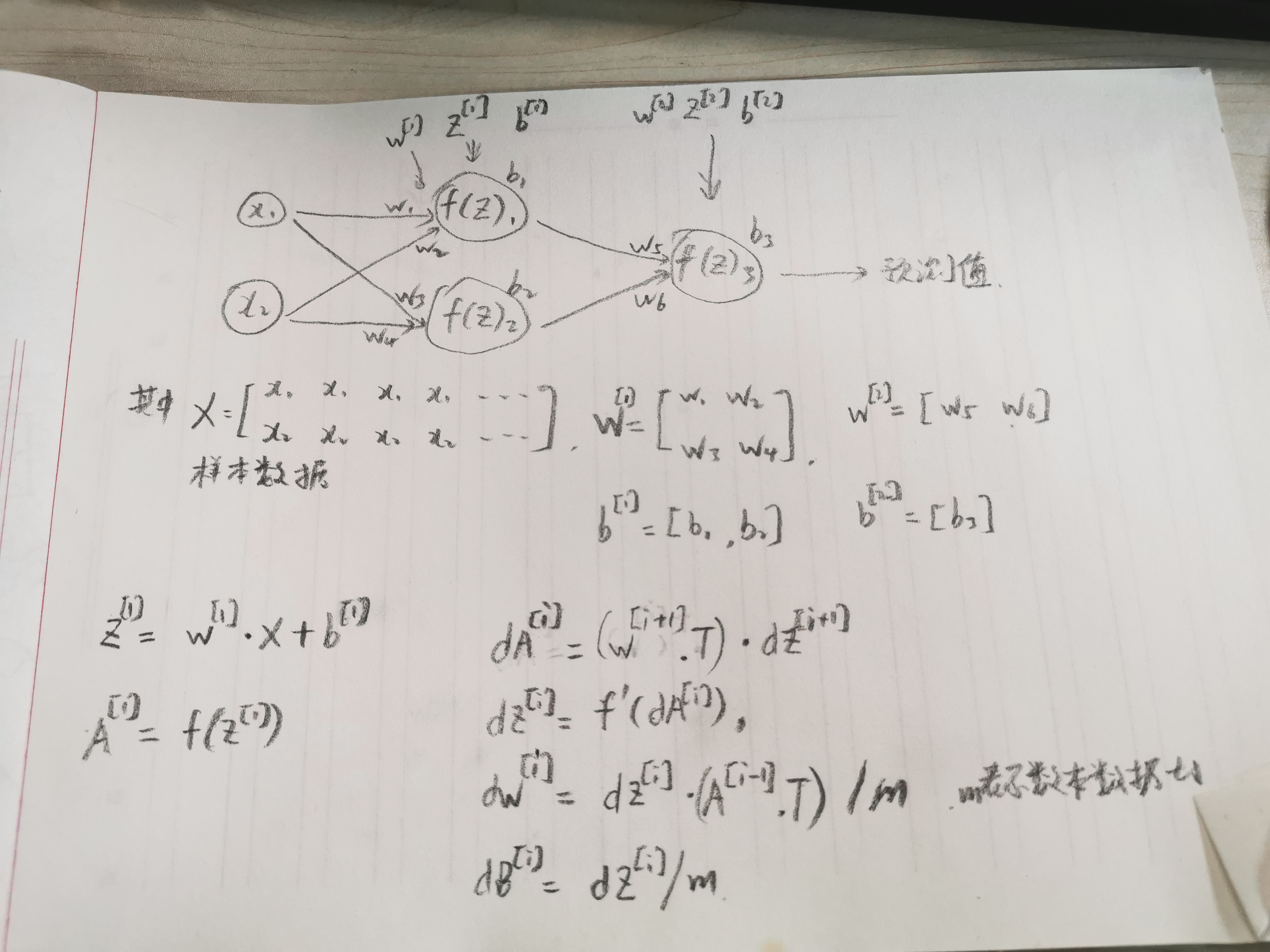

Diagram of the neural network:
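As a complement to the diagram, the same network can be summarized in equations. This is a restatement of what the code computes rather than a separate derivation. Two notational assumptions are made here: hidden layers are numbered $1, \dots, N$ with $A^{[0]} = X$ (the code is 0-based), and $\odot$ denotes element-wise multiplication. As in the code, the $1/m$ factor is applied in $dW$ and $dB$ rather than in $dZ$.

$$
\begin{aligned}
Z^{[i]} &= W^{[i]} A^{[i-1]} + B^{[i]}, \qquad A^{[i]} = \sigma\!\left(Z^{[i]}\right), \qquad i = 1, \dots, N \\
Z^{O} &= W^{O} A^{[N]} + B^{O}, \qquad \hat{y} = A^{O} = \sigma\!\left(Z^{O}\right) \\
L &= \frac{1}{m} \sum_{j=1}^{m} \left(y_j - \hat{y}_j\right)^2 \\
dZ^{O} &= 2\left(A^{O} - y\right) \odot \sigma'\!\left(Z^{O}\right), \qquad
dW^{O} = \frac{1}{m}\, dZ^{O} \left(A^{[N]}\right)^{T}, \qquad
dB^{O} = \frac{1}{m} \sum_{j=1}^{m} dZ^{O}_{:,j} \\
dA^{[N]} &= \left(W^{O}\right)^{T} dZ^{O}, \qquad
dA^{[i]} = \left(W^{[i+1]}\right)^{T} dZ^{[i+1]} \quad (i < N) \\
dZ^{[i]} &= dA^{[i]} \odot \sigma'\!\left(Z^{[i]}\right), \qquad
dW^{[i]} = \frac{1}{m}\, dZ^{[i]} \left(A^{[i-1]}\right)^{T}, \qquad
dB^{[i]} = \frac{1}{m} \sum_{j=1}^{m} dZ^{[i]}_{:,j}
\end{aligned}
$$

Each parameter is then updated by gradient descent, for example $W^{[i]} \leftarrow W^{[i]} - \eta\, dW^{[i]}$, with learning rate $\eta = 0.1$ in the code.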

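Life is like the pursuit of immortality: it is not achieved in a day. When will I reach the summit? When I stand before the highest good, which is like water.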