Box


https://wwc.lanzoul.com/b03j3017e

Password: 3bq8

The environment is missing torch==1.10.2; you can switch to the Tsinghua mirror to download it:

pip install -i https://pypi.tuna.tsinghua.edu.cn/simple torch==1.10.2
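After installing, a quick import check can confirm that the interpreter you are actually using sees torch. The sketch below is an illustration, not part of the original project. The helper names installed_version and has_version are hypothetical, and the check assumes the package exposes a __version__ attribute (torch does). It drops a local build tag such as "+cpu" before comparing, because some torch builds report one.

```python
import importlib


def installed_version(module_name):
    """Import `module_name` and return its __version__, or None if it cannot be imported."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        return None
    return getattr(module, "__version__", None)


def has_version(module_name, expected):
    """True if the importable module reports `expected` (ignoring a "+local" build tag)."""
    version = installed_version(module_name)
    if version is None:
        return False
    # Drop a local build tag such as "+cpu" or "+cu102" before comparing.
    return version.split("+", 1)[0] == expected
```

For example, has_version("torch", "1.10.2") should return True once the install above has succeeded in the active environment.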

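Create a Python 3.6 environment in Anaconda

- Create: conda create -n py36 python=3.6
- If creation fails, run conda config --show-sources to see which configuration files (and channel settings) conda is using.
- Activate the py36 environment: conda activate py36
- Deactivate: conda deactivate
- Delete the environment: conda remove -n py36 --all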
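To confirm that the activated environment really is the Python 3.6 one (and not the base interpreter), a small check like the following can be run with python. This is a minimal sketch; is_expected_python is a hypothetical helper name.

```python
import sys


def is_expected_python(expected=(3, 6), version_info=None):
    """True if the interpreter's major.minor equals `expected` (default 3.6)."""
    if version_info is None:
        version_info = sys.version_info
    return tuple(version_info[:2]) == tuple(expected)


if __name__ == "__main__":
    # sys.executable should point inside the py36 environment directory.
    print(sys.executable)
    print("Python 3.6:", is_expected_python())
```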

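Tsinghua mirror:

- Switch pip to the Tsinghua mirror (upgrade pip first; the pip config subcommand requires pip 10 or later):
  - pip install pip -U
  - pip config set global.index-url https://pypi.tuna.tsinghua.edu.cn/simple

Upgrading pip:

- python -m pip install --upgrade pip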
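The install commands in this post all follow the same pattern (-i with a mirror URL, plus --trusted-host for the Douban mirror), so they can also be generated from a script. This is a minimal sketch, assuming you want to drive pip from Python; pip_install_cmd is a hypothetical helper. Calling python -m pip through sys.executable is a choice made here so that the pip belonging to the running interpreter (for example the py36 env) is used.

```python
import sys
from urllib.parse import urlparse

# Mirror URLs taken from the commands in this post.
TUNA_INDEX = "https://pypi.tuna.tsinghua.edu.cn/simple"
DOUBAN_INDEX = "https://pypi.doubanio.com/simple/"


def pip_install_cmd(requirement, index_url=TUNA_INDEX, trusted=False):
    """Return an argv list for `python -m pip install` against a mirror index."""
    cmd = [sys.executable, "-m", "pip", "install", "-i", index_url]
    if trusted:
        # --trusted-host takes a host name, not a full URL.
        cmd += ["--trusted-host", urlparse(index_url).hostname]
    cmd.append(requirement)
    return cmd
```

To actually run a command, pass it to subprocess, e.g. subprocess.check_call(pip_install_cmd("torch==1.10.2")).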

Environment

- pip install numpy==1.19.5

- pip install keras==2.1.5

- pip install pandas==1.1.5

- pip install tensorflow==1.14.0

- pip install dlib==19.7.0

- pip install opencv_python==4.5.5.64

- pip install Pillow==8.4.0

- pip install h5py==2.10.0

- pip install sklearn==0.0

- Or download from the Douban mirror (declared as a trusted host), e.g. for Pillow:

- pip install -i https://pypi.doubanio.com/simple/ --trusted-host pypi.doubanio.com pillow==8.4.0
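To check that an environment matches the pinned versions above, the output of pip freeze can be compared against them. The sketch below is an illustration under stated assumptions: PINS copies the list above (leaving out sklearn==0.0), names are compared after PEP 503-style normalization so that opencv_python and opencv-python match, and the function names are hypothetical.

```python
import re

# Versions pinned in the list above (sklearn==0.0 left out).
PINS = {
    "numpy": "1.19.5",
    "keras": "2.1.5",
    "pandas": "1.1.5",
    "tensorflow": "1.14.0",
    "dlib": "19.7.0",
    "opencv-python": "4.5.5.64",
    "Pillow": "8.4.0",
    "h5py": "2.10.0",
}


def normalize(name):
    # PEP 503 style: case-insensitive, runs of "-", "_" and "." are equivalent.
    return re.sub(r"[-_.]+", "-", name.strip()).lower()


def parse_freeze(text):
    """Map normalized project name -> version for every `name==version` line."""
    installed = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "==" not in line:
            continue  # blank lines, comments, `-e` / `name @ URL` entries
        name, _, version = line.partition("==")
        installed[normalize(name)] = version.strip()
    return installed


def mismatches(freeze_text, pins=PINS):
    """Return {name: installed_version_or_None} for every pin that is not satisfied."""
    installed = parse_freeze(freeze_text)
    return {
        name: installed.get(normalize(name))
        for name, wanted in pins.items()
        if installed.get(normalize(name)) != wanted
    }
```

In practice the freeze text can come from subprocess.check_output([sys.executable, "-m", "pip", "freeze"], universal_newlines=True); an empty result means every pin is satisfied.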

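Offline pip installation

Method ②

- On a computer with internet access, install the same version of Python (ideally on the same operating system as well, because pip downloads platform-specific wheels).
- Create a directory named site-packages.
- Inside the site-packages directory, run pip freeze >requirements.txt
  - requirements.txt now lists every package installed in that machine's Python.
- Inside the site-packages directory, run pip download -r requirements.txt to download every package in the list.
- Archive the site-packages folder and move it to the offline machine that needs these packages.
- On the offline machine, run pip install --no-index --find-links=/xxx/site-packages -r /xxx/site-packages/requirements.txt

(/xxx/ stands for the absolute path on the offline machine.)

Migration complete.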
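Before carrying the folder to the offline machine, it is worth checking that pip download actually fetched a file for every line of requirements.txt. The following is a heuristic sketch, not a pip feature: it assumes requirements.txt contains plain name==version lines and is saved as UTF-8, and it matches downloaded files by their name-version prefix. The function names are hypothetical.

```python
import os
import re


def _norm(text):
    # Lower-case and collapse runs of "-", "_" and "." so that a wheel such as
    # opencv_python-4.5.5.64-cp36-...whl and the pin opencv-python==4.5.5.64 line up.
    return re.sub(r"[-_.]+", "-", text).lower()


def read_pins(requirements_text):
    """Return (name, version) pairs for the `name==version` lines of a requirements file."""
    pins = []
    for line in requirements_text.splitlines():
        line = line.split("#", 1)[0].strip()
        if not line or line.startswith("-") or "==" not in line:
            continue  # skip blanks, comments and option lines such as -i / -e
        name, _, version = line.partition("==")
        pins.append((name.strip(), version.strip()))
    return pins


def missing_from_bundle(requirements_text, filenames):
    """Heuristic: pins with no file named <name>-<version>-... or <name>-<version>.tar.gz."""
    stems = [_norm(f) + "-" for f in filenames]
    missing = []
    for name, version in read_pins(requirements_text):
        prefix = _norm(name + "-" + version) + "-"
        if not any(stem.startswith(prefix) for stem in stems):
            missing.append(name + "==" + version)
    return missing


def check_bundle(bundle_dir):
    """Run the check against <bundle_dir>/requirements.txt and the files next to it."""
    with open(os.path.join(bundle_dir, "requirements.txt"), encoding="utf-8") as fh:
        text = fh.read()
    return missing_from_bundle(text, os.listdir(bundle_dir))
```

For example, check_bundle("site-packages") run on the online machine returns the pins that still lack a downloaded file; an empty list means the bundle looks complete.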

Utility scripts

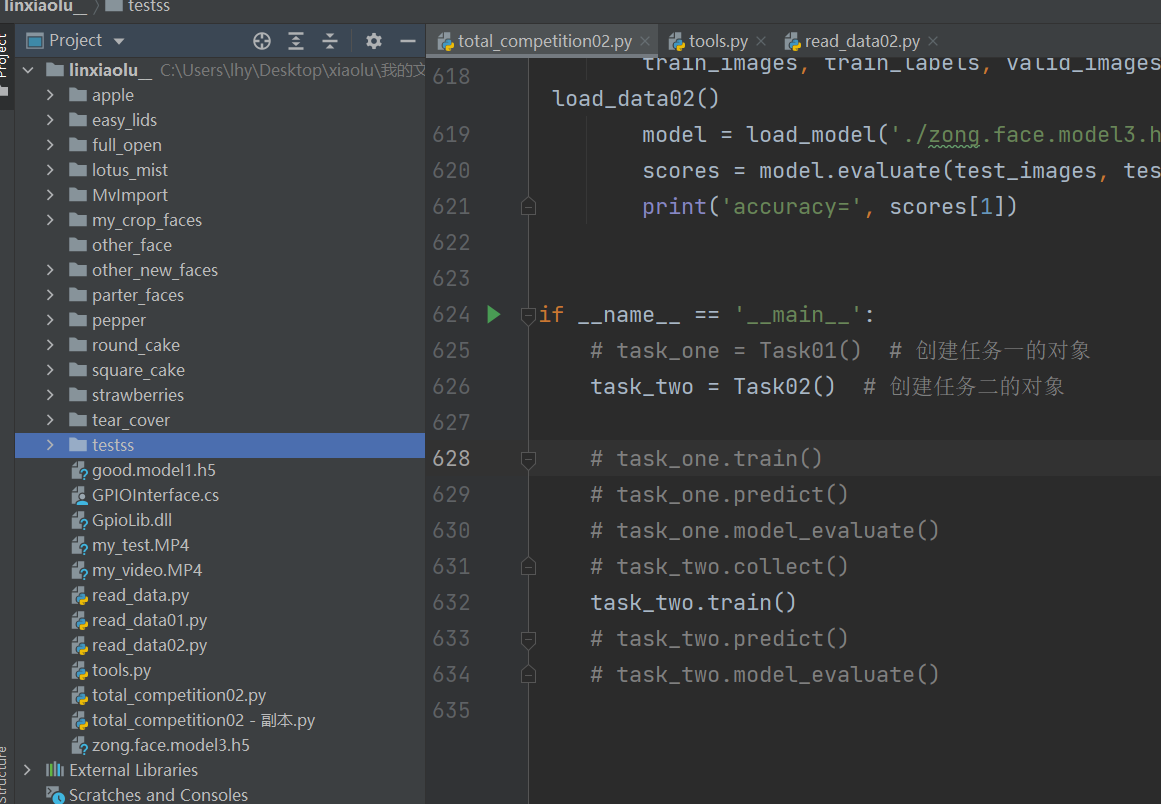

total_competition02.py

read_data02.py

read_data01.py


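Author: 小鹿同学

Post link: https://www.cnblogs.com/exiaolu/p/16428073.html

About the author: I reply to comments and private messages as soon as possible; feel free to message me directly.

Copyright: unless otherwise stated, all posts on this blog are licensed under BY-NC-SA. Please credit the source when reposting!

Support the author: if you found this post helpful, click [Recommend] at the bottom right of the post. Your encouragement is my biggest motivation!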
