- Matching with a regular expression

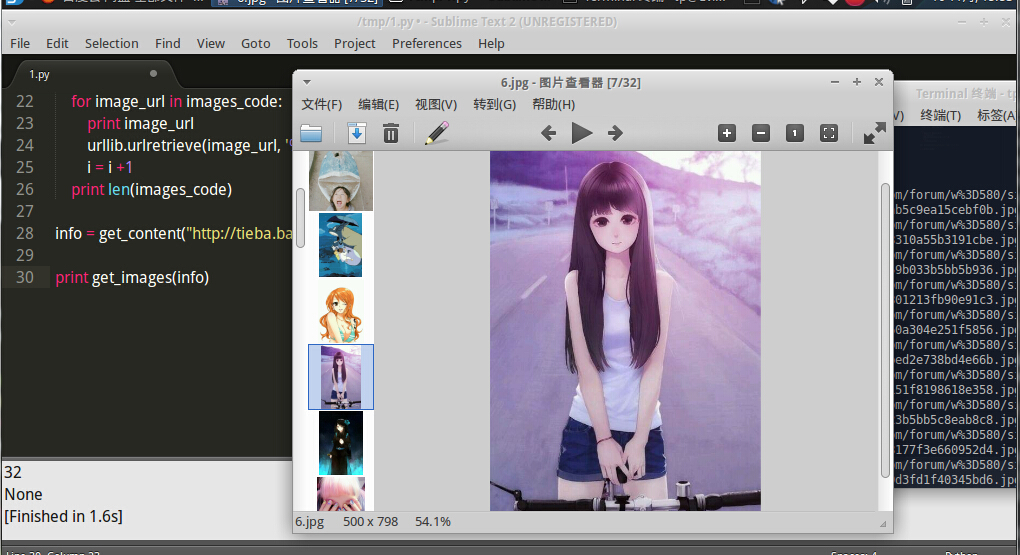

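```python
# coding: utf-8
import re
import urllib.request


def get_content(url):
    """Fetch the raw HTML of a page (author: Evilxr)."""
    with urllib.request.urlopen(url) as html:
        content = html.read()
    # Decode bytes to str for the regex; errors="replace" keeps the ASCII URLs
    # intact even if the page is not actually UTF-8
    return content.decode("utf-8", errors="replace")


def get_images(info):
    """Download the pictures in a Baidu Tieba post.

    Target tags look like: <img class="BDE_Image" src="http:*****">
    """
    regex = r'class="BDE_Image" src="(.+?\.jpg)"'
    pat = re.compile(regex)
    images_code = pat.findall(info)
    for i, image_url in enumerate(images_code):
        print(image_url)
        # Save as 0.jpg, 1.jpg, ... in the current directory
        urllib.request.urlretrieve(image_url, "%s.jpg" % i)
    print(len(images_code))


info = get_content("http://tieba.baidu.com/p/2299704181")
get_images(info)  # returns nothing, so there is no result to print
```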
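To try the pattern without touching the network, it can be run on a hand-written fragment. This is a minimal sketch assuming tags shaped like the one in the docstring (`class` before `src`, URL ending in `.jpg`); `extract_image_urls` and the example.com URLs are hypothetical, made up for illustration.

```python
import re

# Same pattern idea as get_images above: capture the src of BDE_Image tags ending in .jpg
PATTERN = re.compile(r'class="BDE_Image" src="(.+?\.jpg)"')


def extract_image_urls(html):
    """Return all .jpg URLs from BDE_Image tags, in page order (hypothetical helper)."""
    return PATTERN.findall(html)


# Invented page fragment; the example.com URLs are placeholders
sample = (
    '<img class="BDE_Image" src="http://example.com/a.jpg">'
    '<img class="avatar" src="http://example.com/me.jpg">'
    '<img class="BDE_Image" src="http://example.com/b.jpg" width="560">'
)

print(extract_image_urls(sample))
```

The non-greedy `.+?` matters here: with a greedy `.+`, the first match would run on to the last `.jpg"` on the same line, swallowing the tags in between. The pattern also depends on attribute order, so a tag written as `src="..." class="BDE_Image"` is silently skipped.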

- Matching with the third-party library BeautifulSoup

```shell
# Install
sudo pip install beautifulsoup4
```

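```python
# coding: utf-8
import urllib.request

from bs4 import BeautifulSoup


def get_content(url):
    """Fetch the raw HTML of a page (author: Evilxr)."""
    with urllib.request.urlopen(url) as html:
        # Return bytes; BeautifulSoup detects the encoding itself
        return html.read()


def get_images(info):
    """Use BeautifulSoup to find the image URLs in the page source and download them."""
    # Name the parser explicitly; bs4 warns when none is given
    soup = BeautifulSoup(info, "html.parser")
    all_img = soup.find_all("img", class_="BDE_Image")
    for i, img in enumerate(all_img, start=1):
        print(img["src"])
        # Save as 1.jpg, 2.jpg, ... in the current directory
        urllib.request.urlretrieve(img["src"], "%s.jpg" % i)
    print("Downloaded", len(all_img), "images in total")


info = get_content("http://tieba.baidu.com/p/3368845086")
get_images(info)  # returns nothing, so there is no result to print
```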
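BeautifulSoup matches on parsed attributes rather than on raw text, so it does not care about attribute order, and `class_="BDE_Image"` also matches a tag whose class list contains other names. Where installing bs4 is not an option, the same class-based filtering can be sketched with the standard library's `html.parser.HTMLParser`. This swaps in a different parser than the one the post uses; `BDEImageParser` and `find_bde_images` are hypothetical names, and the sample markup is invented.

```python
from html.parser import HTMLParser


class BDEImageParser(HTMLParser):
    """Collect the src of every <img> whose class list includes BDE_Image (hypothetical helper)."""

    def __init__(self):
        super().__init__()
        self.urls = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; attrs is a list of (name, value).
        # Self-closing <img ... /> tags also land here via the default handle_startendtag.
        if tag != "img":
            return
        attr_map = dict(attrs)
        # class can hold several space-separated names; accept the tag if any is BDE_Image
        classes = (attr_map.get("class") or "").split()
        if "BDE_Image" in classes and attr_map.get("src"):
            self.urls.append(attr_map["src"])


def find_bde_images(markup):
    """Return BDE_Image src values in document order."""
    parser = BDEImageParser()
    parser.feed(markup)
    parser.close()
    return parser.urls


# Invented fragment: src before class, an extra class name, and a non-matching avatar
sample = (
    '<img src="http://example.com/a.jpg" class="BDE_Image">'
    '<img class="BDE_Image big" src="http://example.com/b.png">'
    '<img class="avatar" src="http://example.com/me.jpg">'
)
print(find_bde_images(sample))
```

Unlike BeautifulSoup, `HTMLParser` builds no tree; it only fires a callback per tag as the markup streams past. That is enough here, because each `<img>` can be judged on its own attributes.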

Unless otherwise stated, all articles are Evilxr's personal notes. Please credit the source when reposting.

Zhejiang Public Security Network Filing No. 33010602011771
