Cilium Cluster Mesh (repost)


1. Environment

| Host | IP |
|---|---|
| ubuntu | 172.16.94.141 |

| Software | Version |
|---|---|
| docker | 26.1.4 |
| helm | v3.15.0-rc.2 |
| kind | 0.18.0 |
| clab | 0.54.2 |
| cilium CLI | 0.13.0 |
| kubernetes | 1.23.4 |
| ubuntu os | Ubuntu 20.04.6 LTS |
| kernel | 5.11.5 (see the kernel upgrade document) |

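This walkthrough uses cilium CLI 0.13.0. Some CLI versions do not provide the --inherit-ca flag; without it, kind-cluster2 cannot inherit the cilium-ca certificate from kind-cluster1, and installing Cilium on kind-cluster2 fails.

Alternatively, import the kind-cluster1 certificate into kind-cluster2 by hand first, and then install Cilium on kind-cluster2.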

2. Cilium ClusterMesh architecture overview

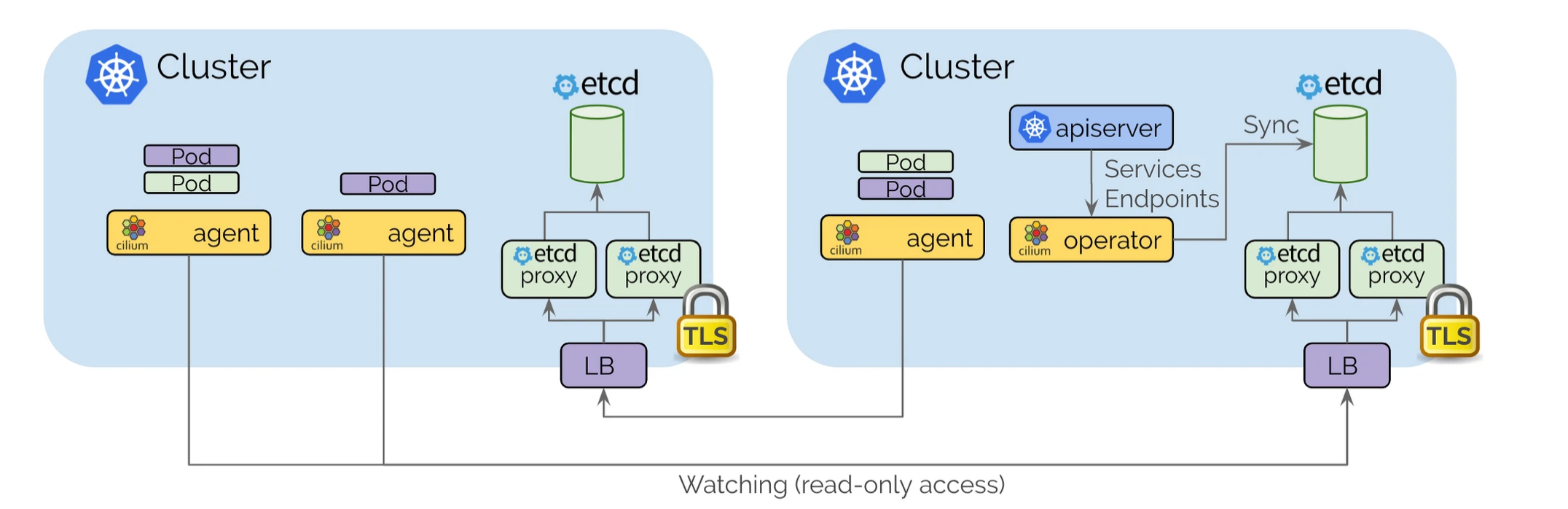

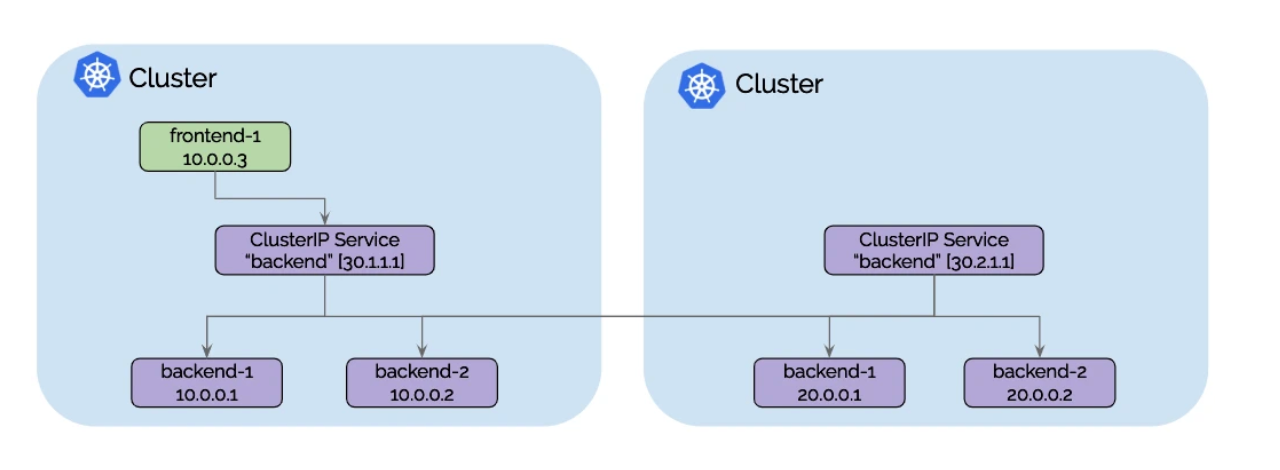

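The Cilium control plane is built on etcd and keeps the design as simple as possible:

- Each Kubernetes cluster maintains its own etcd cluster, which holds that cluster's state. State from multiple clusters is never mixed in etcd.

- Each cluster exposes its own etcd through a set of etcd proxies. Cilium agents running in other clusters connect to the etcd proxy to watch resource state and replicate the multi-cluster relevant state into their own cluster. Using etcd proxies keeps the etcd watchers scalable. Access is protected with TLS certificates.

- Access from one cluster to another is always read-only. This keeps the failure domains unchanged: a failure in one cluster never propagates to other clusters.

- Configuration happens through a single Kubernetes secret that contains the addresses of the remote etcd proxies, the cluster name, and the certificates required to access the etcd proxies.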

3. Cilium ClusterMesh use cases

-

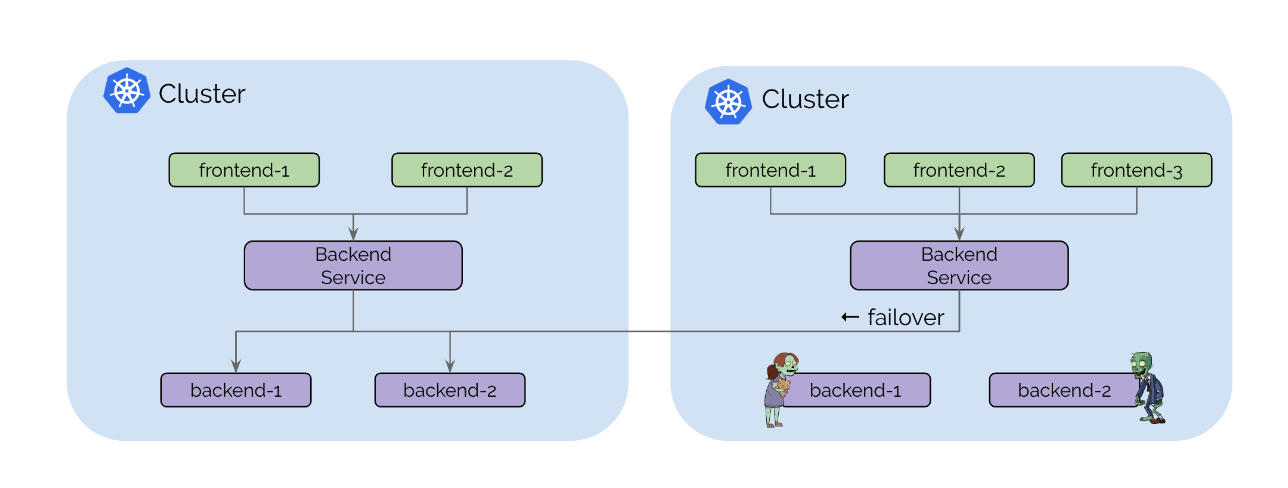

High Availability 容灾备份

Cluster Mesh增强了服务的高可用性和容错能力。支持Kubernetes集群在多个地域或者可用区的运行。如果资源暂时不可用、一个集群中配置错误或离线升级,它可以将故障转移到其他集群,确保您的服务始终可访问。

-

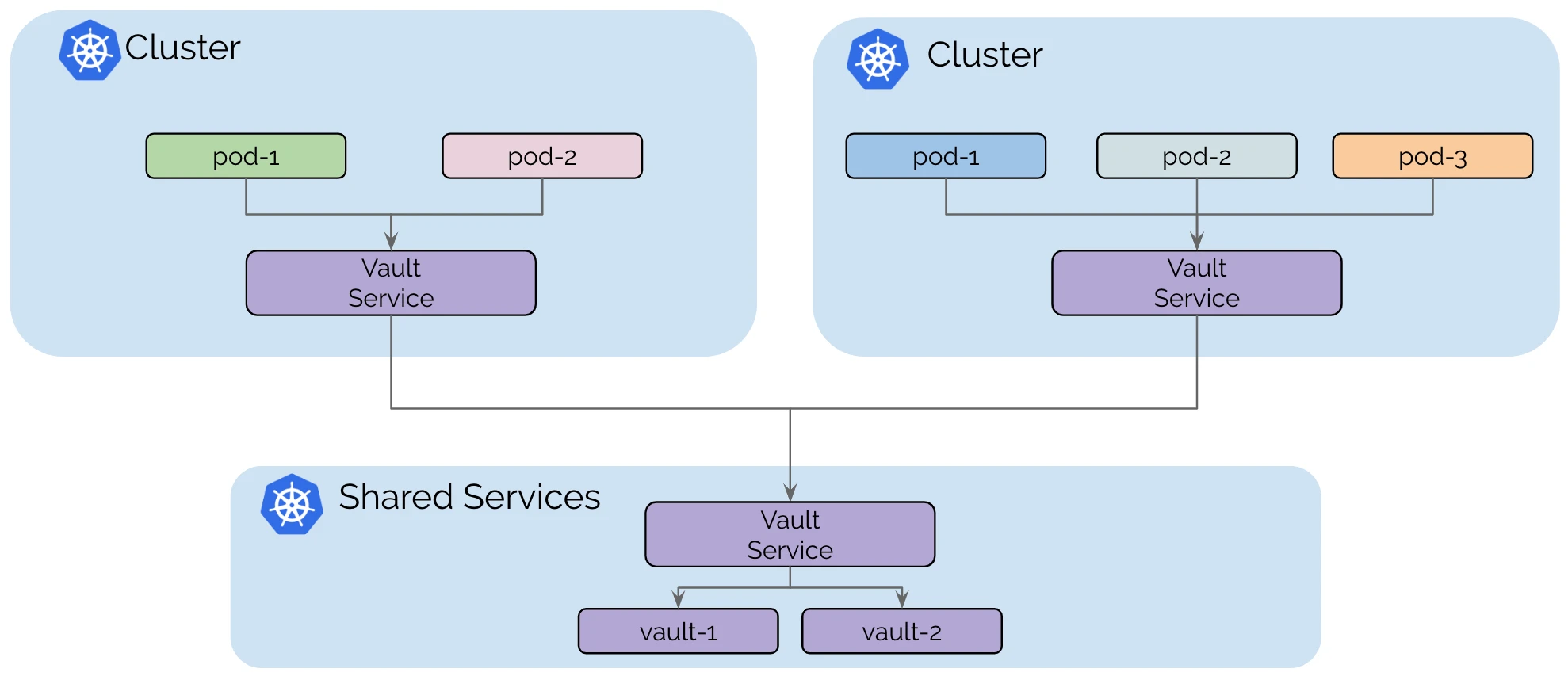

Shared Services Across Clusters (跨集群共享服务)

Cluster Mesh支持在所有集群之间共享服务,例如秘密管理、日志记录、监控或DNS。这可以减少运营开销、简化管理并保持租户集群之间的隔离。

-

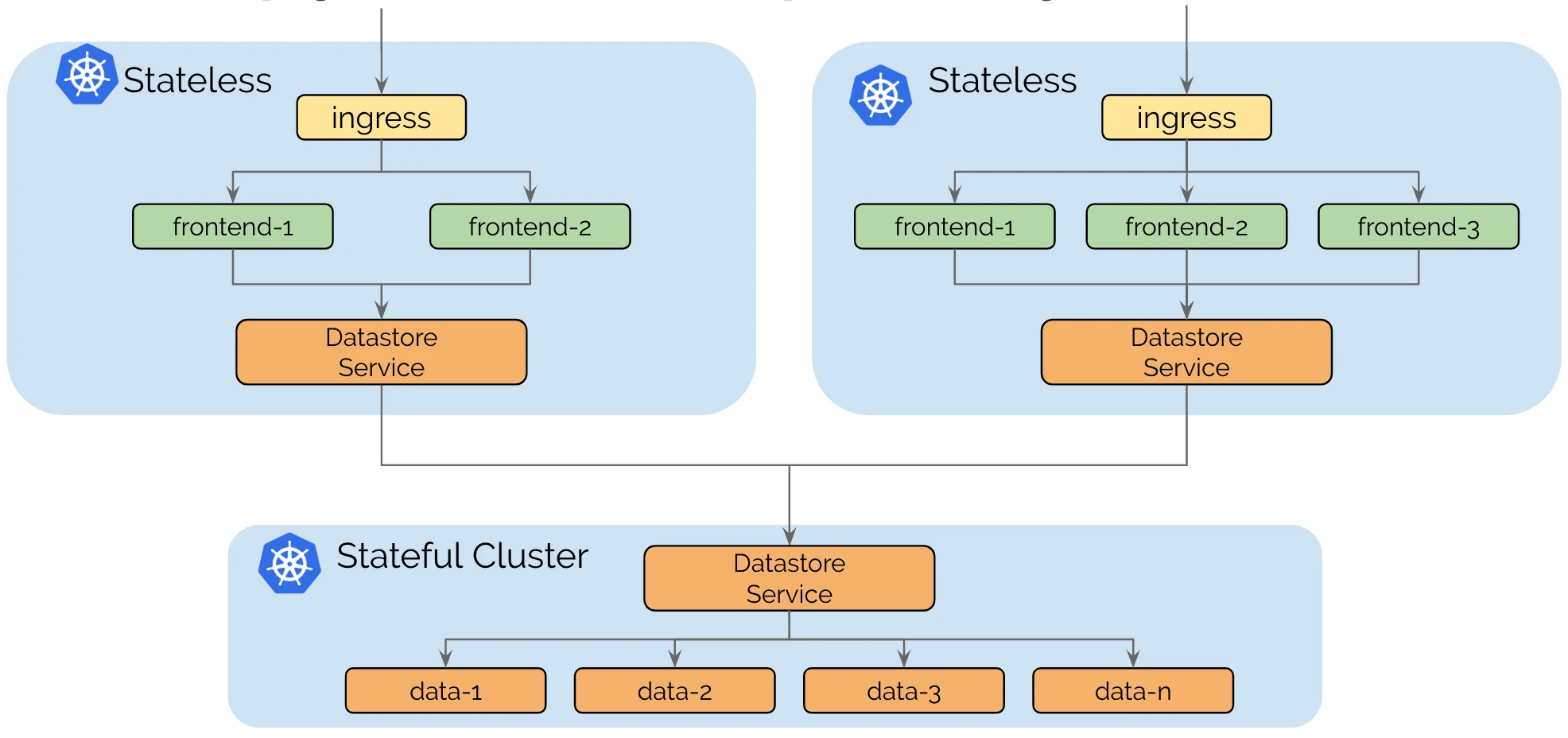

Splitting Stateful and Stateless services (拆分有状态服务和无状态服务)

Cluster Mesh支持针对无状态和有状态运行单独的集群,可以将依赖关系复杂性隔离到较少数量的集群,并保持无状态集群的依赖关系。

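- Transparent Service Discovery

Cluster Mesh discovers services across Kubernetes clusters automatically. Using standard Kubernetes services, it merges services that have the same name and namespace in different clusters into one global service. Your applications can therefore discover and talk to services regardless of which cluster they live in, which greatly simplifies cross-cluster communication.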

-

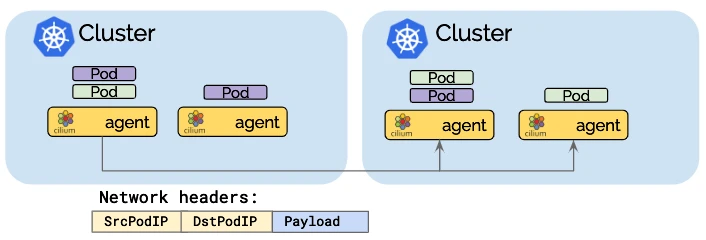

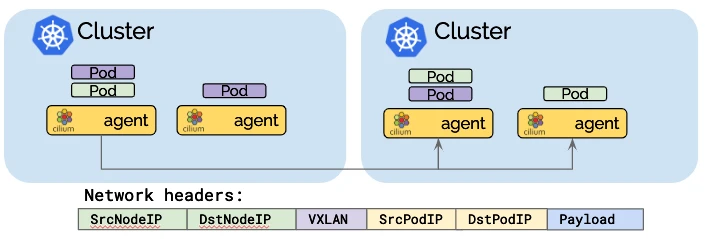

可基于Pod IP 进行路由

Cluster Mesh能够以本机性能处理跨多个Kubernetes集群的Pod IP路由。通过使用隧道或直接路由,它不需要任何网关或代理。这允许您的Pod跨集群无缝通信,从而提高微服务架构的整体效率。- native-routing模式:

- 隧道封装模式:

-

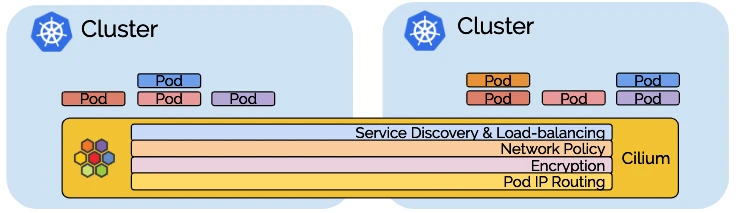

Uniform Network Policy Enforcement (强制统一网络策略)

- 集群网格将

Cilium的第3-7层网络策略实施扩展到网格中的所有集群。它标准化了网络策略的应用,确保整个Kubernetes部署采用一致的安全方法,无论涉及多少集群。

- 集群网格将

4. Setting up the Cilium ClusterMesh environment

Prerequisites

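- Every Kubernetes worker node must be assigned a unique IP address, and all worker nodes must have IP connectivity between each other.

- Every cluster must be assigned a unique PodCIDR range.

- Cilium must be configured to use etcd as the kvstore. (In this walkthrough the agents report KVStore: Ok Disabled in cilium status; the etcd endpoint used by the mesh is exposed by the clustermesh-apiserver Service on port 2379, which is deployed later.)

- The network between the clusters must allow inter-cluster communication. The exact firewall requirements depend on whether Cilium is configured to run in direct-routing mode or in tunnel mode.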
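The unique-PodCIDR requirement can be checked before any cluster is created. The following is a minimal sketch in plain shell, not part of kind or Cilium: the helper names ip_to_int, cidr_range, and cidrs_overlap are hypothetical, and the four subnets are the Pod and Service ranges that this walkthrough assigns to the two clusters.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of kind or Cilium): detect overlapping IPv4 CIDRs.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address on dots into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Print the first and last address of a CIDR as two integers.
cidr_range() {
  base=$(ip_to_int "${1%/*}")
  prefix=${1#*/}
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  start=$(( base & mask ))
  end=$(( start | (~mask & 0xFFFFFFFF) ))
  echo "$start $end"
}

# Succeed (exit status 0) when the two CIDRs share at least one address.
cidrs_overlap() {
  # After this line: $1=start1 $2=end1 $3=start2 $4=end2
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

# Pod and Service subnets of cluster1 and cluster2 from this walkthrough.
set -- 10.10.0.0/16 10.11.0.0/16 10.20.0.0/16 10.21.0.0/16
found=0
while [ $# -gt 1 ]; do
  first=$1
  shift
  for other in "$@"; do
    if cidrs_overlap "$first" "$other"; then
      echo "overlap: $first $other"
      found=1
    fi
  done
done
[ "$found" -eq 0 ] && echo "no overlapping subnets found"
```

Running the check up front is cheaper than debugging routing problems after both clusters are connected.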

Installing the kind-cluster1 cluster

kind-cluster1 configuration file

#!/bin/bash
set -v
date

# 1. prep noCNI env
cat <<EOF | kind create cluster --name=cluster1 --image=kindest/node:v1.23.4 --config=-
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
networking:
  # kind installs its own default CNI (kindnet); disable it so that Cilium can be installed instead
  disableDefaultCNI: true
  # Pod subnet
  podSubnet: "10.10.0.0/16"
  # Service subnet
  serviceSubnet: "10.11.0.0/16"
nodes:
  - role: control-plane
  - role: worker
EOF

# 2. remove taints
# The taint key is node-role.kubernetes.io/master on Kubernetes 1.23 and
# node-role.kubernetes.io/control-plane on recent releases, so try both.
for key in node-role.kubernetes.io/master node-role.kubernetes.io/control-plane; do
  kubectl taint nodes $(kubectl get nodes -o name | grep control-plane) "${key}:NoSchedule-" || true
done
kubectl get nodes -o wide

# 3. install CNI
cilium install --context kind-cluster1 \
  --version v1.13.0-rc5 \
  --helm-set ipam.mode=kubernetes,cluster.name=cluster1,cluster.id=1
cilium status --context kind-cluster1 --wait

# 4. install necessary tools
# kind names the node containers cluster1-control-plane and cluster1-worker
for i in $(kind get nodes --name cluster1)
do
  echo $i
  docker cp /usr/bin/ping $i:/usr/bin/ping
  docker exec -it $i bash -c "sed -i -e 's/jp.archive.ubuntu.com\|archive.ubuntu.com\|security.ubuntu.com/old-releases.ubuntu.com/g' /etc/apt/sources.list"
  docker exec -it $i bash -c "apt-get -y update >/dev/null && apt-get -y install net-tools tcpdump lrzsz bridge-utils >/dev/null 2>&1"
done

- Install the kind-cluster1 cluster and Cilium

root@kind:~# ./install.sh

Creating cluster "cluster1" ...

✓ Ensuring node image (kindest/node:v1.23.4) 🖼

✓ Preparing nodes 📦 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing StorageClass 💾

✓ Joining worker nodes 🚜

Set kubectl context to "kind-cluster1"

You can now use your cluster with:

kubectl cluster-info --context kind-cluster1

Have a nice day! 👋

# install cilium

🔮 Auto-detected Kubernetes kind: kind

✨ Running "kind" validation checks

✅ Detected kind version "0.18.0"

ℹ️ Using Cilium version 1.13.0-rc5

🔮 Auto-detected cluster name: kind-cluster1

🔮 Auto-detected datapath mode: tunnel

🔮 Auto-detected kube-proxy has been installed

ℹ️ helm template --namespace kube-system cilium cilium/cilium --version 1.13.0-rc5 --set cluster.id=1,cluster.name=cluster1,encryption.nodeEncryption=false,ipam.mode=kubernetes,kubeProxyReplacement=disabled,operator.replicas=1,serviceAccounts.cilium.name=cilium,serviceAccounts.operator.name=cilium-operator,tunnel=vxlan

ℹ️ Storing helm values file in kube-system/cilium-cli-helm-values Secret

🔑 Created CA in secret cilium-ca

🔑 Generating certificates for Hubble...

🚀 Creating Service accounts...

🚀 Creating Cluster roles...

🚀 Creating ConfigMap for Cilium version 1.13.0-rc5...

🚀 Creating Agent DaemonSet...

🚀 Creating Operator Deployment...

⌛ Waiting for Cilium to be installed and ready...

✅ Cilium was successfully installed! Run 'cilium status' to view installation health

/¯¯\

/¯¯\__/¯¯\ Cilium: OK

\__/¯¯\__/ Operator: OK

/¯¯\__/¯¯\ Hubble Relay: disabled

\__/¯¯\__/ ClusterMesh: disabled

\__/

DaemonSet cilium Desired: 2, Ready: 2/2, Available: 2/2

Deployment cilium-operator Desired: 1, Ready: 1/1, Available: 1/1

Containers: cilium Running: 2

cilium-operator Running: 1

Cluster Pods: 3/3 managed by Cilium

Image versions cilium-operator quay.io/cilium/operator-generic:v1.13.0-rc5@sha256:74c05a1e27f6f7e4d410a4b9e765ab4bb33c36d19016060a7e82c8d305ff2d61: 1

cilium quay.io/cilium/cilium:v1.13.0-rc5@sha256:143c6fb2f32cbd28bb3abb3e9885aab0db19fae2157d167f3bc56021c4fd1ad8: 2

By default Cilium runs in tunnel mode, that is, VXLAN.

Services installed in the kind-cluster1 cluster

root@kind:~# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system cilium-79vq6 1/1 Running 0 2m29s

kube-system cilium-operator-76564696fd-jpcs8 1/1 Running 0 2m29s

kube-system cilium-thfjk 1/1 Running 0 2m29s

kube-system coredns-64897985d-jtbmb 1/1 Running 0 2m50s

kube-system coredns-64897985d-n6ctz 1/1 Running 0 2m50s

kube-system etcd-cluster1-control-plane 1/1 Running 0 3m4s

kube-system kube-apiserver-cluster1-control-plane 1/1 Running 0 3m4s

kube-system kube-controller-manager-cluster1-control-plane 1/1 Running 0 3m4s

kube-system kube-proxy-8p985 1/1 Running 0 2m31s

kube-system kube-proxy-b4lq4 1/1 Running 0 2m50s

kube-system kube-scheduler-cluster1-control-plane 1/1 Running 0 3m4s

local-path-storage local-path-provisioner-5ddd94ff66-dhpsg 1/1 Running 0 2m50s

root@kind:~# kubectl get svc -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.11.0.1 <none> 443/TCP 2m41s

kube-system kube-dns ClusterIP 10.11.0.10 <none> 53/UDP,53/TCP,9153/TCP 2m40s

Cilium configuration of the kind-cluster1 cluster

root@kind:~# kubectl -n kube-system exec -it ds/cilium -- cilium status

KVStore: Ok Disabled

Kubernetes: Ok 1.23 (v1.23.4) [linux/amd64]

Kubernetes APIs: ["cilium/v2::CiliumClusterwideNetworkPolicy", "cilium/v2::CiliumEndpoint", "cilium/v2::CiliumNetworkPolicy", "cilium/v2::CiliumNode", "core/v1::Namespace", "core/v1::Node", "core/v1::Pods", "core/v1::Service", "discovery/v1::EndpointSlice", "networking.k8s.io/v1::NetworkPolicy"]

KubeProxyReplacement: Disabled

Host firewall: Disabled

CNI Chaining: none

CNI Config file: CNI configuration file management disabled

Cilium: Ok 1.13.0-rc5 (v1.13.0-rc5-dc22a46f)

NodeMonitor: Listening for events on 128 CPUs with 64x4096 of shared memory

Cilium health daemon: Ok

IPAM: IPv4: 5/254 allocated from 10.10.0.0/24,

ClusterMesh: 0/0 clusters ready, 0 global-services

IPv6 BIG TCP: Disabled

BandwidthManager: Disabled

Host Routing: Legacy

Masquerading: IPTables [IPv4: Enabled, IPv6: Disabled]

Controller Status: 30/30 healthy

Proxy Status: OK, ip 10.10.0.170, 0 redirects active on ports 10000-20000

Global Identity Range: min 256, max 65535

Hubble: Ok Current/Max Flows: 171/4095 (4.18%), Flows/s: 2.81 Metrics: Disabled

Encryption: Disabled

Cluster health: 1/1 reachable (2024-07-20T02:40:13Z)

Installing the kind-cluster2 cluster

kind-cluster2 configuration file

The key setting when installing kind-cluster2 is in the install CNI step: it inherits the cilium-ca certificate from kind-cluster1.

#!/bin/bash
set -v
date

# 1. prep noCNI env
cat <<EOF | kind create cluster --name=cluster2 --image=kindest/node:v1.23.4 --config=-
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
networking:
  # kind installs its own default CNI (kindnet); disable it so that Cilium can be installed instead
  disableDefaultCNI: true
  # Pod subnet
  podSubnet: "10.20.0.0/16"
  # Service subnet
  serviceSubnet: "10.21.0.0/16"
nodes:
  - role: control-plane
  - role: worker
EOF

# 2. remove taints
# The taint key is node-role.kubernetes.io/master on Kubernetes 1.23 and
# node-role.kubernetes.io/control-plane on recent releases, so try both.
for key in node-role.kubernetes.io/master node-role.kubernetes.io/control-plane; do
  kubectl taint nodes $(kubectl get nodes -o name | grep control-plane) "${key}:NoSchedule-" || true
done
kubectl get nodes -o wide

# 3. install CNI
# If this cilium CLI does not support --inherit-ca, the certificate can be imported manually instead:
# kubectl --context=kind-cluster1 get secret -n kube-system cilium-ca -o yaml | kubectl --context kind-cluster2 create -f -
cilium install --context kind-cluster2 \
  --version v1.13.0-rc5 \
  --helm-set ipam.mode=kubernetes,cluster.name=cluster2,cluster.id=2 --inherit-ca kind-cluster1
cilium status --context kind-cluster2 --wait

# 4. install necessary tools
# kind names the node containers cluster2-control-plane and cluster2-worker
for i in $(kind get nodes --name cluster2)
do
  echo $i
  docker cp /usr/bin/ping $i:/usr/bin/ping
  docker exec -it $i bash -c "sed -i -e 's/jp.archive.ubuntu.com\|archive.ubuntu.com\|security.ubuntu.com/old-releases.ubuntu.com/g' /etc/apt/sources.list"
  docker exec -it $i bash -c "apt-get -y update >/dev/null && apt-get -y install net-tools tcpdump lrzsz bridge-utils >/dev/null 2>&1"
done
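The two install scripts differ only in the cluster name, the cluster ID, and the subnets. As a hedged sketch, the kind configuration could be generated from the cluster ID. The function name kind_config and the numbering scheme (Pods in 10.<id>0.0.0/16, Services in 10.<id>1.0.0/16) are assumptions generalized from the two scripts; neither kind nor Cilium prescribes them.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a kind config for cluster <id>, following the
# subnet pattern of the two scripts (Pods 10.<id>0.0.0/16, Services 10.<id>1.0.0/16).
kind_config() {
  id=$1
  # Keep the second octet (<id>0 and <id>1) inside the 0-255 range.
  if ! [ "$id" -ge 1 ] 2>/dev/null || [ "$id" -gt 25 ]; then
    echo "cluster id must be an integer between 1 and 25" >&2
    return 1
  fi
  cat <<EOF
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
networking:
  disableDefaultCNI: true
  podSubnet: "10.${id}0.0.0/16"
  serviceSubnet: "10.${id}1.0.0/16"
nodes:
  - role: control-plane
  - role: worker
EOF
}

# Print the configuration that corresponds to cluster2.
kind_config 2
```

The output can be piped into kind in the same way as the heredoc, for example kind_config 2 | kind create cluster --name=cluster2 --config=-.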

- Install the kind-cluster2 cluster and Cilium

root@kind:~# ./install.sh

Creating cluster "cluster2" ...

✓ Ensuring node image (kindest/node:v1.23.4) 🖼

✓ Preparing nodes 📦 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing StorageClass 💾

✓ Joining worker nodes 🚜

Set kubectl context to "kind-cluster2"

You can now use your cluster with:

kubectl cluster-info --context kind-cluster2

Have a nice day! 👋

# install cilium

🔮 Auto-detected Kubernetes kind: kind

✨ Running "kind" validation checks

✅ Detected kind version "0.18.0"

ℹ️ Using Cilium version 1.13.0-rc5

🔮 Auto-detected cluster name: kind-cluster2

🔮 Auto-detected datapath mode: tunnel

🔮 Auto-detected kube-proxy has been installed

ℹ️ helm template --namespace kube-system cilium cilium/cilium --version 1.13.0-rc5 --set cluster.id=2,cluster.name=cluster2,encryption.nodeEncryption=false,ipam.mode=kubernetes,kubeProxyReplacement=disabled,operator.replicas=1,serviceAccounts.cilium.name=cilium,serviceAccounts.operator.name=cilium-operator,tunnel=vxlan

ℹ️ Storing helm values file in kube-system/cilium-cli-helm-values Secret

🔑 Found CA in secret cilium-ca

🔑 Generating certificates for Hubble...

🚀 Creating Service accounts...

🚀 Creating Cluster roles...

🚀 Creating ConfigMap for Cilium version 1.13.0-rc5...

🚀 Creating Agent DaemonSet...

🚀 Creating Operator Deployment...

⌛ Waiting for Cilium to be installed and ready...

✅ Cilium was successfully installed! Run 'cilium status' to view installation health

/¯¯\

/¯¯\__/¯¯\ Cilium: OK

\__/¯¯\__/ Operator: OK

/¯¯\__/¯¯\ Hubble Relay: disabled

\__/¯¯\__/ ClusterMesh: disabled

\__/

DaemonSet cilium Desired: 2, Ready: 2/2, Available: 2/2

Deployment cilium-operator Desired: 1, Ready: 1/1, Available: 1/1

Containers: cilium Running: 2

cilium-operator Running: 1

Cluster Pods: 3/3 managed by Cilium

Image versions cilium quay.io/cilium/cilium:v1.13.0-rc5@sha256:143c6fb2f32cbd28bb3abb3e9885aab0db19fae2157d167f3bc56021c4fd1ad8: 2

cilium-operator quay.io/cilium/operator-generic:v1.13.0-rc5@sha256:74c05a1e27f6f7e4d410a4b9e765ab4bb33c36d19016060a7e82c8d305ff2d61: 1

By default Cilium runs in tunnel mode, that is, VXLAN.

Services installed in the kind-cluster2 cluster

root@kind:~# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system cilium-operator-76564696fd-qztwd 1/1 Running 0 89s

kube-system cilium-qcqgp 1/1 Running 0 89s

kube-system cilium-vddf9 1/1 Running 0 89s

kube-system coredns-64897985d-ncj25 1/1 Running 0 107s

kube-system coredns-64897985d-wxq2t 1/1 Running 0 107s

kube-system etcd-cluster2-control-plane 1/1 Running 0 2m3s

kube-system kube-apiserver-cluster2-control-plane 1/1 Running 0 119s

kube-system kube-controller-manager-cluster2-control-plane 1/1 Running 0 119s

kube-system kube-proxy-8cccw 1/1 Running 0 107s

kube-system kube-proxy-f2lgj 1/1 Running 0 91s

kube-system kube-scheduler-cluster2-control-plane 1/1 Running 0 119s

local-path-storage local-path-provisioner-5ddd94ff66-28t24 1/1 Running 0 107s

root@kind:~# kubectl get svc -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.21.0.1 <none> 443/TCP 2m15s

kube-system kube-dns ClusterIP 10.21.0.10 <none> 53/UDP,53/TCP,9153/TCP 2m13s

Cilium configuration of the kind-cluster2 cluster

root@kind:~# kubectl -n kube-system exec -it ds/cilium -- cilium status

KVStore: Ok Disabled

Kubernetes: Ok 1.23 (v1.23.4) [linux/amd64]

Kubernetes APIs: ["cilium/v2::CiliumClusterwideNetworkPolicy", "cilium/v2::CiliumEndpoint", "cilium/v2::CiliumNetworkPolicy", "cilium/v2::CiliumNode", "core/v1::Namespace", "core/v1::Node", "core/v1::Pods", "core/v1::Service", "discovery/v1::EndpointSlice", "networking.k8s.io/v1::NetworkPolicy"]

KubeProxyReplacement: Disabled

Host firewall: Disabled

CNI Chaining: none

CNI Config file: CNI configuration file management disabled

Cilium: Ok 1.13.0-rc5 (v1.13.0-rc5-dc22a46f)

NodeMonitor: Listening for events on 128 CPUs with 64x4096 of shared memory

Cilium health daemon: Ok

IPAM: IPv4: 5/254 allocated from 10.20.0.0/24,

ClusterMesh: 0/0 clusters ready, 0 global-services

IPv6 BIG TCP: Disabled

BandwidthManager: Disabled

Host Routing: Legacy

Masquerading: IPTables [IPv4: Enabled, IPv6: Disabled]

Controller Status: 30/30 healthy

Proxy Status: OK, ip 10.20.0.92, 0 redirects active on ports 10000-20000

Global Identity Range: min 256, max 65535

Hubble: Ok Current/Max Flows: 293/4095 (7.16%), Flows/s: 3.24 Metrics: Disabled

Encryption: Disabled

Cluster health: 2/2 reachable (2024-07-20T02:56:22Z)

Both Kubernetes clusters are now installed

root@kind:~# kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

kind-cluster1 kind-cluster1 kind-cluster1

* kind-cluster2 kind-cluster2 kind-cluster2

# Switch to the kind-cluster1 cluster

root@kind:~# kubectl config use-context kind-cluster1

Switched to context "kind-cluster1".

root@kind:~# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system cilium-79vq6 1/1 Running 0 5m7s

kube-system cilium-operator-76564696fd-jpcs8 1/1 Running 0 5m7s

kube-system cilium-thfjk 1/1 Running 0 5m7s

kube-system coredns-64897985d-jtbmb 1/1 Running 0 5m28s

kube-system coredns-64897985d-n6ctz 1/1 Running 0 5m28s

kube-system etcd-cluster1-control-plane 1/1 Running 0 5m42s

kube-system kube-apiserver-cluster1-control-plane 1/1 Running 0 5m42s

kube-system kube-controller-manager-cluster1-control-plane 1/1 Running 0 5m42s

kube-system kube-proxy-8p985 1/1 Running 0 5m9s

kube-system kube-proxy-b4lq4 1/1 Running 0 5m28s

kube-system kube-scheduler-cluster1-control-plane 1/1 Running 0 5m42s

local-path-storage local-path-provisioner-5ddd94ff66-dhpsg 1/1 Running 0 5m28s

root@kind:~# kubectl get svc -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.11.0.1 <none> 443/TCP 5m46s

kube-system kube-dns ClusterIP 10.11.0.10 <none> 53/UDP,53/TCP,9153/TCP 5m45s


# Switch to the kind-cluster2 cluster

root@kind:~# kubectl config use-context kind-cluster2

Switched to context "kind-cluster2".

root@kind:~# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system cilium-operator-76564696fd-qztwd 1/1 Running 0 2m42s

kube-system cilium-qcqgp 1/1 Running 0 2m42s

kube-system cilium-vddf9 1/1 Running 0 2m42s

kube-system coredns-64897985d-ncj25 1/1 Running 0 3m

kube-system coredns-64897985d-wxq2t 1/1 Running 0 3m

kube-system etcd-cluster2-control-plane 1/1 Running 0 3m16s

kube-system kube-apiserver-cluster2-control-plane 1/1 Running 0 3m12s

kube-system kube-controller-manager-cluster2-control-plane 1/1 Running 0 3m12s

kube-system kube-proxy-8cccw 1/1 Running 0 3m

kube-system kube-proxy-f2lgj 1/1 Running 0 2m44s

kube-system kube-scheduler-cluster2-control-plane 1/1 Running 0 3m12s

local-path-storage local-path-provisioner-5ddd94ff66-28t24 1/1 Running 0 3m

root@kind:~# kubectl get svc -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.21.0.1 <none> 443/TCP 3m16s

kube-system kube-dns ClusterIP 10.21.0.10 <none> 53/UDP,53/TCP,9153/TCP 3m14s

Joining kind-cluster1 and kind-cluster2 into a Cluster Mesh

The clusters are interconnected through a NodePort Service.

root@kind:~# cilium clustermesh enable --context kind-cluster1 --service-type NodePort

⚠️ Using service type NodePort may fail when nodes are removed from the cluster!

🔑 Found CA in secret cilium-ca

🔑 Generating certificates for ClusterMesh...

✨ Deploying clustermesh-apiserver from quay.io/cilium/clustermesh-apiserver:v1.13.0-rc5...

✅ ClusterMesh enabled!

root@kind:~# cilium clustermesh enable --context kind-cluster2 --service-type NodePort

⚠️ Using service type NodePort may fail when nodes are removed from the cluster!

🔑 Found CA in secret cilium-ca

🔑 Generating certificates for ClusterMesh...

✨ Deploying clustermesh-apiserver from quay.io/cilium/clustermesh-apiserver:v1.13.0-rc5...

✅ ClusterMesh enabled!

root@kind:~# cilium clustermesh connect --context kind-cluster1 --destination-context kind-cluster2

✨ Extracting access information of cluster cluster2...

🔑 Extracting secrets from cluster cluster2...

⚠️ Service type NodePort detected! Service may fail when nodes are removed from the cluster!

ℹ️ Found ClusterMesh service IPs: [172.18.0.5]

✨ Extracting access information of cluster cluster1...

🔑 Extracting secrets from cluster cluster1...

⚠️ Service type NodePort detected! Service may fail when nodes are removed from the cluster!

ℹ️ Found ClusterMesh service IPs: [172.18.0.3]

✨ Connecting cluster kind-cluster1 -> kind-cluster2...

🔑 Secret cilium-clustermesh does not exist yet, creating it...

🔑 Patching existing secret cilium-clustermesh...

✨ Patching DaemonSet with IP aliases cilium-clustermesh...

✨ Connecting cluster kind-cluster2 -> kind-cluster1...

🔑 Secret cilium-clustermesh does not exist yet, creating it...

🔑 Patching existing secret cilium-clustermesh...

✨ Patching DaemonSet with IP aliases cilium-clustermesh...

✅ Connected cluster kind-cluster1 and kind-cluster2!

root@kind:~# cilium clustermesh status --context kind-cluster1 --wait

⚠️ Service type NodePort detected! Service may fail when nodes are removed from the cluster!

✅ Cluster access information is available:

- 172.18.0.3:30029

✅ Service "clustermesh-apiserver" of type "NodePort" found

⌛ [kind-cluster1] Waiting for deployment clustermesh-apiserver to become ready...

⌛ Waiting (13s) for clusters to be connected: unable to determine status of cilium pod "cilium-bdnrw": unable to determine cilium status: command terminated with exit code 1

⌛ Waiting (29s) for clusters to be connected: 2 clusters have errors

⌛ Waiting (42s) for clusters to be connected: 2 clusters have errors

⌛ Waiting (53s) for clusters to be connected: 1 clusters have errors

✅ All 2 nodes are connected to all clusters [min:1 / avg:1.0 / max:1]

🔌 Cluster Connections:

- cluster2: 2/2 configured, 2/2 connected

🔀 Global services: [ min:3 / avg:3.0 / max:3 ]

If a node has been restarted, the connection between the clusters may stop working. In that case, run the commands above again.
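Because the mesh may need to be re-established after a restart, it can help to check the status output mechanically. The sketch below is an assumption-laden example: the helper name clusters_fully_connected is hypothetical, and it relies on the "<cluster>: N/M configured, N/M connected" line format printed by cilium CLI 0.13.0 in the transcript above, which other CLI versions may not keep.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `cilium clustermesh status` output on stdin and
# succeed only if every "<cluster>: N/M configured, N/M connected" line is complete.
clusters_fully_connected() {
  awk '
    /configured,/ && /connected/ {
      seen = 1
      split($3, cfg, "/")   # e.g. "2/2" before "configured,"
      split($5, con, "/")   # e.g. "2/2" before "connected"
      if (cfg[1] != cfg[2] || con[1] != con[2]) bad = 1
    }
    END { exit (seen && !bad) ? 0 : 1 }
  '
}

# Demo with the status line captured in the transcript above.
if printf '%s\n' '- cluster2: 2/2 configured, 2/2 connected' | clusters_fully_connected; then
  echo "mesh healthy"
else
  echo "mesh degraded: run the clustermesh connect step again"
fi
```

Against a live cluster the same function can read the real output, for example cilium clustermesh status --context kind-cluster1 | clusters_fully_connected.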

Checking the kind-cluster1 cluster

The clusters are interconnected through a NodePort Service.

root@kind:~# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system clustermesh-apiserver-857c87986d-zpv6s 2/2 Running 0 92s

root@kind:~# kubectl get svc -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-system clustermesh-apiserver NodePort 10.11.24.85 <none> 2379:30029/TCP 95s

A new clustermesh-apiserver-857c87986d-zpv6s Pod and a clustermesh-apiserver Service have been added for Cluster Mesh communication.

Checking the kind-cluster2 cluster

root@kind:~# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system clustermesh-apiserver-69fb7f6646-bscjg 2/2 Running 0 2m36s

root@kind:~# kubectl get svc -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-system clustermesh-apiserver NodePort 10.21.245.59 <none> 2379:30363/TCP 2m39s

A new clustermesh-apiserver-69fb7f6646-bscjg Pod and a clustermesh-apiserver Service have been added for Cluster Mesh communication.

5. Testing Cluster Mesh features

Load-balancing with Global Services

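Load balancing between clusters is set up by defining a Kubernetes service with the same name and namespace in each cluster and declaring it global with the annotation io.cilium/global-service: "true" (the form used by the Cilium 1.13 release in this walkthrough; the annotation also appears as service.cilium.io/global: "true"). Cilium then load-balances automatically across the pods in both clusters.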

---

apiVersion: v1
kind: Service
metadata:
  name: rebel-base
  annotations:
    io.cilium/global-service: "true"
    # defaults to "true"
    io.cilium/shared-service: "true"

- Deploy the services

# Download the test YAML files

root@kind:~# wget https://gh.api.99988866.xyz/https://raw.githubusercontent.com/cilium/cilium/1.11.2/examples/kubernetes/clustermesh/global-service-example/cluster1.yaml

root@kind:~# wget https://gh.api.99988866.xyz/https://raw.githubusercontent.com/cilium/cilium/1.11.2/examples/kubernetes/clustermesh/global-service-example/cluster2.yaml

# Replace the image registry, because images cannot be pulled from docker.io in this environment

root@kind:~# sed -i "s#image: docker.io#image: harbor.dayuan1997.com/devops#g" cluster1.yaml

root@kind:~# sed -i "s#image: docker.io#image: harbor.dayuan1997.com/devops#g" cluster2.yaml

# Deploy the services to kind-cluster1 and kind-cluster2

root@kind:~# kubectl apply -f ./cluster1.yaml --context kind-cluster1

service/rebel-base created

deployment.apps/rebel-base created

configmap/rebel-base-response created

deployment.apps/x-wing created

root@kind:~# kubectl apply -f ./cluster2.yaml --context kind-cluster2

service/rebel-base created

deployment.apps/rebel-base created

configmap/rebel-base-response created

deployment.apps/x-wing created

# Wait for the pods to become ready

root@kind:~# kubectl wait --for=condition=Ready=true pods --all --context kind-cluster1

root@kind:~# kubectl wait --for=condition=Ready=true pods --all --context kind-cluster2

- Check the cilium service list

root@kind:~# kubectl --context kind-cluster1 get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.11.0.1 <none> 443/TCP 14m

rebel-base ClusterIP 10.11.153.54 <none> 80/TCP 8m56s

root@kind:~# kubectl -n kube-system --context kind-cluster1 exec -it ds/cilium -- cilium service list

ID Frontend Service Type Backend

1 10.11.0.1:443 ClusterIP 1 => 172.18.0.3:6443 (active)

2 10.11.0.10:53 ClusterIP 1 => 10.10.0.184:53 (active)

2 => 10.10.0.252:53 (active)

3 10.11.0.10:9153 ClusterIP 1 => 10.10.0.184:9153 (active)

2 => 10.10.0.252:9153 (active)

4 10.11.218.4:2379 ClusterIP 1 => 10.10.1.250:2379 (active)

5 10.11.153.54:80 ClusterIP 1 => 10.10.1.59:80 (active)

2 => 10.10.1.39:80 (active)

3 => 10.20.1.204:80 (active)

4 => 10.20.1.166:80 (active)

The rebel-base Service has four backend Pods: two in kind-cluster1 and two in kind-cluster2.

- Test

root@kind:~# for i in $(seq 1 10); do kubectl --context kind-cluster1 exec -ti deployment/x-wing -- curl rebel-base; done

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

Requests are spread across backends in both clusters, so multi-cluster load balancing is working.
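To see the distribution at a glance, the responses can be tallied per cluster. This is a small sketch using standard text tools; the helper name count_by_cluster is hypothetical, and it assumes the JSON shape returned by rebel-base in the transcript above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: tally responses per cluster from the JSON lines
# returned by rebel-base. Prints one "<cluster>=<count>" line per cluster.
count_by_cluster() {
  grep -o '"Cluster": *"[^"]*"' \
    | sed 's/.*: *"\(.*\)"/\1/' \
    | sort | uniq -c \
    | awk '{ print $2 "=" $1 }'
}

# Demo input: the ten responses captured in the test above.
count_by_cluster <<'EOF'
{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}
{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}
{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}
{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}
{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}
{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}
{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}
{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}
{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}
{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}
EOF
```

For the ten responses above this reports three requests served by Cluster-1 and seven by Cluster-2. With a live cluster, the curl loop can be piped straight into count_by_cluster; dropping the -t flag from kubectl exec avoids carriage returns in the piped output.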

High Availability (failover)

# Cluster Failover

sleep 3

root@kind:~# kubectl --context kind-cluster1 scale deployment rebel-base --replicas=0

root@kind:~# kubectl --context kind-cluster1 get pods

NAME READY STATUS RESTARTS AGE

x-wing-7d5dc844c6-f2xlp 1/1 Running 1 (84m ago) 85m

x-wing-7d5dc844c6-zb6tl 1/1 Running 1 (85m ago) 85m

root@kind:~# for i in $(seq 1 10); do kubectl --context kind-cluster1 exec -ti deployment/x-wing -- curl rebel-base; done

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

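This simulates the kind-cluster1 backends failing and being unable to serve requests: all traffic is now sent to the backends in kind-cluster2.

Disabling global load balancing

To stop sharing a cluster's backends globally, set the annotation io.cilium/shared-service: "false". The annotation defaults to "true". The outputs below show Cluster-1 responses again, so they assume that the rebel-base deployment in kind-cluster1 has been scaled back up after the failover test; that step is not shown.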

root@kind:~# kubectl --context kind-cluster1 annotate service rebel-base io.cilium/shared-service="false" --overwrite

- Test

root@kind:~# for i in $(seq 1 10); do kubectl --context kind-cluster1 exec -ti deployment/x-wing -- curl rebel-base; done

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

root@kind:~# for i in $(seq 1 10); do kubectl --context kind-cluster2 exec -ti deployment/x-wing -- curl rebel-base; done

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

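- From kind-cluster1, requests are still load-balanced across the backends of both clusters.

- From kind-cluster2, requests only reach the kind-cluster2 backends, because the kind-cluster1 backends are no longer shared globally.

- Remove the annotation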

root@kind:~# kubectl --context kind-cluster1 annotate service rebel-base io.cilium/shared-service-

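Service Affinity

- In some cases, load balancing across multiple clusters is not ideal. The annotation io.cilium/service-affinity: "local|remote|none" specifies the preferred endpoint destination.

For example, with io.cilium/service-affinity: local, the global service load-balances across the healthy local backends, and remote endpoints are used only when all local backends are unavailable or unhealthy.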

apiVersion: v1
kind: Service
metadata:
  name: rebel-base
  annotations:
    io.cilium/global-service: "true"
    # Possible values:
    # - local
    #    preferred endpoints from local cluster if available
    # - remote
    #    preferred endpoints from remote cluster if available
    # - none (default)
    #    no preference. Default behavior if this annotation does not exist
    io.cilium/service-affinity: "local"
spec:
  type: ClusterIP
  ports:
  - port: 80
  selector:
    name: rebel-base
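The preference rule described for the three affinity values can be modeled in a few lines. This is an illustrative model only, not Cilium's implementation: the function select_backends and its space-separated backend lists are hypothetical, and the addresses in the demo are the rebel-base Pod IPs that appear in this walkthrough.

```bash
#!/usr/bin/env bash
# Illustrative model only (not Cilium's implementation): which backends are
# eligible for a request, given the service-affinity value.
# Usage: select_backends AFFINITY "LOCAL_BACKENDS" "REMOTE_BACKENDS"
# Each list is a space-separated string of healthy backends (may be empty).
select_backends() {
  affinity=$1
  local_b=$2
  remote_b=$3
  case "$affinity" in
    local)
      # Prefer local backends; fall back to remote ones only if none are left.
      if [ -n "$local_b" ]; then echo "$local_b"; else echo "$remote_b"; fi ;;
    remote)
      # Prefer remote backends; fall back to local ones only if none are left.
      if [ -n "$remote_b" ]; then echo "$remote_b"; else echo "$local_b"; fi ;;
    none|"")
      # No preference: every healthy backend is eligible.
      all="$local_b $remote_b"
      all=${all# }
      all=${all% }
      echo "$all" ;;
    *)
      echo "unknown affinity: $affinity" >&2
      return 1 ;;
  esac
}

local_pods="10.10.1.59:80 10.10.1.39:80"
remote_pods="10.20.1.66:80 10.20.1.41:80"

echo "local affinity:            $(select_backends local "$local_pods" "$remote_pods")"
echo "local affinity, no local:  $(select_backends local "" "$remote_pods")"
echo "remote affinity:           $(select_backends remote "$local_pods" "$remote_pods")"
echo "no affinity:               $(select_backends none "$local_pods" "$remote_pods")"
```

The second call mirrors the failover case: with local affinity and no healthy local backend, only the remote backends remain eligible.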

Set io.cilium/service-affinity: local on the rebel-base Service in kind-cluster1

root@kind:~# kubectl --context kind-cluster1 annotate service rebel-base io.cilium/service-affinity=local --overwrite

service/rebel-base annotated

root@kind:~# kubectl --context kind-cluster1 describe svc rebel-base

Name: rebel-base

Namespace: default

Labels: <none>

Annotations: io.cilium/global-service: true

io.cilium/service-affinity: local

- Test

root@kind:~# for i in $(seq 1 10); do kubectl --context kind-cluster1 exec -ti deployment/x-wing -- curl rebel-base; done

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

root@kind:~# for i in $(seq 1 10); do kubectl --context kind-cluster2 exec -ti deployment/x-wing -- curl rebel-base; done

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

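- From kind-cluster1, every request reaches a kind-cluster1 backend, because the local affinity makes the local cluster's backends preferred.

- From kind-cluster2, requests are still load-balanced across the backends of both clusters.

- Check the cilium service information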

root@kind:~# kubectl -n kube-system --context kind-cluster1 exec -it ds/cilium -- cilium service list --clustermesh-affinity

ID Frontend Service Type Backend

1 10.11.0.1:443 ClusterIP 1 => 172.18.0.3:6443 (active)

2 10.11.0.10:53 ClusterIP 1 => 10.10.0.184:53 (active)

2 => 10.10.0.252:53 (active)

3 10.11.0.10:9153 ClusterIP 1 => 10.10.0.184:9153 (active)

2 => 10.10.0.252:9153 (active)

4 10.11.218.4:2379 ClusterIP 1 => 10.10.1.250:2379 (active)

5 10.11.153.54:80 ClusterIP 1 => 10.10.1.59:80 (active) (preferred) # preferred when the service is accessed

2 => 10.10.1.39:80 (active) (preferred) # preferred when the service is accessed

3 => 10.20.1.66:80 (active)

4 => 10.20.1.41:80 (active)

root@kind:~# kubectl -n kube-system --context kind-cluster2 exec -it ds/cilium -- cilium service list --clustermesh-affinity

ID Frontend Service Type Backend

1 10.21.0.1:443 ClusterIP 1 => 172.18.0.5:6443 (active)

2 10.21.0.10:53 ClusterIP 1 => 10.20.0.3:53 (active)

2 => 10.20.0.244:53 (active)

3 10.21.0.10:9153 ClusterIP 1 => 10.20.0.244:9153 (active)

2 => 10.20.0.3:9153 (active)

4 10.21.236.203:2379 ClusterIP 1 => 10.20.1.254:2379 (active)

5 10.21.255.13:80 ClusterIP 1 => 10.20.1.66:80 (active)

2 => 10.20.1.41:80 (active)

3 => 10.10.1.59:80 (active)

4 => 10.10.1.39:80 (active)

Compared with kind-cluster2, the rebel-base backends listed in kind-cluster1 carry an extra preferred marker, which means they are chosen first when the service is accessed. The 10.10.X.X addresses are kind-cluster1 Pod IPs.
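The preferred backends can also be pulled out of the listing with a one-line filter. A minimal sketch, assuming the output layout of cilium service list --clustermesh-affinity shown above; the helper name preferred_backends is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the backend address of every line that is
# marked "(preferred)" in `cilium service list --clustermesh-affinity` output.
preferred_backends() {
  sed -n 's/.*=> *\([^ ]*\).*(preferred).*/\1/p'
}

# Demo input: the rebel-base entry from the kind-cluster1 listing above.
preferred_backends <<'EOF'
5 10.11.153.54:80 ClusterIP 1 => 10.10.1.59:80 (active) (preferred)
2 => 10.10.1.39:80 (active) (preferred)
3 => 10.20.1.66:80 (active)
4 => 10.20.1.41:80 (active)
EOF
```

For this input the filter prints the two kind-cluster1 Pod addresses, 10.10.1.59:80 and 10.10.1.39:80.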

Set io.cilium/service-affinity: remote on the rebel-base Service in kind-cluster1

root@kind:~# kubectl --context kind-cluster1 annotate service rebel-base io.cilium/service-affinity=remote --overwrite

service/rebel-base annotated

root@kind:~# kubectl --context kind-cluster1 describe svc rebel-base

Name: rebel-base

Namespace: default

Labels: <none>

Annotations: io.cilium/global-service: true

io.cilium/service-affinity: remote

- Test

root@kind:~# for i in $(seq 1 10); do kubectl --context kind-cluster1 exec -ti deployment/x-wing -- curl rebel-base; done

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

root@kind:~# for i in $(seq 1 10); do kubectl --context kind-cluster2 exec -ti deployment/x-wing -- curl rebel-base; done

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-1"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

{"Galaxy": "Alderaan", "Cluster": "Cluster-2"}

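- From kind-cluster1, every request reaches a kind-cluster2 backend, because the remote affinity makes the remote cluster's backends preferred.

- From kind-cluster2, requests are still load-balanced across the backends of both clusters.

- Check the cilium service information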

root@kind:~# kubectl -n kube-system --context kind-cluster1 exec -it ds/cilium -- cilium service list --clustermesh-affinity

ID Frontend Service Type Backend

1 10.11.0.1:443 ClusterIP 1 => 172.18.0.3:6443 (active)

2 10.11.0.10:53 ClusterIP 1 => 10.10.0.184:53 (active)

2 => 10.10.0.252:53 (active)

3 10.11.0.10:9153 ClusterIP 1 => 10.10.0.184:9153 (active)

2 => 10.10.0.252:9153 (active)

4 10.11.218.4:2379 ClusterIP 1 => 10.10.1.250:2379 (active)

5 10.11.153.54:80 ClusterIP 1 => 10.10.1.59:80 (active)

2 => 10.10.1.39:80 (active)

3 => 10.20.1.66:80 (active) (preferred) # chosen first when the service is accessed

4 => 10.20.1.41:80 (active) (preferred) # chosen first when the service is accessed

root@kind:~# kubectl -n kube-system --context kind-cluster2 exec -it ds/cilium -- cilium service list --clustermesh-affinity

ID Frontend Service Type Backend

1 10.21.0.1:443 ClusterIP 1 => 172.18.0.5:6443 (active)

2 10.21.0.10:53 ClusterIP 1 => 10.20.0.3:53 (active)

2 => 10.20.0.244:53 (active)

3 10.21.0.10:9153 ClusterIP 1 => 10.20.0.244:9153 (active)

2 => 10.20.0.3:9153 (active)

4 10.21.236.203:2379 ClusterIP 1 => 10.20.1.254:2379 (active)

5 10.21.255.13:80 ClusterIP 1 => 10.20.1.66:80 (active)

2 => 10.20.1.41:80 (active)

3 => 10.10.1.59:80 (active)

4 => 10.10.1.39:80 (active)

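Compared with kind-cluster2, the rebel-base service backends listed in kind-cluster1 carry an extra preferred flag, which means those backends are chosen first when the service is accessed. The 10.20.X.X addresses are Pod IPs from the kind-cluster2 cluster, so the preferred backends are the remote ones.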
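To see only the backends that carry the preferred flag, the listing can be filtered. This is a rough sketch under the assumption that the output keeps the column layout shown above (backend address directly after the => marker); preferred_backends is a hypothetical helper name, not a cilium command.

```shell
#!/usr/bin/env bash
# preferred_backends (hypothetical helper): reads the output of
# "cilium service list --clustermesh-affinity" on stdin and prints the
# address of every backend line marked "(preferred)".
preferred_backends() {
  awk '/\(preferred\)/ {
    # The backend address is the field that follows the "=>" marker.
    for (i = 1; i < NF; i++) {
      if ($i == "=>") { print $(i + 1); break }
    }
  }'
}
```

It could be fed from the same command used above, for example: kubectl -n kube-system --context kind-cluster1 exec ds/cilium -- cilium service list --clustermesh-affinity | preferred_backends. Comparing the printed prefixes with each cluster's Pod CIDR shows which cluster the preferred backends belong to.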

Service Affinity in practice

echoserver-service.yaml

Configuration file

# echoserver-service.yaml

---
apiVersion: v1
kind: Service
metadata:
  name: echoserver-service-local
  annotations:
    io.cilium/global-service: "true"
    io.cilium/service-affinity: local
spec:
  type: ClusterIP
  selector:
    app: echoserver
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: echoserver-service-remote
  annotations:
    io.cilium/global-service: "true"
    io.cilium/service-affinity: remote
spec:
  type: ClusterIP
  selector:
    app: echoserver
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: echoserver-service-none
  annotations:
    io.cilium/global-service: "true"
    io.cilium/service-affinity: none
spec:
  type: ClusterIP
  selector:
    app: echoserver
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: echoserver-daemonset
  labels:
    app: echoserver
spec:
  selector:
    matchLabels:
      app: echoserver
  template:
    metadata:
      labels:
        app: echoserver
    spec:
      tolerations:
        - key: node-role.kubernetes.io/master
          operator: Exists
          effect: NoSchedule
      containers:
        - name: echoserver
          image: harbor.dayuan1997.com/devops/ealen/echo-server:0.9.2
          env:
            - name: NODE
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName

netshoot-ds.yaml

Configuration file

# netshoot-ds.yaml

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: netshoot
spec:
  selector:
    matchLabels:
      app: netshoot
  template:
    metadata:
      labels:
        app: netshoot
    spec:
      tolerations:
        - key: node-role.kubernetes.io/master
          operator: Exists
          effect: NoSchedule
      containers:
        - name: netshoot
          image: harbor.dayuan1997.com/devops/nettool:0.9

Create the services in the kind-cluster1 and kind-cluster2 clusters

root@kind:~# cat service-affinity.sh

#!/bin/bash

NAME=cluster

NAMESPACE=service-affinity

kubectl --context kind-${NAME}1 create ns $NAMESPACE

kubectl --context kind-${NAME}2 create ns $NAMESPACE

kubectl --context kind-${NAME}1 -n $NAMESPACE apply -f netshoot-ds.yaml

kubectl --context kind-${NAME}2 -n $NAMESPACE apply -f netshoot-ds.yaml

kubectl --context kind-${NAME}1 -n $NAMESPACE apply -f echoserver-service.yaml

kubectl --context kind-${NAME}2 -n $NAMESPACE apply -f echoserver-service.yaml

cilium clustermesh status --context kind-${NAME}1 --wait

cilium clustermesh status --context kind-${NAME}2 --wait

kubectl -n$NAMESPACE wait --for=condition=Ready=true pods --all --context kind-${NAME}1

kubectl -n$NAMESPACE wait --for=condition=Ready=true pods --all --context kind-${NAME}2

root@kind:~# bash service-affinity.sh

- View the kind-cluster2 cluster information

root@kind:~# kubectl -n service-affinity get pods --context kind-cluster2

NAME READY STATUS RESTARTS AGE

echoserver-daemonset-5m6p6 1/1 Running 0 36s

echoserver-daemonset-lvfgk 1/1 Running 0 36s

netshoot-8nb8k 1/1 Running 0 38s

netshoot-mg4pt 1/1 Running 0 38s

root@kind:~# kubectl -n service-affinity get svc --context kind-cluster2

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

echoserver-service-local ClusterIP 10.21.197.14 <none> 80/TCP 2m59s

echoserver-service-none ClusterIP 10.21.196.200 <none> 80/TCP 2m59s

echoserver-service-remote ClusterIP 10.21.226.141 <none> 80/TCP 2m59s

- Test the services

root@kind:~# cat verify-service-affinity.sh

#!/bin/bash

set -v

# exec &>./verify-log-rec-2-verify-service-affinity.txt

NREQUESTS=10

echo "------------------------------------------------------"

echo Current_Context View:

echo "------------------------------------------------------"

kubectl config get-contexts

for affinity in local remote none; do
  echo "------------------------------------------------------"
  rm -f $affinity.txt
  echo "Sending $NREQUESTS requests to service-affinity=$affinity service"
  echo "------------------------------------------------------"
  for i in $(seq 1 $NREQUESTS); do
    Current_Cluster=`kubectl --context kind-cluster2 -n service-affinity exec -it ds/netshoot -- curl -q "http://echoserver-service-$affinity.service-affinity.svc.cluster.local?echo_env_body=NODE"`
    echo -e Current_Rsp_From_Cluster: ${Current_Cluster}
  done
done

echo "------------------------------------------------------"

Run the test from the kind-cluster2 cluster

root@kind:~# bash verify-service-affinity.sh

------------------------------------------------------

Sending 10 requests to service-affinity=local service

------------------------------------------------------

Current_Rsp_From_Cluster: "cluster2-worker"

Current_Rsp_From_Cluster: "cluster2-worker"

Current_Rsp_From_Cluster: "cluster2-worker"

Current_Rsp_From_Cluster: "cluster2-worker"

Current_Rsp_From_Cluster: "cluster2-control-plane"

Current_Rsp_From_Cluster: "cluster2-control-plane"

Current_Rsp_From_Cluster: "cluster2-worker"

Current_Rsp_From_Cluster: "cluster2-worker"

Current_Rsp_From_Cluster: "cluster2-worker"

Current_Rsp_From_Cluster: "cluster2-control-plane"

------------------------------------------------------

Sending 10 requests to service-affinity=remote service

------------------------------------------------------

Current_Rsp_From_Cluster: "cluster1-worker"

Current_Rsp_From_Cluster: "cluster1-worker"

Current_Rsp_From_Cluster: "cluster1-control-plane"

Current_Rsp_From_Cluster: "cluster1-control-plane"

Current_Rsp_From_Cluster: "cluster1-worker"

Current_Rsp_From_Cluster: "cluster1-worker"

Current_Rsp_From_Cluster: "cluster1-worker"

Current_Rsp_From_Cluster: "cluster1-control-plane"

Current_Rsp_From_Cluster: "cluster1-worker"

Current_Rsp_From_Cluster: "cluster1-control-plane"

------------------------------------------------------

Sending 10 requests to service-affinity=none service

------------------------------------------------------

Current_Rsp_From_Cluster: "cluster2-worker"

Current_Rsp_From_Cluster: "cluster2-worker"

Current_Rsp_From_Cluster: "cluster1-control-plane"

Current_Rsp_From_Cluster: "cluster1-worker"

Current_Rsp_From_Cluster: "cluster2-control-plane"

Current_Rsp_From_Cluster: "cluster1-control-plane"

Current_Rsp_From_Cluster: "cluster2-worker"

Current_Rsp_From_Cluster: "cluster2-worker"

Current_Rsp_From_Cluster: "cluster1-control-plane"

Current_Rsp_From_Cluster: "cluster2-control-plane"

------------------------------------------------------

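- Requests to the different services reach backend pods in different clusters:

  - local svc: all backend pods are in the local kind-cluster2 cluster
  - remote svc: all backend pods are in the remote kind-cluster1 cluster
  - none svc: backend pods are picked at random from both kind-cluster1 and kind-cluster2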
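The expectation for the local and remote services can also be checked mechanically rather than read off the log. The sketch below is an illustration only: all_from_cluster is a hypothetical helper, and it assumes node names start with the cluster name followed by a hyphen (cluster1-worker, cluster2-control-plane), as in the output above.

```shell
#!/usr/bin/env bash
# all_from_cluster (hypothetical helper): reads verify-service-affinity.sh
# response lines on stdin and succeeds only if at least one response was seen
# and every responding node name begins with "<expected>-".
all_from_cluster() {
  awk -v want="$1" '
    /^Current_Rsp_From_Cluster:/ {
      seen++
      # $2 is the quoted node name, e.g. "cluster2-worker".
      if (index($2, "\"" want "-") != 1) bad++
    }
    END { exit (seen > 0 && bad == 0) ? 0 : 1 }
  '
}
```

Feeding it the ten response lines for the local service with all_from_cluster cluster2, or those for the remote service with all_from_cluster cluster1, should succeed when run from kind-cluster2. The none service is expected to fail both checks, because its responses come from both clusters.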
