Cilium BGP Control Plane (Reposted)

Cilium BGP (Reposted)

I. Environment

| Host | IP |
|---|---|
| ubuntu | 172.16.94.141 |

| Software | Version |
|---|---|
| docker | 26.1.4 |
| helm | v3.15.0-rc.2 |
| kind | 0.18.0 |
| clab | 0.54.2 |
| kubernetes | 1.23.4 |
| ubuntu os | Ubuntu 20.04.6 LTS |
| kernel | 5.11.5 (see the kernel upgrade document) |

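II. Introduction to Cilium BGP

As Kubernetes adoption grows in the enterprise, users end up running traditional applications and cloud-native applications side by side. To connect the two, and to let networks outside the Kubernetes cluster dynamically learn routes to Pods over BGP, Cilium introduced support for the BGP protocol.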

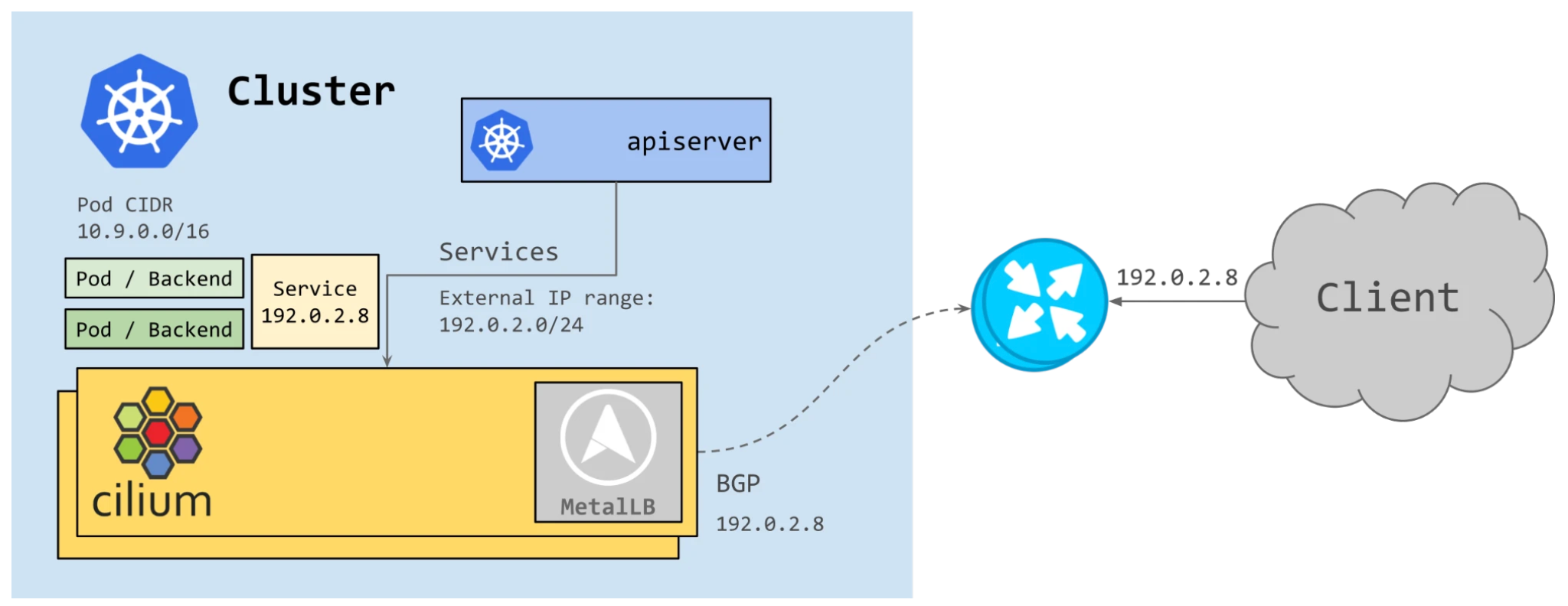

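BGP beta solution (Cilium + MetalLB)

- Cilium integrated BGP support starting with version 1.10, embedding MetalLB to expose Kubernetes to the outside. Cilium can assign IPs to Services of type LoadBalancer and announce the routes to its BGP neighbors, so traffic from outside the cluster can reach services running in k8s without any additional component.

- BGP support arrived in Cilium 1.10 and was further enhanced in 1.11. It lets operators advertise Pod CIDRs and Service IPs, and provides route peering between Cilium-managed Pods and the existing network infrastructure. These features are IPv4-only and depend on MetalLB.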

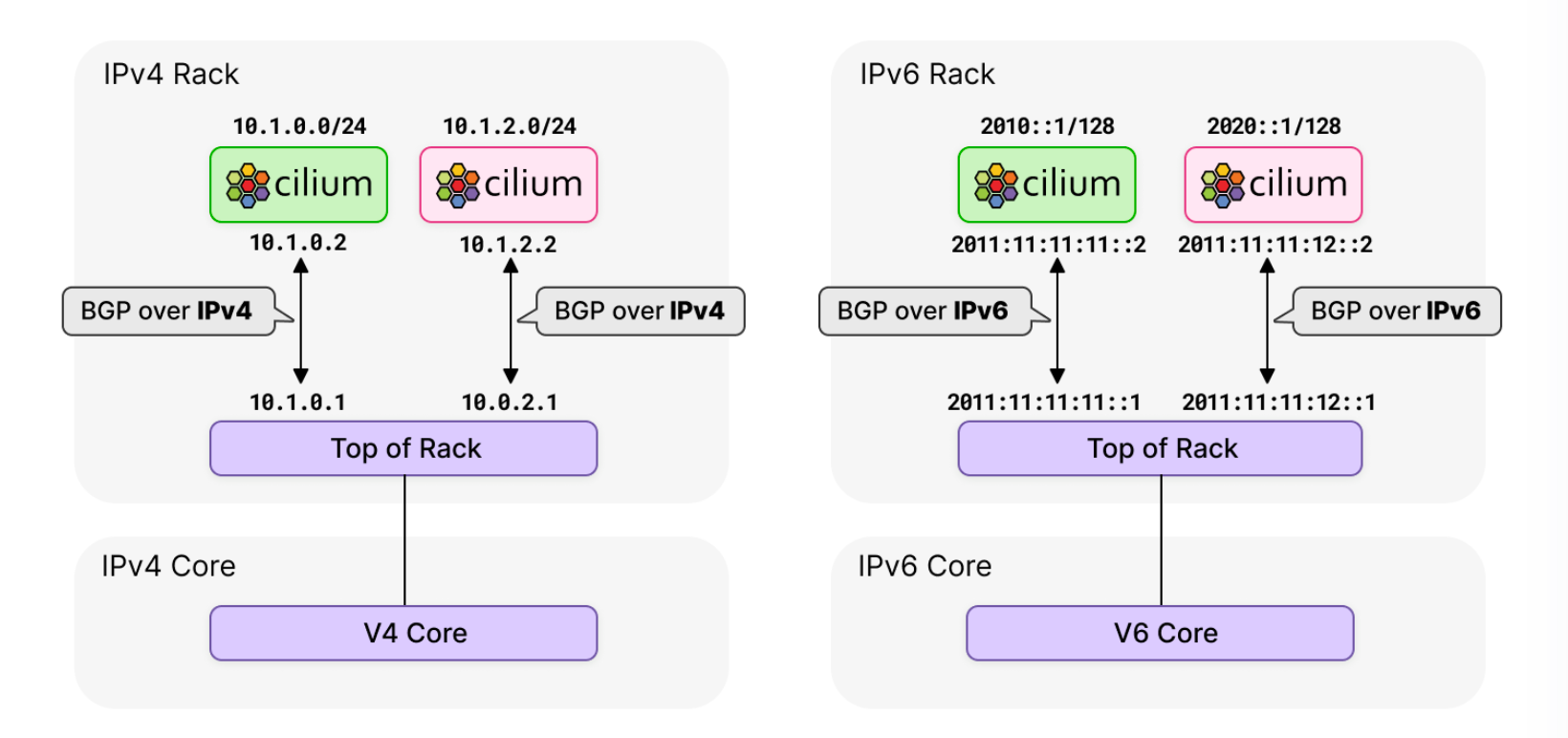

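Cilium BGP Control Plane solution

- As IPv6 adoption keeps growing, Cilium needed to offer BGP over IPv6. MetalLB provides some limited IPv6 support through FRR, but it is still experimental. The Cilium team evaluated several options and decided to move to the more feature-rich GoBGP.

- This solution is also the main direction of the Cilium community and is the recommended one.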

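III. Background for Using Cilium BGP

- As clusters grow, nodes from different data centers join the same Kubernetes cluster, so the nodes are no longer on the same Layer 2 network. The same happens in hybrid-cloud scenarios.

- Overlay tunnel encapsulation (Cilium VXLAN) solves connectivity across these networks, but it introduces a new problem: encapsulating and decapsulating every packet costs extra network performance.

- In this case we can use Cilium BGP Control Plane to build a directly routed underlay network. It connects node-to-node, node-to-pod, and pod-to-pod traffic, keeps network performance high, and scales to large clusters.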
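To put a rough number on the encapsulation overhead mentioned above, the sketch below adds up the headers that VXLAN wraps around each packet on an IPv4 underlay. The header sizes are the standard ones for VXLAN without VLAN tags or IP options; the 1500-byte underlay MTU is an assumption chosen for illustration and is not a value taken from this lab.

```python
# Bytes of underlay IP MTU consumed per packet by VXLAN over IPv4.
OUTER_IPV4 = 20       # outer IPv4 header, no options
OUTER_UDP = 8         # outer UDP header
VXLAN_HEADER = 8      # VXLAN header carrying the VNI
INNER_ETHERNET = 14   # the inner frame's Ethernet header travels inside the tunnel


def vxlan_overhead() -> int:
    """Return the number of MTU bytes lost to encapsulation."""
    return OUTER_IPV4 + OUTER_UDP + VXLAN_HEADER + INNER_ETHERNET


def inner_mtu(underlay_mtu: int) -> int:
    """Largest inner IP packet that fits without fragmentation."""
    return underlay_mtu - vxlan_overhead()


if __name__ == "__main__":
    # 1500 is a hypothetical underlay MTU used only for this example.
    print(vxlan_overhead(), inner_mtu(1500))
```

With native routing there is no such per-packet header cost, which is part of the motivation for the BGP-based underlay described in this article.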

IV. Setting Up the Cilium BGP Control Plane Environment

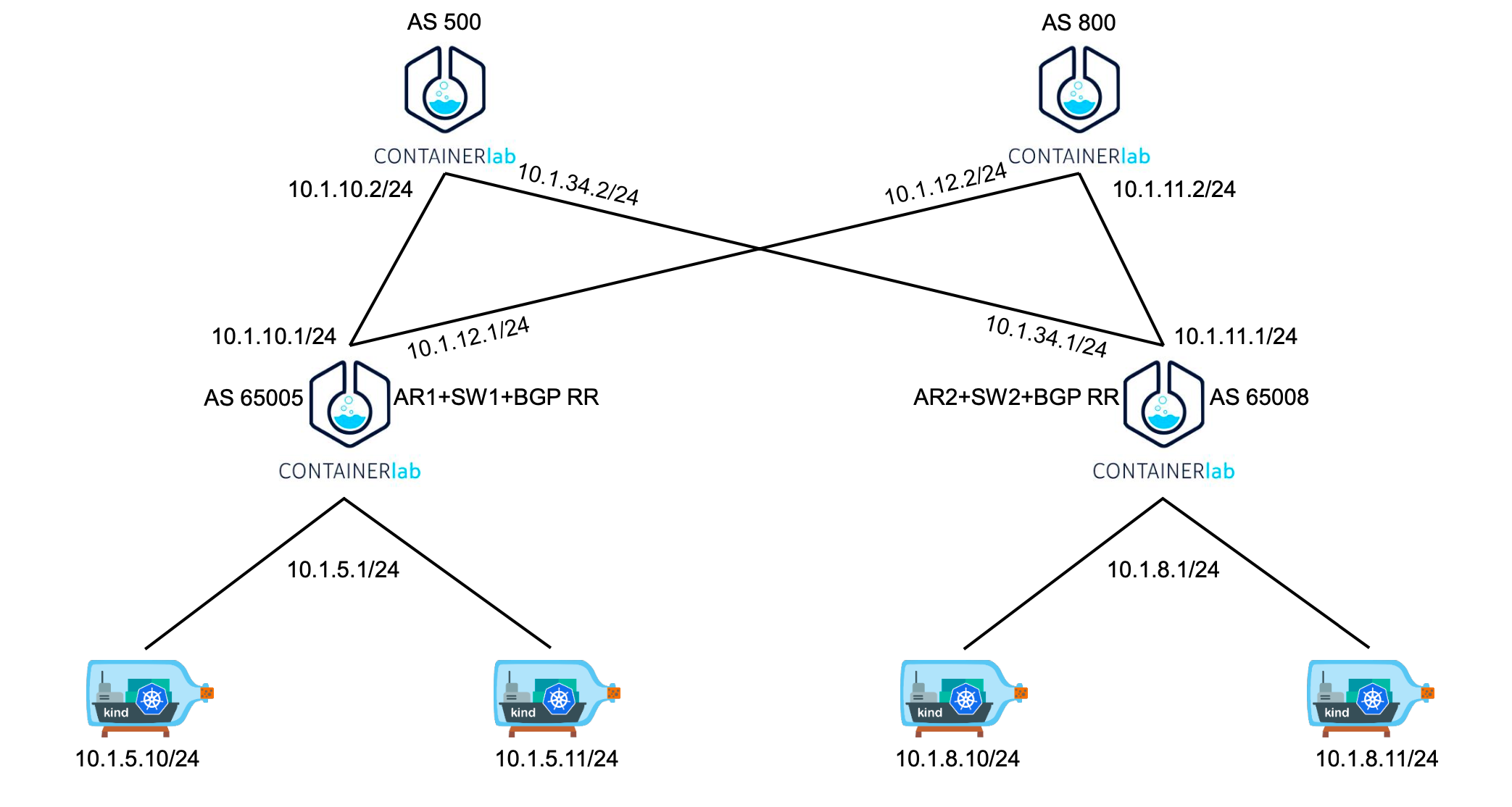

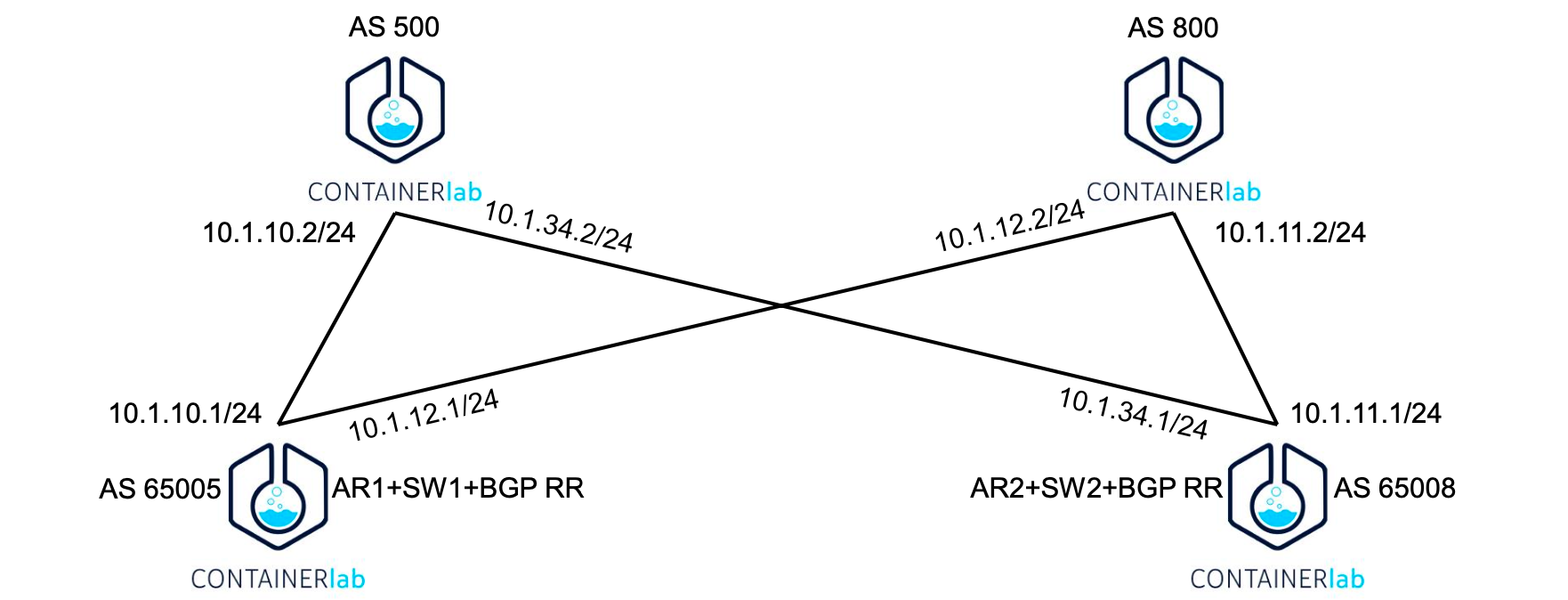

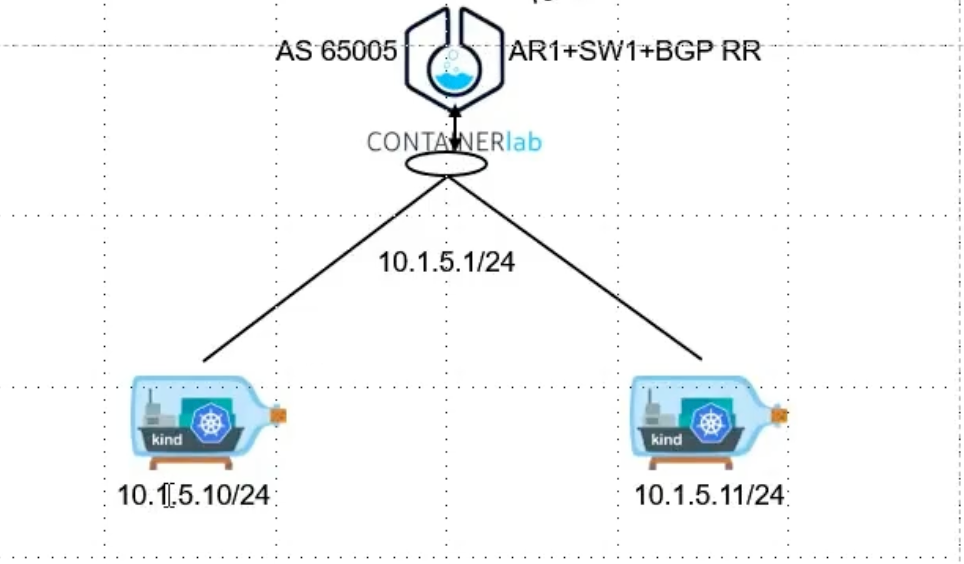

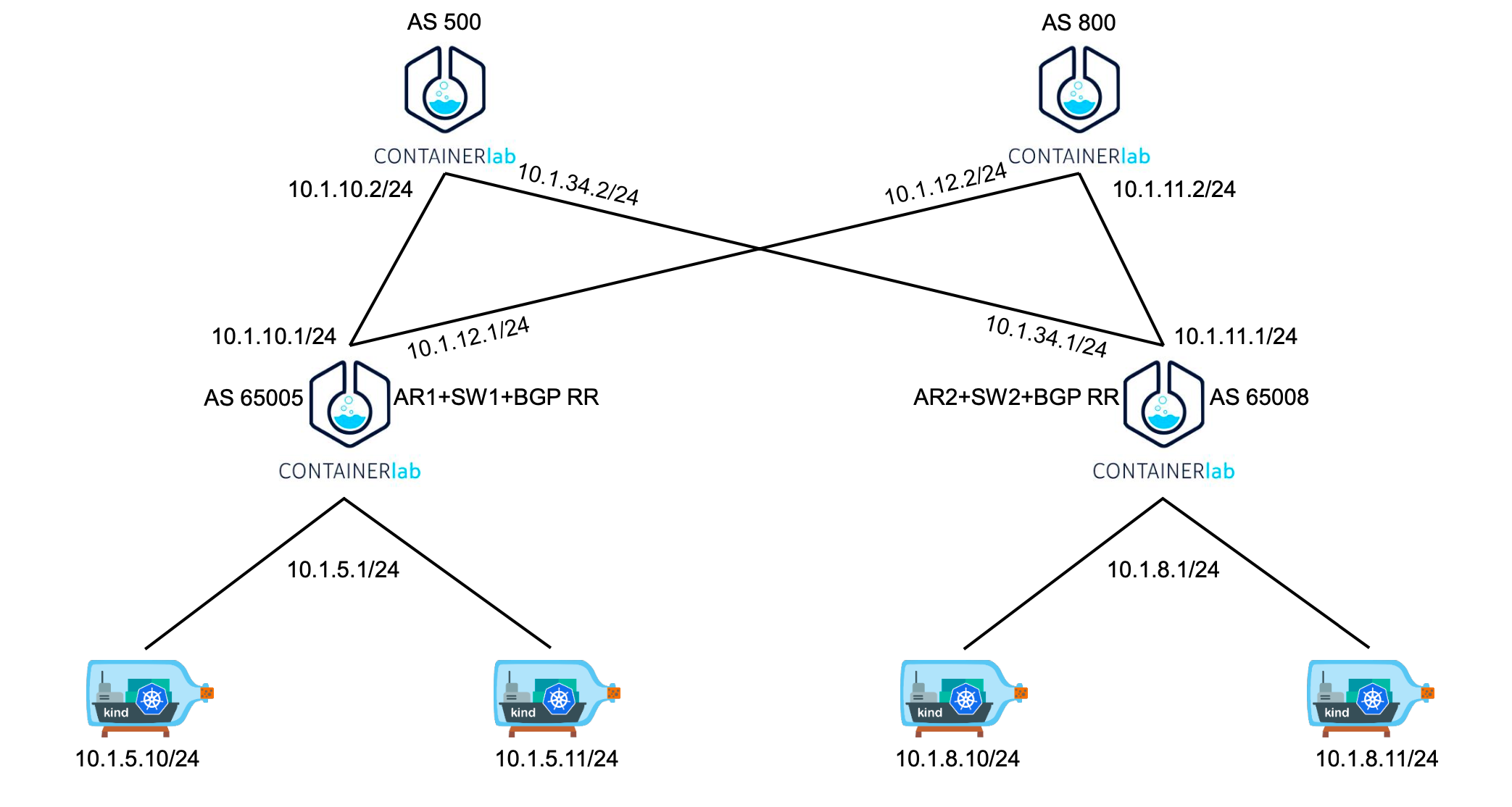

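Network architecture

- The architecture is shown in the diagrams above, including the IP addresses and ASN numbers.

- The k8s cluster has 4 nodes in total: 1 master and 3 workers, and the nodes are not all in the same subnet.

kind configuration file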

root@kind:~# cat install.sh
#!/bin/bash
date
set -v

cat <<EOF | kind create cluster --image=kindest/node:v1.23.4 --config=-
kind: Cluster
name: clab-bgp
apiVersion: kind.x-k8s.io/v1alpha4
networking:
  # Disable kind's default CNI (kindnet); we install the CNI ourselves
  disableDefaultCNI: true
  # Pod subnet
  podSubnet: "10.98.0.0/16"
nodes:
  - role: control-plane
    kubeadmConfigPatches:
      - |
        kind: InitConfiguration
        nodeRegistration:
          kubeletExtraArgs:
            node-ip: 10.1.5.10
            # Node label, used later by the BGP peering policy
            node-labels: "rack=rack0"
  - role: worker
    kubeadmConfigPatches:
      - |
        kind: JoinConfiguration
        nodeRegistration:
          kubeletExtraArgs:
            node-ip: 10.1.5.11
            # Node label, used later by the BGP peering policy
            node-labels: "rack=rack0"
  - role: worker
    kubeadmConfigPatches:
      - |
        kind: JoinConfiguration
        nodeRegistration:
          kubeletExtraArgs:
            node-ip: 10.1.8.10
            # Node label, used later by the BGP peering policy
            node-labels: "rack=rack1"
  - role: worker
    kubeadmConfigPatches:
      - |
        kind: JoinConfiguration
        nodeRegistration:
          kubeletExtraArgs:
            node-ip: 10.1.8.11
            # Node label, used later by the BGP peering policy
            node-labels: "rack=rack1"
containerdConfigPatches:
  - |-
    [plugins."io.containerd.grpc.v1.cri".registry.mirrors."harbor.evescn.com"]
      endpoint = ["https://harbor.evescn.com"]
EOF

# 2.remove taints
controller_node=`kubectl get nodes --no-headers -o custom-columns=NAME:.metadata.name| grep control-plane`
kubectl taint nodes $controller_node node-role.kubernetes.io/master:NoSchedule-
kubectl get nodes -o wide

# 3.install necessary tools
# The kind node containers are named after the cluster (clab-bgp-*),
# so match on that prefix; the original "grep cilium" matches nothing here.
for i in $(docker ps -a --format "table {{.Names}}" | grep clab-bgp)
do
    echo $i
    docker cp /usr/bin/ping $i:/usr/bin/ping
    docker exec -it $i bash -c "sed -i -e 's/jp.archive.ubuntu.com\|archive.ubuntu.com\|security.ubuntu.com/old-releases.ubuntu.com/g' /etc/apt/sources.list"
    docker exec -it $i bash -c "apt-get -y update >/dev/null && apt-get -y install net-tools tcpdump lrzsz bridge-utils >/dev/null 2>&1"
done

- Install the k8s cluster

root@kind:~# ./install.sh

Creating cluster "clab-bgp" ...

✓ Ensuring node image (kindest/node:v1.23.4) 🖼

✓ Preparing nodes 📦 📦 📦 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing StorageClass 💾

✓ Joining worker nodes 🚜

Set kubectl context to "kind-clab-bgp"

You can now use your cluster with:

kubectl cluster-info --context kind-clab-bgp

Not sure what to do next? 😅 Check out https://kind.sigs.k8s.io/docs/user/quick-start/

root@kind:~# kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

clab-bgp-control-plane NotReady control-plane,master 2m41s v1.23.4 <none> <none> Ubuntu 21.10 5.11.5-051105-generic containerd://1.5.10

clab-bgp-worker NotReady <none> 2m10s v1.23.4 <none> <none> Ubuntu 21.10 5.11.5-051105-generic containerd://1.5.10

clab-bgp-worker2 NotReady <none> 2m10s v1.23.4 <none> <none> Ubuntu 21.10 5.11.5-051105-generic containerd://1.5.10

clab-bgp-worker3 NotReady <none> 2m10s v1.23.4 <none> <none> Ubuntu 21.10 5.11.5-051105-generic containerd://1.5.10

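After the cluster is created, the nodes are NotReady because no CNI plugin is installed yet, and they have no IP address either. This is expected.

Create the clab container environment

Create the bridges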

root@kind:~# brctl addbr br-leaf0

root@kind:~# ifconfig br-leaf0 up

root@kind:~# brctl addbr br-leaf1

root@kind:~# ifconfig br-leaf1 up

root@kind:~# ip a l

13: br-leaf0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether 72:55:98:38:b1:04 brd ff:ff:ff:ff:ff:ff

inet6 fe80::7055:98ff:fe38:b104/64 scope link

valid_lft forever preferred_lft forever

14: br-leaf1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether 72:19:49:f9:71:83 brd ff:ff:ff:ff:ff:ff

inet6 fe80::7019:49ff:fef9:7183/64 scope link

valid_lft forever preferred_lft forever

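The two bridges let the kind nodes connect to containerlab through a virtual switch. Why not connect them directly? If 10.1.5.10/24 used a veth pair to connect straight to containerlab, 10.1.5.11/24 would have no spare port left to connect to.

clab network topology file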

# cilium.bgp.clab.yml
name: bgp
topology:
  nodes:
    spine0:
      kind: linux
      image: vyos/vyos:1.2.8
      cmd: /sbin/init
      binds:
        - /lib/modules:/lib/modules
        - ./startup-conf/spine0-boot.cfg:/opt/vyatta/etc/config/config.boot

    spine1:
      kind: linux
      image: vyos/vyos:1.2.8
      cmd: /sbin/init
      binds:
        - /lib/modules:/lib/modules
        - ./startup-conf/spine1-boot.cfg:/opt/vyatta/etc/config/config.boot

    leaf0:
      kind: linux
      image: vyos/vyos:1.2.8
      cmd: /sbin/init
      binds:
        - /lib/modules:/lib/modules
        - ./startup-conf/leaf0-boot.cfg:/opt/vyatta/etc/config/config.boot

    leaf1:
      kind: linux
      image: vyos/vyos:1.2.8
      cmd: /sbin/init
      binds:
        - /lib/modules:/lib/modules
        - ./startup-conf/leaf1-boot.cfg:/opt/vyatta/etc/config/config.boot

    br-leaf0:
      kind: bridge

    br-leaf1:
      kind: bridge

    server1:
      kind: linux
      image: harbor.dayuan1997.com/devops/nettool:0.9
      # Reuse the kind node's network by sharing its network namespace
      network-mode: container:clab-bgp-control-plane
      # Configure the node's service interface and switch the default route to its gateway, so traffic leaves through that interface.
      exec:
        - ip addr add 10.1.5.10/24 dev net0
        - ip route replace default via 10.1.5.1

    server2:
      kind: linux
      image: harbor.dayuan1997.com/devops/nettool:0.9
      # Reuse the kind node's network by sharing its network namespace
      network-mode: container:clab-bgp-worker
      # Configure the node's service interface and switch the default route to its gateway, so traffic leaves through that interface.
      exec:
        - ip addr add 10.1.5.11/24 dev net0
        - ip route replace default via 10.1.5.1

    server3:
      kind: linux
      image: harbor.dayuan1997.com/devops/nettool:0.9
      # Reuse the kind node's network by sharing its network namespace
      network-mode: container:clab-bgp-worker2
      # Configure the node's service interface and switch the default route to its gateway, so traffic leaves through that interface.
      exec:
        - ip addr add 10.1.8.10/24 dev net0
        - ip route replace default via 10.1.8.1

    server4:
      kind: linux
      image: harbor.dayuan1997.com/devops/nettool:0.9
      # Reuse the kind node's network by sharing its network namespace
      network-mode: container:clab-bgp-worker3
      # Configure the node's service interface and switch the default route to its gateway, so traffic leaves through that interface.
      exec:
        - ip addr add 10.1.8.11/24 dev net0
        - ip route replace default via 10.1.8.1

  links:
    - endpoints: ["br-leaf0:br-leaf0-net0", "server1:net0"]
    - endpoints: ["br-leaf0:br-leaf0-net1", "server2:net0"]
    - endpoints: ["br-leaf1:br-leaf1-net0", "server3:net0"]
    - endpoints: ["br-leaf1:br-leaf1-net1", "server4:net0"]

    - endpoints: ["leaf0:eth1", "spine0:eth1"]
    - endpoints: ["leaf0:eth2", "spine1:eth1"]
    - endpoints: ["leaf0:eth3", "br-leaf0:br-leaf0-net2"]

    - endpoints: ["leaf1:eth1", "spine0:eth2"]
    - endpoints: ["leaf1:eth2", "spine1:eth2"]
    - endpoints: ["leaf1:eth3", "br-leaf1:br-leaf1-net2"]
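The exec commands in the topology only work if each node's default gateway sits on the same subnet as the address assigned to net0. A minimal sketch of that sanity check, using Python's standard ipaddress module and the addresses copied from the topology file; the helper name gateway_on_link is made up for this example.

```python
import ipaddress

# (address assigned to net0, default gateway), copied from the exec sections.
SERVERS = {
    "server1": ("10.1.5.10/24", "10.1.5.1"),
    "server2": ("10.1.5.11/24", "10.1.5.1"),
    "server3": ("10.1.8.10/24", "10.1.8.1"),
    "server4": ("10.1.8.11/24", "10.1.8.1"),
}


def gateway_on_link(interface_cidr: str, gateway: str) -> bool:
    """True if the gateway lies inside the interface's connected subnet."""
    iface = ipaddress.ip_interface(interface_cidr)
    return ipaddress.ip_address(gateway) in iface.network


if __name__ == "__main__":
    for name, (cidr, gw) in SERVERS.items():
        status = "ok" if gateway_on_link(cidr, gw) else "gateway not on-link"
        print(f"{name}: {cidr} via {gw}: {status}")
```

Running a check like this before `clab deploy` can catch a mistyped gateway that would otherwise make `ip route replace default` fail.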

VyOS configuration files

spine0-boot.cfg configuration file

# ./startup-conf/spine0-boot.cfg

interfaces {

ethernet eth1 {

address 10.1.10.2/24

duplex auto

smp-affinity auto

speed auto

}

ethernet eth2 {

address 10.1.34.2/24

duplex auto

smp-affinity auto

speed auto

}

loopback lo {

}

}

protocols {

# BGP configuration; the local AS number is 500

bgp 500 {

# Neighbor in another AS, with its AS number

neighbor 10.1.10.1 {

remote-as 65005

}

# Neighbor in another AS, with its AS number

neighbor 10.1.34.1 {

remote-as 65008

}

parameters {

# Router ID of this BGP router

router-id 10.1.10.2

}

}

}

system {

config-management {

commit-revisions 100

}

console {

device ttyS0 {

speed 9600

}

}

host-name spine0

login {

user vyos {

authentication {

encrypted-password $6$QxPS.uk6mfo$9QBSo8u1FkH16gMyAVhus6fU3LOzvLR9Z9.82m3tiHFAxTtIkhaZSWssSgzt4v4dGAL8rhVQxTg0oAG9/q11h/

plaintext-password ""

}

level admin

}

}

ntp {

server 0.pool.ntp.org {

}

server 1.pool.ntp.org {

}

server 2.pool.ntp.org {

}

}

syslog {

global {

facility all {

level info

}

facility protocols {

level debug

}

}

}

time-zone UTC

}

/* Warning: Do not remove the following line. */

/* === vyatta-config-version: "qos@1:dhcp-server@5:webgui@1:pppoe-server@2:webproxy@2:firewall@5:pptp@1:dns-forwarding@1:mdns@1:quagga@7:webproxy@1:snmp@1:system@10:conntrack@1:l2tp@1:broadcast-relay@1:dhcp-relay@2:conntrack-sync@1:vrrp@2:ipsec@5:ntp@1:config-management@1:wanloadbalance@3:ssh@1:nat@4:zone-policy@1:cluster@1" === */

/* Release version: 1.2.8 */

spine1-boot.cfg configuration file

# ./startup-conf/spine1-boot.cfg

interfaces {

ethernet eth1 {

address 10.1.12.2/24

duplex auto

smp-affinity auto

speed auto

}

ethernet eth2 {

address 10.1.11.2/24

duplex auto

smp-affinity auto

speed auto

}

loopback lo {

}

}

protocols {

bgp 800 {

neighbor 10.1.11.1 {

remote-as 65008

}

neighbor 10.1.12.1 {

remote-as 65005

}

parameters {

router-id 10.1.12.2

}

}

}

system {

config-management {

commit-revisions 100

}

console {

device ttyS0 {

speed 9600

}

}

host-name spine1

login {

user vyos {

authentication {

encrypted-password $6$QxPS.uk6mfo$9QBSo8u1FkH16gMyAVhus6fU3LOzvLR9Z9.82m3tiHFAxTtIkhaZSWssSgzt4v4dGAL8rhVQxTg0oAG9/q11h/

plaintext-password ""

}

level admin

}

}

ntp {

server 0.pool.ntp.org {

}

server 1.pool.ntp.org {

}

server 2.pool.ntp.org {

}

}

syslog {

global {

facility all {

level info

}

facility protocols {

level debug

}

}

}

time-zone UTC

}

/* Warning: Do not remove the following line. */

/* === vyatta-config-version: "qos@1:dhcp-server@5:webgui@1:pppoe-server@2:webproxy@2:firewall@5:pptp@1:dns-forwarding@1:mdns@1:quagga@7:webproxy@1:snmp@1:system@10:conntrack@1:l2tp@1:broadcast-relay@1:dhcp-relay@2:conntrack-sync@1:vrrp@2:ipsec@5:ntp@1:config-management@1:wanloadbalance@3:ssh@1:nat@4:zone-policy@1:cluster@1" === */

/* Release version: 1.2.8 */

leaf0-boot.cfg configuration file

# ./startup-conf/leaf0-boot.cfg

interfaces {

ethernet eth1 {

address 10.1.10.1/24

duplex auto

smp-affinity auto

speed auto

}

ethernet eth2 {

address 10.1.12.1/24

duplex auto

smp-affinity auto

speed auto

}

ethernet eth3 {

address 10.1.5.1/24

duplex auto

smp-affinity auto

speed auto

}

loopback lo {

}

}

# NAT configuration, so that servers in this network can reach the outside

nat {

source {

rule 100 {

outbound-interface eth0

source {

address 10.1.0.0/16

}

translation {

address masquerade

}

}

}

}

protocols {

# BGP configuration; the local AS number is 65005

bgp 65005 {

# Locally originated network

address-family {

ipv4-unicast {

network 10.1.5.0/24 {

}

}

}

# Route-reflector client and its AS number (same AS as this leaf, so the session is iBGP)

neighbor 10.1.5.10 {

address-family {

ipv4-unicast {

route-reflector-client

}

}

remote-as 65005

}

# Route-reflector client and its AS number (same AS as this leaf, so the session is iBGP)

neighbor 10.1.5.11 {

address-family {

ipv4-unicast {

route-reflector-client

}

}

remote-as 65005

}

# Neighbor in another AS, with its AS number

neighbor 10.1.10.2 {

remote-as 500

}

# Neighbor in another AS, with its AS number

neighbor 10.1.12.2 {

remote-as 800

}

parameters {

# Allow ECMP multipath across routes whose AS paths differ but have equal length

bestpath {

as-path {

multipath-relax

}

}

# Router ID of this BGP router

router-id 10.1.5.1

}

}

}

system {

config-management {

commit-revisions 100

}

console {

device ttyS0 {

speed 9600

}

}

host-name leaf0

login {

user vyos {

authentication {

encrypted-password $6$QxPS.uk6mfo$9QBSo8u1FkH16gMyAVhus6fU3LOzvLR9Z9.82m3tiHFAxTtIkhaZSWssSgzt4v4dGAL8rhVQxTg0oAG9/q11h/

plaintext-password ""

}

level admin

}

}

ntp {

server 0.pool.ntp.org {

}

server 1.pool.ntp.org {

}

server 2.pool.ntp.org {

}

}

syslog {

global {

facility all {

level info

}

facility protocols {

level debug

}

}

}

time-zone UTC

}

/* Warning: Do not remove the following line. */

/* === vyatta-config-version: "qos@1:dhcp-server@5:webgui@1:pppoe-server@2:webproxy@2:firewall@5:pptp@1:dns-forwarding@1:mdns@1:quagga@7:webproxy@1:snmp@1:system@10:conntrack@1:l2tp@1:broadcast-relay@1:dhcp-relay@2:conntrack-sync@1:vrrp@2:ipsec@5:ntp@1:config-management@1:wanloadbalance@3:ssh@1:nat@4:zone-policy@1:cluster@1" === */

/* Release version: 1.2.8 */

leaf1-boot.cfg configuration file

# ./startup-conf/leaf1-boot.cfg

interfaces {

ethernet eth1 {

address 10.1.34.1/24

duplex auto

smp-affinity auto

speed auto

}

ethernet eth2 {

address 10.1.11.1/24

duplex auto

smp-affinity auto

speed auto

}

ethernet eth3 {

address 10.1.8.1/24

duplex auto

smp-affinity auto

speed auto

}

loopback lo {

}

}

nat {

source {

rule 100 {

outbound-interface eth0

source {

address 10.1.0.0/16

}

translation {

address masquerade

}

}

}

}

protocols {

bgp 65008 {

address-family {

ipv4-unicast {

network 10.1.8.0/24 {

}

}

}

neighbor 10.1.8.10 {

address-family {

ipv4-unicast {

route-reflector-client

}

}

remote-as 65008

}

neighbor 10.1.8.11 {

address-family {

ipv4-unicast {

route-reflector-client

}

}

remote-as 65008

}

neighbor 10.1.11.2 {

remote-as 800

}

neighbor 10.1.34.2 {

remote-as 500

}

parameters {

bestpath {

as-path {

multipath-relax

}

}

router-id 10.1.8.1

}

}

}

system {

config-management {

commit-revisions 100

}

console {

device ttyS0 {

speed 9600

}

}

host-name leaf1

login {

user vyos {

authentication {

encrypted-password $6$QxPS.uk6mfo$9QBSo8u1FkH16gMyAVhus6fU3LOzvLR9Z9.82m3tiHFAxTtIkhaZSWssSgzt4v4dGAL8rhVQxTg0oAG9/q11h/

plaintext-password ""

}

level admin

}

}

ntp {

server 0.pool.ntp.org {

}

server 1.pool.ntp.org {

}

server 2.pool.ntp.org {

}

}

syslog {

global {

facility all {

level info

}

facility protocols {

level debug

}

}

}

time-zone UTC

}

/* Warning: Do not remove the following line. */

/* === vyatta-config-version: "qos@1:dhcp-server@5:webgui@1:pppoe-server@2:webproxy@2:firewall@5:pptp@1:dns-forwarding@1:mdns@1:quagga@7:webproxy@1:snmp@1:system@10:conntrack@1:l2tp@1:broadcast-relay@1:dhcp-relay@2:conntrack-sync@1:vrrp@2:ipsec@5:ntp@1:config-management@1:wanloadbalance@3:ssh@1:nat@4:zone-policy@1:cluster@1" === */

/* Release version: 1.2.8 */
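The four VyOS configurations above define twelve neighbor statements. A session is iBGP when the local and remote AS numbers match and eBGP otherwise. The sketch below simply tabulates the neighbor entries from the configs and classifies them; the Session type and session_type helper are names invented for this illustration.

```python
from collections import Counter
from typing import NamedTuple


class Session(NamedTuple):
    router: str
    local_as: int
    neighbor: str
    remote_as: int


# One entry per "neighbor" block in the four boot configs above.
SESSIONS = [
    Session("spine0", 500, "10.1.10.1", 65005),
    Session("spine0", 500, "10.1.34.1", 65008),
    Session("spine1", 800, "10.1.11.1", 65008),
    Session("spine1", 800, "10.1.12.1", 65005),
    Session("leaf0", 65005, "10.1.5.10", 65005),
    Session("leaf0", 65005, "10.1.5.11", 65005),
    Session("leaf0", 65005, "10.1.10.2", 500),
    Session("leaf0", 65005, "10.1.12.2", 800),
    Session("leaf1", 65008, "10.1.8.10", 65008),
    Session("leaf1", 65008, "10.1.8.11", 65008),
    Session("leaf1", 65008, "10.1.11.2", 800),
    Session("leaf1", 65008, "10.1.34.2", 500),
]


def session_type(session: Session) -> str:
    """Same AS on both ends means iBGP, different AS means eBGP."""
    return "iBGP" if session.local_as == session.remote_as else "eBGP"


if __name__ == "__main__":
    for s in SESSIONS:
        print(f"{s.router} (AS {s.local_as}) -> {s.neighbor} (AS {s.remote_as}): {session_type(s)}")
    print(Counter(session_type(s) for s in SESSIONS))
```

Read this way, each leaf runs iBGP toward the Kubernetes nodes in its rack (as a route reflector) and eBGP toward both spines.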

Deploy the lab

# tree -L 2 ./

./

├── cilium.bgp.clab.yml

└── startup-conf

├── spine0-boot.cfg

├── spine1-boot.cfg

├── leaf0-boot.cfg

└── leaf1-boot.cfg

# clab deploy -t cilium.bgp.clab.yml

INFO[0000] Containerlab v0.54.2 started

INFO[0000] Parsing & checking topology file: clab.yaml

INFO[0000] Creating docker network: Name="clab", IPv4Subnet="172.20.20.0/24", IPv6Subnet="2001:172:20:20::/64", MTU=1500

INFO[0000] Creating lab directory: /root/wcni-kind/cilium/cilium_1.13.0-rc5/cilium-bgp-control-plane-lb-ipam/clab-bgp

WARN[0000] node clab-bgp-control-plane referenced in namespace sharing not found in topology definition, considering it an external dependency.

WARN[0000] node clab-bgp-worker referenced in namespace sharing not found in topology definition, considering it an external dependency.

WARN[0000] node clab-bgp-worker2 referenced in namespace sharing not found in topology definition, considering it an external dependency.

WARN[0000] node clab-bgp-worker3 referenced in namespace sharing not found in topology definition, considering it an external dependency.

INFO[0000] Creating container: "leaf1"

INFO[0000] Creating container: "spine0"

INFO[0001] Created link: leaf1:eth1 <--> spine0:eth2

INFO[0001] Creating container: "spine1"

INFO[0001] Created link: leaf1:eth3 <--> br-leaf1:br-leaf1-net2

INFO[0001] Creating container: "leaf0"

INFO[0003] Created link: leaf1:eth2 <--> spine1:eth2

INFO[0003] Creating container: "server3"

INFO[0003] Created link: leaf0:eth1 <--> spine0:eth1

INFO[0003] Created link: leaf0:eth2 <--> spine1:eth1

INFO[0003] Created link: leaf0:eth3 <--> br-leaf0:br-leaf0-net2

INFO[0003] Creating container: "server1"

INFO[0004] Created link: br-leaf1:br-leaf1-net0 <--> server3:net0

INFO[0005] Created link: br-leaf0:br-leaf0-net0 <--> server1:net0

INFO[0005] Creating container: "server4"

INFO[0006] Creating container: "server2"

INFO[0006] Created link: br-leaf1:br-leaf1-net1 <--> server4:net0

INFO[0007] Created link: br-leaf0:br-leaf0-net1 <--> server2:net0

INFO[0007] Executed command "ip addr add 10.1.8.10/24 dev net0" on the node "server3". stdout:

INFO[0007] Executed command "ip route replace default via 10.1.8.1" on the node "server3". stdout:

INFO[0007] Executed command "ip addr add 10.1.5.10/24 dev net0" on the node "server1". stdout:

INFO[0007] Executed command "ip route replace default via 10.1.5.1" on the node "server1". stdout:

INFO[0007] Executed command "ip addr add 10.1.8.11/24 dev net0" on the node "server4". stdout:

INFO[0007] Executed command "ip route replace default via 10.1.8.1" on the node "server4". stdout:

INFO[0007] Executed command "ip addr add 10.1.5.11/24 dev net0" on the node "server2". stdout:

INFO[0007] Executed command "ip route replace default via 10.1.5.1" on the node "server2". stdout:

INFO[0007] Adding containerlab host entries to /etc/hosts file

INFO[0007] Adding ssh config for containerlab nodes

INFO[0007] 🎉 New containerlab version 0.56.0 is available! Release notes: https://containerlab.dev/rn/0.56/

Run 'containerlab version upgrade' to upgrade or go check other installation options at https://containerlab.dev/install/

+---+------------------+--------------+------------------------------------------+-------+---------+----------------+----------------------+

| # | Name | Container ID | Image | Kind | State | IPv4 Address | IPv6 Address |

+---+------------------+--------------+------------------------------------------+-------+---------+----------------+----------------------+

| 1 | clab-bgp-leaf0 | 862dd78315dc | vyos/vyos:1.2.8 | linux | running | 172.20.20.5/24 | 2001:172:20:20::5/64 |

| 2 | clab-bgp-leaf1 | 97c3a88de0e5 | vyos/vyos:1.2.8 | linux | running | 172.20.20.2/24 | 2001:172:20:20::2/64 |

| 3 | clab-bgp-server1 | 4b857414c61d | harbor.dayuan1997.com/devops/nettool:0.9 | linux | running | N/A | N/A |

| 4 | clab-bgp-server2 | e8d5118ecf24 | harbor.dayuan1997.com/devops/nettool:0.9 | linux | running | N/A | N/A |

| 5 | clab-bgp-server3 | 6423690481c2 | harbor.dayuan1997.com/devops/nettool:0.9 | linux | running | N/A | N/A |

| 6 | clab-bgp-server4 | 6ce574829ad9 | harbor.dayuan1997.com/devops/nettool:0.9 | linux | running | N/A | N/A |

| 7 | clab-bgp-spine0 | c653397be1ac | vyos/vyos:1.2.8 | linux | running | 172.20.20.3/24 | 2001:172:20:20::3/64 |

| 8 | clab-bgp-spine1 | 5bf97a972034 | vyos/vyos:1.2.8 | linux | running | 172.20.20.4/24 | 2001:172:20:20::4/64 |

+---+------------------+--------------+------------------------------------------+-------+---------+----------------+----------------------+

Check the k8s cluster information

root@kind:~# kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

clab-bgp-control-plane NotReady control-plane,master 5m33s v1.23.4 10.1.5.10 <none> Ubuntu 21.10 5.11.5-051105-generic containerd://1.5.10

clab-bgp-worker NotReady <none> 4m55s v1.23.4 10.1.5.11 <none> Ubuntu 21.10 5.11.5-051105-generic containerd://1.5.10

clab-bgp-worker2 NotReady <none> 4m54s v1.23.4 10.1.8.10 <none> Ubuntu 21.10 5.11.5-051105-generic containerd://1.5.10

clab-bgp-worker3 NotReady <none> 5m7s v1.23.4 10.1.8.11 <none> Ubuntu 21.10 5.11.5-051105-generic containerd://1.5.10

# Show the node's IP addresses

root@kind:~# docker exec -it clab-bgp-control-plane ip a l

9: eth0@if10: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:12:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.18.0.3/16 brd 172.18.255.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fc00:f853:ccd:e793::3/64 scope global nodad

valid_lft forever preferred_lft forever

inet6 fe80::42:acff:fe12:3/64 scope link

valid_lft forever preferred_lft forever

34: net0@if35: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9500 qdisc noqueue state UP group default

link/ether aa:c1:ab:f9:fe:fb brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.1.5.10/24 scope global net0

valid_lft forever preferred_lft forever

inet6 fe80::a8c1:abff:fef9:fefb/64 scope link

valid_lft forever preferred_lft forever

# Show the node's routing table

root@kind:~# docker exec -it clab-bgp-control-plane ip r s

default via 10.1.5.1 dev net0

10.1.5.0/24 dev net0 proto kernel scope link src 10.1.5.10

172.18.0.0/16 dev eth0 proto kernel scope link src 172.18.0.3

The k8s cluster now shows an IP address for every node. Inside the node container the new address 10.1.5.10/24 is present on net0, and the default route has changed to default via 10.1.5.1 dev net0.

Install Cilium

root@kind:~# cat cilium.sh

#!/bin/bash
helm repo add cilium https://helm.cilium.io > /dev/null 2>&1
helm repo update > /dev/null 2>&1

helm install cilium cilium/cilium \
    --version 1.13.0-rc5 \
    --namespace kube-system \
    --set debug.enabled=true \
    --set debug.verbose=datapath \
    --set monitorAggregation=none \
    --set cluster.name=clab-bgp-cplane \
    --set ipam.mode=kubernetes \
    --set tunnel=disabled \
    --set ipv4NativeRoutingCIDR=10.0.0.0/8 \
    --set bgpControlPlane.enabled=true \
    --set k8s.requireIPv4PodCIDR=true

root@kind:~# bash cilium.sh

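--set parameter explanations

- --set ipam.mode=kubernetes

  - Meaning: sets the IP address management (IPAM) mode to Kubernetes.

  - Purpose: Cilium relies on the IP address allocation provided by Kubernetes.

- --set tunnel=disabled

  - Meaning: disables tunnel mode.

  - Purpose: with tunneling disabled, Cilium does not use VXLAN and routes packets directly between hosts, i.e. direct-routing mode.

- --set ipv4NativeRoutingCIDR="10.0.0.0/8"

  - Meaning: specifies the CIDR range used for IPv4 native routing, here 10.0.0.0/8.

  - Purpose: destinations inside this range are routed natively without SNAT. With masquerading enabled (the default), Pod traffic to destinations outside the range is SNATed.

- --set bgpControlPlane.enabled=true

  - Meaning: enables the BGP control plane.

  - Purpose: Cilium uses BGP (Border Gateway Protocol) for route control and advertisement.

- --set k8s.requireIPv4PodCIDR=true

  - Meaning: requires Kubernetes to provide an IPv4 PodCIDR.

  - Purpose: Kubernetes supplies the IPv4 PodCIDR, and Cilium uses it for IP address allocation and routing.

There is no need to set k8sServiceHost and k8sServicePort. The cluster was probably initialized with some settings that default to the 172.18.0.x addresses, so setting them to 10.0.5.10 here actually makes the Cilium installation fail.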
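As a rough model of the ipv4NativeRoutingCIDR setting described above, the sketch below checks whether a destination falls inside 10.0.0.0/8 and would therefore be routed without SNAT. This is a simplification for illustration only: Cilium's real masquerading decision involves more conditions, and the function name is_natively_routed is hypothetical.

```python
import ipaddress

# Value passed to --set ipv4NativeRoutingCIDR in cilium.sh.
NATIVE_ROUTING_CIDR = ipaddress.ip_network("10.0.0.0/8")


def is_natively_routed(destination: str) -> bool:
    """True if the destination is inside the native-routing CIDR (no SNAT in this model)."""
    return ipaddress.ip_address(destination) in NATIVE_ROUTING_CIDR


if __name__ == "__main__":
    # Pod IP, node IP, kind docker network IP, and an arbitrary external address.
    for dst in ["10.98.2.249", "10.1.8.10", "172.18.0.3", "8.8.8.8"]:
        action = "route natively" if is_natively_routed(dst) else "masquerade"
        print(f"{dst}: {action}")
```

Choosing 10.0.0.0/8 covers both the Pod subnet (10.98.0.0/16) and the lab's node subnets (10.1.5.0/24, 10.1.8.0/24), so Pod source addresses stay visible across the fabric.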

- Check the installed services

root@kind:~# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system cilium-64jd5 1/1 Running 0 2m24s

kube-system cilium-9hxns 1/1 Running 0 2m24s

kube-system cilium-gcfdm 1/1 Running 0 2m24s

kube-system cilium-operator-76564696fd-j6rcw 1/1 Running 0 2m24s

kube-system cilium-operator-76564696fd-tltxv 1/1 Running 0 2m24s

kube-system cilium-r7r5x 1/1 Running 0 2m24s

kube-system coredns-64897985d-fp477 1/1 Running 0 18m

kube-system coredns-64897985d-qtcj7 1/1 Running 0 18m

kube-system etcd-clab-bgp-control-plane 1/1 Running 0 18m

kube-system kube-apiserver-clab-bgp-control-plane 1/1 Running 0 18m

kube-system kube-controller-manager-clab-bgp-control-plane 1/1 Running 0 18m

kube-system kube-proxy-cxd7j 1/1 Running 0 17m

kube-system kube-proxy-jxm2g 1/1 Running 0 18m

kube-system kube-proxy-n6jzx 1/1 Running 0 17m

kube-system kube-proxy-xf9rs 1/1 Running 0 17m

kube-system kube-scheduler-clab-bgp-control-plane 1/1 Running 0 18m

local-path-storage local-path-provisioner-5ddd94ff66-9d9xn 1/1 Running 0 18m

Create the CiliumBGPPeeringPolicy rules

root@kind:~# cat bgp_peering_policy.yaml

---
apiVersion: "cilium.io/v2alpha1"
kind: CiliumBGPPeeringPolicy
metadata:
  name: rack0
spec:
  nodeSelector:
    # Per the kind config above, this selects clab-bgp-control-plane and clab-bgp-worker
    matchLabels:
      rack: rack0
  virtualRouters:
    - localASN: 65005
      exportPodCIDR: true
      neighbors:
        - peerAddress: "10.1.5.1/24"
          peerASN: 65005
---
apiVersion: "cilium.io/v2alpha1"
kind: CiliumBGPPeeringPolicy
metadata:
  name: rack1
spec:
  nodeSelector:
    # Per the kind config above, this selects clab-bgp-worker2 and clab-bgp-worker3
    matchLabels:
      rack: rack1
  virtualRouters:
    - localASN: 65008
      exportPodCIDR: true
      neighbors:
        - peerAddress: "10.1.8.1/24"
          peerASN: 65008

root@kind:~# kubectl apply -f bgp_peering_policy.yaml

ciliumbgppeeringpolicy.cilium.io/rack0 created

ciliumbgppeeringpolicy.cilium.io/rack1 created

root@kind:~# kubectl get ciliumbgppeeringpolicy

NAME AGE

rack0 9s

rack1 9s
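The two policies are bound to nodes purely through the rack label set in the kind configuration. The sketch below models that matchLabels selection with plain dictionaries, using the labels, ASNs and peer addresses from this article; it only illustrates the selection logic and is not how Cilium implements it internally.

```python
# Node labels as set via node-labels in the kind configuration.
NODE_LABELS = {
    "clab-bgp-control-plane": {"rack": "rack0"},
    "clab-bgp-worker": {"rack": "rack0"},
    "clab-bgp-worker2": {"rack": "rack1"},
    "clab-bgp-worker3": {"rack": "rack1"},
}

# Relevant fields of the two CiliumBGPPeeringPolicy objects.
POLICIES = {
    "rack0": {"match_labels": {"rack": "rack0"}, "local_asn": 65005, "peer": "10.1.5.1"},
    "rack1": {"match_labels": {"rack": "rack1"}, "local_asn": 65008, "peer": "10.1.8.1"},
}


def policies_for(node: str) -> list[str]:
    """Names of policies whose matchLabels are all present on the node."""
    labels = NODE_LABELS[node]
    return [
        name
        for name, policy in POLICIES.items()
        if all(labels.get(k) == v for k, v in policy["match_labels"].items())
    ]


if __name__ == "__main__":
    for node in NODE_LABELS:
        for name in policies_for(node):
            p = POLICIES[name]
            print(f"{node}: policy {name}, local AS {p['local_asn']}, peer {p['peer']}")
```

Each node ends up with exactly one policy, so nodes in rack0 peer with leaf0 in AS 65005 and nodes in rack1 peer with leaf1 in AS 65008.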

Deploy Pods and a Service in the k8s cluster

root@kind:~# cat cni.yaml

---
apiVersion: apps/v1
kind: DaemonSet
#kind: Deployment
metadata:
  labels:
    app: cni
  name: cni
spec:
  #replicas: 1
  selector:
    matchLabels:
      app: cni
  template:
    metadata:
      labels:
        app: cni
    spec:
      containers:
        - image: harbor.dayuan1997.com/devops/nettool:0.9
          name: nettoolbox
          securityContext:
            privileged: true
---
apiVersion: v1
kind: Service
metadata:
  name: cni
  labels:
    color: red
spec:
  ports:
    - port: 80
      targetPort: 80
      protocol: TCP
  type: LoadBalancer
  selector:
    app: cni

root@kind:~# kubectl apply -f cni.yaml

daemonset.apps/cni created

service/serversvc created

- Check the deployed Pods and Service

root@kind:~# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

cni-5nrdx 1/1 Running 0 18s 10.98.1.39 clab-bgp-worker3 <none> <none>

cni-8cnm5 1/1 Running 0 18s 10.98.0.18 clab-bgp-control-plane <none> <none>

cni-lzwwq 1/1 Running 0 18s 10.98.2.249 clab-bgp-worker2 <none> <none>

cni-rcbdx 1/1 Running 0 18s 10.98.3.166 clab-bgp-worker <none> <none>

root@kind:~# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

cni LoadBalancer 10.96.10.182 <pending> 80:31354/TCP 29s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 19m
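With exportPodCIDR: true, each node advertises its own PodCIDR to its leaf router. The sketch below derives a per-node prefix from the Pod IPs listed above. It assumes every node was allocated a /24 out of 10.98.0.0/16; that mask is an assumption for illustration, since the article does not print the nodes' PodCIDRs at this point.

```python
import ipaddress

POD_SUBNET = ipaddress.ip_network("10.98.0.0/16")  # podSubnet from the kind config
NODE_MASK = 24  # assumed per-node PodCIDR size

# Node -> Pod IP, taken from the "kubectl get pods -o wide" output above.
PODS = {
    "clab-bgp-worker3": "10.98.1.39",
    "clab-bgp-control-plane": "10.98.0.18",
    "clab-bgp-worker2": "10.98.2.249",
    "clab-bgp-worker": "10.98.3.166",
}


def node_pod_cidr(pod_ip: str) -> ipaddress.IPv4Network:
    """Return the assumed /24 PodCIDR that contains the given Pod IP."""
    net = ipaddress.ip_network(f"{pod_ip}/{NODE_MASK}", strict=False)
    if not net.subnet_of(POD_SUBNET):
        raise ValueError(f"{pod_ip} is outside the Pod subnet {POD_SUBNET}")
    return net


if __name__ == "__main__":
    for node, ip in PODS.items():
        print(f"{node}: pod {ip} -> advertised prefix {node_pod_cidr(ip)}")
```

Under that assumption, the leaf routers would learn four distinct /24 prefixes, one per node.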

V. Verifying Cilium BGP Control Plane Features

root@kind:~# kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

clab-bgp-control-plane Ready control-plane,master 145m v1.23.4 10.1.5.10 <none> Ubuntu 21.10 5.11.5-051105-generic containerd://1.5.10

clab-bgp-worker Ready <none> 144m v1.23.4 10.1.5.11 <none> Ubuntu 21.10 5.11.5-051105-generic containerd://1.5.10

clab-bgp-worker2 Ready <none> 144m v1.23.4 10.1.8.10 <none> Ubuntu 21.10 5.11.5-051105-generic containerd://1.5.10

clab-bgp-worker3 Ready <none> 144m v1.23.4 10.1.8.11 <none> Ubuntu 21.10 5.11.5-051105-generic containerd://1.5.10

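From the network diagram and the k8s cluster information: clab-bgp-control-plane and clab-bgp-worker are on one Layer 2 network, while clab-bgp-worker2 and clab-bgp-worker3 are on another.

Access across Layer 2 networks

A Pod on clab-bgp-control-plane accesses a Pod on clab-bgp-worker2

Pod information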

root@kind:~# kubectl exec -it cni-8cnm5 -- bash

## IP information

cni-8cnm5~$ ip a l

6: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9500 qdisc noqueue state UP group default qlen 1000

link/ether 32:ad:f8:13:67:5f brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.98.0.18/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::30ad:f8ff:fe13:675f/64 scope link

valid_lft forever preferred_lft forever

cni-8cnm5~$ ip r s

default via 10.98.0.124 dev eth0 mtu 9500

10.98.0.124 dev eth0 scope link

## Routing information

root@kind:~# kubectl exec -it net -- ip r s

default via 10.0.2.34 dev eth0 mtu 1500

10.0.2.34 dev eth0 scope link

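The Pod's routing table shows that packets follow the default route out through eth0 and arrive at the host-side peer of the Pod's veth pair.

Information for the node hosting the Pod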

root@kind:~# docker exec -it clab-bgp-control-plane bash

## IP information

root@clab-bgp-control-plane:/# ip a l

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: cilium_net@cilium_host: <BROADCAST,MULTICAST,NOARP,UP,LOWER_UP> mtu 9500 qdisc noqueue state UP group default qlen 1000

link/ether fe:16:4a:39:ef:c5 brd ff:ff:ff:ff:ff:ff

inet6 fe80::fc16:4aff:fe39:efc5/64 scope link

valid_lft forever preferred_lft forever

3: cilium_host@cilium_net: <BROADCAST,MULTICAST,NOARP,UP,LOWER_UP> mtu 9500 qdisc noqueue state UP group default qlen 1000

link/ether 16:bf:db:b8:a8:57 brd ff:ff:ff:ff:ff:ff

inet 10.98.0.124/32 scope link cilium_host

valid_lft forever preferred_lft forever

inet6 fe80::14bf:dbff:feb8:a857/64 scope link

valid_lft forever preferred_lft forever

5: lxc_health@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9500 qdisc noqueue state UP group default qlen 1000

link/ether 7a:e4:32:21:70:79 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::78e4:32ff:fe21:7079/64 scope link

valid_lft forever preferred_lft forever

7: lxc191ea5f1c80c@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9500 qdisc noqueue state UP group default qlen 1000

link/ether 0e:05:bf:61:e5:dc brd ff:ff:ff:ff:ff:ff link-netns cni-f879b8ee-048d-56a7-3380-19a2d79db129

inet6 fe80::c05:bfff:fe61:e5dc/64 scope link

valid_lft forever preferred_lft forever

9: eth0@if10: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:12:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.18.0.3/16 brd 172.18.255.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fc00:f853:ccd:e793::3/64 scope global nodad

valid_lft forever preferred_lft forever

inet6 fe80::42:acff:fe12:3/64 scope link

valid_lft forever preferred_lft forever

34: net0@if35: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9500 qdisc noqueue state UP group default

link/ether aa:c1:ab:f9:fe:fb brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.1.5.10/24 scope global net0

valid_lft forever preferred_lft forever

inet6 fe80::a8c1:abff:fef9:fefb/64 scope link

valid_lft forever preferred_lft forever

## Routing information

root@clab-bgp-control-plane:/# ip r s

default via 10.1.5.1 dev net0

10.1.5.0/24 dev net0 proto kernel scope link src 10.1.5.10

10.98.0.0/24 via 10.98.0.124 dev cilium_host src 10.98.0.124

10.98.0.124 dev cilium_host scope link

172.18.0.0/16 dev eth0 proto kernel scope link src 172.18.0.3

Ping test from the Pod

root@kind:~# kubectl exec -it cni-8cnm5 -- ping -c 1 10.98.2.249

PING 10.98.2.249 (10.98.2.249): 56 data bytes

64 bytes from 10.98.2.249: seq=0 ttl=57 time=1.230 ms

--- 10.98.2.249 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 1.230/1.230/1.230 ms

Packet capture on the Pod's eth0 interface

net~$ tcpdump -pne -i eth0

09:34:33.044132 32:ad:f8:13:67:5f > 0e:05:bf:61:e5:dc, ethertype IPv4 (0x0800), length 98: 10.98.0.18 > 10.98.2.249: ICMP echo request, id 49, seq 0, length 64

09:34:33.045041 0e:05:bf:61:e5:dc > 32:ad:f8:13:67:5f, ethertype IPv4 (0x0800), length 98: 10.98.2.249 > 10.98.0.18: ICMP echo reply, id 49, seq 0, length 64

Packet capture on lxc191ea5f1c80c, the host side of the Pod's veth pair on clab-bgp-control-plane

root@clab-bgp-control-plane:/# tcpdump -pne -i lxc191ea5f1c80c

09:36:13.329632 32:ad:f8:13:67:5f > 0e:05:bf:61:e5:dc, ethertype IPv4 (0x0800), length 98: 10.98.0.18 > 10.98.2.249: ICMP echo request, id 60, seq 0, length 64

09:36:13.330266 0e:05:bf:61:e5:dc > 32:ad:f8:13:67:5f, ethertype IPv4 (0x0800), length 98: 10.98.2.249 > 10.98.0.18: ICMP echo reply, id 60, seq 0, length 64

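The capture on the Pod's eth0 matches the capture on lxc191ea5f1c80c, the host side of the Pod's veth pair on clab-bgp-control-plane. The two MAC addresses belong to eth0 (32:ad:f8:13:67:5f) and lxc191ea5f1c80c (0e:05:bf:61:e5:dc).

- Looking at the routing table on clab-bgp-control-plane, the destination matches only the default route (default via 10.1.5.1 dev net0), so the packet is forwarded to clab-bgp-leaf0, which owns the IP 10.1.5.1.

Packet capture on the net0 interface of clab-bgp-control-plane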
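The routing decision described above is a longest-prefix match. The sketch below replays it in Python against the routing table printed earlier for clab-bgp-control-plane; the lookup helper is written for this illustration and is not how the kernel implements its FIB.

```python
import ipaddress

# Routing table of clab-bgp-control-plane, from the "ip r s" output above.
ROUTES = [
    ("0.0.0.0/0", "via 10.1.5.1 dev net0"),
    ("10.1.5.0/24", "dev net0"),
    ("10.98.0.0/24", "via 10.98.0.124 dev cilium_host"),
    ("10.98.0.124/32", "dev cilium_host"),
    ("172.18.0.0/16", "dev eth0"),
]


def lookup(destination: str) -> tuple[str, str]:
    """Return (prefix, next hop) of the most specific route containing the destination."""
    addr = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(prefix), next_hop)
        for prefix, next_hop in ROUTES
        if addr in ipaddress.ip_network(prefix)
    ]
    # The default route always matches, so "matches" is never empty here.
    network, next_hop = max(matches, key=lambda m: m[0].prefixlen)
    return str(network), next_hop


if __name__ == "__main__":
    for dst in ["10.98.2.249", "10.98.0.18", "10.1.5.11"]:
        prefix, next_hop = lookup(dst)
        print(f"{dst}: {prefix} {next_hop}")
```

The remote Pod 10.98.2.249 matches nothing more specific than the default route, which is why the node hands the packet to leaf0 and relies on the BGP-learned routes there to reach the other rack.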

root@clab-bgp-control-plane:/# tcpdump -pne -i net0

09:41:43.232909 aa:c1:ab:f9:fe:fb > aa:c1:ab:83:50:0f, ethertype IPv4 (0x0800), length 98: 10.98.0.18 > 10.98.2.249: ICMP echo request, id 80, seq 0, length 64

09:41:43.233234 aa:c1:ab:83:50:0f > aa:c1:ab:f9:fe:fb, ethertype IPv4 (0x0800), length 98: 10.98.2.249 > 10.98.0.18: ICMP echo reply, id 80, seq 0, length 64

The packets are captured on this interface. In the echo request, the source MAC aa:c1:ab:f9:fe:fb is the MAC of net0, and the destination MAC aa:c1:ab:83:50:0f should be the MAC of the interface that holds 10.1.5.1, which the ARP table confirms:

root@clab-bgp-control-plane:/# arp -n

Address HWtype HWaddress Flags Mask Iface

172.18.0.5 ether 02:42:ac:12:00:05 C eth0

172.18.0.1 ether 02:42:6a:31:74:79 C eth0

10.1.5.1 ether aa:c1:ab:83:50:0f C net0

10.1.5.11 ether aa:c1:ab:93:7a:7a C net0

172.18.0.2 ether 02:42:ac:12:00:02 C eth0

172.18.0.4 ether 02:42:ac:12:00:04 C eth0
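The check above (destination MAC of the frame versus the ARP entry of the gateway) can be sketched in a few lines of Python. This is only an illustration: the helper names `parse_frame` and `resolved_via` are hypothetical, and the data is copied from the tcpdump and `arp -n` output above.

```python
import re

# ARP entries on net0 of clab-bgp-control-plane, copied from the `arp -n` output above.
ARP_TABLE = {
    "10.1.5.1": "aa:c1:ab:83:50:0f",
    "10.1.5.11": "aa:c1:ab:93:7a:7a",
}

# Echo request captured on net0, copied from the tcpdump output above.
REQUEST = (
    "09:41:43.232909 aa:c1:ab:f9:fe:fb > aa:c1:ab:83:50:0f, ethertype IPv4 (0x0800), "
    "length 98: 10.98.0.18 > 10.98.2.249: ICMP echo request, id 80, seq 0, length 64"
)

FRAME_RE = re.compile(
    r"(?P<src_mac>[0-9a-f:]{17}) > (?P<dst_mac>[0-9a-f:]{17}),"
    r".*?: (?P<src_ip>[\d.]+) > (?P<dst_ip>[\d.]+):"
)


def parse_frame(line):
    """Return the MAC and IP addresses of one `tcpdump -pne` line as a dict."""
    match = FRAME_RE.search(line)
    if match is None:
        raise ValueError(f"unrecognized tcpdump line: {line!r}")
    return match.groupdict()


def resolved_via(frame, gateway_ip, arp_table):
    """True if the frame's destination MAC is the gateway's MAC in the ARP table."""
    return arp_table.get(gateway_ip) == frame["dst_mac"]


frame = parse_frame(REQUEST)
print(frame["dst_ip"], "leaves via 10.1.5.1:", resolved_via(frame, "10.1.5.1", ARP_TABLE))
```

The destination IP stays 10.98.2.249 end to end, while the destination MAC is that of the next hop, which is why the frame is addressed to leaf0's eth3.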

- Check the network configuration of the clab-bgp-leaf0 node

root@leaf0:/# ip a l

16: eth0@if17: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:14:14:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.20.20.2/24 brd 172.20.20.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 2001:172:20:20::2/64 scope global nodad

valid_lft forever preferred_lft forever

inet6 fe80::42:acff:fe14:1402/64 scope link

valid_lft forever preferred_lft forever

20: eth2@if21: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9500 qdisc noqueue state UP group default

link/ether aa:c1:ab:69:b5:28 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet 10.1.12.1/24 brd 10.1.12.255 scope global eth2

valid_lft forever preferred_lft forever

inet6 fe80::a8c1:abff:fe69:b528/64 scope link

valid_lft forever preferred_lft forever

25: eth1@if24: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9500 qdisc noqueue state UP group default

link/ether aa:c1:ab:16:3f:66 brd ff:ff:ff:ff:ff:ff link-netnsid 2

inet 10.1.10.1/24 brd 10.1.10.255 scope global eth1

valid_lft forever preferred_lft forever

inet6 fe80::a8c1:abff:fe16:3f66/64 scope link

valid_lft forever preferred_lft forever

29: eth3@if28: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9500 qdisc noqueue state UP group default

link/ether aa:c1:ab:83:50:0f brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.1.5.1/24 brd 10.1.5.255 scope global eth3

valid_lft forever preferred_lft forever

inet6 fe80::a8c1:abff:fe83:500f/64 scope link

valid_lft forever preferred_lft forever

root@leaf0:/# ip r s

default via 172.20.20.1 dev eth0

10.1.5.0/24 dev eth3 proto kernel scope link src 10.1.5.1

10.1.8.0/24 proto bgp metric 20

nexthop via 10.1.10.2 dev eth1 weight 1

nexthop via 10.1.12.2 dev eth2 weight 1

10.1.10.0/24 dev eth1 proto kernel scope link src 10.1.10.1

10.1.12.0/24 dev eth2 proto kernel scope link src 10.1.12.1

10.98.0.0/24 via 10.1.5.10 dev eth3 proto bgp metric 20

10.98.1.0/24 proto bgp metric 20

nexthop via 10.1.10.2 dev eth1 weight 1

nexthop via 10.1.12.2 dev eth2 weight 1

10.98.2.0/24 proto bgp metric 20

nexthop via 10.1.10.2 dev eth1 weight 1

nexthop via 10.1.12.2 dev eth2 weight 1

10.98.3.0/24 via 10.1.5.11 dev eth3 proto bgp metric 20

172.20.20.0/24 dev eth0 proto kernel scope link src 172.20.20.2

The leaf0 routing table shows two next hops for the destination 10.98.2.0/24. This is BGP ECMP (equal-cost multipath): traffic is spread across both uplinks.
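To make the lookup concrete, here is a minimal longest-prefix-match sketch over the leaf0 routes listed above. It uses only Python's standard `ipaddress` module; the `lookup` helper is a hypothetical name, and the kernel's real FIB lookup is of course implemented differently.

```python
import ipaddress

# leaf0 kernel routes, copied from the `ip r s` output above.
# Directly connected routes carry an empty next-hop list.
LEAF0_ROUTES = {
    "0.0.0.0/0": ["172.20.20.1"],
    "10.1.5.0/24": [],
    "10.1.8.0/24": ["10.1.10.2", "10.1.12.2"],
    "10.1.10.0/24": [],
    "10.1.12.0/24": [],
    "10.98.0.0/24": ["10.1.5.10"],
    "10.98.1.0/24": ["10.1.10.2", "10.1.12.2"],
    "10.98.2.0/24": ["10.1.10.2", "10.1.12.2"],
    "10.98.3.0/24": ["10.1.5.11"],
    "172.20.20.0/24": [],
}


def lookup(destination, routes):
    """Return (prefix, next_hops) of the most specific route covering destination."""
    addr = ipaddress.ip_address(destination)
    matching = [
        net for net in (ipaddress.ip_network(p) for p in routes) if addr in net
    ]
    # The default route always matches, so `matching` is never empty here.
    best = max(matching, key=lambda net: net.prefixlen)
    return str(best), routes[str(best)]


print(lookup("10.98.2.249", LEAF0_ROUTES))
print(lookup("10.98.0.18", LEAF0_ROUTES))
```

For the ping target 10.98.2.249 the lookup returns the /24 with both spine next hops, whereas the local Pod 10.98.0.18 resolves to a single next hop on eth3.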

- Capture packets on both leaf0 uplinks, eth1 (10.1.10.1) and eth2 (10.1.12.1), to check whether forwarding matches the ECMP behavior

root@leaf0:/# tcpdump -pne -i eth1

09:59:22.703188 aa:c1:ab:f0:a5:5d > aa:c1:ab:16:3f:66, ethertype IPv4 (0x0800), length 98: 10.98.2.249 > 10.98.0.18: ICMP echo reply, id 87, seq 0, length 64

root@leaf0:/# tcpdump -pne -i eth2

09:59:22.702800 aa:c1:ab:69:b5:28 > aa:c1:ab:db:64:c5, ethertype IPv4 (0x0800), length 98: 10.98.0.18 > 10.98.2.249: ICMP echo request, id 87, seq 0, length 64

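The capture shows the ICMP reply and the ICMP request travelling over different interfaces, eth1 and eth2 respectively, which is consistent with ECMP. Likewise, each of the upstream spine0 and spine1 nodes sees only one of the two ICMP packets.

- Check the BGP information on the leaf0 node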
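The idea behind per-flow ECMP can be sketched as follows. This is not the Linux kernel's actual multipath hash; it is a simplified stand-in (SHA-256 over source IP, destination IP and protocol, with the hypothetical helper `pick_next_hop`) that only illustrates why one flow sticks to one next hop while different flows may take different ones.

```python
import hashlib


def pick_next_hop(src_ip, dst_ip, proto, next_hops):
    """Pick one next hop for a flow by hashing its identifying fields."""
    key = f"{src_ip}|{dst_ip}|{proto}".encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:4], "big") % len(next_hops)
    return next_hops[index]


# Next hops of 10.98.2.0/24 on leaf0, copied from the routing table above.
SPINES = ["10.1.10.2", "10.1.12.2"]

first = pick_next_hop("10.98.0.18", "10.98.2.249", "icmp", SPINES)
second = pick_next_hop("10.98.0.18", "10.98.2.249", "icmp", SPINES)
print(first, second)  # the same flow always maps to the same next hop
```

Note that in the capture above the two directions are decided by different routers: leaf0 chooses the uplink for the request, while the path of the reply is chosen on the remote side, so request and reply can legitimately cross different spines.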

root@leaf0:/# show ip route

Codes: K - kernel route, C - connected, S - static, R - RIP,

O - OSPF, I - IS-IS, B - BGP, E - EIGRP, N - NHRP,

T - Table, v - VNC, V - VNC-Direct, A - Babel, D - SHARP,

F - PBR, f - OpenFabric,

> - selected route, * - FIB route, q - queued route, r - rejected route

K>* 0.0.0.0/0 [0/0] via 172.20.20.1, eth0, 00:48:16

C>* 10.1.5.0/24 is directly connected, eth3, 00:48:04

B>* 10.1.8.0/24 [20/0] via 10.1.10.2, eth1, 00:47:53

* via 10.1.12.2, eth2, 00:47:53

C>* 10.1.10.0/24 is directly connected, eth1, 00:48:01

C>* 10.1.12.0/24 is directly connected, eth2, 00:48:02

B>* 10.98.0.0/24 [200/0] via 10.1.5.10, eth3, 00:44:28

B>* 10.98.1.0/24 [20/0] via 10.1.10.2, eth1, 00:44:30

* via 10.1.12.2, eth2, 00:44:30

B>* 10.98.2.0/24 [20/0] via 10.1.10.2, eth1, 00:44:29

* via 10.1.12.2, eth2, 00:44:29

B>* 10.98.3.0/24 [200/0] via 10.1.5.11, eth3, 00:44:29

C>* 172.20.20.0/24 is directly connected, eth0, 00:48:16

root@leaf0:/# show ip bgp

BGP table version is 7, local router ID is 10.1.5.1, vrf id 0

Default local pref 100, local AS 65005

Status codes: s suppressed, d damped, h history, * valid, > best, = multipath,

i internal, r RIB-failure, S Stale, R Removed

Nexthop codes: @NNN nexthop's vrf id, < announce-nh-self

Origin codes: i - IGP, e - EGP, ? - incomplete

Network Next Hop Metric LocPrf Weight Path

*> 10.1.5.0/24 0.0.0.0 0 32768 i

*= 10.1.8.0/24 10.1.12.2 0 800 65008 i

*> 10.1.10.2 0 500 65008 i

*>i10.98.0.0/24 10.1.5.10 100 0 i

*= 10.98.1.0/24 10.1.12.2 0 800 65008 i

*> 10.1.10.2 0 500 65008 i

*= 10.98.2.0/24 10.1.12.2 0 800 65008 i

*> 10.1.10.2 0 500 65008 i

*>i10.98.3.0/24 10.1.5.11 100 0 i
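In the `show ip route` output above, BGP routes appear with either `[200/0]` or `[20/0]`. The first number is the administrative distance; 20 for eBGP and 200 for iBGP are FRR's defaults, which fits the topology here: the Cilium nodes peer with leaf0 inside AS 65005, while the spines sit in other ASes. A small parsing sketch (the regular expression and the `classify` helper are illustrative, not part of any tool):

```python
import re

ROUTE_RE = re.compile(
    r"^B>\* (?P<prefix>\S+) \[(?P<distance>\d+)/(?P<metric>\d+)\] "
    r"via (?P<next_hop>[\d.]+), (?P<dev>\w+)"
)

# Assumes the default administrative distances (20 for eBGP, 200 for iBGP).
KIND_BY_DISTANCE = {20: "eBGP", 200: "iBGP"}


def classify(line):
    """Return (prefix, next_hop, kind) for a selected BGP route line, else None."""
    match = ROUTE_RE.match(line)
    if match is None:
        return None
    kind = KIND_BY_DISTANCE.get(int(match["distance"]), "unknown")
    return match["prefix"], match["next_hop"], kind


print(classify("B>* 10.98.0.0/24 [200/0] via 10.1.5.10, eth3, 00:44:28"))
print(classify("B>* 10.98.2.0/24 [20/0] via 10.1.10.2, eth1, 00:44:29"))
```

Connected and kernel routes do not match the pattern and are skipped, as are the continuation lines that list additional ECMP next hops.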

- Check the BGP information on the spine0 node

root@spine0:/# show ip route

Codes: K - kernel route, C - connected, S - static, R - RIP,

O - OSPF, I - IS-IS, B - BGP, E - EIGRP, N - NHRP,

T - Table, v - VNC, V - VNC-Direct, A - Babel, D - SHARP,

F - PBR, f - OpenFabric,

> - selected route, * - FIB route, q - queued route, r - rejected route

K>* 0.0.0.0/0 [0/0] via 172.20.20.1, eth0, 00:48:51

B>* 10.1.5.0/24 [20/0] via 10.1.10.1, eth1, 00:48:28

B>* 10.1.8.0/24 [20/0] via 10.1.34.1, eth2, 00:48:30

C>* 10.1.10.0/24 is directly connected, eth1, 00:48:41

C>* 10.1.34.0/24 is directly connected, eth2, 00:48:39

B>* 10.98.0.0/24 [20/0] via 10.1.10.1, eth1, 00:45:03

B>* 10.98.1.0/24 [20/0] via 10.1.34.1, eth2, 00:45:05

B>* 10.98.2.0/24 [20/0] via 10.1.34.1, eth2, 00:45:04

B>* 10.98.3.0/24 [20/0] via 10.1.10.1, eth1, 00:45:04

C>* 172.20.20.0/24 is directly connected, eth0, 00:48:51

root@spine0:/# show ip bgp

BGP table version is 6, local router ID is 10.1.10.2, vrf id 0

Default local pref 100, local AS 500

Status codes: s suppressed, d damped, h history, * valid, > best, = multipath,

i internal, r RIB-failure, S Stale, R Removed

Nexthop codes: @NNN nexthop's vrf id, < announce-nh-self

Origin codes: i - IGP, e - EGP, ? - incomplete

Network Next Hop Metric LocPrf Weight Path

*> 10.1.5.0/24 10.1.10.1 0 0 65005 i

*> 10.1.8.0/24 10.1.34.1 0 0 65008 i

*> 10.98.0.0/24 10.1.10.1 0 65005 i

*> 10.98.1.0/24 10.1.34.1 0 65008 i

*> 10.98.2.0/24 10.1.34.1 0 65008 i

*> 10.98.3.0/24 10.1.10.1 0 65005 i

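6. BGP Control Plane + MetalLB: Advertising Service IPs

Services in the cluster eventually need to be exposed externally; being reachable only from inside the cluster rarely meets production needs. Here MetalLB manages the LoadBalancer IPs, and BGP then advertises the LoadBalancer Services.

Install MetalLB to provide LoadBalancer functionality

- Install MetalLB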

root@kind:~# kubectl apply -f https://gh.api.99988866.xyz/https://raw.githubusercontent.com/metallb/metallb/v0.13.10/config/manifests/metallb-native.yaml

namespace/metallb-system created

customresourcedefinition.apiextensions.k8s.io/addresspools.metallb.io created

customresourcedefinition.apiextensions.k8s.io/bfdprofiles.metallb.io created

customresourcedefinition.apiextensions.k8s.io/bgpadvertisements.metallb.io created

customresourcedefinition.apiextensions.k8s.io/bgppeers.metallb.io created

customresourcedefinition.apiextensions.k8s.io/communities.metallb.io created

customresourcedefinition.apiextensions.k8s.io/ipaddresspools.metallb.io created

customresourcedefinition.apiextensions.k8s.io/l2advertisements.metallb.io created

serviceaccount/controller created

serviceaccount/speaker created

role.rbac.authorization.k8s.io/controller created

role.rbac.authorization.k8s.io/pod-lister created

clusterrole.rbac.authorization.k8s.io/metallb-system:controller created

clusterrole.rbac.authorization.k8s.io/metallb-system:speaker created

rolebinding.rbac.authorization.k8s.io/controller created

rolebinding.rbac.authorization.k8s.io/pod-lister created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:controller created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:speaker created

configmap/metallb-excludel2 created

secret/webhook-server-cert created

service/webhook-service created

deployment.apps/controller created

daemonset.apps/speaker created

validatingwebhookconfiguration.admissionregistration.k8s.io/metallb-webhook-configuration created

- Create a MetalLB layer 2 IPAddressPool

root@kind:~# cat metallb-l2-ip-config.yaml

---

# Address range that MetalLB assigns to LoadBalancer Services

apiVersion: metallb.io/v1beta1

kind: IPAddressPool

metadata:

  name: ippool

  namespace: metallb-system

spec:

  addresses:

  - 172.18.0.200-172.18.0.210

---

# L2Advertisement that announces the IPAddressPool range

apiVersion: metallb.io/v1beta1

kind: L2Advertisement

metadata:

  name: ippool

  namespace: metallb-system

spec:

  ipAddressPools:

  - ippool

root@kind:~# kubectl apply -f metallb-l2-ip-config.yaml

ipaddresspool.metallb.io/ippool unchanged

l2advertisement.metallb.io/ippool created

root@kind:~# kubectl get ipaddresspool -n metallb-system

NAME AUTO ASSIGN AVOID BUGGY IPS ADDRESSES

ippool true false ["172.18.0.200-172.18.0.210"]

root@kind:~# kubectl get l2advertisement -n metallb-system

NAME IPADDRESSPOOLS IPADDRESSPOOL SELECTORS INTERFACES

ippool ["ippool"]
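To see what the pool definition covers, the range `172.18.0.200-172.18.0.210` can be expanded with Python's `ipaddress` module. The `allocate` helper below assumes a simple "first free address" strategy purely for illustration; how MetalLB actually picks an address is not shown in this article.

```python
import ipaddress


def expand_range(spec):
    """Expand a 'start-end' IPv4 range into a list of addresses (inclusive)."""
    start_text, end_text = spec.split("-")
    start = ipaddress.IPv4Address(start_text.strip())
    end = ipaddress.IPv4Address(end_text.strip())
    return [ipaddress.IPv4Address(i) for i in range(int(start), int(end) + 1)]


def allocate(pool, in_use):
    """Return the first address of the pool that is not in use (assumed strategy)."""
    for address in pool:
        if address not in in_use:
            return address
    return None


pool = expand_range("172.18.0.200-172.18.0.210")
print(len(pool), "addresses, first free:", allocate(pool, set()))
```

The pool holds 11 addresses, so at most 11 LoadBalancer Services can receive an EXTERNAL-IP from it at the same time.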

Check the cni Service again

root@kind:~# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

cni LoadBalancer 10.96.10.182 172.18.0.200 80:31354/TCP 78m

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 97m

The cni Service has now been assigned EXTERNAL-IP 172.18.0.200. Next, check whether BGP advertises this IP and whether the other devices have a route to it.

Check the leaf0 routing table

root@leaf0:/# show ip route

Codes: K - kernel route, C - connected, S - static, R - RIP,

O - OSPF, I - IS-IS, B - BGP, E - EIGRP, N - NHRP,

T - Table, v - VNC, V - VNC-Direct, A - Babel, D - SHARP,

F - PBR, f - OpenFabric,

> - selected route, * - FIB route, q - queued route, r - rejected route

K>* 0.0.0.0/0 [0/0] via 172.20.20.1, eth0, 01:24:40

C>* 10.1.5.0/24 is directly connected, eth3, 01:24:28

B>* 10.1.8.0/24 [20/0] via 10.1.10.2, eth1, 01:24:17

* via 10.1.12.2, eth2, 01:24:17

C>* 10.1.10.0/24 is directly connected, eth1, 01:24:25

C>* 10.1.12.0/24 is directly connected, eth2, 01:24:26

B>* 10.98.0.0/24 [200/0] via 10.1.5.10, eth3, 01:20:52

B>* 10.98.1.0/24 [20/0] via 10.1.10.2, eth1, 01:20:54

* via 10.1.12.2, eth2, 01:20:54

B>* 10.98.2.0/24 [20/0] via 10.1.10.2, eth1, 01:20:53

* via 10.1.12.2, eth2, 01:20:53

B>* 10.98.3.0/24 [200/0] via 10.1.5.11, eth3, 01:20:53

C>* 172.20.20.0/24 is directly connected, eth0, 01:24:40

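The routing table shows that this IP has not been advertised yet. The Kubernetes nodes can reach it at this point, but leaf0, leaf1, spine0 and spine1 cannot, because they have no BGP route to 172.18.0.200.

Access test from the leaf0 node

# the request times out

root@leaf0:/# curl 172.18.0.200

Modify the CiliumBGPPeeringPolicy rules to advertise the route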

root@kind:~# cat bgp_peering_policy.yaml

---

apiVersion: "cilium.io/v2alpha1"

kind: CiliumBGPPeeringPolicy

metadata:

  name: rack0

spec:

  nodeSelector:

    # Per the kind config, selects the clab-bgp-control-plane and clab-bgp-worker nodes

    matchLabels:

      rack: rack0

  virtualRouters:

  - localASN: 65005

    serviceSelector:

      # Newly added setting

      matchExpressions:

      - {key: env, operator: NotIn, values: ["test"]}

    exportPodCIDR: true

    neighbors:

    - peerAddress: "10.1.5.1/24"

      peerASN: 65005

---

apiVersion: "cilium.io/v2alpha1"

kind: CiliumBGPPeeringPolicy

metadata:

  name: rack1

spec:

  nodeSelector:

    # Per the kind config, selects the clab-bgp-worker2 and clab-bgp-worker3 nodes

    matchLabels:

      rack: rack1

  virtualRouters:

  - localASN: 65008

    serviceSelector:

      # Newly added setting

      matchExpressions:

      - {key: env, operator: NotIn, values: ["test"]}

    exportPodCIDR: true

    neighbors:

    - peerAddress: "10.1.8.1/24"

      peerASN: 65008

The added serviceSelector.matchExpressions=[xxx] setting means that the EXTERNAL-IP of every Service is advertised over BGP, except for Services labeled env: test.
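The selector semantics can be sketched as below. This is a simplified re-implementation of Kubernetes label-selector matching for illustration only (the `matches` helper is hypothetical); the point to note is that `NotIn` also matches Services that do not carry the key at all.

```python
def matches(labels, expressions):
    """Evaluate Kubernetes-style matchExpressions against a label dict."""
    for expr in expressions:
        key = expr["key"]
        operator = expr["operator"]
        values = expr.get("values", [])
        if operator == "In":
            ok = key in labels and labels[key] in values
        elif operator == "NotIn":
            # A missing key also satisfies NotIn.
            ok = key not in labels or labels[key] not in values
        elif operator == "Exists":
            ok = key in labels
        elif operator == "DoesNotExist":
            ok = key not in labels
        else:
            raise ValueError(f"unsupported operator: {operator}")
        if not ok:
            return False
    return True


SELECTOR = [{"key": "env", "operator": "NotIn", "values": ["test"]}]

for labels in ({}, {"env": "prod"}, {"env": "test"}):
    print(labels, "advertised:", matches(labels, SELECTOR))
```

A Service without any env label is therefore advertised, which is why this selector is a common way to say "advertise everything except test Services".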

root@kind:~# kubectl delete ciliumbgppeeringpolicies rack0 rack1

ciliumbgppeeringpolicy.cilium.io "rack0" deleted

ciliumbgppeeringpolicy.cilium.io "rack1" deleted

root@kind:~# kubectl apply -f bgp_peering_policy.yaml

ciliumbgppeeringpolicy.cilium.io/rack0 created

ciliumbgppeeringpolicy.cilium.io/rack1 created

Check the leaf0 and spine0 routing tables again

root@vyos:/# show ip route

Codes: K - kernel route, C - connected, S - static, R - RIP,

O - OSPF, I - IS-IS, B - BGP, E - EIGRP, N - NHRP,

T - Table, v - VNC, V - VNC-Direct, A - Babel, D - SHARP,

F - PBR, f - OpenFabric,

> - selected route, * - FIB route, q - queued route, r - rejected route

K>* 0.0.0.0/0 [0/0] via 172.20.20.1, eth0, 01:32:30

C>* 10.1.5.0/24 is directly connected, eth3, 01:32:18

B>* 10.1.8.0/24 [20/0] via 10.1.10.2, eth1, 01:32:07

* via 10.1.12.2, eth2, 01:32:07

C>* 10.1.10.0/24 is directly connected, eth1, 01:32:15

C>* 10.1.12.0/24 is directly connected, eth2, 01:32:16

B>* 10.98.0.0/24 [200/0] via 10.1.5.10, eth3, 00:00:00

B>* 10.98.1.0/24 [20/0] via 10.1.10.2, eth1, 00:00:01

* via 10.1.12.2, eth2, 00:00:01

B>* 10.98.2.0/24 [20/0] via 10.1.10.2, eth1, 00:00:03

* via 10.1.12.2, eth2, 00:00:03

B>* 10.98.3.0/24 [200/0] via 10.1.5.11, eth3, 00:00:00

B>* 172.18.0.200/32 [200/0] via 10.1.5.10, eth3, 00:00:00

* via 10.1.5.11, eth3, 00:00:00

C>* 172.20.20.0/24 is directly connected, eth0, 01:32:30

root@vyos:/# show ip bgp

BGP table version is 28, local router ID is 10.1.5.1, vrf id 0

Default local pref 100, local AS 65005

Status codes: s suppressed, d damped, h history, * valid, > best, = multipath,

i internal, r RIB-failure, S Stale, R Removed

Nexthop codes: @NNN nexthop's vrf id, < announce-nh-self

Origin codes: i - IGP, e - EGP, ? - incomplete

Network Next Hop Metric LocPrf Weight Path

*> 10.1.5.0/24 0.0.0.0 0 32768 i

*= 10.1.8.0/24 10.1.12.2 0 800 65008 i

*> 10.1.10.2 0 500 65008 i

*>i10.98.0.0/24 10.1.5.10 100 0 i

*= 10.98.1.0/24 10.1.12.2 0 800 65008 i

*> 10.1.10.2 0 500 65008 i

*= 10.98.2.0/24 10.1.12.2 0 800 65008 i

*> 10.1.10.2 0 500 65008 i

*>i10.98.3.0/24 10.1.5.11 100 0 i

*>i172.18.0.200/32 10.1.5.10 100 0 i

*=i 10.1.5.11 100 0 i

* 10.1.12.2 0 800 65008 i

* 10.1.10.2 0 500 65008 i

root@vyos:/# show ip route

Codes: K - kernel route, C - connected, S - static, R - RIP,

O - OSPF, I - IS-IS, B - BGP, E - EIGRP, N - NHRP,

T - Table, v - VNC, V - VNC-Direct, A - Babel, D - SHARP,

F - PBR, f - OpenFabric,

> - selected route, * - FIB route, q - queued route, r - rejected route

K>* 0.0.0.0/0 [0/0] via 172.20.20.1, eth0, 01:32:50

B>* 10.1.5.0/24 [20/0] via 10.1.10.1, eth1, 01:32:27

B>* 10.1.8.0/24 [20/0] via 10.1.34.1, eth2, 01:32:29

C>* 10.1.10.0/24 is directly connected, eth1, 01:32:40

C>* 10.1.34.0/24 is directly connected, eth2, 01:32:38

B>* 10.98.0.0/24 [20/0] via 10.1.10.1, eth1, 00:00:20

B>* 10.98.1.0/24 [20/0] via 10.1.34.1, eth2, 00:00:21

B>* 10.98.2.0/24 [20/0] via 10.1.34.1, eth2, 00:00:23

B>* 10.98.3.0/24 [20/0] via 10.1.10.1, eth1, 00:00:20

B>* 172.18.0.200/32 [20/0] via 10.1.34.1, eth2, 00:00:23

C>* 172.20.20.0/24 is directly connected, eth0, 01:32:50

root@vyos:/# show ip bgp

BGP table version is 25, local router ID is 10.1.10.2, vrf id 0

Default local pref 100, local AS 500

Status codes: s suppressed, d damped, h history, * valid, > best, = multipath,

i internal, r RIB-failure, S Stale, R Removed

Nexthop codes: @NNN nexthop's vrf id, < announce-nh-self

Origin codes: i - IGP, e - EGP, ? - incomplete

Network Next Hop Metric LocPrf Weight Path

*> 10.1.5.0/24 10.1.10.1 0 0 65005 i

*> 10.1.8.0/24 10.1.34.1 0 0 65008 i

*> 10.98.0.0/24 10.1.10.1 0 65005 i

*> 10.98.1.0/24 10.1.34.1 0 65008 i

*> 10.98.2.0/24 10.1.34.1 0 65008 i

*> 10.98.3.0/24 10.1.10.1 0 65005 i

* 172.18.0.200/32 10.1.10.1 0 65005 i

*> 10.1.34.1 0 65008 i

Both leaf0 and spine0 now have a route to 172.18.0.200, and so do the other two nodes.

Test from the spine0 node

# the request succeeds
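On leaf0 the prefix 172.18.0.200/32 is learned four times, yet only the two iBGP paths are installed. A heavily simplified sketch of why: among otherwise equal candidates, BGP prefers the shortest AS path. The real decision process has more steps (weight, local preference, origin, MED and so on); the code below assumes those tie, and the `best_paths` helper is a hypothetical name.

```python
# Paths for 172.18.0.200/32 on leaf0, copied from the `show ip bgp` output above.
# An empty AS path means the route was learned over iBGP inside AS 65005.
PATHS = [
    {"next_hop": "10.1.5.10", "as_path": []},
    {"next_hop": "10.1.5.11", "as_path": []},
    {"next_hop": "10.1.12.2", "as_path": [800, 65008]},
    {"next_hop": "10.1.10.2", "as_path": [500, 65008]},
]


def best_paths(paths):
    """Return the next hops whose AS path is shortest (ties become multipath)."""
    shortest = min(len(path["as_path"]) for path in paths)
    return [path["next_hop"] for path in paths if len(path["as_path"]) == shortest]


print(best_paths(PATHS))
```

The two surviving next hops are the rack0 Kubernetes nodes, matching the `*>i` and `*=i` entries in the BGP table.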

root@spine0:/# curl 172.18.0.200

PodName: cni-8cnm5 | PodIP: eth0 10.98.0.18/32

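7. BGP Control Plane + LB IPAM: Advertising Service IPs

Cilium itself can also allocate and manage Service EXTERNAL-IP addresses. Besides the MetalLB approach, its LB IPAM feature can be used to advertise Services.

Clean up the MetalLB environment

root@kind:~# kubectl delete -f metallb-l2-ip-config.yaml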

ipaddresspool.metallb.io "ippool" deleted

l2advertisement.metallb.io "ippool" deleted

root@kind:~# kubectl delete -f https://gh.api.99988866.xyz/https://raw.githubusercontent.com/metallb/metallb/v0.13.10/config/manifests/metallb-native.yaml

namespace "metallb-system" deleted

customresourcedefinition.apiextensions.k8s.io "addresspools.metallb.io" deleted

customresourcedefinition.apiextensions.k8s.io "bfdprofiles.metallb.io" deleted

customresourcedefinition.apiextensions.k8s.io "bgpadvertisements.metallb.io" deleted

customresourcedefinition.apiextensions.k8s.io "bgppeers.metallb.io" deleted

customresourcedefinition.apiextensions.k8s.io "communities.metallb.io" deleted

customresourcedefinition.apiextensions.k8s.io "ipaddresspools.metallb.io" deleted

customresourcedefinition.apiextensions.k8s.io "l2advertisements.metallb.io" deleted

serviceaccount "controller" deleted

serviceaccount "speaker" deleted

role.rbac.authorization.k8s.io "controller" deleted

role.rbac.authorization.k8s.io "pod-lister" deleted

clusterrole.rbac.authorization.k8s.io "metallb-system:controller" deleted

clusterrole.rbac.authorization.k8s.io "metallb-system:speaker" deleted

rolebinding.rbac.authorization.k8s.io "controller" deleted

rolebinding.rbac.authorization.k8s.io "pod-lister" deleted

clusterrolebinding.rbac.authorization.k8s.io "metallb-system:controller" deleted

clusterrolebinding.rbac.authorization.k8s.io "metallb-system:speaker" deleted

configmap "metallb-excludel2" deleted

secret "webhook-server-cert" deleted

service "webhook-service" deleted

deployment.apps "controller" deleted

daemonset.apps "speaker" deleted

validatingwebhookconfiguration.admissionregistration.k8s.io "metallb-webhook-configuration" deleted

Redeploy the Pods and the Service

root@kind:~# kubectl delete -f cni.yaml

daemonset.apps "cni" deleted

service "cni" deleted

root@kind:~# kubectl apply -f cni.yaml

daemonset.apps/cni created

service/cni created

root@kind:~# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

cni LoadBalancer 10.96.154.8 <pending> 80:31337/TCP 2s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 117m

Create CiliumLoadBalancerIPPool resources to define the IP address pools

root@kind:~# cat lb-ipam.yaml

---

apiVersion: "cilium.io/v2alpha1"

kind: CiliumLoadBalancerIPPool

metadata:

  name: "blue-pool"

spec:

  cidrs:

  - cidr: "20.0.10.0/24"

  serviceSelector:

    matchExpressions:

    - {key: color, operator: In, values: [blue, cyan]}

---

apiVersion: "cilium.io/v2alpha1"

kind: CiliumLoadBalancerIPPool

metadata:

  name: "red-pool"

spec:

  cidrs:

  - cidr: "30.0.10.0/24"

  serviceSelector:

    # This pool only applies to Services labeled `color: red`

    matchLabels:

      color: red

root@kind:~# kubectl apply -f lb-ipam.yaml

ciliumloadbalancerippool.cilium.io/blue-pool created

ciliumloadbalancerippool.cilium.io/red-pool created

root@kind:~# kubectl get CiliumLoadBalancerIPPool

NAME DISABLED CONFLICTING IPS AVAILABLE AGE

blue-pool false False 254 3m17s

red-pool false False 253 3m17s

root@kind:~# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

cni LoadBalancer 10.96.154.8 30.0.10.203 80:31337/TCP 5m13s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 122m

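The cni Service has been assigned EXTERNAL-IP 30.0.10.203

Create the CiliumBGPPeeringPolicy

The CiliumBGPPeeringPolicy resources were already created in the previous steps, so they can be reused as-is without creating them again.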
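The pool selection can be sketched as follows. The two pools mirror lb-ipam.yaml; that the cni Service carries the label `color: red` is an assumption inferred from the address it received (cni.yaml is not shown in this section), and `pools_for` is a hypothetical helper, not Cilium code.

```python
import ipaddress

# Pools from lb-ipam.yaml, with their selectors expressed as plain functions.
POOLS = [
    {
        "name": "blue-pool",
        "cidr": "20.0.10.0/24",
        "selects": lambda labels: labels.get("color") in ("blue", "cyan"),
    },
    {
        "name": "red-pool",
        "cidr": "30.0.10.0/24",
        "selects": lambda labels: labels.get("color") == "red",
    },
]


def pools_for(labels):
    """Return the names of all pools whose selector matches the Service labels."""
    return [pool["name"] for pool in POOLS if pool["selects"](labels)]


def pool_of(ip):
    """Return the name of the pool whose CIDR contains ip, or None."""
    address = ipaddress.ip_address(ip)
    for pool in POOLS:
        if address in ipaddress.ip_network(pool["cidr"]):
            return pool["name"]
    return None


# Assumed label of the cni Service; not confirmed by the text above.
print(pools_for({"color": "red"}), pool_of("30.0.10.203"))
```

A Service whose labels match neither selector gets no pool at all in this sketch, which would correspond to an EXTERNAL-IP that stays in the pending state.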

root@kind:~# cat bgp_peering_policy.yaml

---

apiVersion: "cilium.io/v2alpha1"

kind: CiliumBGPPeeringPolicy

metadata:

  name: rack0

spec:

  nodeSelector:

    # Per the kind config, selects the clab-bgp-control-plane and clab-bgp-worker nodes

    matchLabels:

      rack: rack0

  virtualRouters:

  - localASN: 65005

    serviceSelector:

      # Newly added setting

      matchExpressions:

      - {key: env, operator: NotIn, values: ["test"]}

    exportPodCIDR: true

    neighbors:

    - peerAddress: "10.1.5.1/24"

      peerASN: 65005

---

apiVersion: "cilium.io/v2alpha1"

kind: CiliumBGPPeeringPolicy

metadata:

  name: rack1

spec:

  nodeSelector:

    # Per the kind config, selects the clab-bgp-worker2 and clab-bgp-worker3 nodes

    matchLabels:

      rack: rack1

  virtualRouters:

  - localASN: 65008

    serviceSelector:

      # Newly added setting

      matchExpressions:

      - {key: env, operator: NotIn, values: ["test"]}

    exportPodCIDR: true

    neighbors:

    - peerAddress: "10.1.8.1/24"

      peerASN: 65008

Check the leaf0 and spine0 routing tables

root@vyos:/# show ip route

Codes: K - kernel route, C - connected, S - static, R - RIP,

O - OSPF, I - IS-IS, B - BGP, E - EIGRP, N - NHRP,

T - Table, v - VNC, V - VNC-Direct, A - Babel, D - SHARP,

F - PBR, f - OpenFabric,

> - selected route, * - FIB route, q - queued route, r - rejected route

K>* 0.0.0.0/0 [0/0] via 172.20.20.1, eth0, 01:52:27

C>* 10.1.5.0/24 is directly connected, eth3, 01:52:15

B>* 10.1.8.0/24 [20/0] via 10.1.10.2, eth1, 01:52:04

* via 10.1.12.2, eth2, 01:52:04

C>* 10.1.10.0/24 is directly connected, eth1, 01:52:12

C>* 10.1.12.0/24 is directly connected, eth2, 01:52:13

B>* 10.98.0.0/24 [200/0] via 10.1.5.10, eth3, 00:00:22

B>* 10.98.1.0/24 [20/0] via 10.1.10.2, eth1, 00:00:25

* via 10.1.12.2, eth2, 00:00:25

B>* 10.98.2.0/24 [20/0] via 10.1.10.2, eth1, 00:00:23

* via 10.1.12.2, eth2, 00:00:23

B>* 10.98.3.0/24 [200/0] via 10.1.5.11, eth3, 00:00:24

B>* 30.0.10.203/32 [200/0] via 10.1.5.10, eth3, 00:00:22

* via 10.1.5.11, eth3, 00:00:22

C>* 172.20.20.0/24 is directly connected, eth0, 01:52:27

root@vyos:/# show ip route

Codes: K - kernel route, C - connected, S - static, R - RIP,

O - OSPF, I - IS-IS, B - BGP, E - EIGRP, N - NHRP,

T - Table, v - VNC, V - VNC-Direct, A - Babel, D - SHARP,

F - PBR, f - OpenFabric,

> - selected route, * - FIB route, q - queued route, r - rejected route

K>* 0.0.0.0/0 [0/0] via 172.20.20.1, eth0, 01:52:41

B>* 10.1.5.0/24 [20/0] via 10.1.10.1, eth1, 01:52:18

B>* 10.1.8.0/24 [20/0] via 10.1.34.1, eth2, 01:52:20

C>* 10.1.10.0/24 is directly connected, eth1, 01:52:31

C>* 10.1.34.0/24 is directly connected, eth2, 01:52:29

B>* 10.98.0.0/24 [20/0] via 10.1.10.1, eth1, 00:00:36

B>* 10.98.1.0/24 [20/0] via 10.1.34.1, eth2, 00:00:39

B>* 10.98.2.0/24 [20/0] via 10.1.34.1, eth2, 00:00:37

B>* 10.98.3.0/24 [20/0] via 10.1.10.1, eth1, 00:00:38

B>* 30.0.10.203/32 [20/0] via 10.1.34.1, eth2, 00:00:39

C>* 172.20.20.0/24 is directly connected, eth0, 01:52:41

Both leaf0 and spine0 have a route to 30.0.10.203, and so do the other two nodes.

Test from the spine0 node

# access from the spine0 node succeeds

root@spine0:/# curl 30.0.10.203

PodName: cni-dzn57 | PodIP: eth0 10.98.0.104/32

# access from the clab-bgp-control-plane node succeeds

root@clab-bgp-control-plane:/# curl 30.0.10.203

PodName: cni-67gqw | PodIP: eth0 10.98.1.43/32

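8. BGP Is Not a Silver Bullet

- Underlay plus layer 3 routing is very popular in traditional data centers because it performs well. In public cloud VPCs, however, its use is restricted: not every public cloud provider allows it, and each provider defines its network protections differently. Calico's BGP mode can work on AWS but is not allowed on Azure, whose VPC does not pass traffic from IPs outside its managed range.

- Even where a BGP underlay can be used, it cannot span availability zones (AZs). In public clouds, crossing AZs generally means crossing subnets, and crossing subnets means crossing a router. The VPC vRouter generally does not support BGP, so a BGP underlay is usable only within a single AZ.

9. VyOS Gateway Configuration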

spine0

set interfaces ethernet eth1 address '10.1.10.2/24'

set interfaces ethernet eth2 address '10.1.34.2/24'

set interfaces loopback lo

set protocols bgp 500 neighbor 10.1.10.1 remote-as '65005'

set protocols bgp 500 neighbor 10.1.34.1 remote-as '65008'

set protocols bgp 500 parameters router-id '10.1.10.2'

set system config-management commit-revisions '100'

set system console device ttyS0 speed '9600'

set system host-name 'spine0'

set system login user vyos authentication encrypted-password '$6$QxPS.uk6mfo$9QBSo8u1FkH16gMyAVhus6fU3LOzvLR9Z9.82m3tiHFAxTtIkhaZSWssSgzt4v4dGAL8rhVQxTg0oAG9/q11h/'

set system login user vyos authentication plaintext-password ''

set system login user vyos level 'admin'

set system ntp server 0.pool.ntp.org

set system ntp server 1.pool.ntp.org

set system ntp server 2.pool.ntp.org

set system syslog global facility all level 'info'

set system syslog global facility protocols level 'debug'

spine1

set interfaces ethernet eth1 address '10.1.12.2/24'

set interfaces ethernet eth2 address '10.1.11.2/24'

set interfaces loopback lo

set protocols bgp 800 neighbor 10.1.11.1 remote-as '65008'

set protocols bgp 800 neighbor 10.1.12.1 remote-as '65005'

set protocols bgp 800 parameters router-id '10.1.12.2'

set system config-management commit-revisions '100'

set system console device ttyS0 speed '9600'

set system host-name 'spine1'

set system login user vyos authentication encrypted-password '$6$QxPS.uk6mfo$9QBSo8u1FkH16gMyAVhus6fU3LOzvLR9Z9.82m3tiHFAxTtIkhaZSWssSgzt4v4dGAL8rhVQxTg0oAG9/q11h/'

set system login user vyos authentication plaintext-password ''

set system login user vyos level 'admin'

set system ntp server 0.pool.ntp.org

set system ntp server 1.pool.ntp.org

set system ntp server 2.pool.ntp.org

set system syslog global facility all level 'info'

set system syslog global facility protocols level 'debug'

leaf0

set interfaces ethernet eth1 address '10.1.10.1/24'

set interfaces ethernet eth2 address '10.1.12.1/24'

set interfaces ethernet eth3 address '10.1.5.1/24'

set interfaces loopback lo

set nat source rule 100 outbound-interface 'eth0'

set nat source rule 100 source address '10.1.0.0/16'

set nat source rule 100 translation address 'masquerade'

set protocols bgp 65005 address-family ipv4-unicast network 10.1.5.0/24

set protocols bgp 65005 neighbor 10.1.5.10 address-family ipv4-unicast route-reflector-client

set protocols bgp 65005 neighbor 10.1.5.10 remote-as '65005'

set protocols bgp 65005 neighbor 10.1.5.11 address-family ipv4-unicast route-reflector-client

set protocols bgp 65005 neighbor 10.1.5.11 remote-as '65005'

set protocols bgp 65005 neighbor 10.1.10.2 remote-as '500'

set protocols bgp 65005 neighbor 10.1.12.2 remote-as '800'

set protocols bgp 65005 parameters bestpath as-path multipath-relax

set protocols bgp 65005 parameters router-id '10.1.5.1'

set system config-management commit-revisions '100'

set system console device ttyS0 speed '9600'

set system host-name 'leaf0'

set system login user vyos authentication encrypted-password '$6$QxPS.uk6mfo$9QBSo8u1FkH16gMyAVhus6fU3LOzvLR9Z9.82m3tiHFAxTtIkhaZSWssSgzt4v4dGAL8rhVQxTg0oAG9/q11h/'

set system login user vyos authentication plaintext-password ''

set system login user vyos level 'admin'

set system ntp server 0.pool.ntp.org

set system ntp server 1.pool.ntp.org

set system ntp server 2.pool.ntp.org

set system syslog global facility all level 'info'

set system syslog global facility protocols level 'debug'

leaf1

set interfaces ethernet eth1 address '10.1.34.1/24'

set interfaces ethernet eth2 address '10.1.11.1/24'

set interfaces ethernet eth3 address '10.1.8.1/24'

set interfaces loopback lo

set nat source rule 100 outbound-interface 'eth0'

set nat source rule 100 source address '10.1.0.0/16'

set nat source rule 100 translation address 'masquerade'

set protocols bgp 65008 address-family ipv4-unicast network 10.1.8.0/24

set protocols bgp 65008 neighbor 10.1.8.10 address-family ipv4-unicast route-reflector-client

set protocols bgp 65008 neighbor 10.1.8.10 remote-as '65008'

set protocols bgp 65008 neighbor 10.1.8.11 address-family ipv4-unicast route-reflector-client

set protocols bgp 65008 neighbor 10.1.8.11 remote-as '65008'

set protocols bgp 65008 neighbor 10.1.11.2 remote-as '800'

set protocols bgp 65008 neighbor 10.1.34.2 remote-as '500'

set protocols bgp 65008 parameters bestpath as-path multipath-relax

set protocols bgp 65008 parameters router-id '10.1.8.1'

set system config-management commit-revisions '100'

set system console device ttyS0 speed '9600'

set system host-name 'leaf1'

set system login user vyos authentication encrypted-password '$6$QxPS.uk6mfo$9QBSo8u1FkH16gMyAVhus6fU3LOzvLR9Z9.82m3tiHFAxTtIkhaZSWssSgzt4v4dGAL8rhVQxTg0oAG9/q11h/'

set system login user vyos authentication plaintext-password ''

set system login user vyos level 'admin'

set system ntp server 0.pool.ntp.org

set system ntp server 1.pool.ntp.org

set system ntp server 2.pool.ntp.org

set system syslog global facility all level 'info'

set system syslog global facility protocols level 'debug'
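As a sanity check on the four configurations above, the following sketch verifies that every BGP session between VyOS routers is configured on both ends with matching AS numbers. The addresses, ASNs and neighbor statements are transcribed from the `set` commands above; the data layout and the `check_peerings` helper are hypothetical and only meant as an illustration.

```python
ROUTERS = {
    "spine0": {"asn": 500, "addresses": ["10.1.10.2", "10.1.34.2"],
               "neighbors": {"10.1.10.1": 65005, "10.1.34.1": 65008}},
    "spine1": {"asn": 800, "addresses": ["10.1.12.2", "10.1.11.2"],
               "neighbors": {"10.1.11.1": 65008, "10.1.12.1": 65005}},
    "leaf0": {"asn": 65005, "addresses": ["10.1.10.1", "10.1.12.1", "10.1.5.1"],
              "neighbors": {"10.1.5.10": 65005, "10.1.5.11": 65005,
                            "10.1.10.2": 500, "10.1.12.2": 800}},
    "leaf1": {"asn": 65008, "addresses": ["10.1.34.1", "10.1.11.1", "10.1.8.1"],
              "neighbors": {"10.1.8.10": 65008, "10.1.8.11": 65008,
                            "10.1.11.2": 800, "10.1.34.2": 500}},
}


def check_peerings(routers):
    """Return (problems, external_peers) for the configured BGP sessions."""
    owner = {addr: name for name, r in routers.items() for addr in r["addresses"]}
    problems, external = [], []
    for name, router in routers.items():
        for peer_ip, remote_as in router["neighbors"].items():
            peer = owner.get(peer_ip)
            if peer is None:
                # Not a VyOS router: here these are the Kubernetes nodes running Cilium.
                external.append(peer_ip)
                continue
            if routers[peer]["asn"] != remote_as:
                problems.append(f"{name}: wrong remote-as for {peer_ip}")
            # The peer must point back at one of our addresses with our ASN.
            if not any(routers[peer]["neighbors"].get(addr) == router["asn"]
                       for addr in router["addresses"]):
                problems.append(f"{peer}: no neighbor statement back to {name}")
    return problems, sorted(external)


problems, external = check_peerings(ROUTERS)
print("problems:", problems)
print("peers outside the VyOS fabric:", external)
```

The peers reported as external are the node addresses that the leaves treat as route-reflector clients in their own AS.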
