Cilium VxLAN with eBPF Mode


Before reading this document you should be familiar with VxLAN; see the document Linux Virtual Networking: VXLAN.

1. Environment

| Host | IP |
|---|---|
| ubuntu | 172.16.94.141 |

| Software | Version |
|---|---|
| docker | 26.1.4 |
| helm | v3.15.0-rc.2 |
| kind | 0.18.0 |
| kubernetes | 1.23.4 |
| ubuntu os | Ubuntu 20.04.6 LTS |
| kernel | 5.11.5 (see the kernel upgrade guide) |

2. Installing the services

kind configuration (install script)

```bash
root@kind:~# cat install.sh
#!/bin/bash
date
set -v

# 1.prep noCNI env
cat <<EOF | kind create cluster --name=cilium-kubeproxy-replacement-ebpf-vxlan --image=kindest/node:v1.23.4 --config=-
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
networking:
  # kind installs its default CNI (kindnet) unless disabled; we install the CNI (Cilium) ourselves
  disableDefaultCNI: true
  # do not install kube-proxy: Cilium replaces kube-proxy functionality
  kubeProxyMode: "none"
nodes:
  - role: control-plane
  - role: worker
  - role: worker
containerdConfigPatches:
- |-
  [plugins."io.containerd.grpc.v1.cri".registry.mirrors."harbor.evescn.com"]
    endpoint = ["https://harbor.evescn.com"]
EOF

# 2.get controller_node_ip
controller_node_ip=`kubectl get node -o wide --no-headers | grep -E "control-plane|bpf1" | awk -F " " '{print $6}'`

# 3.install cni
helm repo add cilium https://helm.cilium.io > /dev/null 2>&1
helm repo update > /dev/null 2>&1

# Direct Routing Options(--set kubeProxyReplacement=strict --set tunnel=disabled --set autoDirectNodeRoutes=true --set ipv4NativeRoutingCIDR="10.0.0.0/8")
# Host Routing[EBPF](--set bpf.masquerade=true)
helm install cilium cilium/cilium \
  --set k8sServiceHost=$controller_node_ip \
  --set k8sServicePort=6443 \
  --version 1.13.0-rc5 \
  --namespace kube-system \
  --set debug.enabled=true \
  --set debug.verbose=datapath \
  --set monitorAggregation=none \
  --set ipam.mode=cluster-pool \
  --set cluster.name=cilium-kubeproxy-replacement-ebpf-vxlan \
  --set kubeProxyReplacement=strict \
  --set bpf.masquerade=true

# 4.install necessary tools
for i in $(docker ps -a --format "table {{.Names}}" | grep cilium)
do
    echo $i
    docker cp /usr/bin/ping $i:/usr/bin/ping
    docker exec -it $i bash -c "sed -i -e 's/jp.archive.ubuntu.com\|archive.ubuntu.com\|security.ubuntu.com/old-releases.ubuntu.com/g' /etc/apt/sources.list"
    docker exec -it $i bash -c "apt-get -y update >/dev/null && apt-get -y install net-tools tcpdump lrzsz bridge-utils >/dev/null 2>&1"
done
```

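`--set` parameters explained

- `--set kubeProxyReplacement=strict`
  - Meaning: enables the kube-proxy replacement and runs it in strict mode.
  - Purpose: Cilium fully replaces kube-proxy for Service load balancing, providing more efficient traffic forwarding.
- `--set bpf.masquerade=true`
  - Meaning: performs IP masquerading (SNAT) in eBPF instead of iptables.
  - Purpose: network address translation is done in the eBPF datapath, which is more efficient and flexible.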

- Install the k8s cluster and Cilium

root@kind:~# ./install.sh

Creating cluster "cilium-kubeproxy-replacement-ebpf-vxlan" ...

✓ Ensuring node image (kindest/node:v1.23.4) 🖼

✓ Preparing nodes 📦 📦 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing StorageClass 💾

✓ Joining worker nodes 🚜

Set kubectl context to "kind-cilium-kubeproxy-replacement-ebpf-vxlan"

You can now use your cluster with:

kubectl cluster-info --context kind-cilium-kubeproxy-replacement-ebpf-vxlan

Not sure what to do next? 😅 Check out https://kind.sigs.k8s.io/docs/user/quick-start/

- Check the installed services

root@kind:~# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system cilium-5zqzb 1/1 Running 0 9m24s

kube-system cilium-mlmk5 1/1 Running 0 9m24s

kube-system cilium-operator-dd757785c-phdrj 1/1 Running 0 9m24s

kube-system cilium-operator-dd757785c-rn9dh 1/1 Running 0 9m24s

kube-system cilium-xktlw 1/1 Running 0 9m24s

kube-system coredns-64897985d-cjbv8 1/1 Running 0 11m

kube-system coredns-64897985d-hpxvj 1/1 Running 0 11m

kube-system etcd-cilium-kubeproxy-replacement-ebpf-vxlan-control-plane 1/1 Running 0 11m

kube-system kube-apiserver-cilium-kubeproxy-replacement-ebpf-vxlan-control-plane 1/1 Running 0 11m

kube-system kube-controller-manager-cilium-kubeproxy-replacement-ebpf-vxlan-control-plane 1/1 Running 0 11m

kube-system kube-scheduler-cilium-kubeproxy-replacement-ebpf-vxlan-control-plane 1/1 Running 0 11m

local-path-storage local-path-provisioner-5ddd94ff66-gthlr 1/1 Running 0 11m

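There is no kube-proxy Pod: because `kubeProxyReplacement=strict` is set, Cilium fully replaces kube-proxy for Service load balancing. kube-proxy installation must also be disabled when kind creates the k8s cluster, with `kubeProxyMode: "none"`.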

Cilium configuration

# kubectl -n kube-system exec -it ds/cilium -- cilium status

KVStore: Ok Disabled

Kubernetes: Ok 1.23 (v1.23.4) [linux/amd64]

Kubernetes APIs: ["cilium/v2::CiliumClusterwideNetworkPolicy", "cilium/v2::CiliumEndpoint", "cilium/v2::CiliumNetworkPolicy", "cilium/v2::CiliumNode", "core/v1::Namespace", "core/v1::Node", "core/v1::Pods", "core/v1::Service", "discovery/v1::EndpointSlice", "networking.k8s.io/v1::NetworkPolicy"]

KubeProxyReplacement: Strict [eth0 172.18.0.2]

Host firewall: Disabled

CNI Chaining: none

CNI Config file: CNI configuration file management disabled

Cilium: Ok 1.13.0-rc5 (v1.13.0-rc5-dc22a46f)

NodeMonitor: Listening for events on 128 CPUs with 64x4096 of shared memory

Cilium health daemon: Ok

IPAM: IPv4: 3/254 allocated from 10.0.0.0/24,

IPv6 BIG TCP: Disabled

BandwidthManager: Disabled

Host Routing: BPF

Masquerading: BPF [eth0] 10.0.0.0/24 [IPv4: Enabled, IPv6: Disabled]

Controller Status: 22/22 healthy

Proxy Status: OK, ip 10.0.0.109, 0 redirects active on ports 10000-20000

Global Identity Range: min 256, max 65535

Hubble: Ok Current/Max Flows: 4095/4095 (100.00%), Flows/s: 1.06 Metrics: Disabled

Encryption: Disabled

Cluster health: 3/3 reachable (2024-06-28T07:39:34Z)

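- 1. `KubeProxyReplacement: Strict [eth0 172.18.0.2]`
  - Cilium takes over all kube-proxy functions, including Service load balancing and NodePort. This configuration suits cases where you want to make full use of Cilium's advanced networking features and replace kube-proxy entirely; it gives more efficient traffic forwarding and stronger network policy management.
  - Unlike the earlier `KubeProxyReplacement: Strict [eth0 172.18.0.3 (Direct Routing)]`, the `Direct Routing` marker is gone: cross-node traffic no longer uses direct routing but VxLAN.
- 2. `Host Routing: BPF`
  - Host routing is done in BPF.
- 3. `Masquerading: BPF [eth0] 10.0.0.0/24 [IPv4: Enabled, IPv6: Disabled]`
  - IP masquerading (NAT) is done in BPF on interface eth0; traffic within 10.0.0.0/24 is not NATed. IPv4 masquerading is enabled, IPv6 masquerading is disabled.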
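To confirm these three settings on a node without reading the whole status page, the `cilium status` text can be checked mechanically. The sketch below assumes the `Field: Value` layout shown above (possibly padded with spaces); `check_cilium_datapath` is a hypothetical helper, not part of the Cilium CLI.

```bash
#!/bin/bash
# Sketch: check that `cilium status` output (read on stdin) reports the
# eBPF datapath settings discussed above. Returns non-zero if one is missing.
check_cilium_datapath() {
  local status pair field value rc=0
  status=$(cat)
  for pair in "KubeProxyReplacement=Strict" "Host Routing=BPF" "Masquerading=BPF"; do
    field=${pair%%=*}   # text before "="
    value=${pair#*=}    # text after "="
    if printf '%s\n' "$status" | grep -Eq "^[[:space:]]*${field}:[[:space:]]+${value}"; then
      echo "OK      ${field}: ${value}"
    else
      echo "MISSING ${field}: ${value}"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `kubectl -n kube-system exec ds/cilium -- cilium status | check_cilium_datapath`.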

Deploy test Pods in the k8s cluster

```yaml
# cat cni.yaml
apiVersion: apps/v1
kind: DaemonSet
#kind: Deployment
metadata:
  labels:
    app: evescn
  name: evescn
spec:
  #replicas: 1
  selector:
    matchLabels:
      app: evescn
  template:
    metadata:
      labels:
        app: evescn
    spec:
      containers:
      - image: harbor.dayuan1997.com/devops/nettool:0.9
        name: nettoolbox
        securityContext:
          privileged: true
---
apiVersion: v1
kind: Service
metadata:
  name: serversvc
spec:
  type: NodePort
  selector:
    app: evescn
  ports:
  - name: cni
    port: 8080
    targetPort: 80
    nodePort: 32000
```

root@kind:~# kubectl apply -f cni.yaml

daemonset.apps/cilium-with-replacement created

service/serversvc created

root@kind:~# kubectl run net --image=harbor.dayuan1997.com/devops/nettool:0.9

pod/net created

- Check the deployed services

root@kind:~# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

evescn-2454l 1/1 Running 0 3m30s 10.0.0.24 cilium-kubeproxy-replacement-ebpf-vxlan-worker2 <none> <none>

evescn-qff5p 1/1 Running 0 3m30s 10.0.2.210 cilium-kubeproxy-replacement-ebpf-vxlan-worker <none> <none>

net 1/1 Running 0 10s 10.0.2.119 cilium-kubeproxy-replacement-ebpf-vxlan-worker <none> <none>

root@kind:~# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 15m

serversvc NodePort 10.96.158.92 <none> 8080:32000/TCP 3m40s

3. Testing the network

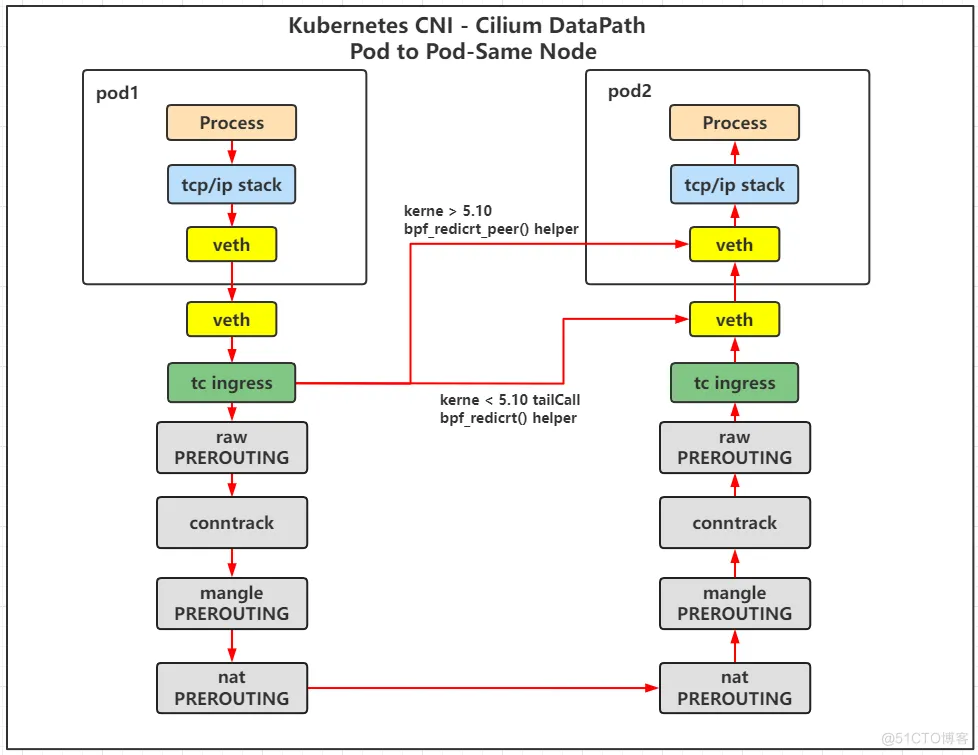

Pod communication on the same node

The packet forwarding flow is the same as for same-node communication in the document Cilium Native Routing with eBPF Mode; see that document.

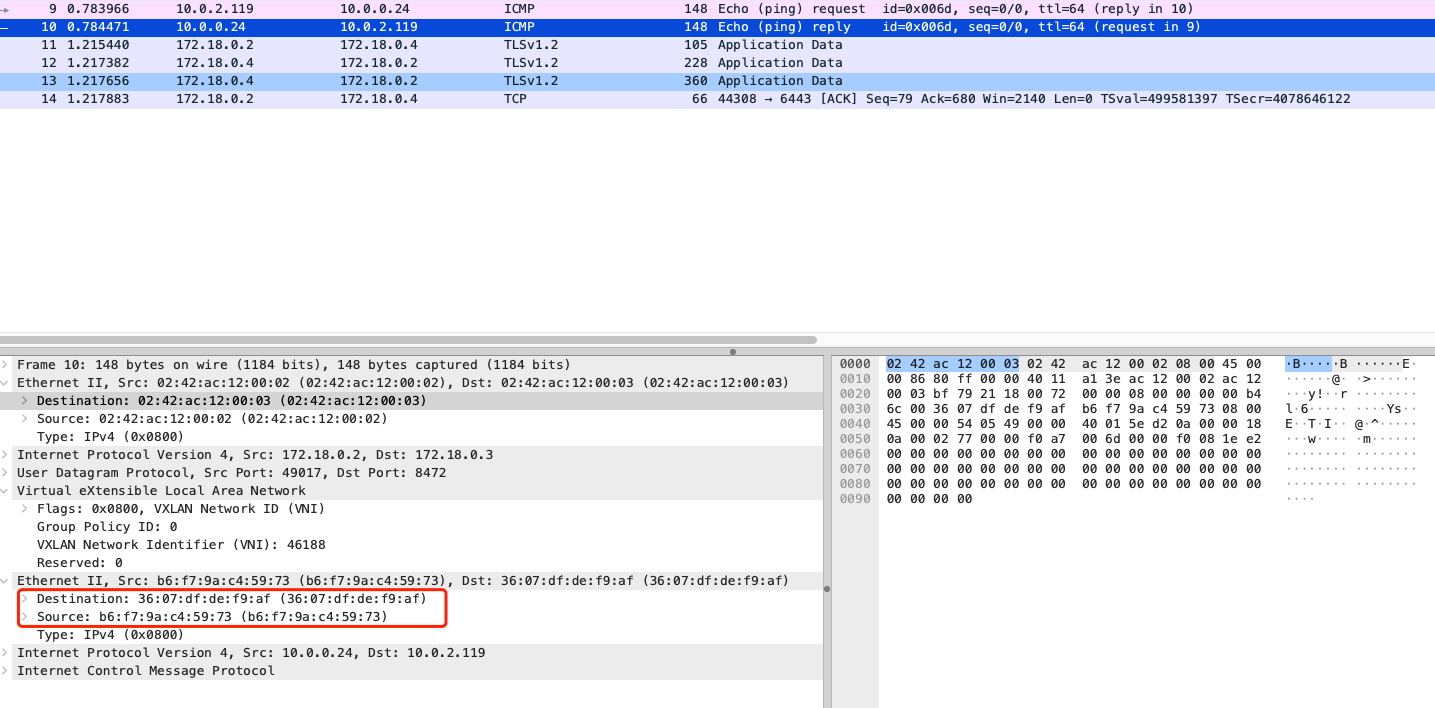

Pod communication across nodes

Pod information

## IP addresses

root@kind:~# kubectl exec -it net -- ip a l

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

8: eth0@if9: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether ae:32:09:5a:08:5c brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.0.2.40/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::ac32:9ff:fe5a:85c/64 scope link

valid_lft forever preferred_lft forever

## Routes

root@kind:~# kubectl exec -it net -- ip r s

default via 10.0.2.194 dev eth0 mtu 1450

10.0.2.194 dev eth0 scope link

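The Pod's IP address has a 32-bit mask, which means the address identifies a single host rather than a subnet. Every other destination is therefore reached through a route: here the default route via 10.0.2.194 (the node's `cilium_host` address), which is itself reachable only through the explicit `10.0.2.194 dev eth0 scope link` route.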
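The effect of the /32 mask can be checked with plain prefix arithmetic: an address is on-link only if it matches the interface address under the mask. A minimal sketch; `ip2int` and `in_cidr` are hypothetical helpers written for illustration.

```bash
#!/bin/bash
# ip2int: dotted IPv4 -> 32-bit integer.
ip2int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# in_cidr ADDR NET/PREFIX: succeeds if ADDR lies inside NET/PREFIX,
# i.e. ADDR would be on-link for an interface configured with NET/PREFIX.
in_cidr() {
  local addr=$1 net=${2%/*} prefix=${2#*/} mask
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  [ $(( $(ip2int "$addr") & mask )) -eq $(( $(ip2int "$net") & mask )) ]
}
```

With the Pod address 10.0.2.40/32, `in_cidr 10.0.2.194 10.0.2.40/32` fails, so even the gateway needs its own `scope link` route, while `in_cidr 10.0.0.24 10.0.0.0/24` succeeds for a /24 prefix.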

Information on the Node hosting the Pod

root@kind:~# docker exec -it cilium-kubeproxy-replacement-ebpf-vxlan-worker bash

## IP addresses

root@cilium-kubeproxy-replacement-ebpf-vxlan-worker:/# ip a l

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: cilium_net@cilium_host: <BROADCAST,MULTICAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 4e:cc:d4:f9:0e:52 brd ff:ff:ff:ff:ff:ff

inet6 fe80::4ccc:d4ff:fef9:e52/64 scope link

valid_lft forever preferred_lft forever

3: cilium_host@cilium_net: <BROADCAST,MULTICAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether ea:0c:d0:41:5c:59 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.194/32 scope link cilium_host

valid_lft forever preferred_lft forever

inet6 fe80::e80c:d0ff:fe41:5c59/64 scope link

valid_lft forever preferred_lft forever

4: cilium_vxlan: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether a6:cc:fc:d1:d9:4f brd ff:ff:ff:ff:ff:ff

inet6 fe80::a4cc:fcff:fed1:d94f/64 scope link

valid_lft forever preferred_lft forever

6: lxc_health@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 22:11:17:5f:1f:15 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::2011:17ff:fe5f:1f15/64 scope link

valid_lft forever preferred_lft forever

8: lxc2e4ac370ba86@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 5a:34:6e:44:ca:47 brd ff:ff:ff:ff:ff:ff link-netns cni-bd3fd18b-78ca-2fd1-3cc6-05a99a711b83

inet6 fe80::5834:6eff:fe44:ca47/64 scope link

valid_lft forever preferred_lft forever

10: lxcf46963b8bfc3@if9: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether ee:7b:eb:76:25:37 brd ff:ff:ff:ff:ff:ff link-netns cni-5af07f3c-65fe-1453-606f-d38b4a698803

inet6 fe80::ec7b:ebff:fe76:2537/64 scope link

valid_lft forever preferred_lft forever

31: eth0@if32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 02:42:ac:12:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.18.0.3/16 brd 172.18.255.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fc00:f853:ccd:e793::3/64 scope global nodad

valid_lft forever preferred_lft forever

inet6 fe80::42:acff:fe12:3/64 scope link

valid_lft forever preferred_lft forever

## Routes

root@cilium-kubeproxy-replacement-ebpf-vxlan-worker:/# ip r s

default via 172.18.0.1 dev eth0

10.0.0.0/24 via 10.0.2.194 dev cilium_host src 10.0.2.194 mtu 1450

10.0.1.0/24 via 10.0.2.194 dev cilium_host src 10.0.2.194 mtu 1450

10.0.2.0/24 via 10.0.2.194 dev cilium_host src 10.0.2.194

10.0.2.194 dev cilium_host scope link

172.18.0.0/16 dev eth0 proto kernel scope link src 172.18.0.3

## ARP table

root@cilium-kubeproxy-replacement-ebpf-vxlan-worker:/# arp -n

Address HWtype HWaddress Flags Mask Iface

172.18.0.4 ether 02:42:ac:12:00:04 C eth0

172.18.0.1 ether 02:42:2f:fe:43:35 C eth0

172.18.0.2 ether 02:42:ac:12:00:02 C eth0

## VxLAN details

root@cilium-kubeproxy-replacement-ebpf-vxlan-worker:/# ip -d link show | grep -A 3 vxlan

4: cilium_vxlan: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/ether a6:cc:fc:d1:d9:4f brd ff:ff:ff:ff:ff:ff promiscuity 0 minmtu 68 maxmtu 65535

vxlan external addrgenmode eui64 numtxqueues 1 numrxqueues 1 gso_max_size 65536 gso_max_segs 65535

-

1.查看

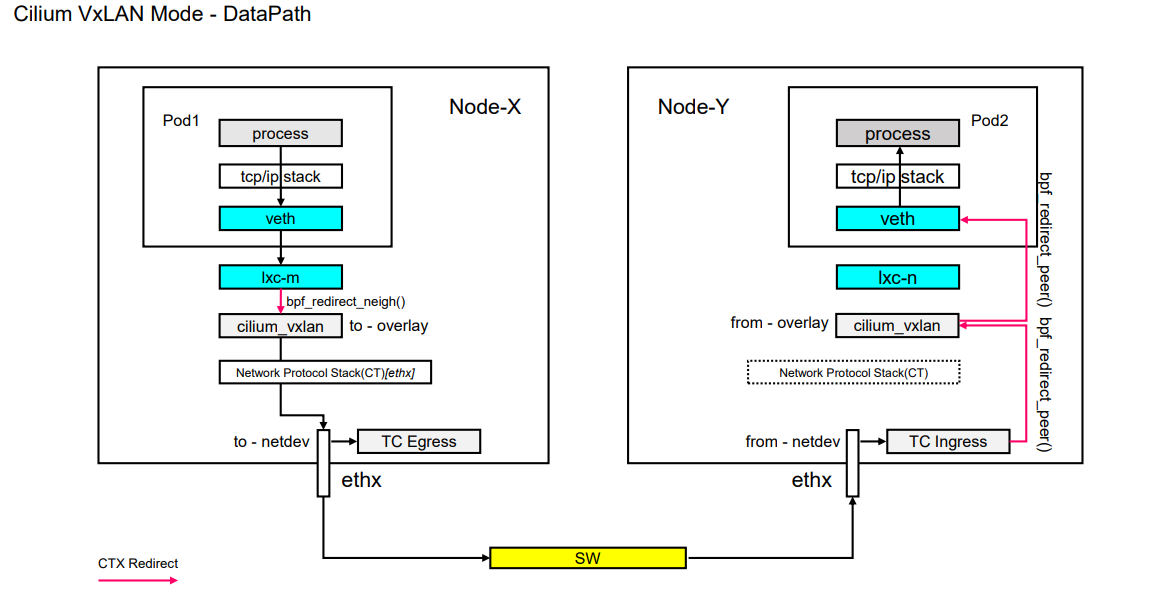

Cilium VxLAN信息发现,Cilium VxLAN的模式和传统的VxLAN模式区别很大- 没有 VxLAN 封装数据需要使用的信息

- 并且

cilium_vxlan网卡没有ip地址,那么如何学习到对端vxlan网卡的mac地址?那么在封装包的时候使用什么mac地址信息? - 传统模式数据包到达宿主机后通过

route路由表信息发送到vxlan接口,但是现在宿主机路由表对端都走的cilium_host网卡。转发跨主机数据包到cilium_vxlan网卡功能在cilium使用底层使用tc hook实现,类似 同节点通讯 也不会走到cilium_host网卡一样

- 2. The peer address needed for VxLAN encapsulation is in fact stored in Cilium:

root@cilium-kubeproxy-replacement-ebpf-vxlan-worker:/home/cilium# cilium bpf tunnel list

TUNNEL VALUE

10.0.1.0:0 172.18.0.4:0

10.0.0.0:0 172.18.0.2:0

- 3. VxLAN encapsulation also needs a VNI, and this is stored in Cilium as well:

root@cilium-kubeproxy-replacement-ebpf-vxlan-worker:/home/cilium# cilium identity list

ID LABELS

1 reserved:host

2 reserved:world

3 reserved:unmanaged

4 reserved:health

5 reserved:init

6 reserved:remote-node

7 reserved:kube-apiserver

reserved:remote-node

8 reserved:ingress

3178 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=cilium-kubeproxy-replacement-ebpf-vxlan

k8s:io.cilium.k8s.policy.serviceaccount=coredns

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=kube-dns

32359 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

k8s:io.cilium.k8s.policy.cluster=cilium-kubeproxy-replacement-ebpf-vxlan

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=default

k8s:run=net

42512 k8s:app=local-path-provisioner

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=local-path-storage

k8s:io.cilium.k8s.policy.cluster=cilium-kubeproxy-replacement-ebpf-vxlan

k8s:io.cilium.k8s.policy.serviceaccount=local-path-provisioner-service-account

k8s:io.kubernetes.pod.namespace=local-path-storage

46188 k8s:app=evescn

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

k8s:io.cilium.k8s.policy.cluster=cilium-kubeproxy-replacement-ebpf-vxlan

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=default
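Putting the two tables together: the destination Pod IP selects the outer destination from the tunnel map, and the VNI carries the sender's identity (the monitor log later shows `from seclabel 32359` for `net`). The real lookup happens in a BPF map inside the datapath; this sketch only mimics it on the CLI output, and assumes each node owns a /24 Pod CIDR, as the `10.0.x.0/24` routes above suggest. `tunnel_endpoint` and `encap_params` are hypothetical helpers.

```bash
#!/bin/bash
# tunnel_endpoint DST_POD_IP < output of `cilium bpf tunnel list`
# Assumption: per-node /24 Pod CIDRs, so the tunnel key is DST masked to /24.
tunnel_endpoint() {
  local dst=$1 prefix key value
  prefix=${dst%.*}.0                 # 10.0.0.24 -> 10.0.0.0
  while read -r key value; do
    if [ "${key%:*}" = "$prefix" ]; then
      echo "${value%:*}"             # strip the ":0" suffix
      return 0
    fi
  done
  return 1                           # no entry: destination is not remote
}

# encap_params DST_POD_IP SRC_IDENTITY < tunnel list
encap_params() {
  local remote
  remote=$(tunnel_endpoint "$1") || return 1
  echo "outer_dst=$remote udp_dport=8472 vni=$2"
}
```

For the ping below, `encap_params 10.0.0.24 32359` over the tunnel list shown above yields `outer_dst=172.18.0.2 udp_dport=8472 vni=32359`.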

Ping test from the Pod

root@kind:~# kubectl exec -it net -- ping 10.0.0.72 -c 1

PING 10.0.0.72 (10.0.0.72): 56 data bytes

64 bytes from 10.0.0.72: seq=0 ttl=62 time=1.916 ms

--- 10.0.0.72 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 1.916/1.916/1.916 ms

Capture on the Pod's eth0

net~$ tcpdump -pne -i eth0

08:36:54.353393 72:4a:62:7e:62:6e > ee:7b:eb:76:25:37, ethertype IPv4 (0x0800), length 98: 10.0.2.119 > 10.0.0.24: ICMP echo request, id 64, seq 0, length 64

08:36:54.354259 ee:7b:eb:76:25:37 > 72:4a:62:7e:62:6e, ethertype IPv4 (0x0800), length 98: 10.0.0.24 > 10.0.2.119: ICMP echo reply, id 64, seq 0, length 64

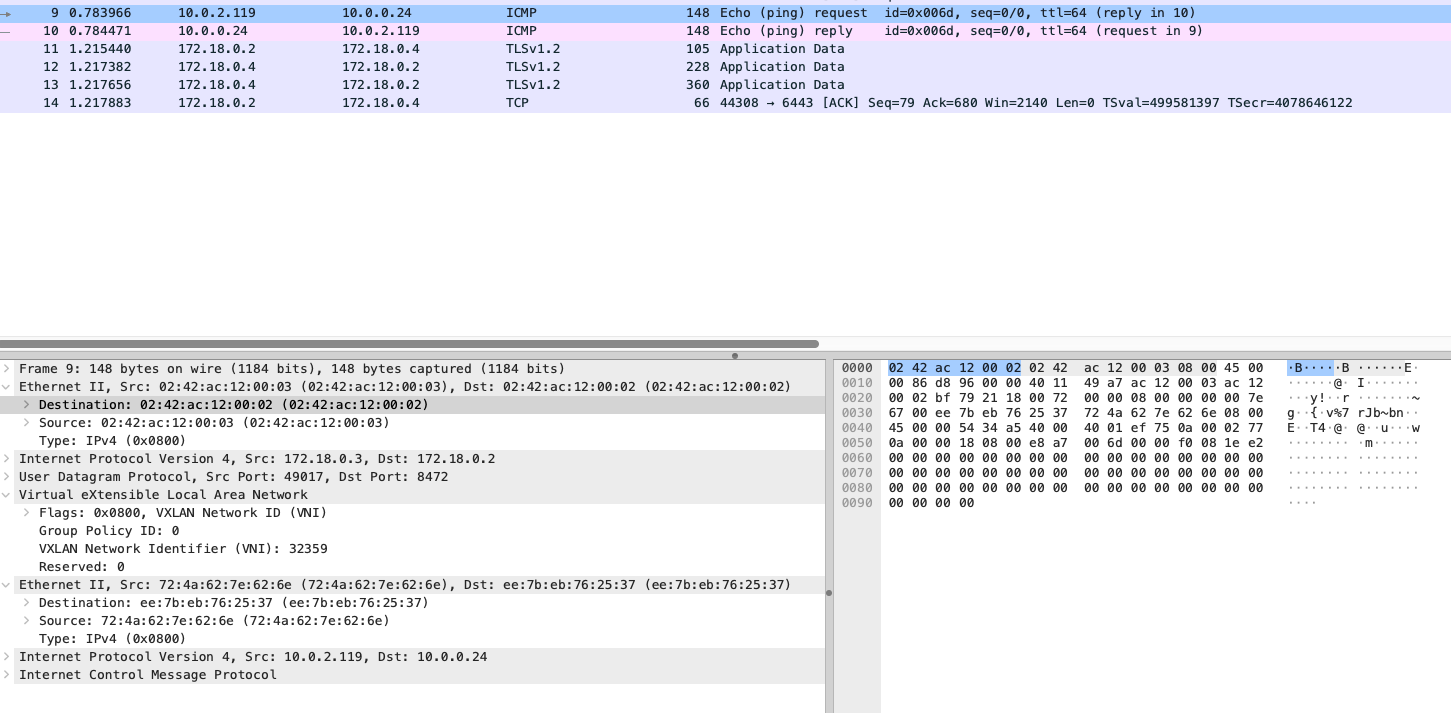

Capture on eth0 of node cilium-kubeproxy-replacement-ebpf-vxlan-worker and analyze it with Wireshark

root@cilium-kubeproxy-replacement-ebpf-vxlan-worker:/# tcpdump -pne -i eth0 -w /tmp/vxlan.cap

root@cilium-kubeproxy-replacement-ebpf-vxlan-worker:/# sz /tmp/vxlan.cap

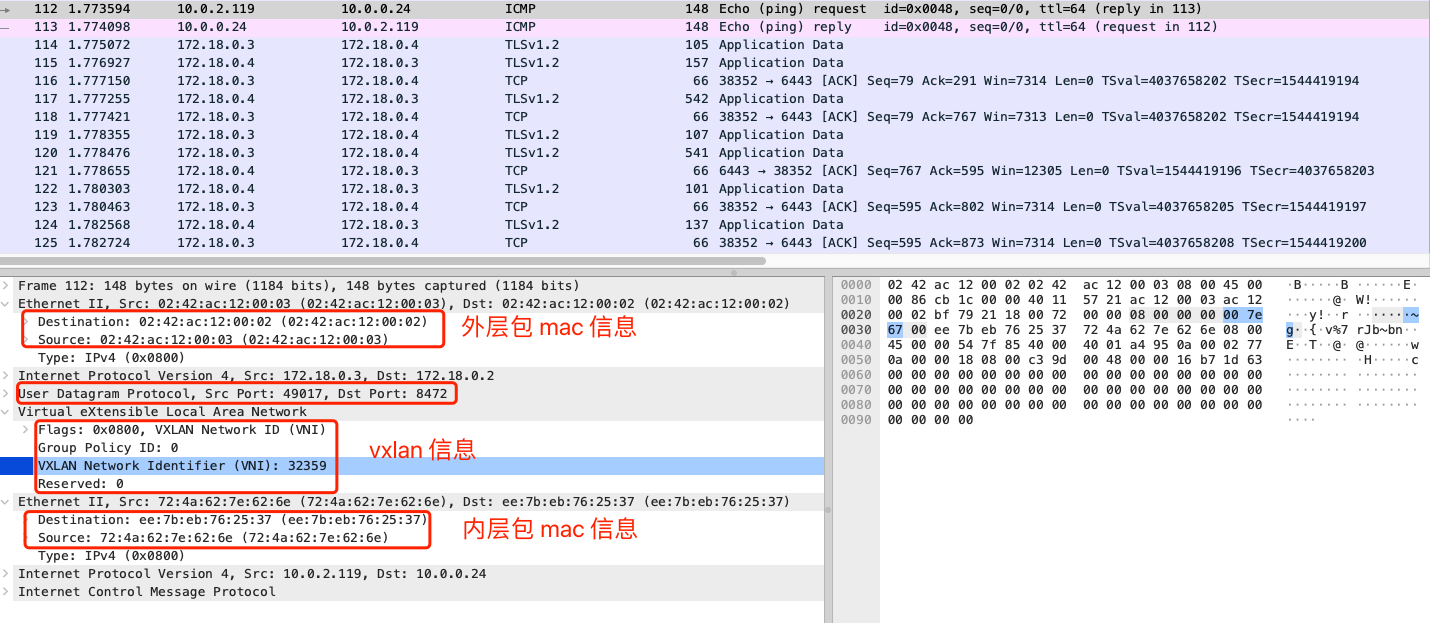

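- 1. Request packet: in the outer Ethernet header, the source MAC 02:42:ac:12:00:03 is the eth0 MAC of cilium-kubeproxy-replacement-ebpf-vxlan-worker, and the destination MAC 02:42:ac:12:00:02 is the eth0 MAC of cilium-kubeproxy-replacement-ebpf-vxlan-worker2, the node hosting the target Pod. The data is still carried over UDP port 8472, and the VNI in the VxLAN header is an ID from the `cilium identity list` output.
  - Note the MAC addresses of the inner packet. In traditional VxLAN, the inner source MAC would be the local VxLAN interface's MAC and the inner destination MAC the peer VxLAN interface's MAC. In Cilium's VxLAN mode the VxLAN interface has no IP address and cannot learn the peer's MAC, so which addresses are used? Comparing with the source Pod's MAC information earlier shows that the inner frame is identical to the one captured on the Pod's eth0. Conclusion: in Cilium's VxLAN mode the VxLAN interface does not learn the peer MAC. Instead of rewriting the inner MAC addresses as traditional VxLAN does, Cilium keeps the source Pod's eth0 MAC and the MAC of that eth0's veth pair peer as the inner MAC addresses, saving one MAC rewrite.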

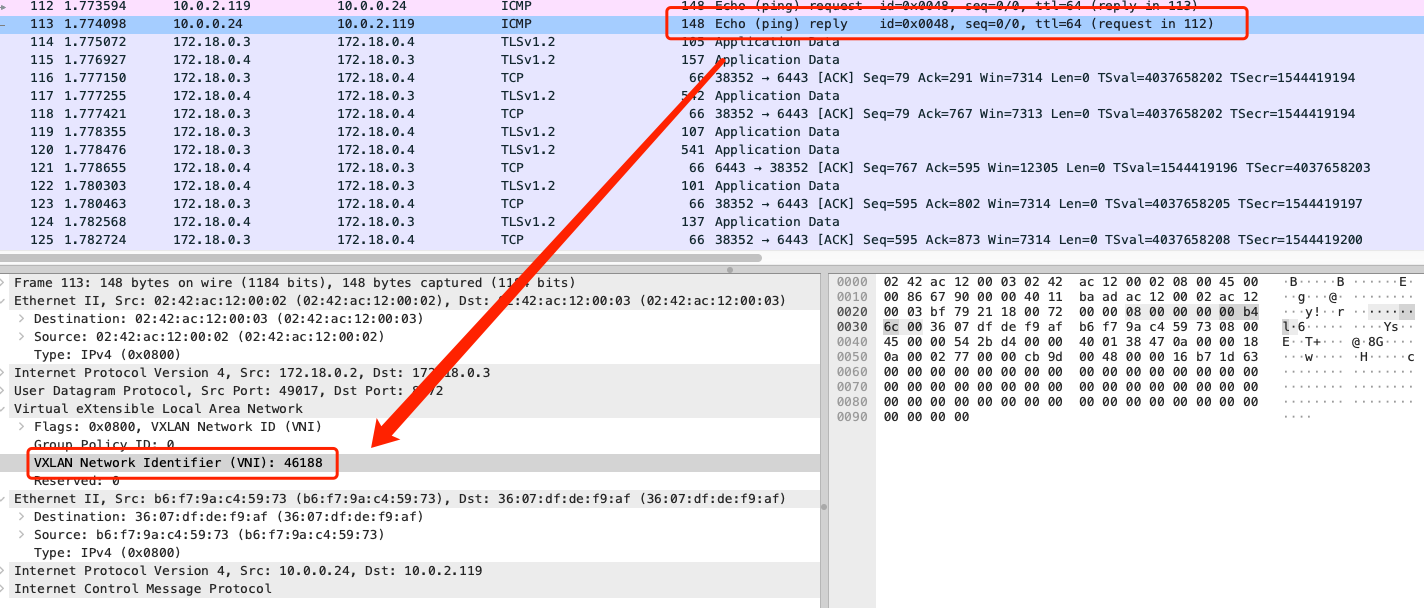

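- 2. Reply packet
  - The reply's VNI differs from the request's: in Cilium's VxLAN mode the two directions of one exchange use different VNIs, unlike traditional VxLAN. The sending node puts the security identity of the sending Pod (as listed by `cilium identity list`) into the VNI. The reply has VNI = 46188, and `46188 k8s:app=evescn` is the identity of the replying Pod evescn-2454l; the request has VNI = 32359, and `32359 k8s:run=net` is the identity of the requesting Pod net.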


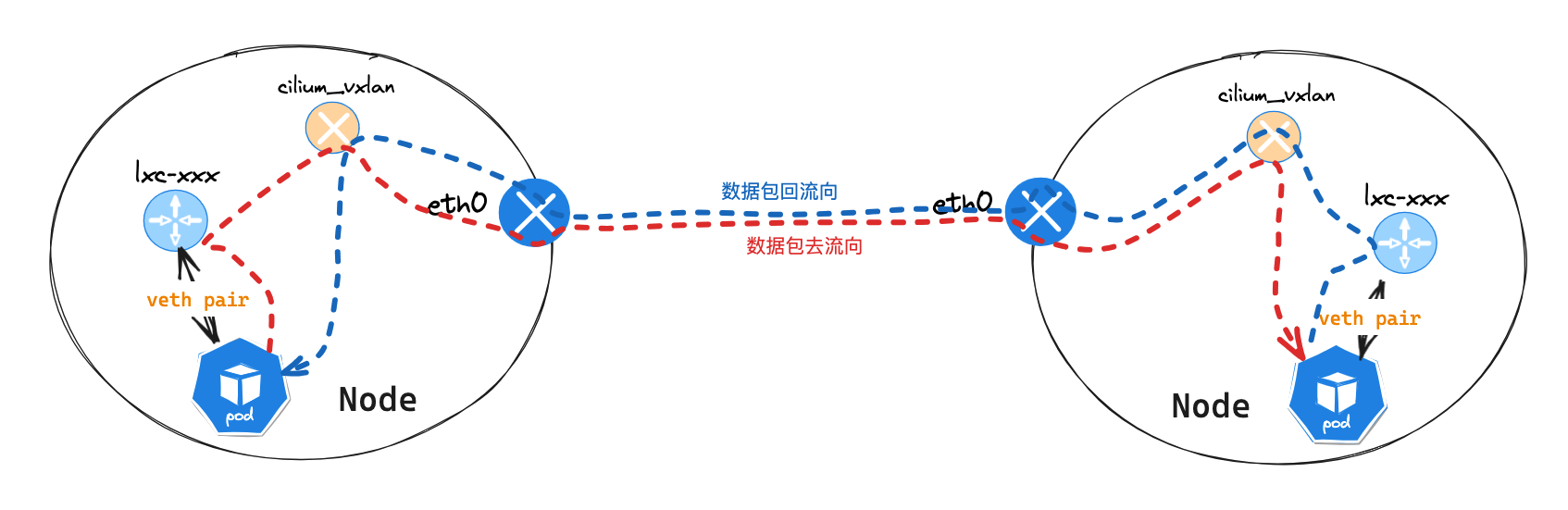

Capture and analysis on eth0 of node cilium-kubeproxy-replacement-ebpf-vxlan-worker2

root@cilium-kubeproxy-replacement-ebpf-vxlan-worker2:/# tcpdump -pne -i eth0 -w /tmp/vxlan2.cap

root@cilium-kubeproxy-replacement-ebpf-vxlan-worker2:/# sz /tmp/vxlan2.cap

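- 1. Request packet: same as on eth0 of cilium-kubeproxy-replacement-ebpf-vxlan-worker.
- 2. Reply packet: the inner MAC addresses are those of the target Pod's eth0 and its veth pair peer.

Capture on cilium_vxlan of node cilium-kubeproxy-replacement-ebpf-vxlan-worker2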


root@cilium-kubeproxy-replacement-ebpf-vxlan-worker2:/# tcpdump -pne -i cilium_vxlan

09:24:28.995715 72:4a:62:7e:62:6e > ee:7b:eb:76:25:37, ethertype IPv4 (0x0800), length 98: 10.0.2.119 > 10.0.0.24: ICMP echo request, id 123, seq 0, length 64

09:24:28.996094 b6:f7:9a:c4:59:73 > 36:07:df:de:f9:af, ethertype IPv4 (0x0800), length 98: 10.0.0.24 > 10.0.2.119: ICMP echo reply, id 123, seq 0, length 64

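- 1. The MAC addresses in the request are those of the source Pod's eth0 and its veth pair peer (ee:7b:eb:76:25:37 is lxcf46963b8bfc3 on the worker node).
- 2. The MAC addresses in the reply are those of the target Pod's eth0 (b6:f7:9a:c4:59:73, evescn-2454l) and its veth pair peer (36:07:df:de:f9:af, lxc7c697c641cac on worker2).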

IP information of node cilium-kubeproxy-replacement-ebpf-vxlan-worker2

root@cilium-kubeproxy-replacement-worker:/# ip a l

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: cilium_net@cilium_host: <BROADCAST,MULTICAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether c2:a6:57:6f:a9:3c brd ff:ff:ff:ff:ff:ff

inet6 fe80::c0a6:57ff:fe6f:a93c/64 scope link

valid_lft forever preferred_lft forever

3: cilium_host@cilium_net: <BROADCAST,MULTICAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 32:b1:f8:2d:ab:7e brd ff:ff:ff:ff:ff:ff

inet 10.0.0.109/32 scope link cilium_host

valid_lft forever preferred_lft forever

inet6 fe80::30b1:f8ff:fe2d:ab7e/64 scope link

valid_lft forever preferred_lft forever

4: cilium_vxlan: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether e6:ec:04:8e:b8:86 brd ff:ff:ff:ff:ff:ff

inet6 fe80::e4ec:4ff:fe8e:b886/64 scope link

valid_lft forever preferred_lft forever

6: lxc_health@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 02:30:97:b1:cc:17 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::30:97ff:feb1:cc17/64 scope link

valid_lft forever preferred_lft forever

8: lxc7c697c641cac@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 36:07:df:de:f9:af brd ff:ff:ff:ff:ff:ff link-netns cni-1a2f2717-a83f-1aea-102d-e9c4ae83cc51

inet6 fe80::3407:dfff:fede:f9af/64 scope link

valid_lft forever preferred_lft forever

29: eth0@if30: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 02:42:ac:12:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.18.0.2/16 brd 172.18.255.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fc00:f853:ccd:e793::2/64 scope global nodad

valid_lft forever preferred_lft forever

inet6 fe80::42:acff:fe12:2/64 scope link

valid_lft forever preferred_lft forever

- IP information of the target Pod

root@kind:~# kubectl exec -it evescn-2454l -- ip a l

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

7: eth0@if8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether b6:f7:9a:c4:59:73 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.0.0.24/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::b4f7:9aff:fec4:5973/64 scope link

valid_lft forever preferred_lft forever

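- The packet leaves the `net` Pod and, per the Pod's routing table (`default via 10.0.2.194 dev eth0 mtu 1450`), is sent to the node.
- On the node, the packet is not forwarded by the host routing table: Cilium's tc hook on the Pod's veth (from-container) intercepts it and redirects it to the `cilium_vxlan` interface. Because `cilium_vxlan` has no IP address and learns no peer MAC, the inner MAC addresses are not rewritten. Cilium looks up the tunnel endpoint (`cilium bpf tunnel list`), encapsulates the packet and sends it to `eth0`.
- After encapsulation, the packet leaves through `eth0` towards the peer node.
- The peer node sees a VxLAN packet to UDP port 8472 and hands it to the kernel VxLAN device listening on that port (`cilium_vxlan`).
- After decapsulation, the inner destination 10.0.0.24 is in the local Pod CIDR, so the packet is delivered directly to the target Pod (`bpf_redirect_peer()`).
- The packet finally reaches the target Pod.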

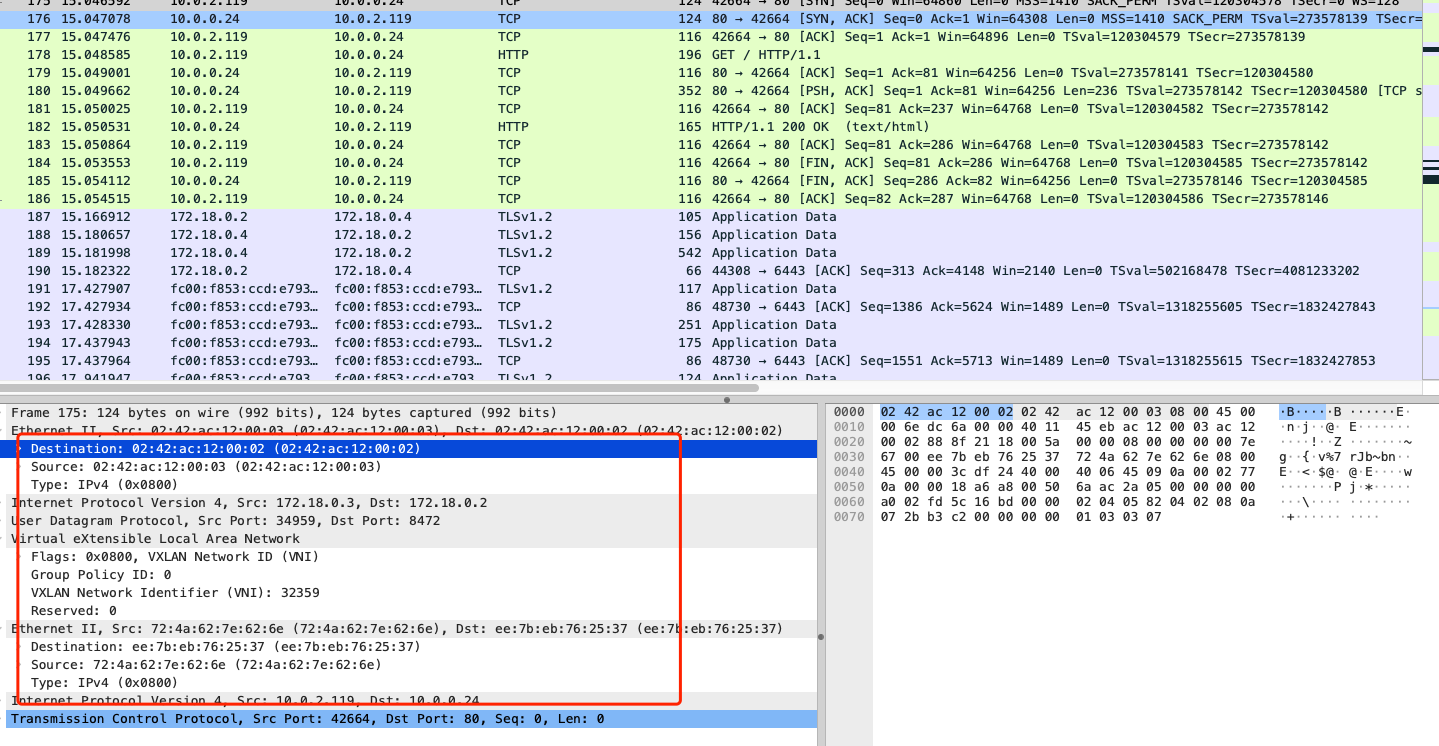

Service communication

As explained in the Service communication part of Cilium Native Routing with eBPF Mode, traffic goes directly to the Pod IP because Cilium replaces kube-proxy (`--set kubeProxyReplacement=strict`).

- Check the Service

root@kind:~# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 9m

serversvc NodePort 10.96.93.201 <none> 8080:32000/TCP 76s

From `net`, request port 32000 on the Nodes hosting the Pods

root@kind:~# k exec -it net -- curl 172.18.0.3:32000

PodName: evescn-qff5p | PodIP: eth0 10.0.2.210/32

root@kind:~# k exec -it net -- curl 172.18.0.2:32000

PodName: evescn-2454l | PodIP: eth0 10.0.0.24/32

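Capture on the nodes' eth0

Only the cross-node request can be captured on a node's eth0, and it still uses VxLAN; same-node packets never appear on eth0. Could a future version of Cilium's VxLAN eBPF mode go further, for example preferring a Service backend Pod that runs on the same node as the client?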

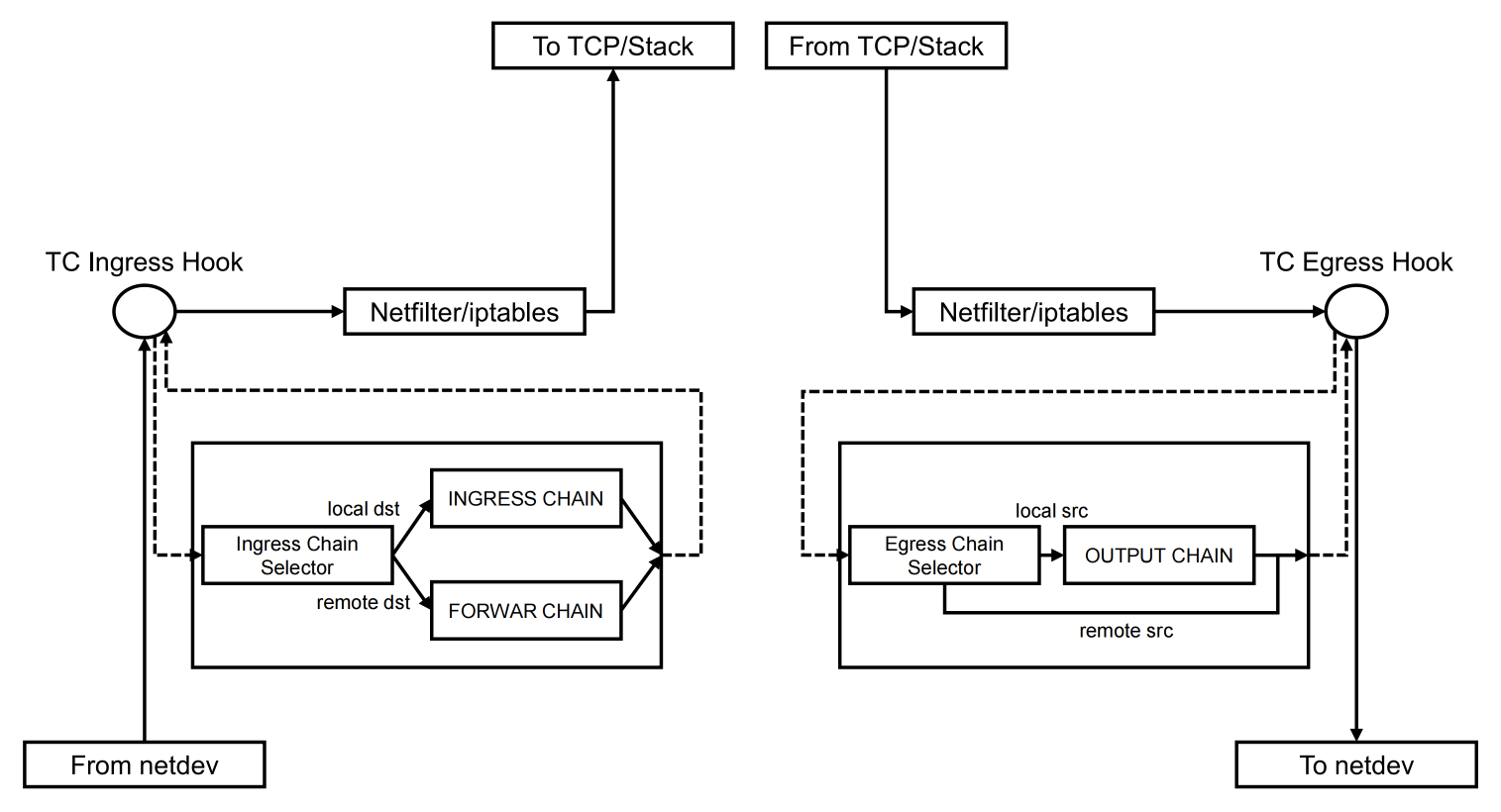

4. TC Hook

TC to-overlay

root@cilium-kubeproxy-replacement-ebpf-vxlan-worker2:/# tc filter show dev cilium_vxlan egress

filter protocol all pref 1 bpf chain 0

filter protocol all pref 1 bpf chain 0 handle 0x1 bpf_overlay.o:[to-overlay] direct-action not_in_hw id 8264 tag 5f26d5ede04f0820 jited

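- 1. This is an eBPF section. When Cilium's overlay networking model is chosen, overlay-related virtual network devices are used; the Cilium agent creates them in the host namespace at startup, and the overlay network is built on VxLAN.
- 2. `to-overlay` is attached to the egress of the `cilium_vxlan` device. Its main work includes egress SNAT via `nodeport_nat_ipv4_fwd` and `snat_v4_process`; SNAT rewrites the source address to the host's address for cross-host access.
- 3. The packets handled by `to-overlay` are redirected to it by the `from-container` program (CTX redirect) on `lxc-xxx`, which shows that `to-overlay` handles Pod traffic leaving through VxLAN.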
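When checking which program is attached where, only the program field of the `tc filter show` line matters. A small parsing sketch, assuming the line format shown above: it prints the bracketed ELF section (e.g. `to-overlay`), or the bare program name (e.g. `cilium-eth0`, as on eth0) when no section is shown. `tc_bpf_prog` is a hypothetical helper.

```bash
#!/bin/bash
# Read `tc filter show dev DEV {ingress|egress}` output on stdin and print
# the attached BPF program name for each "... bpf ... handle ..." line.
tc_bpf_prog() {
  local line rest
  while IFS= read -r line; do
    case $line in
      *" bpf "*" handle "*)
        rest=${line#* handle }     # "0x1 bpf_overlay.o:[to-overlay] direct-action ..."
        rest=${rest#* }            # drop the handle id
        rest=${rest%% *}           # "bpf_overlay.o:[to-overlay]" or "cilium-eth0"
        case $rest in
          *\[*\]) rest=${rest#*\[}; rest=${rest%\]} ;;   # keep the section name
        esac
        echo "$rest"
        ;;
    esac
  done
}
```

Usage: `tc filter show dev cilium_vxlan egress | tc_bpf_prog` prints `to-overlay` for the output above.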

TC from-overlay

root@cilium-kubeproxy-replacement-ebpf-vxlan-worker2:/# tc filter show dev cilium_vxlan ingress

filter protocol all pref 1 bpf chain 0

filter protocol all pref 1 bpf chain 0 handle 0x1 bpf_overlay.o:[from-overlay] direct-action not_in_hw id 8251 tag 10c7559775622132 jited

- 1. Like `to-overlay`, this is an eBPF section on the overlay device that the agent creates in the host namespace at startup; the overlay network is built on VxLAN.
- 2. `from-overlay` is attached to the TC ingress of the `cilium_vxlan` device. It mainly handles packets entering Pods: before reaching a Pod, they first pass through `from-overlay` on `cilium_vxlan`.

Its main tasks are:

- 1. IPsec-related processing;
- 2. delivering packets to a backend Pod on this node via `ipv4_local_delivery`, which also passes through `to-container`;
- 3. when a Service is accessed via NodePort and the backend is not local, handling the NodePort request much like `from-netdev` does, including DNAT and CT, and finishing with `ep_tail_call(ctx, CILIUM_CALL_IPV4_NODEPORT_NAT)` or `ep_tail_call(ctx, CILIUM_CALL_IPV4_NODEPORT_DSR)`, depending on whether the NodePort LB mode is SNAT or DSR;
- 4. all packets reach the host through the physical NIC, so how do they get to `cilium_vxlan`? The `from-netdev` program attached to the physical NIC passes them to `cilium_vxlan` by redirect, according to the tunnel type. See `encap_and_redirect_with_nodeid` in `from-netdev`, which mainly uses two functions: `__encap_with_nodeid(ctx, tunnel_endpoint, seclabel, monitor)` performs the encapsulation, and `ctx_redirect(ctx, ENCAP_IFINDEX, 0)` redirects the packet to `ENCAP_IFINDEX`, the ifindex of `cilium_vxlan`.

TC to-netdev

root@cilium-kubeproxy-replacement-ebpf-vxlan-worker:/# tc filter show dev eth0 egress

filter protocol all pref 1 bpf chain 0

filter protocol all pref 1 bpf chain 0 handle 0x1 cilium-eth0 direct-action not_in_hw id 7186 tag d1f9b589b59b2d62 jited

This eBPF program is attached to the TC egress of the physical NIC. Its main role is to process packets leaving the host.

Two main scenarios involve `to-netdev`:

- 1. The host firewall is enabled. This firewall is not implemented with iptables but is the eBPF-based Host Network Policy, which decides which packets may leave the host; this is done in `handle_to_netdev_ipv4`.
- 2. NodePort is enabled and the backend Pod is not on this host: SNAT is performed here, via `handle_nat_fwd` and `nodeport_nat_ipv4_fwd`.
- 3. Relationship with `from-overlay`: see item 4 in the `from-overlay` section above (`from-netdev` and `encap_and_redirect_with_nodeid`).

TC from-netdev

root@cilium-kubeproxy-replacement-ebpf-vxlan-worker:/# tc filter show dev eth0 ingress

filter protocol all pref 1 bpf chain 0

filter protocol all pref 1 bpf chain 0 handle 0x1 cilium-eth0 direct-action not_in_hw id 7177 tag 94772f5b3d31e1ac jited

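This eBPF program is attached to the TC ingress of the physical NIC. Its main role is to process packets arriving at the host.

Two main scenarios involve `from-netdev`:

- 1. The host firewall is enabled. This firewall is not implemented with iptables but is the eBPF-based Host Network Policy, which decides which packets may reach the host.
- 2. NodePort is enabled: external access to Services through K8S NodePort is handled here, including DNAT, LB and CT. External traffic is sent to a local Pod, or redirected via TC to `to-netdev` for SNAT and then forwarded to the host running the backend Pod.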
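The hooks in this section can be summarized as a lookup from (device, tc direction) to program, followed by the order in which the cross-node request in this walkthrough meets them, as described in this document. A sketch only: `cilium_hook` is a hypothetical helper, and `lxc` stands for any host-side `lxc*` veth.

```bash
#!/bin/bash
# cilium_hook DEVICE DIRECTION -> name of the Cilium tc program described above.
cilium_hook() {
  case "$1:$2" in
    lxc:ingress)          echo from-container ;;  # traffic leaving the Pod
    cilium_vxlan:egress)  echo to-overlay ;;
    cilium_vxlan:ingress) echo from-overlay ;;
    eth0:egress)          echo to-netdev ;;
    eth0:ingress)         echo from-netdev ;;
    *) return 1 ;;
  esac
}

# Request path: source node (first three hops), then destination node.
for hop in lxc:ingress cilium_vxlan:egress eth0:egress eth0:ingress cilium_vxlan:ingress; do
  echo "${hop%%:*} ${hop#*:} -> $(cilium_hook "${hop%%:*}" "${hop#*:}")"
done
```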

to-overlay / from-overlay flow

cilium monitor on the source node

root@cilium-kubeproxy-replacement-ebpf-vxlan-worker:/home/cilium# cilium monitor -vv

CPU 01: MARK 0x0 FROM 189 DEBUG: Conntrack lookup 1/2: src=10.0.2.119:278 dst=10.0.0.24:0 // 1. handling of the packet from the local Pod

CPU 01: MARK 0x0 FROM 189 DEBUG: Conntrack lookup 2/2: nexthdr=1 flags=1

CPU 01: MARK 0x0 FROM 189 DEBUG: CT verdict: New, revnat=0

CPU 01: MARK 0x0 FROM 189 DEBUG: Successfully mapped addr=10.0.0.24 to identity=46188

CPU 01: MARK 0x0 FROM 189 DEBUG: Conntrack create: proxy-port=0 revnat=0 src-identity=32359 lb=0.0.0.0

CPU 01: MARK 0x0 FROM 189 DEBUG: Encapsulating to node 2886860802 (0xac120002) from seclabel 32359 // 2. VxLAN encapsulation towards node 172.18.0.2 (0xac120002); packet details below

------------------------------------------------------------------------------

Ethernet {Contents=[..14..] Payload=[..86..] SrcMAC=72:4a:62:7e:62:6e DstMAC=ee:7b:eb:76:25:37 EthernetType=IPv4 Length=0}

IPv4 {Contents=[..20..] Payload=[..64..] Version=4 IHL=5 TOS=0 Length=84 Id=64237 Flags=DF FragOffset=0 TTL=64 Protocol=ICMPv4 Checksum=10541 SrcIP=10.0.2.119 DstIP=10.0.0.24 Options=[] Padding=[]}

ICMPv4 {Contents=[..8..] Payload=[..56..] TypeCode=EchoRequest Checksum=5865 Id=278 Seq=0}

Failed to decode layer: No decoder for layer type Payload

CPU 01: MARK 0x0 FROM 189 to-overlay: 98 bytes (98 captured), state new, interface cilium_vxlan, , identity 32359->46188, orig-ip 0.0.0.0 // to-overlay completes the encapsulation; the packet is then sent to the peer node

CPU 01: MARK 0x0 FROM 3386 DEBUG: Conntrack lookup 1/2: src=172.18.0.3:49017 dst=172.18.0.2:8472 // 3. reply packet from the peer node; after cilium_vxlan, the encapsulated packet is handled by the host TCP/IP stack

CPU 01: MARK 0x0 FROM 3386 DEBUG: Conntrack lookup 2/2: nexthdr=17 flags=1

CPU 01: MARK 0x0 FROM 3386 DEBUG: CT entry found lifetime=342890, revnat=0

CPU 01: MARK 0x0 FROM 3386 DEBUG: CT verdict: Established, revnat=0

CPU 01: MARK 0x0 FROM 3386 DEBUG: Successfully mapped addr=172.18.0.2 to identity=6

CPU 01: MARK 0x0 FROM 0 DEBUG: Tunnel decap: id=46188 flowlabel=0

CPU 01: MARK 0x0 FROM 0 DEBUG: Attempting local delivery for container id 189 from seclabel 46188

CPU 01: MARK 0x0 FROM 189 DEBUG: Conntrack lookup 1/2: src=10.0.0.24:0 dst=10.0.2.119:278 // 5. ICMP EchoReply (after eth0 from-netdev and cilium_vxlan from-overlay), handled for the local Pod

CPU 01: MARK 0x0 FROM 189 DEBUG: Conntrack lookup 2/2: nexthdr=1 flags=0

CPU 01: MARK 0x0 FROM 189 DEBUG: CT entry found lifetime=342891, revnat=0

CPU 01: MARK 0x0 FROM 189 DEBUG: CT verdict: Reply, revnat=0

------------------------------------------------------------------------------ // 4. details of the reply packet from the peer node

Ethernet {Contents=[..14..] Payload=[..86..] SrcMAC=ee:7b:eb:76:25:37 DstMAC=72:4a:62:7e:62:6e EthernetType=IPv4 Length=0}

IPv4 {Contents=[..20..] Payload=[..64..] Version=4 IHL=5 TOS=0 Length=84 Id=53736 Flags= FragOffset=0 TTL=63 Protocol=ICMPv4 Checksum=37682 SrcIP=10.0.0.24 DstIP=10.0.2.119 Options=[] Padding=[]}

ICMPv4 {Contents=[..8..] Payload=[..56..] TypeCode=EchoReply Checksum=7913 Id=278 Seq=0}

Failed to decode layer: No decoder for layer type Payload

CPU 01: MARK 0x0 FROM 189 to-endpoint: 98 bytes (98 captured), state reply, interface lxcf46963b8bfc3, , identity 46188->32359, orig-ip 10.0.0.24, to endpoint 189
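The node ID in `Encapsulating to node 2886860802 (0xac120002)` is the outer destination IPv4 address as a 32-bit integer: 0xac = 172, 0x12 = 18, 0x00 = 0, 0x02 = 2, i.e. 172.18.0.2 (worker2); likewise 2886860803 is 172.18.0.3 (worker). A sketch that decodes such monitor lines, assuming the line format shown above; `int2ip` and `decode_encap_lines` are hypothetical helpers.

```bash
#!/bin/bash
# int2ip: 32-bit integer -> dotted IPv4.
int2ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Read `cilium monitor -vv` output on stdin; for each encapsulation event
# print the outer destination node IP and the source identity (seclabel).
decode_encap_lines() {
  local line id label
  while IFS= read -r line; do
    case $line in
      *"Encapsulating to node "*)
        id=${line#*Encapsulating to node }
        id=${id%% *}                 # decimal node id
        label=${line#*from seclabel }
        label=${label%% *}           # identity, also used as the VNI
        echo "$(int2ip "$id") seclabel=$label"
        ;;
    esac
  done
}
```

Usage: `cilium monitor -vv | decode_encap_lines` prints `172.18.0.2 seclabel=32359` for the request above.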

cilium monitor on the destination node

root@cilium-kubeproxy-replacement-ebpf-vxlan-worker2:/home/cilium# cilium monitor -vv

CPU 01: MARK 0x0 FROM 630 DEBUG: Conntrack lookup 1/2: src=172.18.0.2:49017 dst=172.18.0.3:8472 // 1. packet received from the peer node

CPU 01: MARK 0x0 FROM 630 DEBUG: Conntrack lookup 2/2: nexthdr=17 flags=1

CPU 01: MARK 0x0 FROM 630 DEBUG: CT entry found lifetime=342875, revnat=0

CPU 01: MARK 0x0 FROM 630 DEBUG: CT verdict: Established, revnat=0

CPU 01: MARK 0x0 FROM 630 DEBUG: Successfully mapped addr=172.18.0.3 to identity=6

CPU 01: MARK 0x0 FROM 0 DEBUG: Tunnel decap: id=32359 flowlabel=0

CPU 01: MARK 0x0 FROM 0 DEBUG: Attempting local delivery for container id 2546 from seclabel 32359

CPU 01: MARK 0x0 FROM 2546 DEBUG: Conntrack lookup 1/2: src=10.0.2.119:278 dst=10.0.0.24:0 // 2. ICMP EchoRequest: after eth0 from-netdev and cilium_vxlan from-overlay receive it, the inner MAC addresses are rewritten and the packet is sent to the local Pod

CPU 01: MARK 0x0 FROM 2546 DEBUG: Conntrack lookup 2/2: nexthdr=1 flags=0

CPU 01: MARK 0x0 FROM 2546 DEBUG: CT verdict: New, revnat=0

CPU 01: MARK 0x0 FROM 2546 DEBUG: Conntrack create: proxy-port=0 revnat=0 src-identity=32359 lb=0.0.0.0

------------------------------------------------------------------------------ // 3. inner packet; the MAC addresses are now those of the local Pod's eth0 and its veth pair peer

Ethernet {Contents=[..14..] Payload=[..86..] SrcMAC=36:07:df:de:f9:af DstMAC=b6:f7:9a:c4:59:73 EthernetType=IPv4 Length=0}

IPv4 {Contents=[..20..] Payload=[..64..] Version=4 IHL=5 TOS=0 Length=84 Id=64237 Flags=DF FragOffset=0 TTL=63 Protocol=ICMPv4 Checksum=10797 SrcIP=10.0.2.119 DstIP=10.0.0.24 Options=[] Padding=[]}

ICMPv4 {Contents=[..8..] Payload=[..56..] TypeCode=EchoRequest Checksum=5865 Id=278 Seq=0}

Failed to decode layer: No decoder for layer type Payload

CPU 01: MARK 0x0 FROM 2546 to-endpoint: 98 bytes (98 captured), state new, interface lxc7c697c641cac, , identity 32359->46188, orig-ip 10.0.2.119, to endpoint 2546

CPU 01: MARK 0x0 FROM 2546 DEBUG: Conntrack lookup 1/2: src=10.0.0.24:0 dst=10.0.2.119:278 // 4. ICMP EchoReply is returned

CPU 01: MARK 0x0 FROM 2546 DEBUG: Conntrack lookup 2/2: nexthdr=1 flags=1

CPU 01: MARK 0x0 FROM 2546 DEBUG: CT entry found lifetime=342891, revnat=0

CPU 01: MARK 0x0 FROM 2546 DEBUG: CT verdict: Reply, revnat=0

CPU 01: MARK 0x0 FROM 2546 DEBUG: Successfully mapped addr=10.0.2.119 to identity=32359

CPU 01: MARK 0x0 FROM 2546 DEBUG: Encapsulating to node 2886860803 (0xac120003) from seclabel 46188 // 5. VxLAN encapsulation towards node 172.18.0.3 (0xac120003), sent to the peer; packet details below

------------------------------------------------------------------------------

Ethernet {Contents=[..14..] Payload=[..86..] SrcMAC=b6:f7:9a:c4:59:73 DstMAC=36:07:df:de:f9:af EthernetType=IPv4 Length=0}

IPv4 {Contents=[..20..] Payload=[..64..] Version=4 IHL=5 TOS=0 Length=84 Id=53736 Flags= FragOffset=0 TTL=64 Protocol=ICMPv4 Checksum=37426 SrcIP=10.0.0.24 DstIP=10.0.2.119 Options=[] Padding=[]}

ICMPv4 {Contents=[..8..] Payload=[..56..] TypeCode=EchoReply Checksum=7913 Id=278 Seq=0}

Failed to decode layer: No decoder for layer type Payload

CPU 01: MARK 0x0 FROM 2546 to-overlay: 98 bytes (98 captured), state reply, interface cilium_vxlan, , identity 46188->32359, orig-ip 0.0.0.0 // to-overlay completes the encapsulation; the packet is then sent to the peer node

CPU 01: MARK 0x0 FROM 630 DEBUG: Conntrack lookup 1/2: src=172.18.0.2:49017 dst=172.18.0.3:8472

CPU 01: MARK 0x0 FROM 630 DEBUG: Conntrack lookup 2/2: nexthdr=17 flags=1

CPU 01: MARK 0x0 FROM 630 DEBUG: CT entry found lifetime=342890, revnat=0

CPU 01: MARK 0x0 FROM 630 DEBUG: CT verdict: Established, revnat=0
