Linux Virtual Network Devices: veth pair

veth pair

1. What is a veth pair

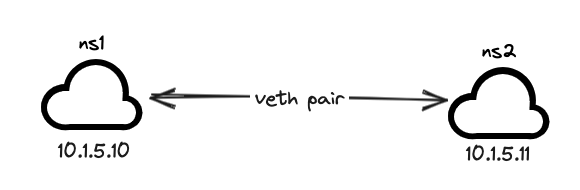

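veth pair is short for Virtual Ethernet Pair. It is a pair of connected virtual interfaces: every packet that enters one end comes out of the other end, and vice versa.

veth pairs were introduced so that different network namespaces can communicate directly. A single veth pair is enough to connect two network namespaces to each other.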

2. Building a veth pair network with Containerlab

Use Containerlab to simulate a veth pair.

a | Network topology file

# veth.clab.yml
name: veth
topology:
  nodes:
    server1:
      kind: linux
      image: harbor.dayuan1997.com/devops/nettool:0.9
      exec:
        - ip addr add 10.1.5.10/24 dev net0
    server2:
      kind: linux
      image: harbor.dayuan1997.com/devops/nettool:0.9
      exec:
        - ip addr add 10.1.5.11/24 dev net0
  links:
    - endpoints: ["server1:net0", "server2:net0"]

This topology starts two nodes and connects them to each other through a single point-to-point link.

b | Deploy the lab

# clab deploy -t veth.clab.yml

INFO[0000] Containerlab v0.54.2 started

INFO[0000] Parsing & checking topology file: clab.yaml

INFO[0000] Creating docker network: Name="clab", IPv4Subnet="172.20.20.0/24", IPv6Subnet="2001:172:20:20::/64", MTU=1500

INFO[0000] Creating lab directory: /root/wcni-kind/network/4-basic-netwotk/4-veth-pair/1-clab-veth-pair/clab-veth

INFO[0000] Creating container: "server1"

INFO[0000] Creating container: "server2"

INFO[0000] Created link: server1:net0 <--> server2:net0

INFO[0001] Executed command "ip addr add 10.1.5.11/24 dev net0" on the node "server2". stdout:

INFO[0001] Executed command "ip addr add 10.1.5.10/24 dev net0" on the node "server1". stdout:

INFO[0001] Adding containerlab host entries to /etc/hosts file

INFO[0001] Adding ssh config for containerlab nodes

INFO[0001] 🎉 New containerlab version 0.55.0 is available! Release notes: https://containerlab.dev/rn/0.55/

Run 'containerlab version upgrade' to upgrade or go check other installation options at https://containerlab.dev/install/

+---+-------------------+--------------+------------------------------------------+-------+---------+----------------+----------------------+

| # | Name | Container ID | Image | Kind | State | IPv4 Address | IPv6 Address |

+---+-------------------+--------------+------------------------------------------+-------+---------+----------------+----------------------+

| 1 | clab-veth-server1 | 4068913afebb | harbor.dayuan1997.com/devops/nettool:0.9 | linux | running | 172.20.20.2/24 | 2001:172:20:20::2/64 |

| 2 | clab-veth-server2 | bd0193b46ed6 | harbor.dayuan1997.com/devops/nettool:0.9 | linux | running | 172.20.20.3/24 | 2001:172:20:20::3/64 |

+---+-------------------+--------------+------------------------------------------+-------+---------+----------------+----------------------+

c | Inspect the network interfaces of one of the containers

# lo clab-veth-server1 ip a l

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

6: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:14:14:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.20.20.2/24 brd 172.20.20.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 2001:172:20:20::2/64 scope global nodad

valid_lft forever preferred_lft forever

inet6 fe80::42:acff:fe14:1402/64 scope link

valid_lft forever preferred_lft forever

## The local interface index is 10

10: net0@if11: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9500 qdisc noqueue state UP group default

link/ether aa:c1:ab:b8:1d:41 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet 10.1.5.10/24 scope global net0

valid_lft forever preferred_lft forever

inet6 fe80::a8c1:abff:feb8:1d41/64 scope link

valid_lft forever preferred_lft forever

d | Inspect the container's net0 interface

Confirm the peer end of the veth pair.

# lo clab-veth-server1 ethtool -S net0

NIC statistics:

peer_ifindex: 11

rx_queue_0_xdp_packets: 0

rx_queue_0_xdp_bytes: 0

rx_queue_0_xdp_drops: 0

The local interface index is 10 and the peer index is 11.
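The peer index can also be read straight from the interface name: in `ip` output, the `@ifN` suffix of a veth name (for example `net0@if11`) carries the peer's index. The following is a minimal sketch under that assumption. The function names `peer_index_from_name` and `peer_index_from_ethtool` are hypothetical helpers, not part of iproute2 or ethtool.

```shell
# Hypothetical helpers: extract the peer ifindex of a veth interface.

# From an `ip` style name such as "net0@if11": strip everything up to "@if".
peer_index_from_name() {
    case "$1" in
        *@if*) printf '%s\n' "${1##*@if}" ;;
        *)     return 1 ;;   # no "@ifN" suffix, so there is no peer index to report
    esac
}

# From `ethtool -S <dev>` output on stdin: print the peer_ifindex value.
peer_index_from_ethtool() {
    awk -F': *' '/peer_ifindex/ { print $2; found = 1 } END { exit !found }'
}
```

Inside the container, `ethtool -S net0 | peer_index_from_ethtool` should print the same number that appears after `@if` in `ip a l`.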

e | Inspect the network interfaces of the peer container

# lo clab-veth-server2 ip a l

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

8: eth0@if9: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:14:14:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.20.20.3/24 brd 172.20.20.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 2001:172:20:20::3/64 scope global nodad

valid_lft forever preferred_lft forever

inet6 fe80::42:acff:fe14:1403/64 scope link

valid_lft forever preferred_lft forever

## The local interface index is 11

11: net0@if10: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9500 qdisc noqueue state UP group default

link/ether aa:c1:ab:d1:ab:90 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet 10.1.5.11/24 scope global net0

valid_lft forever preferred_lft forever

inet6 fe80::a8c1:abff:fed1:ab90/64 scope link

valid_lft forever preferred_lft forever

# lo clab-veth-server2 ethtool -S net0

NIC statistics:

peer_ifindex: 10

rx_queue_0_xdp_packets: 0

rx_queue_0_xdp_bytes: 0

rx_queue_0_xdp_drops: 0

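The local interface index is 11 and the peer index is 10. The net0 interfaces of server1 and server2 are therefore the two ends of one veth pair, and the two nodes can exchange data over net0.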
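The reasoning above is a symmetric check: each side's peer index must equal the other side's own index. A small sketch of that check follows; `is_veth_pair` is a hypothetical helper name. Interface indices are scoped per network namespace, so the comparison is only meaningful when you already know which namespace holds the peer.

```shell
# Hypothetical helper: two interfaces form one veth pair when each one's
# peer index equals the other's own index.
# usage: is_veth_pair <index_a> <peer_of_a> <index_b> <peer_of_b>
is_veth_pair() {
    [ "$1" -eq "$4" ] && [ "$2" -eq "$3" ]
}

# Values from the output above: server1 net0 is 10 -> 11, server2 net0 is 11 -> 10.
if is_veth_pair 10 11 11 10; then
    echo "net0 on server1 and net0 on server2 are the two ends of one veth pair"
fi
```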

f | Test connectivity

# lo clab-veth-server1 ping 10.1.5.10 -I net0

PING 10.1.5.10 (10.1.5.10): 56 data bytes

64 bytes from 10.1.5.10: seq=0 ttl=64 time=0.039 ms

64 bytes from 10.1.5.10: seq=1 ttl=64 time=0.050 ms

64 bytes from 10.1.5.10: seq=2 ttl=64 time=0.047 ms

^C

--- 10.1.5.10 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.039/0.045/0.050 ms
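Note that 10.1.5.10 is server1's own net0 address, so these replies do not show that traffic crossed the veth link. Pinging server2's address 10.1.5.11 from server1 exercises the link itself. For scripted checks, the summary line can be parsed instead of read by eye. The sketch below assumes the "N% packet loss" wording shown in the output; `ping_loss_percent` and `ping_ok` are hypothetical helper names.

```shell
# Hypothetical helper: read ping output on stdin and print the packet-loss percentage.
ping_loss_percent() {
    sed -n 's/.* \([0-9.]*\)% packet loss.*/\1/p'
}

# Succeeds only when the summary read from stdin reports 0% packet loss.
ping_ok() {
    [ "$(ping_loss_percent)" = "0" ]
}
```

A possible use inside server1 is `ping -c 3 -I net0 10.1.5.11 | ping_ok && echo "veth link is up"`, where `-c 3` stops ping after three packets.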

g | Destroy the lab

# clab destroy -t veth.clab.yml

3. Building a veth pair network manually

a | Network topology

b | Configuration commands

## Create the network namespaces

ip netns add ns1

ip netns add ns2

## Create the veth pair

ip link add veth-01 type veth peer name veth-02

## Configure ns1

ip link set veth-01 netns ns1

ip netns exec ns1 ip link set veth-01 up

ip netns exec ns1 ip address add 10.1.5.10/24 dev veth-01

## Configure ns2

ip link set veth-02 netns ns2

ip netns exec ns2 ip link set veth-02 up

ip netns exec ns2 ip address add 10.1.5.11/24 dev veth-02

Run the commands above on the test server.
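Because these commands change host networking and need root, it can help to preview them first. The sketch below wraps the same steps in a dry-run switch. `DRY_RUN`, `run`, `attach_end` and `setup_veth_pair` are hypothetical names chosen for illustration; only the `ip` commands themselves come from the steps above.

```shell
# Sketch: the same setup with a dry-run switch.
# DRY_RUN=1 (the default here) only prints the commands; DRY_RUN=0 executes them (needs root).
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# attach_end <namespace> <device> <address/prefix>
# Move one end of the pair into a namespace, bring it up, and assign its address.
attach_end() {
    run ip link set "$2" netns "$1"
    run ip netns exec "$1" ip link set "$2" up
    run ip netns exec "$1" ip address add "$3" dev "$2"
}

setup_veth_pair() {
    run ip netns add ns1
    run ip netns add ns2
    run ip link add veth-01 type veth peer name veth-02
    attach_end ns1 veth-01 10.1.5.10/24
    attach_end ns2 veth-02 10.1.5.11/24
}

setup_veth_pair
```

With the default setting the script prints nine `ip` commands for review. Running it with `DRY_RUN=0` as root applies them.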

c | Inspect the ns1 namespace

- View IP information

# ip netns exec ns1 bash

ns1 # ip a l

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

13: veth-01@if12: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether ba:a9:01:d3:1c:af brd ff:ff:ff:ff:ff:ff link-netns ns2

inet 10.1.5.10/24 scope global veth-01

valid_lft forever preferred_lft forever

inet6 fe80::b8a9:1ff:fed3:1caf/64 scope link

valid_lft forever preferred_lft forever

- View interface information

ns1 # ethtool -S veth-01

NIC statistics:

peer_ifindex: 12

rx_queue_0_xdp_packets: 0

rx_queue_0_xdp_bytes: 0

rx_queue_0_xdp_drops: 0

root@evescn:~/wcni-kind/network/4-basic-netwotk/4-veth-pair/1-clab-veth-pair# exit

exit

The local interface index is 13 and the peer index is 12.

d | Inspect the ns2 namespace

- View IP information

# ip netns exec ns2 bash

ns2 # ip a l

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

12: veth-02@if13: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 4a:8b:65:32:41:ca brd ff:ff:ff:ff:ff:ff link-netns ns1

inet 10.1.5.11/24 scope global veth-02

valid_lft forever preferred_lft forever

inet6 fe80::488b:65ff:fe32:41ca/64 scope link

valid_lft forever preferred_lft forever

- View interface information

ns2 # ethtool -S veth-02

NIC statistics:

peer_ifindex: 13

rx_queue_0_xdp_packets: 0

rx_queue_0_xdp_bytes: 0

rx_queue_0_xdp_drops: 0

The local interface index is 12 and the peer index is 13.

e | Test connectivity
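The same two numbers are also exposed through sysfs: `/sys/class/net/<dev>/ifindex` holds the device's own index and, for a veth device, `/sys/class/net/<dev>/iflink` holds the peer's index. A minimal sketch follows. `veth_indexes` is a hypothetical helper name, and `SYSFS_NET` is an assumed override that exists only to make the function easy to try against a test directory.

```shell
# Hypothetical helper: print "<ifindex> <iflink>" for a device, read from sysfs.
# SYSFS_NET is an assumed override of the sysfs path, used for testing only.
veth_indexes() {
    dir="${SYSFS_NET:-/sys/class/net}/$1"
    # Fail when the device (or either attribute file) does not exist.
    [ -r "$dir/ifindex" ] && [ -r "$dir/iflink" ] || return 1
    printf '%s %s\n' "$(cat "$dir/ifindex")" "$(cat "$dir/iflink")"
}
```

Run in the shell opened with `ip netns exec ns2 bash`, `veth_indexes veth-02` should report the same pair of indices as the `ip` and `ethtool` output above.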

ns2 # ping 10.1.5.10

PING 10.1.5.10 (10.1.5.10) 56(84) bytes of data.

64 bytes from 10.1.5.10: icmp_seq=1 ttl=64 time=0.033 ms

64 bytes from 10.1.5.10: icmp_seq=2 ttl=64 time=0.032 ms

^C

--- 10.1.5.10 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1011ms

rtt min/avg/max/mdev = 0.032/0.032/0.033/0.000 ms

f | Clean up the namespaces

# ip netns del ns1 && ip netns del ns2

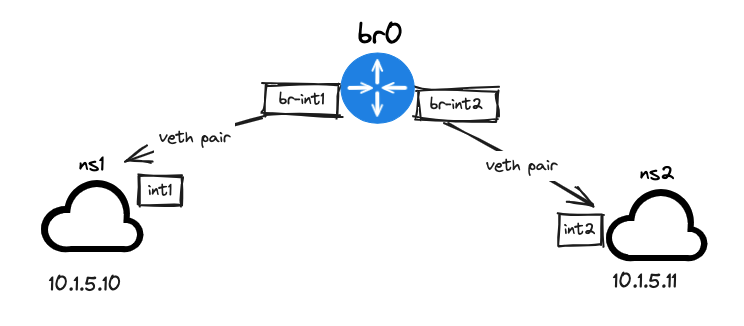

4. Building a bridge network manually

a | Network topology

b | Configuration commands

## Create the network namespaces

ip netns add ns1

ip netns add ns2

## Create br0 as a bridge device and bring it up

ip l a br0 type bridge

ip l s br0 up

## Create two veth pairs

ip l a int1 type veth peer name br-int1

ip l a int2 type veth peer name br-int2

## Configure ns1: the int1 end of the first pair goes into ns1, the other end attaches to bridge br0

ip l s int1 netns ns1

ip netns exec ns1 ip l s int1 up

ip netns exec ns1 ip a a 10.1.5.10/24 dev int1

## Configure ns2: the int2 end of the second pair goes into ns2, the other end attaches to bridge br0

ip l s int2 netns ns2

ip netns exec ns2 ip l s int2 up

ip netns exec ns2 ip a a 10.1.5.11/24 dev int2

## Attach br-int1, the other end of int1, to bridge br0

ip l s br-int1 master br0

ip l s br-int1 up

## Attach br-int2, the other end of int2, to bridge br0

ip l s br-int2 master br0

ip l s br-int2 up

Run the commands above on the test server.

c | Inspect the ns1 namespace

- View IP information
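The steps for each namespace are identical apart from the names and the address, so they can be generated. The sketch below only prints commands and does not execute them. `bridge_port_commands` is a hypothetical name; the naming scheme (nsN, intN, br-intN) and the address 10.1.5.(9+N)/24 are taken from the example above and extended as an assumption for further ports.

```shell
# Sketch: print the commands that attach namespace number N to bridge br0.
# Nothing is executed here; the output is a script you can review first.
bridge_port_commands() {
    n="$1"
    cat <<EOF
ip netns add ns$n
ip link add int$n type veth peer name br-int$n
ip link set int$n netns ns$n
ip netns exec ns$n ip link set int$n up
ip netns exec ns$n ip address add 10.1.5.$((9 + n))/24 dev int$n
ip link set br-int$n master br0
ip link set br-int$n up
EOF
}

# The bridge itself, then one port per namespace.
echo "ip link add br0 type bridge"
echo "ip link set br0 up"
for i in 1 2; do
    bridge_port_commands "$i"
done
```

Adding a third namespace would then be a matter of extending the loop to `1 2 3`. After review, the printed script can be piped to `sh` as root.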

# ip netns exec ns1 bash

ns1 # ip a l

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

16: int1@if15: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 02:e1:98:20:c7:3e brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.1.5.10/24 scope global int1

valid_lft forever preferred_lft forever

inet6 fe80::e1:98ff:fe20:c73e/64 scope link

valid_lft forever preferred_lft forever

- View interface information

ns1 # ethtool -S int1

NIC statistics:

peer_ifindex: 15

rx_queue_0_xdp_packets: 0

rx_queue_0_xdp_bytes: 0

rx_queue_0_xdp_drops:

ns1 # exit

exit

The local interface index is 16 and the peer index is 15.

d | Inspect the ns2 namespace

- View IP information

# ip netns exec ns2 bash

ns2 # ip a l

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

18: int2@if17: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 06:b2:aa:92:19:b3 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.1.5.11/24 scope global int2

valid_lft forever preferred_lft forever

inet6 fe80::4b2:aaff:fe92:19b3/64 scope link

valid_lft forever preferred_lft forever

- View interface information

ns2 # ethtool -S int2

NIC statistics:

peer_ifindex: 17

rx_queue_0_xdp_packets: 0

rx_queue_0_xdp_bytes: 0

rx_queue_0_xdp_drops:

The local interface index is 18 and the peer index is 17.

e | Inspect the host network

# ip a l

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:81:cc:3a brd ff:ff:ff:ff:ff:ff

inet 172.16.94.141/24 brd 172.16.94.255 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe81:cc3a/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:0f:a2:2e:f6 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

4: br-c1a42599c7fe: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:dd:46:08:c7 brd ff:ff:ff:ff:ff:ff

inet 172.18.0.1/16 brd 172.18.255.255 scope global br-c1a42599c7fe

valid_lft forever preferred_lft forever

inet6 fc00:f853:ccd:e793::1/64 scope global tentative

valid_lft forever preferred_lft forever

inet6 fe80::1/64 scope link tentative

valid_lft forever preferred_lft forever

14: br0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether d6:cd:25:93:c3:a2 brd ff:ff:ff:ff:ff:ff

inet6 fe80::8c5a:e2ff:feb2:609b/64 scope link

valid_lft forever preferred_lft forever

15: br-int1@if16: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br0 state UP group default qlen 1000

link/ether d6:cd:25:93:c3:a2 brd ff:ff:ff:ff:ff:ff link-netns ns1

inet6 fe80::d4cd:25ff:fe93:c3a2/64 scope link

valid_lft forever preferred_lft forever

17: br-int2@if18: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br0 state UP group default qlen 1000

link/ether e2:af:58:2b:b5:74 brd ff:ff:ff:ff:ff:ff link-netns ns2

inet6 fe80::e0af:58ff:fe2b:b574/64 scope link

valid_lft forever preferred_lft forever

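- Comparing the ns1 and ns2 interface information with the host output confirms the following:

- The peer of int1 in ns1, index 15, is br-int1.

- The peer of int2 in ns2, index 17, is br-int2.

- Both br-int1 and br-int2 show master br0, which means both are attached to the br0 bridge.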
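This lookup, from a peer index to the host interface and the bridge it belongs to, can be scripted against `ip` output. The sketch below assumes the line layout shown in the host output above (index, name, flags, then a `master <bridge>` keyword pair); `port_of_index` is a hypothetical helper name.

```shell
# Hypothetical helper: given an interface index and `ip link` output on stdin,
# print the interface name and the bridge it is attached to ("-" if none).
port_of_index() {
    awk -v idx="$1" '
        $1 == (idx ":") {
            name = $2
            sub(/@.*/, "", name)   # drop the "@ifN:" peer suffix of veth names
            sub(/:$/, "", name)    # drop the trailing colon of plain names
            master = "-"
            for (i = 3; i < NF; i++)
                if ($i == "master") master = $(i + 1)
            print name, master
        }'
}
```

On the host, `ip -o link show | port_of_index 15` should name the interface with index 15 and its bridge, which is br-int1 and br0 in the output above.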

f | Test connectivity

ns2 # ping 10.1.5.10

PING 10.1.5.10 (10.1.5.10) 56(84) bytes of data.

64 bytes from 10.1.5.10: icmp_seq=1 ttl=64 time=0.045 ms

64 bytes from 10.1.5.10: icmp_seq=2 ttl=64 time=0.060 ms

64 bytes from 10.1.5.10: icmp_seq=3 ttl=64 time=0.057 ms

64 bytes from 10.1.5.10: icmp_seq=4 ttl=64 time=0.051 ms

^C

--- 10.1.5.10 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3055ms

rtt min/avg/max/mdev = 0.045/0.053/0.060/0.005 ms

g | Clean up the namespaces and the bridge

# ip netns del ns1 && ip netns del ns2 && ip link del br0
