13 Snapshot and Clone Features (Reposted)

Snapshot and Clone Features

Overview of Ceph Snapshots and Clones

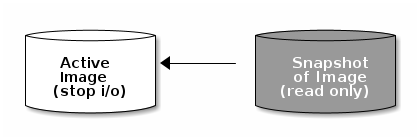

Ceph RBD natively provides snapshot and clone capabilities for block images. So what is a snapshot?

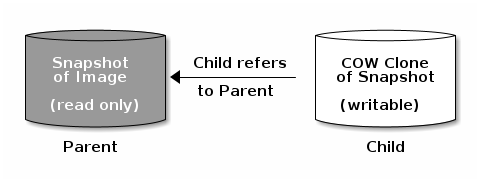

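A snapshot is the state of an image at a point in time, including the data that had been written to disk at that moment. Once taken, a snapshot is a read-only image and its data cannot be written to. If data is lost, the image can be rolled back (rollback) to the snapshot. RBD also offers a very handy copy-on-write feature: fast clones (clone) created from a snapshot. Before cloning, the snapshot has to be protected (protect) so that it cannot be deleted while clones depend on it. The cloned child image and the snapshot depend on each other (the snapshot is the parent, the clone is the child), which enables advanced features such as deploying virtual machines in seconds.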

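How is cloning done in practice? Suppose a Linux system has been installed on an RBD image. After installation and configuration, take a snapshot (snapshot) of the image, protect (protect) the snapshot so that it cannot be damaged, and then clone (clone) from that original snapshot.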
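The sequence just described maps onto three rbd subcommands. The sketch below only prints them (a dry run), so it can be read and run without a Ceph cluster. The pool, image, snapshot, and child names are hypothetical placeholders, not values from this environment.

```shell
#!/bin/sh
# Dry run: print the rbd commands for snapshot -> protect -> clone.
# All names passed in below are hypothetical placeholders.
print_clone_cmds() {
  # $1 = pool, $2 = parent image, $3 = snapshot name, $4 = child image
  echo "rbd snap create $1/$2@$3"    # take a read-only point-in-time snapshot
  echo "rbd snap protect $1/$2@$3"   # protect it so it cannot be removed while clones exist
  echo "rbd clone $1/$2@$3 $1/$4"    # create a copy-on-write child image
}

print_clone_cmds mypool linux-base golden vm-01
```

Dropping the echo wrappers would run the commands against a real cluster. A rollback would use rbd snap rollback with the same pool/image@snapshot spec.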

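Installing the Controller and CRDs

Containers do not consume RBD images directly; they reach the underlying block storage through a PVC. So how does a container interact with the storage backend to take a snapshot? This requires a snapshot controller. The snapshot controller is a third-party component that integrates with Kubernetes through CRDs and implements the control logic that maps a PVC snapshot onto the underlying RBD image.

Installing the CRDs

Git repository: use the release-4.0 branch. With the master branch, the snapshots in the later steps could not be created.

- Download the source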

[root@m1 ~]# git clone https://ghproxy.com/https://github.com/kubernetes-csi/external-snapshotter.git

Cloning into 'external-snapshotter'...

remote: Enumerating objects: 51642, done.

remote: Counting objects: 100% (7/7), done.

remote: Compressing objects: 100% (6/6), done.

remote: Total 51642 (delta 1), reused 5 (delta 1), pack-reused 51635

Receiving objects: 100% (51642/51642), 65.68 MiB | 2.83 MiB/s, done.

Resolving deltas: 100% (26973/26973), done.

[root@m1 ~]# ls

anaconda-ks.cfg external-snapshotter rook

[root@m1 ~]# cd external-snapshotter/

[root@m1 external-snapshotter]# git branch -a

* master

......

[root@m1 external-snapshotter]# git checkout -f remotes/origin/release-4.0 -b release-4.0

[root@m1 external-snapshotter]# ls

CHANGELOG cloudbuild.yaml code-of-conduct.md deploy go.mod LICENSE OWNERS pkg release-tools vendor

client cmd CONTRIBUTING.md examples go.sum Makefile OWNERS_ALIASES README.md SECURITY_CONTACTS

[root@m1 external-snapshotter]# git branch -a

master

* release-4.0

- Install the CRDs

[root@m1 external-snapshotter]# kubectl apply -f client/config/crd

customresourcedefinition.apiextensions.k8s.io/volumesnapshotclasses.snapshot.storage.k8s.io created

customresourcedefinition.apiextensions.k8s.io/volumesnapshotcontents.snapshot.storage.k8s.io created

customresourcedefinition.apiextensions.k8s.io/volumesnapshots.snapshot.storage.k8s.io created

View the CRDs

[root@m1 external-snapshotter]# kubectl get customresourcedefinitions.apiextensions.k8s.io

NAME CREATED AT

.......

volumesnapshotclasses.snapshot.storage.k8s.io 2022-12-02T04:39:20Z

volumesnapshotcontents.snapshot.storage.k8s.io 2022-12-02T04:39:20Z

volumesnapshots.snapshot.storage.k8s.io 2022-12-02T04:39:21Z

Install the controller

[root@m1 external-snapshotter]# kubectl apply -f deploy/kubernetes/snapshot-controller

serviceaccount/snapshot-controller created

clusterrole.rbac.authorization.k8s.io/snapshot-controller-runner created

clusterrolebinding.rbac.authorization.k8s.io/snapshot-controller-role created

role.rbac.authorization.k8s.io/snapshot-controller-leaderelection created

rolebinding.rbac.authorization.k8s.io/snapshot-controller-leaderelection created

- Checking the pod shows that the image pull failed

[root@m1 ceph]# kubectl describe pods snapshot-controller-0

Warning Failed 2m17s (x4 over 5m2s) kubelet Error: ErrImagePull

Normal BackOff 108s (x6 over 5m1s) kubelet Back-off pulling image "registry.k8s.io/sig-storage/snapshot-controller:v4.0.0"

- Modify the image pull script

[root@m1 external-snapshotter]# cat /tmp/1.sh

#!/bin/bash
# CSI sidecar images to mirror
image_list=(
  csi-node-driver-registrar:v2.0.1
  csi-attacher:v3.0.0
  csi-snapshotter:v3.0.0
  csi-resizer:v1.0.0
  csi-provisioner:v2.0.0
)
aliyuncs="registry.aliyuncs.com/it00021hot"
google_gcr="k8s.gcr.io/sig-storage"
for image in "${image_list[@]}"
do
  # Pull from the mirror, retag to the name the manifests expect, then drop the mirror tag
  docker image pull "${aliyuncs}/${image}"
  docker image tag "${aliyuncs}/${image}" "${google_gcr}/${image}"
  docker image rm "${aliyuncs}/${image}"
  echo "${aliyuncs}/${image} ${google_gcr}/${image} downloaded."
done
# Newly added: pull the snapshot-controller image and retag it to the name the pod requests
docker pull dyrnq/snapshot-controller:v4.0.0
docker image tag dyrnq/snapshot-controller:v4.0.0 registry.k8s.io/sig-storage/snapshot-controller:v4.0.0

- Pull the new image

[root@m1 ceph]# ansible all -m copy -a "src=/tmp/1.sh dest=/tmp/2.sh"

[root@m1 ceph]# ansible all -m shell -a "bash /tmp/2.sh"

- Check the pod again

[root@m1 external-snapshotter]# kubectl get pods

NAME READY STATUS RESTARTS AGE

snapshot-controller-0 1/1 Running 0 63m

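Installing the RBD Snapshot Class

With the controller and CRDs installed, the first step toward using snapshots is to define a VolumeSnapshotClass. Similar to a StorageClass, a VolumeSnapshotClass is a class dedicated to snapshots and is a feature defined by Kubernetes; see the Kubernetes documentation.

- View the manifest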

[root@m1 rbd]# pwd

/root/rook/cluster/examples/kubernetes/ceph/csi/rbd

[root@m1 rbd]# cat snapshotclass.yaml

---
apiVersion: snapshot.storage.k8s.io/v1beta1
kind: VolumeSnapshotClass
metadata:
  name: csi-rbdplugin-snapclass
driver: rook-ceph.rbd.csi.ceph.com # driver:namespace:operator
parameters:
  # Specify a string that identifies your cluster. Ceph CSI supports any
  # unique string. When Ceph CSI is deployed by Rook use the Rook namespace,
  # for example "rook-ceph".
  clusterID: rook-ceph # namespace:cluster
  csi.storage.k8s.io/snapshotter-secret-name: rook-csi-rbd-provisioner
  csi.storage.k8s.io/snapshotter-secret-namespace: rook-ceph # namespace:cluster
deletionPolicy: Delete

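- View the VolumeSnapshotClass (the manifest must first be applied with kubectl apply -f snapshotclass.yaml)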

[root@m1 rbd]# kubectl get VolumeSnapshotClass

NAME DRIVER DELETIONPOLICY AGE

csi-rbdplugin-snapclass rook-ceph.rbd.csi.ceph.com Delete 3m54s

A First VolumeSnapshot

How are snapshots used? A VolumeSnapshot resource requests a snapshot from the VolumeSnapshotClass. It is defined as follows:

[root@m1 rbd]# cat snapshot.yaml

---
apiVersion: snapshot.storage.k8s.io/v1beta1
kind: VolumeSnapshot
metadata:
  name: rbd-pvc-snapshot
spec:
  volumeSnapshotClassName: csi-rbdplugin-snapclass
  source:
    persistentVolumeClaimName: mysql-pv-claim # changed to the name of an existing PVC

[root@m1 rbd]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

mysql-pv-claim Bound pvc-cfe4a32c-c746-46af-84e8-cd981cdf8706 30Gi RWO rook-ceph-block 4d5h

www-web-0 Bound pvc-9d32f5ff-174a-461f-9f3c-e0f0f5e60cc8 10Gi RWO rook-ceph-block 6d8h

www-web-1 Bound pvc-af0e2916-ec93-4f55-871f-0d008c0394e0 10Gi RWO rook-ceph-block 6d8h

www-web-2 Bound pvc-174fb859-91ad-49e2-8e44-d7ee64645e7e 10Gi RWO rook-ceph-block 6d8h

Create the VolumeSnapshot

[root@m1 rbd]# kubectl apply -f snapshot.yaml

volumesnapshot.snapshot.storage.k8s.io/rbd-pvc-snapshot created

# View the created snapshot

[root@m1 rbd]# kubectl get volumesnapshot

NAME READYTOUSE SOURCEPVC SOURCESNAPSHOTCONTENT RESTORESIZE SNAPSHOTCLASS SNAPSHOTCONTENT CREATIONTIME AGE

rbd-pvc-snapshot true mysql-pv-claim 40Gi csi-rbdplugin-snapclass snapcontent-8823207f-e347-425d-9084-7453ffba378a 61m 61m

Once it has been created, the READYTOUSE column of the VolumeSnapshot shows true, which means the snapshot is complete.
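In a script, the READYTOUSE check can be turned into a small polling loop. The helper below is a sketch: the name wait_ready and the POLL_INTERVAL variable are hypothetical, and it simply reruns whatever command it is given until that command prints true. The kubectl invocation is left as a comment because it needs a live cluster.

```shell
#!/bin/sh
# Poll a command until it prints "true" or the attempts run out.
# Usage: wait_ready <attempts> <command...>
wait_ready() (
  attempts=$1
  shift
  i=0
  while [ "$i" -lt "$attempts" ]; do
    if [ "$("$@" 2>/dev/null)" = "true" ]; then
      exit 0                        # the command reported readiness
    fi
    i=$((i + 1))
    sleep "${POLL_INTERVAL:-2}"     # seconds between attempts
  done
  exit 1                            # never became ready
)

# Against a cluster (not executed here):
#   wait_ready 30 kubectl get volumesnapshot rbd-pvc-snapshot -o jsonpath='{.status.readyToUse}'
```

The function body runs in a subshell, so its variables do not leak into the calling script.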

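How Volume Snapshots Work

The flow behind a volume snapshot:

- A VolumeSnapshot resource is requested from Kubernetes
- Creating the VolumeSnapshot automatically requests a VolumeSnapshotContent resource
- The VolumeSnapshotContent requests the underlying Ceph volume
- A snapshot is taken of the underlying Ceph volume
- Finally, a volume is cloned from the snapshot

View the VolumeSnapshot resource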

[root@m1 rbd]# kubectl get volumesnapshot

NAME READYTOUSE SOURCEPVC SOURCESNAPSHOTCONTENT RESTORESIZE SNAPSHOTCLASS SNAPSHOTCONTENT CREATIONTIME AGE

rbd-pvc-snapshot true mysql-pv-claim 40Gi csi-rbdplugin-snapclass snapcontent-8823207f-e347-425d-9084-7453ffba378a 61m 61m

View the VolumeSnapshotContent resource

# View the VolumeSnapshotContent named snapcontent-8823207f-e347-425d-9084-7453ffba378a

[root@m1 rbd]# kubectl get volumesnapshotcontents snapcontent-8823207f-e347-425d-9084-7453ffba378a

NAME READYTOUSE RESTORESIZE DELETIONPOLICY DRIVER VOLUMESNAPSHOTCLASS VOLUMESNAPSHOT AGE

snapcontent-8823207f-e347-425d-9084-7453ffba378a true 42949672960 Delete rook-ceph.rbd.csi.ceph.com csi-rbdplugin-snapclass rbd-pvc-snapshot 69m
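The RESTORESIZE column of a VolumeSnapshotContent is in bytes, while the VolumeSnapshot listing shows the same value as 40Gi. A quick conversion, as a minimal sketch (the function name is hypothetical):

```shell
#!/bin/sh
# Convert a byte count (as shown in RESTORESIZE) to whole GiB.
bytes_to_gib() {
  echo $(( $1 / 1024 / 1024 / 1024 ))
}

bytes_to_gib 42949672960   # prints 40
```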

# View the details of snapcontent-8823207f-e347-425d-9084-7453ffba378a

[root@m1 rbd]# kubectl get volumesnapshotcontents snapcontent-8823207f-e347-425d-9084-7453ffba378a -o yaml

apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshotContent
metadata:
  ......
  name: snapcontent-8823207f-e347-425d-9084-7453ffba378a
  ......
spec:
  deletionPolicy: Delete
  driver: rook-ceph.rbd.csi.ceph.com
  source:
    volumeHandle: 0001-0009-rook-ceph-0000000000000002-197480ab-6e0f-11ed-b5c4-6efb82c232c4 # underlying RBD image info: 6e0f-11ed-b5c4-6efb82c232c4
  volumeSnapshotClassName: csi-rbdplugin-snapclass
  volumeSnapshotRef:
    apiVersion: snapshot.storage.k8s.io/v1
    kind: VolumeSnapshot
    name: rbd-pvc-snapshot
    namespace: default
    resourceVersion: "2727164"
    uid: 8823207f-e347-425d-9084-7453ffba378a
status:
  creationTime: 1669956249037999547
  readyToUse: true
  restoreSize: 42949672960
  snapshotHandle: 0001-0009-rook-ceph-0000000000000002-f551456b-71fb-11ed-bb18-5a5c11760ffc # underlying RBD snapshot info

View the information in Ceph

# List the Ceph pools

[root@m1 rbd]# ceph osd lspools

1 device_health_metrics

2 replicapool # RBD images use the replicapool pool by default

3 myfs-metadata

4 myfs-data0

5 my-store.rgw.control

6 my-store.rgw.meta

7 my-store.rgw.log

8 my-store.rgw.buckets.index

9 my-store.rgw.buckets.non-ec

10 .rgw.root

11 my-store.rgw.buckets.data

12 evescn_test

# List the images in the replicapool pool

[root@m1 rbd]# rbd -p replicapool ls

csi-snap-f551456b-71fb-11ed-bb18-5a5c11760ffc

csi-vol-197480ab-6e0f-11ed-b5c4-6efb82c232c4

csi-vol-84d64d8d-6c61-11ed-b5c4-6efb82c232c4

csi-vol-9b7146ad-6c61-11ed-b5c4-6efb82c232c4

csi-vol-a3dbf5a7-6c61-11ed-b5c4-6efb82c232c4

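# Look up the ID 197480ab-6e0f-11ed-b5c4-6efb82c232c4 from the VolumeSnapshotContent manifest: it is the underlying Ceph image of the MySQL volume that was manually expanded to 40G in an earlier chapter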

[root@m1 rbd]# rbd -p replicapool ls | grep 197480ab-6e0f-11ed-b5c4-6efb82c232c4

csi-vol-197480ab-6e0f-11ed-b5c4-6efb82c232c4
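The lookup above relies on a pattern visible in this output: the trailing UUID of a CSI handle reappears in the RBD image name behind a csi-vol- or csi-snap- prefix. A minimal sketch of that mapping follows. It assumes the handle always ends in a 36-character UUID, which is inferred from the handles shown here rather than taken from the Ceph CSI documentation, and the function name is hypothetical.

```shell
#!/bin/sh
# Extract the trailing 36-character UUID from a CSI volume or snapshot handle.
# Assumption: the handle ends with a UUID, as in the handles shown above.
handle_uuid() {
  printf '%s\n' "$1" |
    sed -E 's/^.*-([0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12})$/\1/'
}

vol_handle="0001-0009-rook-ceph-0000000000000002-197480ab-6e0f-11ed-b5c4-6efb82c232c4"
snap_handle="0001-0009-rook-ceph-0000000000000002-f551456b-71fb-11ed-bb18-5a5c11760ffc"

echo "csi-vol-$(handle_uuid "$vol_handle")"     # image backing the source PVC
echo "csi-snap-$(handle_uuid "$snap_handle")"   # image backing the snapshot
```

Both printed names appear in the rbd -p replicapool ls listing above.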

The pool also contains an image named csi-snap-f551456b-71fb-11ed-bb18-5a5c11760ffc. What is it?

[root@m1 rbd]# rbd -p replicapool info csi-snap-f551456b-71fb-11ed-bb18-5a5c11760ffc

rbd image 'csi-snap-f551456b-71fb-11ed-bb18-5a5c11760ffc':

size 40 GiB in 10240 objects

order 22 (4 MiB objects)

snapshot_count: 1

id: 23f608a286980d

block_name_prefix: rbd_data.23f608a286980d

format: 2

features: layering, deep-flatten, operations

op_features: clone-child

flags:

create_timestamp: Fri Dec 2 12:44:09 2022

access_timestamp: Fri Dec 2 12:44:09 2022

modify_timestamp: Fri Dec 2 12:44:09 2022

parent: replicapool/csi-vol-197480ab-6e0f-11ed-b5c4-6efb82c232c4@f89484f5-a51a-4743-ae18-bef17e4f3fa4 # this image was cloned from that image snapshot

overlap: 40 GiB

# Empty: the snapshot on this image had already been deleted once the csi-snap image was created

[root@m1 rbd]# rbd snap ls replicapool/csi-vol-197480ab-6e0f-11ed-b5c4-6efb82c232c4
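The parent field in the rbd info output uses the pool/image@snapshot form. The sketch below splits such a spec with plain shell parameter expansion, which makes it easy to feed the pool/image part to rbd snap ls as done above. The function name is hypothetical.

```shell
#!/bin/sh
# Split an RBD snapshot spec of the form pool/image@snapshot into its parts.
parse_snap_spec() {
  spec=$1
  pool=${spec%%/*}     # text before the first "/"
  rest=${spec#*/}      # text after the first "/"
  image=${rest%@*}     # text before the last "@"
  snap=${rest##*@}     # text after the last "@"
  printf 'pool=%s\nimage=%s\nsnap=%s\n' "$pool" "$image" "$snap"
}

parse_snap_spec "replicapool/csi-vol-197480ab-6e0f-11ed-b5c4-6efb82c232c4@f89484f5-a51a-4743-ae18-bef17e4f3fa4"
```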

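Snapshot Rollback

When the data on a container's RBD volume is lost or accidentally deleted, snapshots become very important: the data can be restored from a snapshot by creating a PVC that references the VolumeSnapshot.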

[root@m1 rbd]# cat pvc-restore.yaml

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rbd-pvc-restore
spec:
  storageClassName: rook-ceph-block
  dataSource:
    name: rbd-pvc-snapshot
    kind: VolumeSnapshot
    apiGroup: snapshot.storage.k8s.io
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 40Gi # changed to match the 40Gi of the rbd-pvc-snapshot VolumeSnapshot above

The dataSource field references the VolumeSnapshot object, which is where the restored data comes from.
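The storage request in the restore PVC was set to 40Gi to match the snapshot. To the best of my understanding, a request smaller than the snapshot's restoreSize is typically rejected by the CSI provisioner, so it can be worth checking before applying. The sketch below does that arithmetic; the function name is hypothetical and the byte value is the restoreSize shown earlier.

```shell
#!/bin/sh
# Succeed when a requested size in Gi covers a snapshot's restoreSize in bytes.
fits_restore_size() {
  requested_gi=$1
  restore_bytes=$2
  [ $(( requested_gi * 1024 * 1024 * 1024 )) -ge "$restore_bytes" ]
}

if fits_restore_size 40 42949672960; then
  echo "40Gi is large enough"
fi
if ! fits_restore_size 30 42949672960; then
  echo "30Gi is too small"
fi
```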

# Apply the manifest

[root@m1 rbd]# kubectl apply -f pvc-restore.yaml

persistentvolumeclaim/rbd-pvc-restore created

# View the resulting PVC

[root@m1 rbd]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

mysql-pv-claim Bound pvc-cfe4a32c-c746-46af-84e8-cd981cdf8706 30Gi RWO rook-ceph-block 5d1h

rbd-pvc-restore Bound pvc-078f8b13-a015-4eb2-aee1-db122445cf5c 40Gi RWO rook-ceph-block 2s

Then mount the PVC in a container to access the data from the snapshot.

[root@m1 rbd]# cat pod.yaml

---
apiVersion: v1
kind: Pod
metadata:
  name: csirbd-demo-pod
spec:
  containers:
    - name: web-server
      image: nginx
      volumeMounts:
        - name: mypvc
          mountPath: /var/lib/www/html
  volumes:
    - name: mypvc
      persistentVolumeClaim:
        claimName: rbd-pvc-restore # changed to the restored PVC
        readOnly: false

[root@m1 rbd]# kubectl apply -f pod.yaml

pod/csirbd-demo-pod created

[root@m1 rbd]# kubectl get pods

NAME READY STATUS RESTARTS AGE

csirbd-demo-pod 1/1 Running 0 2m10s

[root@m1 rbd]# kubectl exec -it csirbd-demo-pod -- bash

root@csirbd-demo-pod:/# df -h

Filesystem Size Used Avail Use% Mounted on

overlay 37G 8.6G 29G 24% /

tmpfs 64M 0 64M 0% /dev

tmpfs 1.4G 0 1.4G 0% /sys/fs/cgroup

/dev/mapper/centos-root 37G 8.6G 29G 24% /etc/hosts

shm 64M 0 64M 0% /dev/shm

/dev/rbd0 40G 164M 40G 1% /var/lib/www/html

tmpfs 1.4G 12K 1.4G 1% /run/secrets/kubernetes.io/serviceaccount

tmpfs 1.4G 0 1.4G 0% /proc/acpi

tmpfs 1.4G 0 1.4G 0% /proc/scsi

tmpfs 1.4G 0 1.4G 0% /sys/firmware

root@csirbd-demo-pod:/# ls /var/lib/www/html/

auto.cnf ib_logfile0 ib_logfile1 ibdata1 lost+found mysql performance_schema

root@csirbd-demo-pod:/# ls /var/lib/www/html/ -lh

total 109M

-rw-rw---- 1 999 999 56 Nov 27 04:51 auto.cnf

-rw-rw---- 1 999 999 48M Dec 1 06:20 ib_logfile0

-rw-rw---- 1 999 999 48M Nov 27 04:51 ib_logfile1

-rw-rw---- 1 999 999 12M Dec 1 06:20 ibdata1

drwx------ 2 999 root 16K Nov 27 04:51 lost+found

drwx------ 2 999 999 4.0K Nov 27 04:51 mysql

drwx------ 2 999 999 4.0K Nov 27 04:51 performance_schema

CephFS Snapshots

To use CephFS snapshots, first deploy a VolumeSnapshotClass. Together with the snapshot controller, the VolumeSnapshotClass turns a PVC snapshot into a snapshot on the CephFS file storage backend.

[root@m1 rbd]# cd ../cephfs/

[root@m1 cephfs]# pwd

/root/rook/cluster/examples/kubernetes/ceph/csi/cephfs

[root@m1 cephfs]# cat snapshotclass.yaml

---
apiVersion: snapshot.storage.k8s.io/v1beta1
kind: VolumeSnapshotClass
metadata:
  name: csi-cephfsplugin-snapclass
driver: rook-ceph.cephfs.csi.ceph.com # driver:namespace:operator
parameters:
  # Specify a string that identifies your cluster. Ceph CSI supports any
  # unique string. When Ceph CSI is deployed by Rook use the Rook namespace,
  # for example "rook-ceph".
  clusterID: rook-ceph # namespace:cluster
  csi.storage.k8s.io/snapshotter-secret-name: rook-csi-cephfs-provisioner
  csi.storage.k8s.io/snapshotter-secret-namespace: rook-ceph # namespace:cluster
deletionPolicy: Delete

Applying the manifest creates a VolumeSnapshotClass.

[root@m1 cephfs]# kubectl apply -f snapshotclass.yaml

volumesnapshotclass.snapshot.storage.k8s.io/csi-cephfsplugin-snapclass created

[root@m1 cephfs]# kubectl get volumesnapshotclasses.snapshot.storage.k8s.io

NAME DRIVER DELETIONPOLICY AGE

csi-cephfsplugin-snapclass rook-ceph.cephfs.csi.ceph.com Delete 9s

csi-rbdplugin-snapclass rook-ceph.rbd.csi.ceph.com Delete 142m

Once it exists, a VolumeSnapshot resource can request snapshots from the VolumeSnapshotClass.

[root@m1 cephfs]# cat snapshot.yaml

---
apiVersion: snapshot.storage.k8s.io/v1beta1
kind: VolumeSnapshot
metadata:
  namespace: kube-system # namespace added: cephfs-pvc lives in the kube-system namespace
  name: cephfs-pvc-snapshot
spec:
  volumeSnapshotClassName: csi-cephfsplugin-snapclass
  source:
    persistentVolumeClaimName: cephfs-pvc

# cephfs-pvc exists in the kube-system namespace

[root@m1 cephfs]# kubectl -n kube-system get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

cephfs-pvc Bound pvc-c06e2d87-d5a2-43d5-bc47-16eadd0d18bf 1Gi RWX rook-cephfs 7d3h

# Apply the manifest and check the VolumeSnapshot resource

[root@m1 cephfs]# kubectl apply -f snapshot.yaml

volumesnapshot.snapshot.storage.k8s.io/cephfs-pvc-snapshot created

[root@m1 cephfs]# kubectl -n kube-system get volumesnapshot

NAME READYTOUSE SOURCEPVC SOURCESNAPSHOTCONTENT RESTORESIZE SNAPSHOTCLASS SNAPSHOTCONTENT CREATIONTIME AGE

cephfs-pvc-snapshot true cephfs-pvc 1Gi csi-cephfsplugin-snapclass snapcontent-f1e07ab9-0fa4-4d2f-b37a-ced4c6334d32 37s 37s

View the VolumeSnapshot resource details

[root@m1 ~]# kubectl -n kube-system get volumesnapshot

NAME READYTOUSE SOURCEPVC SOURCESNAPSHOTCONTENT RESTORESIZE SNAPSHOTCLASS SNAPSHOTCONTENT CREATIONTIME AGE

cephfs-pvc-snapshot true cephfs-pvc 1Gi csi-cephfsplugin-snapclass snapcontent-f1e07ab9-0fa4-4d2f-b37a-ced4c6334d32 37s 37s

[root@m1 ~]# kubectl -n kube-system get volumesnapshotcontents.snapshot.storage.k8s.io snapcontent-f1e07ab9-0fa4-4d2f-b37a-ced4c6334d32

NAME READYTOUSE RESTORESIZE DELETIONPOLICY DRIVER VOLUMESNAPSHOTCLASS VOLUMESNAPSHOT AGE

snapcontent-f1e07ab9-0fa4-4d2f-b37a-ced4c6334d32 true 1073741824 Delete rook-ceph.cephfs.csi.ceph.com csi-cephfsplugin-snapclass cephfs-pvc-snapshot 95s

[root@m1 ~]# kubectl -n kube-system get volumesnapshotcontents.snapshot.storage.k8s.io

NAME READYTOUSE RESTORESIZE DELETIONPOLICY DRIVER VOLUMESNAPSHOTCLASS VOLUMESNAPSHOT AGE

snapcontent-8823207f-e347-425d-9084-7453ffba378a true 42949672960 Delete rook-ceph.rbd.csi.ceph.com csi-rbdplugin-snapclass rbd-pvc-snapshot 144m

snapcontent-f1e07ab9-0fa4-4d2f-b37a-ced4c6334d32 true 1073741824 Delete rook-ceph.cephfs.csi.ceph.com csi-cephfsplugin-snapclass cephfs-pvc-snapshot 103s

[root@m1 ~]# kubectl -n kube-system get volumesnapshotcontents.snapshot.storage.k8s.io snapcontent-f1e07ab9-0fa4-4d2f-b37a-ced4c6334d32 -o yaml

apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshotContent
metadata:
  ......
  name: snapcontent-f1e07ab9-0fa4-4d2f-b37a-ced4c6334d32
  ......
spec:
  deletionPolicy: Delete
  driver: rook-ceph.cephfs.csi.ceph.com
  source:
    volumeHandle: 0001-0009-rook-ceph-0000000000000001-393d244e-6c71-11ed-b915-ceaf19f1c01f
  volumeSnapshotClassName: csi-cephfsplugin-snapclass
  volumeSnapshotRef:
    apiVersion: snapshot.storage.k8s.io/v1
    kind: VolumeSnapshot
    name: cephfs-pvc-snapshot
    namespace: kube-system
    resourceVersion: "2755774"
    uid: f1e07ab9-0fa4-4d2f-b37a-ced4c6334d32
status:
  creationTime: 1669964833809443000
  readyToUse: true
  restoreSize: 1073741824
  snapshotHandle: 0001-0009-rook-ceph-0000000000000001-f28ff741-720f-11ed-84f8-2284d7aedd45

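Fast Volume Cloning

Kubernetes also supports fast volume cloning, in which a new PVC is cloned from an existing PVC and the backend handles the work automatically through snapshots. According to the original article, the feature is available by default once the snapshot controller is installed and can be used directly.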

[root@m1 cephfs]# cd ../rbd/

[root@m1 rbd]# pwd

/root/rook/cluster/examples/kubernetes/ceph/csi/rbd

[root@m1 rbd]# cat pvc-clone.yaml

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rbd-pvc-clone
spec:
  storageClassName: rook-ceph-block
  dataSource:
    # name: rbd-pvc
    # Changed to clone mysql-pv-claim; its storage was manually resized in an earlier chapter, so the disk is now 40Gi
    name: mysql-pv-claim
    kind: PersistentVolumeClaim # dataSource defines the source of the cloned PVC
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 40Gi

[root@m1 rbd]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

mysql-pv-claim Bound pvc-cfe4a32c-c746-46af-84e8-cd981cdf8706 30Gi RWO rook-ceph-block 5d2h

rbd-pvc-restore Bound pvc-078f8b13-a015-4eb2-aee1-db122445cf5c 40Gi RWO rook-ceph-block 53m

After applying the manifest, the new PVC is shown as Bound.

[root@m1 rbd]# kubectl apply -f pvc-clone.yaml

persistentvolumeclaim/rbd-pvc-clone created

[root@m1 rbd]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

mysql-pv-claim Bound pvc-cfe4a32c-c746-46af-84e8-cd981cdf8706 30Gi RWO rook-ceph-block 5d2h

rbd-pvc-clone Bound pvc-7dcc8398-8a6c-456e-a9c7-7fc46897d3fc 40Gi RWO rook-ceph-block 71s

rbd-pvc-restore Bound pvc-078f8b13-a015-4eb2-aee1-db122445cf5c 40Gi RWO rook-ceph-block 53m

The PV details show the corresponding underlying image.

[root@m1 rbd]# kubectl describe pv pvc-7dcc8398-8a6c-456e-a9c7-7fc46897d3fc

Name: pvc-7dcc8398-8a6c-456e-a9c7-7fc46897d3fc

Labels: <none>

Annotations: pv.kubernetes.io/provisioned-by: rook-ceph.rbd.csi.ceph.com

Finalizers: [kubernetes.io/pv-protection]

StorageClass: rook-ceph-block

Status: Bound

Claim: default/rbd-pvc-clone

Reclaim Policy: Delete

Access Modes: RWO

VolumeMode: Filesystem

Capacity: 40Gi

Node Affinity: <none>

Message:

Source:

Type: CSI (a Container Storage Interface (CSI) volume source)

Driver: rook-ceph.rbd.csi.ceph.com

FSType: ext4

VolumeHandle: 0001-0009-rook-ceph-0000000000000002-44366fc7-7214-11ed-bb18-5a5c11760ffc

ReadOnly: false

VolumeAttributes: clusterID=rook-ceph

imageFeatures=layering

imageFormat=2

imageName=csi-vol-44366fc7-7214-11ed-bb18-5a5c11760ffc # the corresponding underlying image

journalPool=replicapool

pool=replicapool

radosNamespace=

storage.kubernetes.io/csiProvisionerIdentity=1669948426349-8081-rook-ceph.rbd.csi.ceph.com

Events: <none>

View the details of the RBD image csi-vol-44366fc7-7214-11ed-bb18-5a5c11760ffc

# It was created from a parent image (see the parent field)

[root@m1 rbd]# rbd -p replicapool info csi-vol-44366fc7-7214-11ed-bb18-5a5c11760ffc

rbd image 'csi-vol-44366fc7-7214-11ed-bb18-5a5c11760ffc':

size 40 GiB in 10240 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 23f608cc7e1c64

block_name_prefix: rbd_data.23f608cc7e1c64

format: 2

features: layering, operations

op_features: clone-child

flags:

create_timestamp: Fri Dec 2 15:38:10 2022

access_timestamp: Fri Dec 2 15:38:10 2022

modify_timestamp: Fri Dec 2 15:38:10 2022

parent: replicapool/csi-vol-44366fc7-7214-11ed-bb18-5a5c11760ffc-temp@1d348f06-51ab-4b00-aa47-49ceb4a806ac

overlap: 40 GiB

# List the images in the replicapool pool

[root@m1 rbd]# rbd -p replicapool ls

csi-snap-f551456b-71fb-11ed-bb18-5a5c11760ffc

csi-vol-197480ab-6e0f-11ed-b5c4-6efb82c232c4

csi-vol-44366fc7-7214-11ed-bb18-5a5c11760ffc

csi-vol-44366fc7-7214-11ed-bb18-5a5c11760ffc-temp # the temporary image it depends on has not been deleted yet

csi-vol-84d64d8d-6c61-11ed-b5c4-6efb82c232c4

csi-vol-9b7146ad-6c61-11ed-b5c4-6efb82c232c4

csi-vol-a3dbf5a7-6c61-11ed-b5c4-6efb82c232c4

csi-vol-e915f368-720c-11ed-bb18-5a5c11760ffc
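The listing above shows that a PVC-to-PVC clone leaves an intermediate image whose name ends in -temp. A small filter makes such images easy to spot in a long listing. This is only a sketch: the function name is hypothetical, and the sample input is copied from the output above rather than read from a cluster.

```shell
#!/bin/sh
# Print image names that end in "-temp" from an image listing on stdin.
list_temp_images() {
  grep -e '-temp$' || true   # no match is not an error here
}

list_temp_images <<'EOF'
csi-vol-44366fc7-7214-11ed-bb18-5a5c11760ffc
csi-vol-44366fc7-7214-11ed-bb18-5a5c11760ffc-temp
csi-vol-84d64d8d-6c61-11ed-b5c4-6efb82c232c4
EOF
```

On a cluster, the same filter could be fed from rbd -p replicapool ls through a pipe.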

That completes the cloning process. CephFS offers a similar capability, defined as follows:

[root@m1 rbd]# cd ../cephfs/

[root@m1 cephfs]# pwd

/root/rook/cluster/examples/kubernetes/ceph/csi/cephfs

[root@m1 cephfs]# cat pvc-clone.yaml

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  namespace: kube-system # namespace added: cephfs-pvc lives in the kube-system namespace
  name: cephfs-pvc-clone
spec:
  storageClassName: rook-cephfs
  dataSource:
    name: cephfs-pvc
    kind: PersistentVolumeClaim
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi

Check the result: cephfs-pvc-clone has been cloned and is Bound.

[root@m1 cephfs]# kubectl apply -f pvc-clone.yaml

persistentvolumeclaim/cephfs-pvc-clone created

[root@m1 rbd]# kubectl -n kube-system get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

cephfs-pvc Bound pvc-c06e2d87-d5a2-43d5-bc47-16eadd0d18bf 1Gi RWX rook-cephfs 7d4h

cephfs-pvc-clone Bound pvc-57847a7f-74ac-4f0f-8f2e-5edf2e443a40 1Gi RWX rook-cephfs 7m51s
