03 Rook Basics (reposted)

Rook Quick Start

Cluster environment

Required in advance:

A Kubernetes environment; this cluster was installed with kubeasz.

| Node | IP address | Kubernetes role | Resources (CPU / RAM) | Components | Disk (50 GB) |
|---|---|---|---|---|---|
| m1 | 192.168.100.133 | master | 2 CPU / 3 GB | ceph-mon ceph-mgr ceph-osd csi-cephfsplugin csi-rbdplugin | /dev/sdb |
| n1 | 192.168.100.134 | node | 2 CPU / 3 GB | ceph-mon ceph-mgr ceph-osd csi-cephfsplugin csi-rbdplugin | /dev/sdb |
| n2 | 192.168.100.135 | node | 2 CPU / 3 GB | ceph-mon ceph-osd csi-cephfsplugin csi-rbdplugin | /dev/sdb |
| n3 | 192.168.100.136 | node | 2 CPU / 3 GB | ceph-osd csi-cephfsplugin csi-rbdplugin | /dev/sdb |
| n4 | 192.168.100.137 | node | 2 CPU / 3 GB | ceph-osd csi-cephfsplugin csi-rbdplugin | /dev/sdb |

| Software | Version |
|---|---|
| CentOS | 7.9 |
| Docker | 20.10.5 |
| Kubernetes | 1.20.5 |
| Ansible | 2.9.27 |
| Kubeasz | 3.0.1 |

Deployment prerequisites

Prerequisites

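- A running Kubernetes cluster, version 1.11+ (see the deployment reference documentation)
- OSD nodes need a raw disk with no filesystem on it
- Basic Linux knowledge
- Basic Ceph knowledge:
  - mon
  - mds
  - rgw
  - osd
- Basic Kubernetes knowledge:
  - Node, Pod, kubectl operations
  - Deployments/Services/StatefulSets
  - Volume/PV/PVC/StorageClass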
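Rook only consumes disks that have no partitions and no filesystem signature. The snippet below is a minimal sketch for checking a candidate disk before deploying. It assumes util-linux `lsblk` is installed and that the OSD disk is /dev/sdb, as in the environment table. The helper name `looks_raw` is hypothetical.

```shell
#!/bin/bash
# looks_raw: hypothetical helper. Reads the output of
#   lsblk -l -n -o NAME,FSTYPE <disk>
# on stdin and succeeds only if there is exactly one line (the disk itself,
# i.e. no partitions) and its FSTYPE column is empty (no filesystem/LVM signature).
looks_raw() {
  awk 'NF > 1 { has_fs = 1 } END { exit !(NR == 1 && !has_fs) }'
}

# Usage on each node (assumes the OSD disk is /dev/sdb):
#   lsblk -l -n -o NAME,FSTYPE /dev/sdb | looks_raw && echo "/dev/sdb looks usable as an OSD"
```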

Get the installation source

Clone the Rook source:

[root@m1 ~]# git clone --single-branch --branch v1.5.5 https://github.com/rook/rook.git

Cloning into 'rook'...

Directory tree

The YAML manifests to apply are under /root/rook/cluster/examples/kubernetes/:

[root@m1 ~]# tree /root/rook/cluster/examples/kubernetes/

/root/rook/cluster/examples/kubernetes/

├── cassandra

│ ├── cluster.yaml

│ └── operator.yaml

├── ceph

│ ├── ceph-client.yaml

│ ├── cluster-external-management.yaml

│ ├── cluster-external.yaml

│ ├── cluster-on-pvc.yaml

│ ├── cluster-stretched.yaml

│ ├── cluster-test.yaml

│ ├── cluster-with-drive-groups.yaml

│ ├── cluster.yaml

│ ├── common-external.yaml

│ ├── common.yaml

│ ├── config-admission-controller.sh

│ ├── crds.yaml

│ ├── create-external-cluster-resources.py

│ ├── create-external-cluster-resources.sh

│ ├── csi

│ │ ├── cephfs

│ │ │ ├── kube-registry.yaml

│ │ │ ├── pod.yaml

│ │ │ ├── pvc-clone.yaml

│ │ │ ├── pvc-restore.yaml

│ │ │ ├── pvc.yaml

│ │ │ ├── snapshotclass.yaml

│ │ │ ├── snapshot.yaml

│ │ │ └── storageclass.yaml

│ │ ├── rbd

│ │ │ ├── pod.yaml

│ │ │ ├── pvc-clone.yaml

│ │ │ ├── pvc-restore.yaml

│ │ │ ├── pvc.yaml

│ │ │ ├── snapshotclass.yaml

│ │ │ ├── snapshot.yaml

│ │ │ ├── storageclass-ec.yaml

│ │ │ ├── storageclass-test.yaml

│ │ │ └── storageclass.yaml

│ │ └── template

│ │ ├── cephfs

│ │ │ ├── csi-cephfsplugin-provisioner-dep.yaml

│ │ │ ├── csi-cephfsplugin-svc.yaml

│ │ │ └── csi-cephfsplugin.yaml

│ │ └── rbd

│ │ ├── csi-rbdplugin-provisioner-dep.yaml

│ │ ├── csi-rbdplugin-svc.yaml

│ │ └── csi-rbdplugin.yaml

│ ├── dashboard-external-https.yaml

│ ├── dashboard-external-http.yaml

│ ├── dashboard-ingress-https.yaml

│ ├── dashboard-loadbalancer.yaml

│ ├── direct-mount.yaml

│ ├── filesystem-ec.yaml

│ ├── filesystem-test.yaml

│ ├── filesystem.yaml

│ ├── flex

│ │ ├── kube-registry.yaml

│ │ ├── storageclass-ec.yaml

│ │ ├── storageclass-test.yaml

│ │ └── storageclass.yaml

│ ├── import-external-cluster.sh

│ ├── monitoring

│ │ ├── csi-metrics-service-monitor.yaml

│ │ ├── prometheus-ceph-v14-rules-external.yaml

│ │ ├── prometheus-ceph-v14-rules.yaml

│ │ ├── prometheus-ceph-v15-rules-external.yaml -> prometheus-ceph-v14-rules-external.yaml

│ │ ├── prometheus-ceph-v15-rules.yaml -> prometheus-ceph-v14-rules.yaml

│ │ ├── prometheus-service.yaml

│ │ ├── prometheus.yaml

│ │ ├── rbac.yaml

│ │ └── service-monitor.yaml

│ ├── nfs-test.yaml

│ ├── nfs.yaml

│ ├── object-bucket-claim-delete.yaml

│ ├── object-bucket-claim-retain.yaml

│ ├── object-ec.yaml

│ ├── object-external.yaml

│ ├── object-multisite-pull-realm.yaml

│ ├── object-multisite.yaml

│ ├── object-openshift.yaml

│ ├── object-test.yaml

│ ├── object-user.yaml

│ ├── object.yaml

│ ├── operator-openshift.yaml

│ ├── operator.yaml

│ ├── osd-purge.yaml

│ ├── pool-ec.yaml

│ ├── pool-test.yaml

│ ├── pool.yaml

│ ├── pre-k8s-1.16

│ │ └── crds.yaml

│ ├── rbdmirror.yaml

│ ├── rgw-external.yaml

│ ├── scc.yaml

│ ├── storageclass-bucket-delete.yaml

│ ├── storageclass-bucket-retain.yaml

│ ├── toolbox-job.yaml

│ └── toolbox.yaml

├── cockroachdb

│ ├── cluster.yaml

│ ├── loadgen-kv.yaml

│ └── operator.yaml

├── edgefs

│ ├── cluster_kvssd.yaml

│ ├── cluster.yaml

│ ├── csi

│ │ ├── iscsi

│ │ │ ├── edgefs-iscsi-csi-driver-config.yaml

│ │ │ ├── edgefs-iscsi-csi-driver.yaml

│ │ │ └── examples

│ │ │ ├── dynamic-nginx.yaml

│ │ │ ├── pre-provisioned-nginx.yaml

│ │ │ └── snapshots

│ │ │ ├── create-snapshot.yaml

│ │ │ ├── nginx-snapshot-clone-volume.yaml

│ │ │ └── snapshot-class.yaml

│ │ └── nfs

│ │ ├── edgefs-nfs-csi-driver-config.yaml

│ │ ├── edgefs-nfs-csi-driver.yaml

│ │ └── examples

│ │ ├── dynamic-nginx.yaml

│ │ └── preprovisioned-edgefs-volume-nginx.yaml

│ ├── iscsi.yaml

│ ├── isgw.yaml

│ ├── monitoring

│ │ ├── prometheus-service.yaml

│ │ ├── prometheus.yaml

│ │ └── service-monitor.yaml

│ ├── nfs.yaml

│ ├── operator.yaml

│ ├── persistent-volume.yaml

│ ├── s3PayloadSecret.yaml

│ ├── s3x.yaml

│ ├── s3.yaml

│ ├── smb.yaml

│ ├── sslKeyCertificate.yaml

│ ├── storage-class.yaml

│ ├── swift.yaml

│ └── upgrade-from-v1beta1-create.yaml

├── mysql.yaml

├── nfs

│ ├── busybox-rc.yaml

│ ├── common.yaml

│ ├── nfs-ceph.yaml

│ ├── nfs-xfs.yaml

│ ├── nfs.yaml

│ ├── operator.yaml

│ ├── psp.yaml

│ ├── pvc.yaml

│ ├── rbac.yaml

│ ├── scc.yaml

│ ├── sc.yaml

│ ├── webhook.yaml

│ ├── web-rc.yaml

│ └── web-service.yaml

├── README.md

├── wordpress.yaml

└── yugabytedb

├── cluster.yaml

└── operator.yaml

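Each storage driver directory contains the manifests for installing and deploying that driver, and each one includes a core component: the operator.

Deploy the Rook custom resource definitions (CRDs)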

[root@m1 ~]# cd rook/cluster/examples/kubernetes/ceph/

[root@m1 ceph]# kubectl apply -f crds.yaml

customresourcedefinition.apiextensions.k8s.io/cephclusters.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephclients.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephrbdmirrors.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephfilesystems.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephnfses.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectstores.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectstoreusers.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectrealms.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectzonegroups.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectzones.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephblockpools.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/volumes.rook.io created

customresourcedefinition.apiextensions.k8s.io/objectbuckets.objectbucket.io created

customresourcedefinition.apiextensions.k8s.io/objectbucketclaims.objectbucket.io created

crds.yaml contains the Ceph-related custom resource definitions, such as:

- cephclusters
- cephclients
- volumes
- cephfilesystems
- cephobjectstores
- objectbuckets
- objectbucketclaims, etc.

The registered custom resource definitions can be listed with kubectl get customresourcedefinitions.apiextensions.k8s.io:

[root@m1 ceph]# kubectl get customresourcedefinitions.apiextensions.k8s.io

NAME CREATED AT

cephblockpools.ceph.rook.io 2022-11-23T01:43:32Z

cephclients.ceph.rook.io 2022-11-23T01:43:32Z

cephclusters.ceph.rook.io 2022-11-23T01:43:32Z

cephfilesystems.ceph.rook.io 2022-11-23T01:43:32Z

cephnfses.ceph.rook.io 2022-11-23T01:43:32Z

cephobjectrealms.ceph.rook.io 2022-11-23T01:43:32Z

cephobjectstores.ceph.rook.io 2022-11-23T01:43:32Z

cephobjectstoreusers.ceph.rook.io 2022-11-23T01:43:32Z

cephobjectzonegroups.ceph.rook.io 2022-11-23T01:43:32Z

cephobjectzones.ceph.rook.io 2022-11-23T01:43:32Z

cephrbdmirrors.ceph.rook.io 2022-11-23T01:43:32Z

objectbucketclaims.objectbucket.io 2022-11-23T01:43:32Z

objectbuckets.objectbucket.io 2022-11-23T01:43:32Z

volumes.rook.io 2022-11-23T01:43:32Z
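Before moving on, it can help to confirm that the CRDs you rely on are registered. The following is a minimal sketch. It assumes bash and that it is fed the output of `kubectl get crd -o name`, which prints one `customresourcedefinition.apiextensions.k8s.io/<name>` per line. The helper name `missing_crds` is hypothetical.

```shell
#!/bin/bash
# missing_crds: hypothetical helper. Reads "kubectl get crd -o name" output on
# stdin and prints every CRD name given as an argument that is not present.
missing_crds() {
  local have want
  # Strip the resource prefix so only the bare CRD names remain.
  have=$(sed 's|^customresourcedefinition.apiextensions.k8s.io/||')
  for want in "$@"; do
    printf '%s\n' "$have" | grep -qxF -- "$want" || printf '%s\n' "$want"
  done
}

# Usage: prints nothing when all listed CRDs exist.
#   kubectl get crd -o name | missing_crds cephclusters.ceph.rook.io \
#     cephblockpools.ceph.rook.io cephfilesystems.ceph.rook.io
```

For scripting, `kubectl wait --for=condition=Established crd/cephclusters.ceph.rook.io` is a standard alternative that blocks until the API server reports the CRD as ready.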

Deploy the cluster RBAC (authorization) resources

[root@m1 ceph]# kubectl apply -f common.yaml

namespace/rook-ceph created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-object-bucket created

serviceaccount/rook-ceph-admission-controller created

clusterrole.rbac.authorization.k8s.io/rook-ceph-admission-controller-role created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-admission-controller-rolebinding created

clusterrole.rbac.authorization.k8s.io/rook-ceph-cluster-mgmt created

role.rbac.authorization.k8s.io/rook-ceph-system created

clusterrole.rbac.authorization.k8s.io/rook-ceph-global created

clusterrole.rbac.authorization.k8s.io/rook-ceph-mgr-cluster created

clusterrole.rbac.authorization.k8s.io/rook-ceph-object-bucket created

serviceaccount/rook-ceph-system created

rolebinding.rbac.authorization.k8s.io/rook-ceph-system created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-global created

serviceaccount/rook-ceph-osd created

serviceaccount/rook-ceph-mgr created

serviceaccount/rook-ceph-cmd-reporter created

role.rbac.authorization.k8s.io/rook-ceph-osd created

clusterrole.rbac.authorization.k8s.io/rook-ceph-osd created

clusterrole.rbac.authorization.k8s.io/rook-ceph-mgr-system created

role.rbac.authorization.k8s.io/rook-ceph-mgr created

role.rbac.authorization.k8s.io/rook-ceph-cmd-reporter created

rolebinding.rbac.authorization.k8s.io/rook-ceph-cluster-mgmt created

rolebinding.rbac.authorization.k8s.io/rook-ceph-osd created

rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr created

rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-system created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-cluster created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-osd created

rolebinding.rbac.authorization.k8s.io/rook-ceph-cmd-reporter created

podsecuritypolicy.policy/00-rook-privileged created

clusterrole.rbac.authorization.k8s.io/psp:rook created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-system-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-default-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-osd-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-cmd-reporter-psp created

serviceaccount/rook-csi-cephfs-plugin-sa created

serviceaccount/rook-csi-cephfs-provisioner-sa created

role.rbac.authorization.k8s.io/cephfs-external-provisioner-cfg created

rolebinding.rbac.authorization.k8s.io/cephfs-csi-provisioner-role-cfg created

clusterrole.rbac.authorization.k8s.io/cephfs-csi-nodeplugin created

clusterrole.rbac.authorization.k8s.io/cephfs-external-provisioner-runner created

clusterrolebinding.rbac.authorization.k8s.io/rook-csi-cephfs-plugin-sa-psp created

clusterrolebinding.rbac.authorization.k8s.io/rook-csi-cephfs-provisioner-sa-psp created

clusterrolebinding.rbac.authorization.k8s.io/cephfs-csi-nodeplugin created

clusterrolebinding.rbac.authorization.k8s.io/cephfs-csi-provisioner-role created

serviceaccount/rook-csi-rbd-plugin-sa created

serviceaccount/rook-csi-rbd-provisioner-sa created

role.rbac.authorization.k8s.io/rbd-external-provisioner-cfg created

rolebinding.rbac.authorization.k8s.io/rbd-csi-provisioner-role-cfg created

clusterrole.rbac.authorization.k8s.io/rbd-csi-nodeplugin created

clusterrole.rbac.authorization.k8s.io/rbd-external-provisioner-runner created

clusterrolebinding.rbac.authorization.k8s.io/rook-csi-rbd-plugin-sa-psp created

clusterrolebinding.rbac.authorization.k8s.io/rook-csi-rbd-provisioner-sa-psp created

clusterrolebinding.rbac.authorization.k8s.io/rbd-csi-nodeplugin created

clusterrolebinding.rbac.authorization.k8s.io/rbd-csi-provisioner-role created

This creates the rook-ceph namespace and the RBAC-related objects, including:

- Role
- ClusterRole
- ClusterRoleBinding
- ServiceAccount, etc.

The corresponding permission objects can be inspected with:

- kubectl get clusterrole
- kubectl get clusterrolebindings.rbac.authorization.k8s.io
- kubectl get serviceaccounts -n rook-ceph

ClusterRoles and ClusterRoleBindings are cluster-scoped Kubernetes resources, whereas ServiceAccounts are namespace-scoped.
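To see what these objects actually grant, you can ask the API server on behalf of a ServiceAccount using the standard `kubectl auth can-i --as=...` impersonation flag. The following is a minimal sketch. The helper name `check_sa_perms` and the sampled verb/resource pairs are hypothetical and illustrative, not an audit of common.yaml. Nodes are cluster-scoped, so a "yes" for `list nodes` can only come from a ClusterRole bound through a ClusterRoleBinding.

```shell
#!/bin/bash
# check_sa_perms: hypothetical helper. Prints "<verb> <resource>: yes|no" for a
# few sample actions of the operator's ServiceAccount. Requires a caller that is
# allowed to impersonate (e.g. cluster-admin).
check_sa_perms() {
  local sa="system:serviceaccount:rook-ceph:rook-ceph-system"
  local pair verb res
  for pair in "get pods" "list nodes" "create configmaps"; do
    read -r verb res <<< "$pair"
    # can-i prints "yes" or "no"; a "no" also exits non-zero, ignored here.
    printf '%s %s: %s\n' "$verb" "$res" \
      "$(kubectl auth can-i "$verb" "$res" -n rook-ceph --as="$sa")"
  done
}
```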

Operator in detail

Deploy the operator

[root@m1 ceph]# kubectl apply -f operator.yaml

configmap/rook-ceph-operator-config created

deployment.apps/rook-ceph-operator created

This creates a Deployment that runs the operator Pod, which manages the Ceph cluster. Its settings are stored in a ConfigMap. Both can be checked as follows:

[root@m1 ceph]# kubectl get deploy -n rook-ceph

NAME READY UP-TO-DATE AVAILABLE AGE

rook-ceph-operator 0/1 1 0 33s

[root@m1 ceph]# kubectl get cm -n rook-ceph

NAME DATA AGE

kube-root-ca.crt 1 3m45s

rook-ceph-operator-config 6 42s
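The Deployment above still shows 0/1 READY, most likely because the large image is still being pulled. Instead of polling by hand, `kubectl rollout status` can block until the operator is up. The following is a minimal sketch. The helper name `wait_for_operator` and the default timeout are hypothetical. The label `app=rook-ceph-operator` is assumed to match the Pod template in Rook's operator.yaml.

```shell
#!/bin/bash
# wait_for_operator: hypothetical helper. Waits until the rook-ceph-operator
# Deployment has rolled out (or the timeout expires), then lists its Pod.
wait_for_operator() {
  local timeout="${1:-600s}"
  kubectl -n rook-ceph rollout status deploy/rook-ceph-operator --timeout="$timeout" || return 1
  kubectl -n rook-ceph get pods -l app=rook-ceph-operator -o wide
}

# Usage:
#   wait_for_operator 300s
```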

Pull the Ceph image

The rook/ceph image is large (about 1 GB), so creating the Pods can take a long time. You can pull the image manually instead:

[root@m1 ceph]# docker image pull rook/ceph:v1.5.5

After the download, export the image to a tar file:

[root@m1 ceph]# docker image save -o /root/rook-ceph.tar rook/ceph:v1.5.5
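Since the roughly 1 GB archive is copied to every node, compressing it first can shorten the transfer. `docker image load -i` accepts gzip-compressed archives directly, so the load step stays the same apart from the file name. The following is a minimal sketch. The helper name `save_image_gz` and the .tar.gz path are hypothetical.

```shell
#!/bin/bash
# save_image_gz: hypothetical helper. Like "docker image save -o", but pipes the
# tar stream through gzip. Fails if either docker or gzip fails.
save_image_gz() {
  local image="$1" out="$2"
  docker image save "$image" | gzip -c > "$out"
  # Copy PIPESTATUS immediately; any later command would overwrite it.
  local -a rc=("${PIPESTATUS[@]}")
  [ "${rc[0]}" -eq 0 ] && [ "${rc[1]}" -eq 0 ]
}

# Usage:
#   save_image_gz rook/ceph:v1.5.5 /root/rook-ceph.tar.gz
#   ansible all -m copy -a "src=/root/rook-ceph.tar.gz dest=/tmp/"
#   ansible all -m shell -a "docker image load -i /tmp/rook-ceph.tar.gz"
```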

Copy it to the other nodes. The Ansible hosts file is configured as: [all] m1 n1 n2 n3 n4

[root@m1 ceph]# ansible all -m copy -a "src=/root/rook-ceph.tar dest=/tmp/" -v

Using /etc/ansible/ansible.cfg as config file

n1 | CHANGED => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": true,

"checksum": "f1c89007f07ab53fb98b850cea437433958e4ebf",

"dest": "/tmp/rook-ceph.tar",

"gid": 0,

"group": "root",

"md5sum": "8516a3d210085403a3b83b4dcfb01150",

"mode": "0644",

"owner": "root",

"size": 1066678272,

"src": "/root/.ansible/tmp/ansible-tmp-1669170022.55-96522-49181090820854/source",

"state": "file",

"uid": 0

}

n4 | CHANGED => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": true,

"checksum": "f1c89007f07ab53fb98b850cea437433958e4ebf",

"dest": "/tmp/rook-ceph.tar",

"gid": 0,

"group": "root",

"md5sum": "8516a3d210085403a3b83b4dcfb01150",

"mode": "0644",

"owner": "root",

"size": 1066678272,

"src": "/root/.ansible/tmp/ansible-tmp-1669170022.58-96529-44939501615986/source",

"state": "file",

"uid": 0

}

m1 | CHANGED => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": true,

"checksum": "f1c89007f07ab53fb98b850cea437433958e4ebf",

"dest": "/tmp/rook-ceph.tar",

"gid": 0,

"group": "root",

"md5sum": "8516a3d210085403a3b83b4dcfb01150",

"mode": "0644",

"owner": "root",

"size": 1066678272,

"src": "/root/.ansible/tmp/ansible-tmp-1669170022.48-96520-229124032996439/source",

"state": "file",

"uid": 0

}

n2 | CHANGED => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": true,

"checksum": "f1c89007f07ab53fb98b850cea437433958e4ebf",

"dest": "/tmp/rook-ceph.tar",

"gid": 0,

"group": "root",

"md5sum": "8516a3d210085403a3b83b4dcfb01150",

"mode": "0644",

"owner": "root",

"size": 1066678272,

"src": "/root/.ansible/tmp/ansible-tmp-1669170022.72-96524-274832934731995/source",

"state": "file",

"uid": 0

}

n3 | CHANGED => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": true,

"checksum": "f1c89007f07ab53fb98b850cea437433958e4ebf",

"dest": "/tmp/rook-ceph.tar",

"gid": 0,

"group": "root",

"md5sum": "8516a3d210085403a3b83b4dcfb01150",

"mode": "0644",

"owner": "root",

"size": 1066678272,

"src": "/root/.ansible/tmp/ansible-tmp-1669170022.64-96527-61530936196590/source",

"state": "file",

"uid": 0

}

Then load the image on every node:

[root@m1 ceph]# ansible all -m shell -a "docker image load -i /tmp/rook-ceph.tar"

m1 | CHANGED | rc=0 >>

Loaded image: rook/ceph:v1.5.5

n1 | CHANGED | rc=0 >>

Loaded image: rook/ceph:v1.5.5

n2 | CHANGED | rc=0 >>

Loaded image: rook/ceph:v1.5.5

n4 | CHANGED | rc=0 >>

Loaded image: rook/ceph:v1.5.5

n3 | CHANGED | rc=0 >>

Loaded image: rook/ceph:v1.5.5

Cluster in detail

Create the cluster

[root@m1 ceph]# kubectl apply -f cluster.yaml

cephcluster.ceph.rook.io/rook-ceph created

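Cluster creation process

The operator logs show the Ceph cluster being initialized. Initialization is driven by the Rook operator, which automatically orchestrates the setup of the Ceph mon, mgr, osd, and other daemons: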

[root@m1 ceph]# kubectl logs rook-ceph-operator-6dd84fc776-js9bj -n rook-ceph -f

The steps include:

- Initializing the mon cluster
- Creating the keys required for CSI authentication
- Initializing the mgr
- Initializing the OSDs
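The command above needs the generated Pod name (rook-ceph-operator-6dd84fc776-js9bj), which changes on every restart. `kubectl logs` also accepts `deploy/<name>` and picks a Pod of that Deployment itself. The following is a minimal sketch. The helper names are hypothetical.

```shell
#!/bin/bash
# follow_operator_logs: hypothetical helper. Follows the operator's log without
# having to look up the current Pod name first.
follow_operator_logs() {
  kubectl -n rook-ceph logs -f deploy/rook-ceph-operator
}

# watch_ceph_pods: hypothetical helper. Run in a second terminal to watch the
# mon, mgr, and osd Pods appear as the operator creates them.
watch_ceph_pods() {
  kubectl -n rook-ceph get pods -o wide -w
}
```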

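Get the CSI images

At this point the operator automatically creates Pods to build the Ceph cluster, including the mon, mgr, and osd daemons. It also deploys CSI drivers on every node, in two types: rbd and cephfs. The sidecar images for these drivers are hosted on Google's registry (k8s.gcr.io), which may not be reachable, so they need to be pulled manually. First, get the image names: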

[root@m1 ceph]# for i in `kubectl get pods -n rook-ceph -o jsonpath='{.items[*].spec.containers[*].image}'`;do echo ${i} | grep gcr.io;done

k8s.gcr.io/sig-storage/csi-node-driver-registrar:v2.0.1

k8s.gcr.io/sig-storage/csi-node-driver-registrar:v2.0.1

k8s.gcr.io/sig-storage/csi-node-driver-registrar:v2.0.1

k8s.gcr.io/sig-storage/csi-node-driver-registrar:v2.0.1

k8s.gcr.io/sig-storage/csi-attacher:v3.0.0

k8s.gcr.io/sig-storage/csi-snapshotter:v3.0.0

k8s.gcr.io/sig-storage/csi-resizer:v1.0.0

k8s.gcr.io/sig-storage/csi-provisioner:v2.0.0

k8s.gcr.io/sig-storage/csi-attacher:v3.0.0

k8s.gcr.io/sig-storage/csi-snapshotter:v3.0.0

k8s.gcr.io/sig-storage/csi-resizer:v1.0.0

k8s.gcr.io/sig-storage/csi-provisioner:v2.0.0

k8s.gcr.io/sig-storage/csi-node-driver-registrar:v2.0.1

k8s.gcr.io/sig-storage/csi-node-driver-registrar:v2.0.1

k8s.gcr.io/sig-storage/csi-node-driver-registrar:v2.0.1

k8s.gcr.io/sig-storage/csi-node-driver-registrar:v2.0.1

k8s.gcr.io/sig-storage/csi-node-driver-registrar:v2.0.1

k8s.gcr.io/sig-storage/csi-provisioner:v2.0.0

k8s.gcr.io/sig-storage/csi-resizer:v1.0.0

k8s.gcr.io/sig-storage/csi-attacher:v3.0.0

k8s.gcr.io/sig-storage/csi-snapshotter:v3.0.0

k8s.gcr.io/sig-storage/csi-provisioner:v2.0.0

k8s.gcr.io/sig-storage/csi-resizer:v1.0.0

k8s.gcr.io/sig-storage/csi-attacher:v3.0.0

k8s.gcr.io/sig-storage/csi-snapshotter:v3.0.0

k8s.gcr.io/sig-storage/csi-node-driver-registrar:v2.0.1
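The list above repeats each image once per container. Splitting the jsonpath output one image per line and de-duplicating it leaves only the five distinct images that the script below needs. The following is a minimal sketch using the same query. The helper name `list_gcr_images` is hypothetical.

```shell
#!/bin/bash
# list_gcr_images: hypothetical helper. Same jsonpath query as above, but prints
# one image per line, keeps only gcr.io images, and removes duplicates.
list_gcr_images() {
  kubectl get pods -n rook-ceph \
    -o jsonpath='{.items[*].spec.containers[*].image}' |
    tr -s ' ' '\n' | grep 'gcr\.io' | sort -u
}
```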

Pull them manually from a mirror registry in China and retag them with the original names, using the following script:

[root@m1 ceph]# cat /tmp/1.sh

#!/bin/bash

image_list=(

csi-node-driver-registrar:v2.0.1

csi-attacher:v3.0.0

csi-snapshotter:v3.0.0

csi-resizer:v1.0.0

csi-provisioner:v2.0.0

)

aliyuncs="registry.aliyuncs.com/it00021hot"

google_gcr="k8s.gcr.io/sig-storage"

for image in ${image_list[*]}

do

docker image pull ${aliyuncs}/${image}

docker image tag ${aliyuncs}/${image} ${google_gcr}/${image}

docker image rm ${aliyuncs}/${image}

echo "${aliyuncs}/${image} ${google_gcr}/${image} downloaded."

done

Run the script on all nodes:

[root@m1 ceph]# ansible all -m copy -a "src=/tmp/1.sh dest=/tmp/2.sh"

[root@m1 ceph]# ansible all -m shell -a "bash /tmp/2.sh"
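As an alternative to the copy-then-run pair above, Ansible's `script` module transfers a local script to each host and executes it there in one ad-hoc command. The following is a minimal sketch. The helper name `run_on_all_nodes` is hypothetical, and it assumes the same `all` inventory group.

```shell
#!/bin/bash
# run_on_all_nodes: hypothetical helper. Copies the local script to every host
# in the "all" group and runs it, via Ansible's script module.
run_on_all_nodes() {
  local script="${1:-/tmp/1.sh}"
  ansible all -m script -a "$script"
}
```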

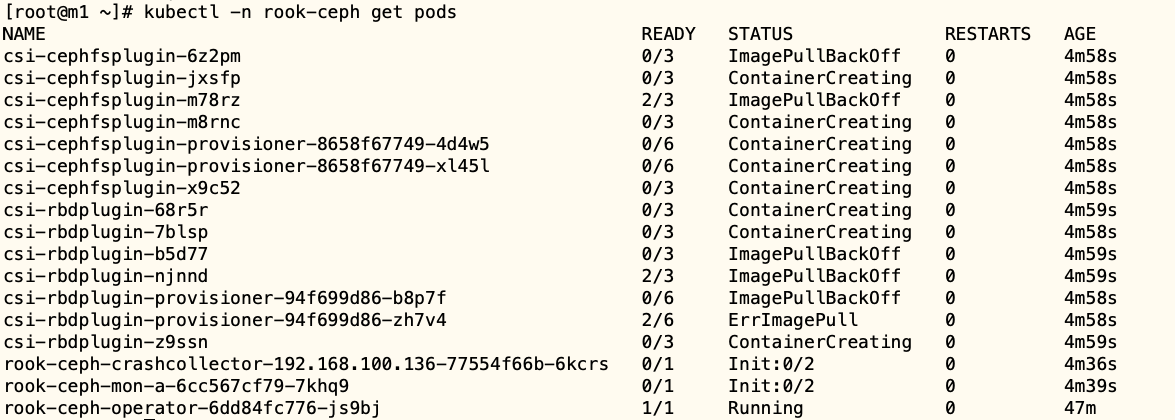

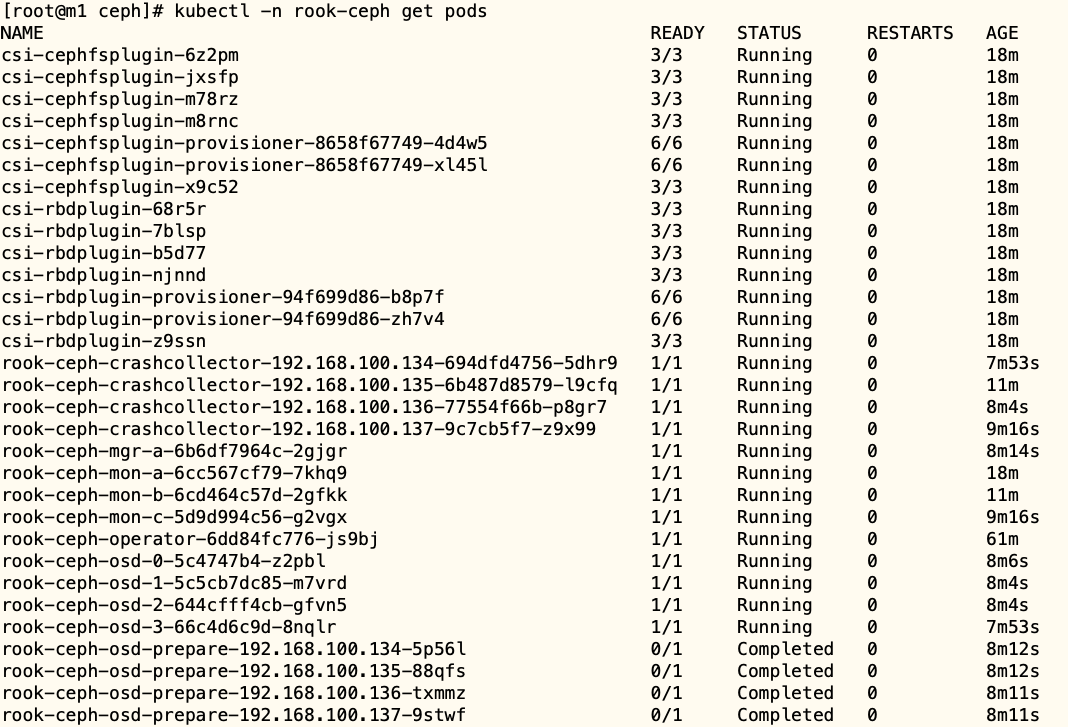

Verify that the Pods are running

Besides the method above, you can also point operator.yaml at a mirror registry, for example:

[root@m1 ceph]# cat operator.yaml | grep IMAGE

# ROOK_CSI_CEPH_IMAGE: "quay.io/cephcsi/cephcsi:v3.2.0"

# ROOK_CSI_REGISTRAR_IMAGE: "k8s.gcr.io/sig-storage/csi-node-driver-registrar:v2.0.1"

# ROOK_CSI_RESIZER_IMAGE: "k8s.gcr.io/sig-storage/csi-resizer:v1.0.0"

# ROOK_CSI_PROVISIONER_IMAGE: "k8s.gcr.io/sig-storage/csi-provisioner:v2.0.0"

# ROOK_CSI_SNAPSHOTTER_IMAGE: "k8s.gcr.io/sig-storage/csi-snapshotter:v3.0.0"

# ROOK_CSI_ATTACHER_IMAGE: "k8s.gcr.io/sig-storage/csi-attacher:v3.0.0"

ROOK_CSI_CEPH_IMAGE: "quay.io/cephcsi/cephcsi:v3.2.0"

ROOK_CSI_REGISTRAR_IMAGE: "registry.aliyuncs.com/it00021hot/csi-node-driver-registrar:v2.0.1"

ROOK_CSI_RESIZER_IMAGE: "registry.aliyuncs.com/it00021hot/csi-resizer:v1.0.0"

ROOK_CSI_PROVISIONER_IMAGE: "registry.aliyuncs.com/it00021hot/csi-provisioner:v2.0.0"

ROOK_CSI_SNAPSHOTTER_IMAGE: "registry.aliyuncs.com/it00021hot/csi-snapshotter:v3.0.0"

ROOK_CSI_ATTACHER_IMAGE: "registry.aliyuncs.com/it00021hot/csi-attacher:v3.0.0"
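Editing these lines by hand is error-prone. A `sed` substitution can uncomment the `k8s.gcr.io/sig-storage` entries and point them at the mirror in one pass, while leaving the quay.io cephcsi line untouched. The following is a minimal sketch. The helper name `use_csi_mirror` and the output file name are hypothetical. The default mirror is the one used in this article.

```shell
#!/bin/bash
# use_csi_mirror: hypothetical helper. Turns lines like
#   # ROOK_CSI_RESIZER_IMAGE: "k8s.gcr.io/sig-storage/csi-resizer:v1.0.0"
# into active settings that use the mirror, keeping the original indentation.
use_csi_mirror() {
  local mirror="${1:-registry.aliyuncs.com/it00021hot}"
  sed -E "s|^([[:space:]]*)# (ROOK_CSI_[A-Z_]+_IMAGE: \")k8s\.gcr\.io/sig-storage/|\1\2${mirror}/|"
}

# Usage (writes a new file; review it, then apply):
#   use_csi_mirror < operator.yaml > operator-mirror.yaml
#   kubectl apply -f operator-mirror.yaml
```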

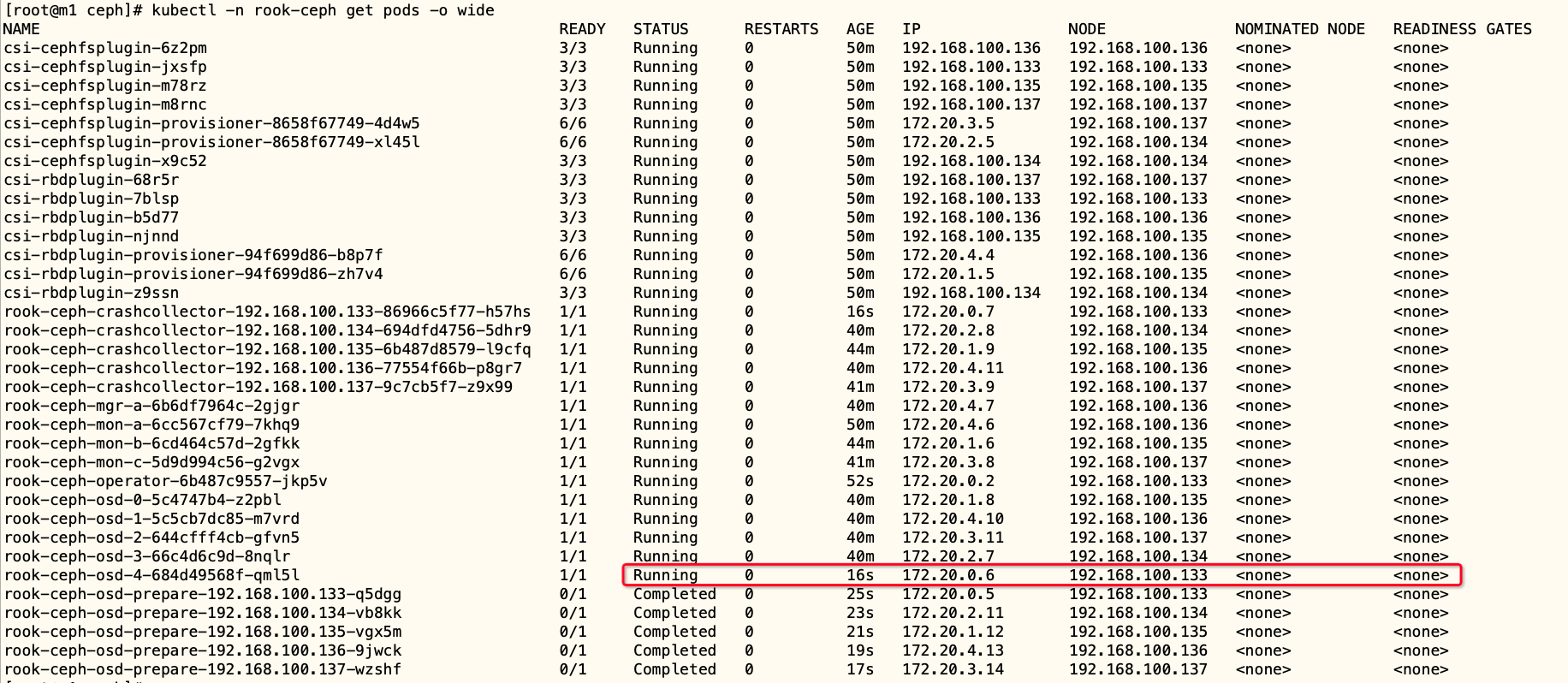

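Adding the master as an OSD node

By default, the master node does not join the Ceph cluster as an OSD. The master is tainted, so OSD Pods are not scheduled onto it. There are two ways to fix this:

- Give the OSDs a toleration by adjusting the tolerations under placement in cluster.yaml
- Remove the taint from the master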

[root@m1 ceph]# kubectl taint nodes 192.168.100.133 node.kubernetes.io/unschedulable:NoSchedule-

node/192.168.100.133 untainted
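The node.kubernetes.io/unschedulable taint is the one Kubernetes places on a cordoned node. As far as we understand, it is re-added for as long as the node stays marked unschedulable, so `kubectl uncordon 192.168.100.133` is the more durable way to clear it. The first option, tolerating the taint, can also be applied to the running CephCluster without editing cluster.yaml. The following is a minimal sketch. The helper name `tolerate_master_taint` is hypothetical. It assumes the CephCluster is named rook-ceph, as created above, and that the taint key matches the one in the command above. Because a JSON merge patch replaces arrays wholesale, any existing `placement.all.tolerations` list is overwritten.

```shell
#!/bin/bash
# tolerate_master_taint: hypothetical helper. Merge-patches
# spec.placement.all.tolerations of the CephCluster "rook-ceph" so that Rook's
# Pods tolerate the given NoSchedule taint key.
tolerate_master_taint() {
  local key="${1:-node.kubernetes.io/unschedulable}"
  kubectl -n rook-ceph patch cephcluster rook-ceph --type merge -p \
    "{\"spec\":{\"placement\":{\"all\":{\"tolerations\":[{\"key\":\"${key}\",\"operator\":\"Exists\",\"effect\":\"NoSchedule\"}]}}}}"
}

# Usage (pass another key if your master carries a different taint):
#   tolerate_master_taint node-role.kubernetes.io/master
```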

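Tune the OSD discovery interval

cluster.yaml is configured to use all nodes and all of their devices (useAllNodes and useAllDevices), so Rook scans the devices on every node automatically. Any node or disk added later is also added to the Ceph cluster. The operator handles this through rook-discover containers that rescan periodically. The interval is set by ROOK_DISCOVER_DEVICES_INTERVAL.

The default interval is 60m. To see the effect sooner, change it to 60s and re-run kubectl apply -f operator.yaml. The OSD on m1 is then added to the cluster as well: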

# The duration between discovering devices in the rook-discover daemonset.

- name: ROOK_DISCOVER_DEVICES_INTERVAL

# value: "60m"

value: "60s"

[root@m1 ceph]# kubectl apply -f operator.yaml

configmap/rook-ceph-operator-config unchanged

deployment.apps/rook-ceph-operator configured
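To try a different interval without editing the file, `kubectl set env` can change the variable on the running Deployment. A later `kubectl apply -f operator.yaml` puts the file's value back. Afterwards, listing the OSD Pods with `-o wide` shows whether m1 (192.168.100.133) now hosts one. The following is a minimal sketch. The helper names are hypothetical. The label `app=rook-ceph-osd` is assumed to be the one Rook puts on OSD Pods.

```shell
#!/bin/bash
# set_discover_interval: hypothetical helper. Updates the env var on the running
# operator Deployment, which triggers a new operator Pod.
set_discover_interval() {
  kubectl -n rook-ceph set env deploy/rook-ceph-operator \
    ROOK_DISCOVER_DEVICES_INTERVAL="${1:-60s}"
}

# list_osd_pods: hypothetical helper. Shows each OSD Pod and the node it runs on.
list_osd_pods() {
  kubectl -n rook-ceph get pods -l app=rook-ceph-osd -o wide
}
```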
